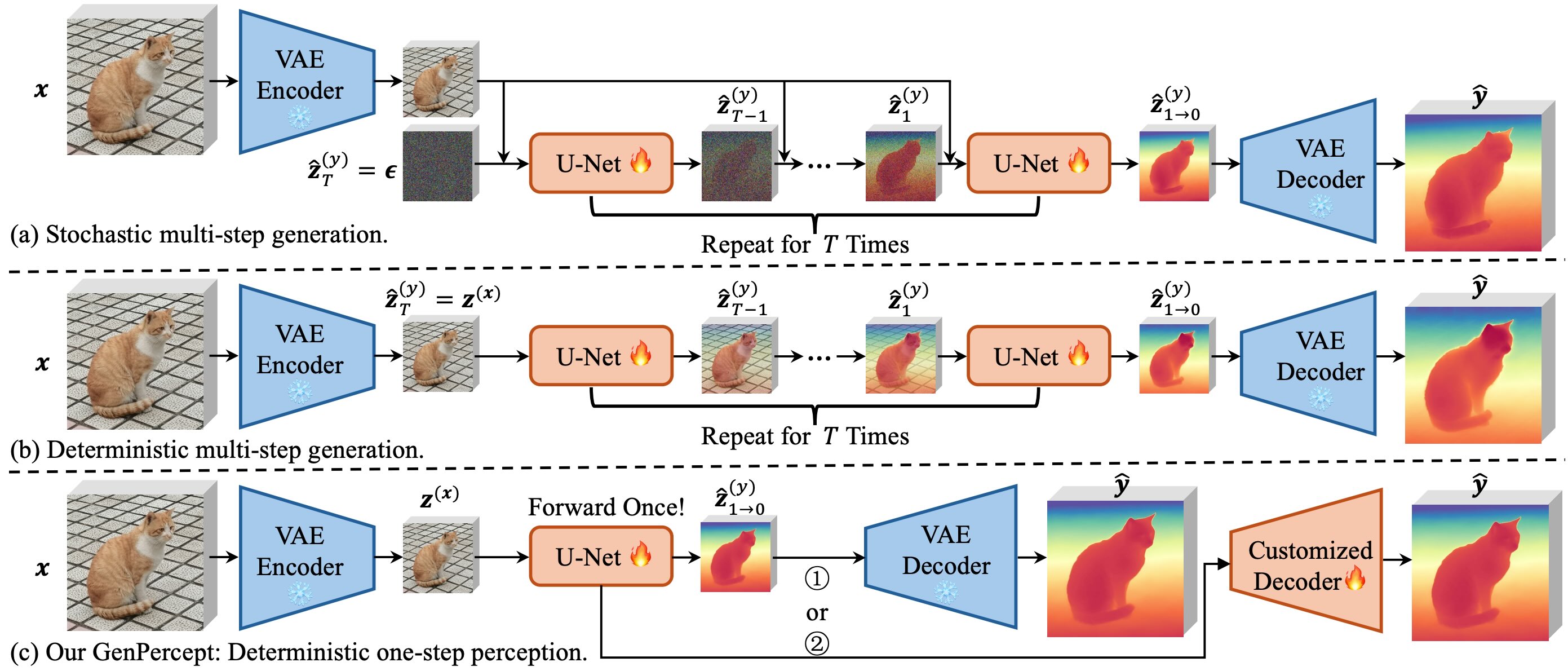

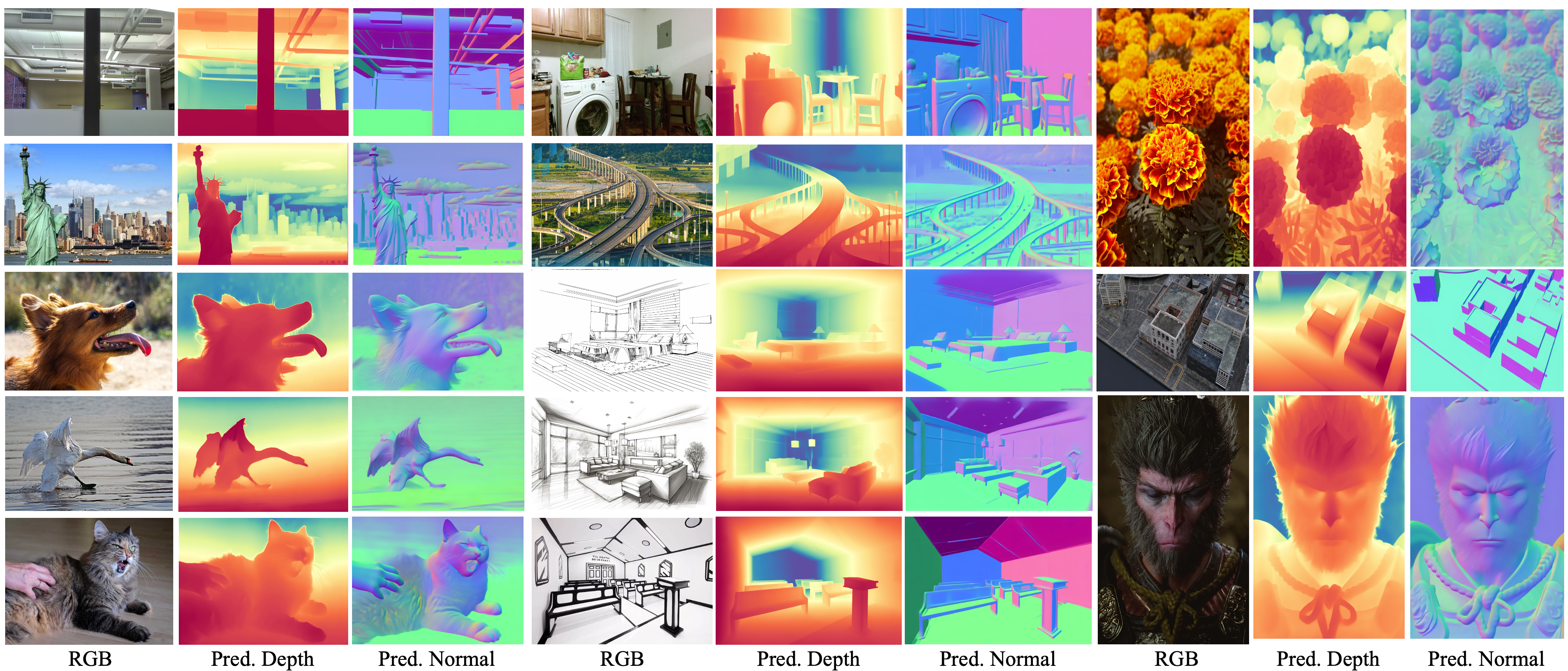

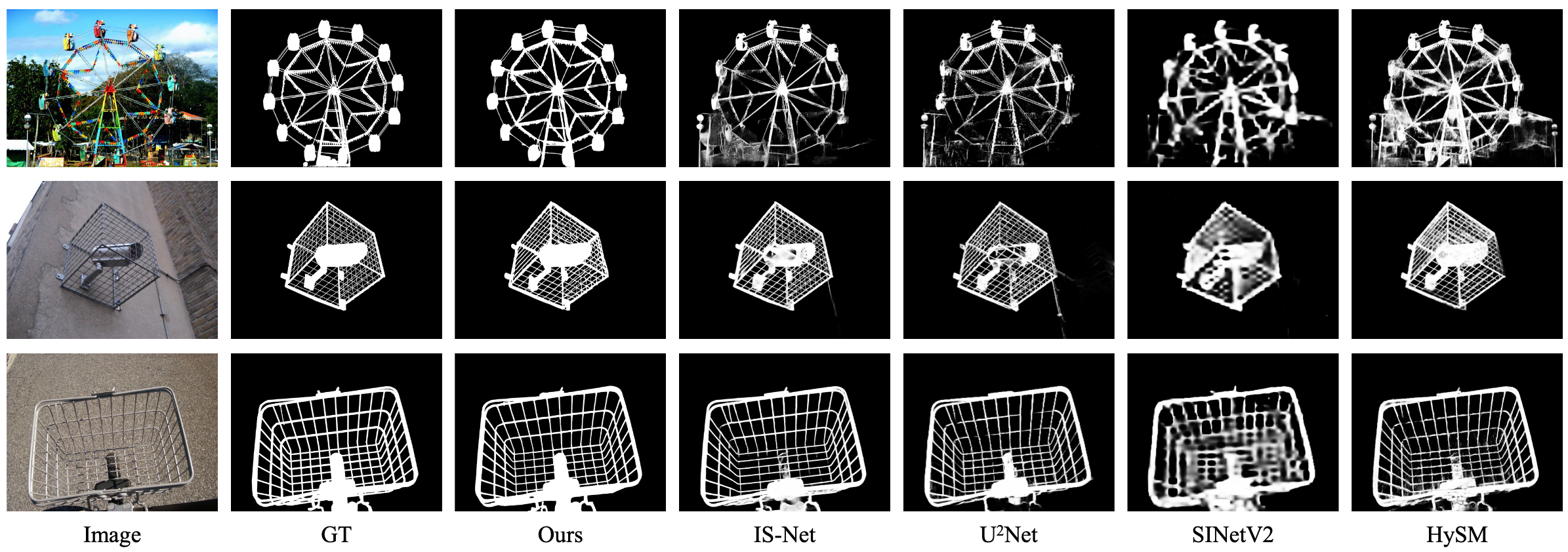

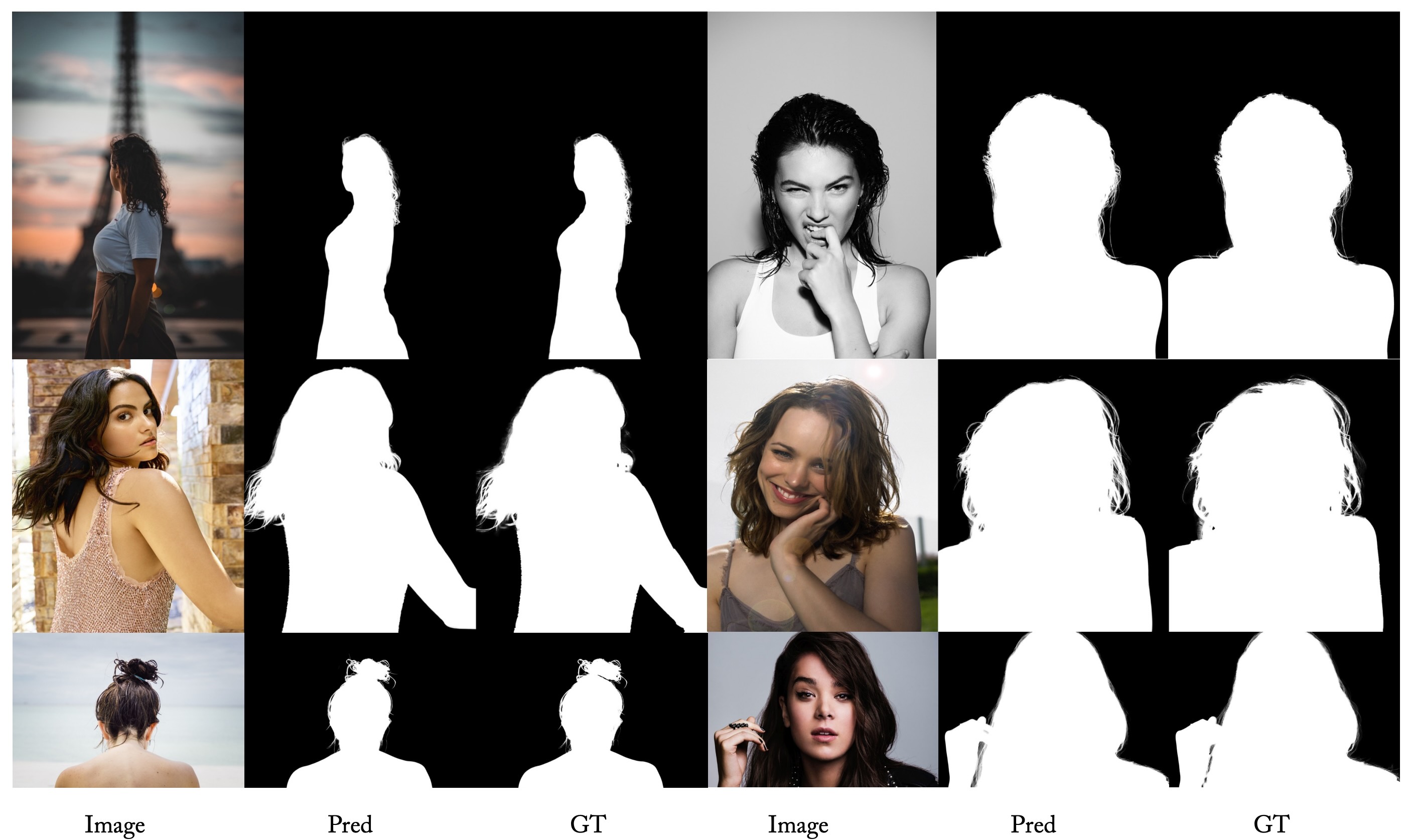

Former Title: "Diffusion Models Trained with Large Data Are Transferable Visual Models"

Guangkai Xu, Yongtao Ge, Mingyu Liu, Chengxiang Fan, Kangyang Xie, Zhiyue Zhao, Hao Chen, Chunhua Shen

Zhejiang University

- 2024.10.25: Update the GenPercept Huggingface App demo.

- 2024.10.24: Release the latest training and inference code, which uses the accelerate library and is based on Marigold.

- 2024.10.24: Release the arXiv v3 paper. We reorganized the structure of the paper and added more detailed analysis.

- 2024.4.30: Release checkpoint weights of surface normal and dichotomous image segmentation.

- 2024.4.7: Add HuggingFace App demo.

- 2024.4.6: Release inference code and depth checkpoint weight of GenPercept in the GitHub repo.

- 2024.3.15: Release arXiv v2 paper, with supplementary material.

- 2024.3.10: Release arXiv v1 paper.

- Space-Huggingface demo: https://huggingface.co/spaces/guangkaixu/GenPercept.

- Models-all (including ablation study): https://huggingface.co/guangkaixu/genpercept-exps.

- Models-main-paper: https://huggingface.co/guangkaixu/genpercept-models.

- Models-depth: https://huggingface.co/guangkaixu/genpercept-depth.

- Models-normal: https://huggingface.co/guangkaixu/genpercept-normal.

- Models-dis: https://huggingface.co/guangkaixu/genpercept-dis.

- Models-matting: https://huggingface.co/guangkaixu/genpercept-matting.

- Models-seg: https://huggingface.co/guangkaixu/genpercept-seg.

- Models-disparity: https://huggingface.co/guangkaixu/genpercept-disparity.

- Models-disparity-dpt-head: https://huggingface.co/guangkaixu/genpercept-disparity-dpt-head.

- Datasets-input demo: https://huggingface.co/datasets/guangkaixu/genpercept-input-demo.

- Datasets-evaluation data: https://huggingface.co/datasets/guangkaixu/genpercept_datasets_eval.

- Datasets-evaluation results: https://huggingface.co/datasets/guangkaixu/genpercept-exps-eval.

conda create -n genpercept python=3.10

conda activate genpercept

pip install -r requirements.txt

pip install -e .

Download stable-diffusion-2-1 and our trained models from HuggingFace, and put the checkpoints under ./pretrained_weights/ and ./weights/, respectively. You can download them with the scripts script/download_sd21.sh and script/download_weights.sh, or download the weights for depth, normal, Dichotomous Image Segmentation, matting, segmentation, disparity, and disparity_dpt_head separately.
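Before running inference, it can help to confirm that both checkpoint directories exist. The following is a minimal sketch, not part of the repository: `check_genpercept_dirs` is a hypothetical helper name, and it only checks that the two directories named above are present, not that the checkpoints inside them are complete.

```shell
# Hypothetical helper (not shipped with GenPercept): report which of the
# expected checkpoint directories are missing under a given root.
check_genpercept_dirs() {
    root="${1:-.}"
    missing=0
    for d in pretrained_weights weights; do
        if [ ! -d "$root/$d" ]; then
            echo "missing: $root/$d" >&2
            missing=1
        fi
    done
    # Return 0 when both directories exist, 1 otherwise.
    return "$missing"
}
```

From the repository root, `check_genpercept_dirs . || echo "download the checkpoints first"` prints a reminder when either directory is absent.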

Then, place images in the ./input/ directory. We offer demo images on Huggingface, and you can also download them with the script script/download_sample_data.sh. Then, run inference with the scripts below.

# Depth

source script/infer/main_paper/inference_genpercept_depth.sh

# Normal

source script/infer/main_paper/inference_genpercept_normal.sh

# Dis

source script/infer/main_paper/inference_genpercept_dis.sh

# Matting

source script/infer/main_paper/inference_genpercept_matting.sh

# Seg

source script/infer/main_paper/inference_genpercept_seg.sh

# Disparity

source script/infer/main_paper/inference_genpercept_disparity.sh

# Disparity_dpt_head

source script/infer/main_paper/inference_genpercept_disparity_dpt_head.sh

To change the input folder path, UNet path, and output path, pass them as arguments:

# Assign values

input_rgb_dir=...

unet=...

output_dir=...

# Take depth as example

source script/infer/main_paper/inference_genpercept_depth.sh $input_rgb_dir $unet $output_dir

For a general inference script, see script/infer/inference_general.sh.
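The per-task scripts listed earlier share one naming pattern, so they can be run in sequence with a short loop. This is a sketch under assumptions: `genpercept_infer_script` is a hypothetical helper, the loop runs each script with its default arguments, and each script is sourced in a subshell so that any environment changes it makes do not leak into the next task.

```shell
# Hypothetical helper (not part of the repo): map a task name to the path
# of its main-paper inference script.
genpercept_infer_script() {
    echo "script/infer/main_paper/inference_genpercept_$1.sh"
}

# Run every task in turn from the repository root, skipping missing scripts.
for task in depth normal dis matting seg disparity disparity_dpt_head; do
    script_path="$(genpercept_infer_script "$task")"
    if [ -f "$script_path" ]; then
        ( source "$script_path" )
    else
        echo "skip: $script_path not found" >&2
    fi
done
```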

Thanks to our one-step perception paradigm, the inference process runs much faster. (Around 0.4s for each image on an A800 GPU card.)

TODO

NOTE: We implement training with the accelerate library, but observe worse accuracy when training on multiple GPUs than on a single GPU, with the same effective_batch_size and max_iter. Your assistance in resolving this issue would be greatly appreciated. Thank you very much!
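When comparing single-GPU and multi-GPU runs, one thing worth checking is that the effective batch size really matches. The sketch below assumes the common convention that the effective batch size equals the per-GPU batch size times the number of GPUs times the gradient accumulation steps; whether the training code here follows that convention is not confirmed. `accum_steps` is a hypothetical helper, and the numbers are illustrative only.

```shell
# Hypothetical helper: gradient accumulation steps needed to reach a target
# effective batch size, assuming
#   effective = per_gpu_batch * num_gpus * accum_steps
accum_steps() {
    effective=$1
    per_gpu=$2
    gpus=$3
    per_step=$((per_gpu * gpus))
    if [ $((effective % per_step)) -ne 0 ]; then
        echo "effective batch $effective is not divisible by $per_step" >&2
        return 1
    fi
    echo $((effective / per_step))
}

accum_steps 32 8 1   # prints 4
accum_steps 32 8 4   # prints 1
```

If the accumulation steps are left unchanged when moving from one GPU to four, the effective batch size under this convention would be four times larger, which alone could change the results.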

Datasets: TODO

Place training datasets under datasets/

Download the stable-diffusion-2-1 from HuggingFace and put the checkpoints under ./pretrained_weights/. You can also download with the script script/download_sd21.sh.

The training scripts for reproducing the arXiv v3 paper are released in script/, and their configs are stored in config/. Models with max_train_batch_size > 2 are trained on an H100, and those with max_train_batch_size <= 2 on an RTX 4090. Run the training script:

# Take depth training of main paper as an example

source script/train_sd21_main_paper/sd21_train_accelerate_genpercept_1card_ensure_depth_bs8_per_accu_pixel_mse_ssi_grad_loss.sh

- Download evaluation datasets and place them in datasets_eval.

- Download our trained models of the main paper and the ablation study in Section 3 of the arXiv v3 paper, and place them in weights/genpercept-exps.

The evaluation scripts are stored in script/eval_sd21.

# Take "ensemble1 + step1" as an example

source script/eval_sd21/eval_ensemble1_step1/0_infer_eval_all.sh

- Marigold: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation. arXiv, GitHub.

- GeoWizard: Unleashing the Diffusion Priors for 3D Geometry Estimation from a Single Image. arXiv, GitHub.

- FrozenRecon: Pose-free 3D Scene Reconstruction with Frozen Depth Models. arXiv, GitHub.

For non-commercial academic use, this project is licensed under the 2-clause BSD License. For commercial use, please contact Chunhua Shen.

@article{xu2024diffusion,

title={What Matters When Repurposing Diffusion Models for General Dense Perception Tasks?},

author={Xu, Guangkai and Ge, Yongtao and Liu, Mingyu and Fan, Chengxiang and Xie, Kangyang and Zhao, Zhiyue and Chen, Hao and Shen, Chunhua},

journal={arXiv preprint arXiv:2403.06090},

year={2024}

}