Guangkai Xu, Yongtao Ge, Mingyu Liu, Chengxiang Fan, Kangyang Xie, Zhiyue Zhao, Hao Chen, Chunhua Shen

Zhejiang University

conda create -n genpercept python=3.10

conda activate genpercept

pip install -r requirements.txt

pip install -e .

Download the pre-trained depth model depth_v1.zip from BaiduNetDisk (Extract code: z938) or Rec Cloud Disk. Put the archive under ./weights/ and unzip it; the checkpoint will be stored under ./weights/depth_v1/.
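If you prefer to unpack the archive from Python rather than by hand, the step above can be sketched as follows. This is a minimal sketch, not part of the repository: the helper name `unpack_weights` is hypothetical, and it assumes the archive was saved as ./weights/depth_v1.zip and contains a top-level depth_v1/ folder, as the path above suggests.

```python
import zipfile
from pathlib import Path


def unpack_weights(zip_path="weights/depth_v1.zip", weights_dir="weights"):
    """Extract the archive into weights_dir and return the checkpoint folder.

    Hypothetical helper; assumes the archive holds a top-level folder
    named after the zip file (depth_v1.zip -> depth_v1/).
    """
    zip_path = Path(zip_path)
    weights_dir = Path(weights_dir)
    if not zip_path.is_file():
        raise FileNotFoundError(f"archive not found: {zip_path}")

    weights_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(weights_dir)

    # The inference step expects the checkpoint under ./weights/depth_v1/.
    checkpoint_dir = weights_dir / zip_path.stem
    if not checkpoint_dir.is_dir():
        raise RuntimeError(f"expected {checkpoint_dir} after extraction")
    return checkpoint_dir
```

Calling `unpack_weights()` from the repository root should leave the checkpoint in ./weights/depth_v1/. If the archive layout differs from the assumption, the function raises instead of failing silently.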

Then, place images in the ./input/ directory, and run the following script. The output depth will be saved in ./output/.

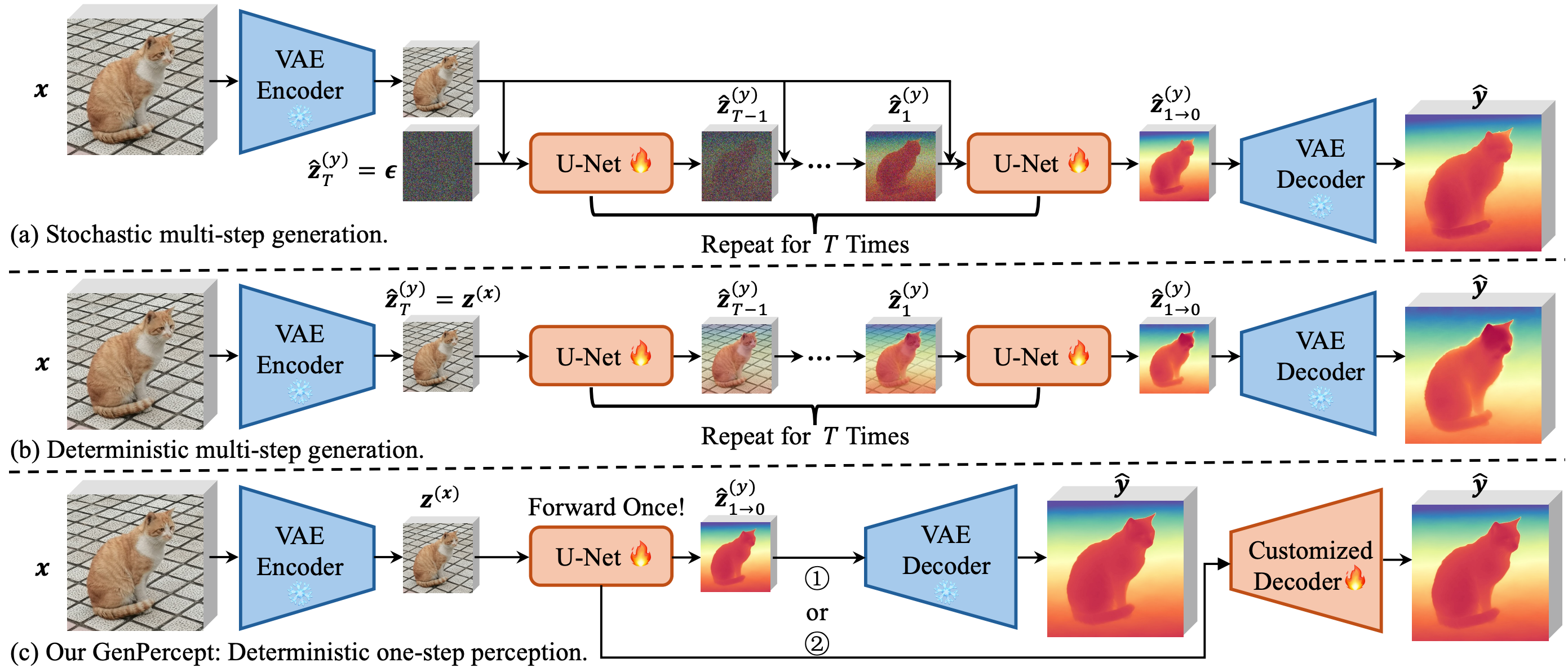

source scripts/inference_depth.sh

Thanks to our one-step perception paradigm, inference is fast: around 0.4 s per image on an A800 GPU.
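Before launching a large batch, it can help to confirm that ./input/ actually contains images and to get a rough idea of the run time. The sketch below is illustrative only and not part of the repository: the helper names and the set of accepted file extensions are assumptions, and the 0.4 s figure is the A800 number quoted above, so other GPUs will differ.

```python
from pathlib import Path

# Assumption: the inference script accepts these common image formats.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_input_images(input_dir="input"):
    """Return the image files in input_dir, sorted by path (hypothetical helper)."""
    root = Path(input_dir)
    if not root.is_dir():
        return []
    return sorted(
        p for p in root.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def estimate_seconds(num_images, seconds_per_image=0.4):
    """Rough run-time estimate using the per-image figure quoted for an A800."""
    return num_images * seconds_per_image


if __name__ == "__main__":
    images = list_input_images("input")
    print(f"{len(images)} image(s) found, roughly {estimate_seconds(len(images)):.1f} s on an A800")
```

The estimate ignores model loading time, so treat it as a lower bound.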

- Marigold: Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation. arXiv, GitHub.

- GeoWizard: Unleashing the Diffusion Priors for 3D Geometry Estimation from a Single Image. arXiv, GitHub.

- FrozenRecon: Pose-free 3D Scene Reconstruction with Frozen Depth Models. arXiv, GitHub.

For non-commercial use, this code is released under the LICENSE. For commercial use, please contact Chunhua Shen.

@article{xu2024diffusion,
  title={Diffusion Models Trained with Large Data Are Transferable Visual Models},
  author={Xu, Guangkai and Ge, Yongtao and Liu, Mingyu and Fan, Chengxiang and Xie, Kangyang and Zhao, Zhiyue and Chen, Hao and Shen, Chunhua},
  journal={arXiv preprint arXiv:2403.06090},
  year={2024}
}