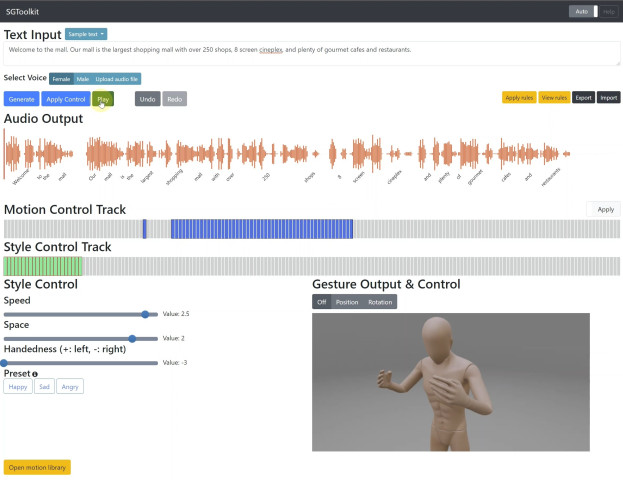

This is the code for SGToolkit: An Interactive Gesture Authoring Toolkit for Embodied Conversational Agents (UIST'21). We introduce a new gesture generation toolkit, named SGToolkit, which gives higher-quality output than automatic methods and is more efficient than manual authoring. For the toolkit, we propose a neural generative model that synthesizes gestures from speech and accommodates fine-level pose controls and coarse-level style controls from users.

(Please visit the ACM DL page for the supplementary video.)

(This code was tested on Ubuntu 18.04 with Python 3.6.)

- Install gentle and add its path to `PYTHONPATH`:

      sudo apt install gfortran
      git clone https://github.com/lowerquality/gentle.git
      cd gentle
      ./install.sh  # try 'sudo ./install.sh' if you encounter permission errors

- Set up Google Cloud TTS. Please follow the manual and put your key file (`google-key.json`) into the `sg_core` folder.

- Install the Python packages:

      pip install -r requirements.txt

- Download the model file (dropbox) and put it into the `sg_core/output/sgtoolkit` folder.
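The installation steps above leave a few files in fixed places, so a quick check before the first run can save some debugging. The following is a minimal sketch, not part of the repository: the function name `check_setup` is hypothetical, it assumes it is run from the repository root, and it assumes gentle is importable as a Python package named `gentle` once its path is on `PYTHONPATH`.

```python
import importlib.util
from pathlib import Path


def check_setup(repo_root="."):
    """Return a list of problems found; an empty list means the layout looks fine.

    Hypothetical helper: only the paths named in the install steps are checked.
    """
    root = Path(repo_root)
    problems = []

    # Google Cloud TTS key file is expected in the sg_core folder.
    key_file = root / "sg_core" / "google-key.json"
    if not key_file.is_file():
        problems.append("key file not found: {}".format(key_file))

    # Pretrained model is expected in sg_core/output/sgtoolkit.
    model_dir = root / "sg_core" / "output" / "sgtoolkit"
    if not model_dir.is_dir() or not any(model_dir.iterdir()):
        problems.append("model folder missing or empty: {}".format(model_dir))

    # Assumption: gentle exposes a top-level package called 'gentle'.
    if importlib.util.find_spec("gentle") is None:
        problems.append("Python package 'gentle' is not importable (check PYTHONPATH)")

    return problems


if __name__ == "__main__":
    for problem in check_setup():
        print("WARNING:", problem)
```

The check only confirms that files exist; it does not validate the key file contents or the model weights.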

You can run SGToolkit on your PC with the pretrained model. Start the Flask server with `python waitress_server.py` and open `localhost:8080` in a web browser that supports HTML5, such as Chrome or Edge.
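If the page does not load, it can help to confirm that the server is actually listening before looking at the browser. The sketch below uses only the Python standard library; the function name `server_is_up` is hypothetical, and the only thing taken from this README is the address `localhost:8080`.

```python
import http.client
import urllib.error
import urllib.request


def server_is_up(url="http://localhost:8080", timeout=2.0):
    """Return True if an HTTP server answers at `url` without a 5xx error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status < 500
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (e.g. 404 or 500).
        return err.code < 500
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a malformed response.
        return False
```

Calling `server_is_up()` after starting `waitress_server.py` should return `True` once the server has finished loading; `False` usually means the server is still starting, has crashed, or is bound to a different port.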

Enter speech text in the edit box or select an example speech text, then click the generate button to synthesize initial gestures and the play button to review them.

You can now add pose and style controls. Select a desired frame in the pose or style track, then add pose controls by editing the mannequin or style controls by adjusting the style values.

Press apply controls to get the updated results.

Note that the motion library and rule functions are not available by default. If you want to use them, please set up MongoDB and put the DB address at line 18 of `app.py`.

- Download the preprocessed TED dataset (OneDrive link) and extract it to `sg_core/data/ted_dataset_2020.07`.

- Run the training script:

      cd sg_core/scripts
      python train.py --config=../config/multimodal_context_toolkit.yml

- Export current animation to json and audio files by clicking the export button in the upper right corner of the SGToolkit, and put the exported files into a temporary folder.

- Open the `blender/animation_uist2021.blend` file with Blender 2.8+.

- Modify `data_path` at line 35 of the `render` script to be the temporary path containing the exported files, and run the `render` script.
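Before pointing `data_path` at the temporary folder, it may be worth checking that the export actually produced readable files. This is a minimal sketch under stated assumptions: the function name `summarize_export` is hypothetical, the README does not describe the exported file names or the JSON layout, so the code only confirms that each `.json` file parses and lists everything else (presumably the audio) separately.

```python
import json
from pathlib import Path


def summarize_export(folder):
    """List the exported files in `folder`, grouped into JSON and other files.

    Raises ValueError (json.JSONDecodeError) if a .json file is malformed.
    """
    json_files, other_files = [], []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue
        if path.suffix.lower() == ".json":
            with path.open(encoding="utf-8") as f:
                json.load(f)  # parse only to confirm the file is valid JSON
            json_files.append(path.name)
        else:
            # Assumption: non-JSON files here are the exported audio.
            other_files.append(path.name)
    return {"json": json_files, "other": other_files}
```

An empty `json` or `other` list suggests the export step was incomplete or the files were placed in a different folder.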

- TED DB: https://github.com/youngwoo-yoon/youtube-gesture-dataset

- Base model: https://github.com/ai4r/Gesture-Generation-from-Trimodal-Context

- HEMVIP, the web-based video evaluation tool: https://github.com/jonepatr/genea_webmushra

If this work is helpful in your research, please cite:

    @inproceedings{yoon2021sgtoolkit,
      author = {Yoon, Youngwoo and Park, Keunwoo and Jang, Minsu and Kim, Jaehong and Lee, Geehyuk},
      title = {SGToolkit: An Interactive Gesture Authoring Toolkit for Embodied Conversational Agents},
      year = {2021},
      publisher = {Association for Computing Machinery},
      url = {https://doi.org/10.1145/3472749.3474789},
      booktitle = {The 34th Annual ACM Symposium on User Interface Software and Technology},
      series = {UIST '21}
    }

- Character asset: Mixamo

- This work was supported by the ICT R&D program of MSIP/IITP. [2017-0-00162, Development of Human-care Robot Technology for Aging Society]