Official Implementation: TFG "Diseño e implementación de la reconstrucción de series temporales a partir de imágenes" (Design and implementation of time-series reconstruction from images)

[Paper (coming soon)] [Dataset] [Citation]

Welcome to the official implementation repository of the TFG (bachelor's thesis) "Diseño e implementación de la reconstrucción de series temporales a partir de imágenes", which is based on the paper "Diff-TSD: Modelling Time-series Data Generation with Diffusion Models". This repository provides the code, datasets, and other resources related to this work.

In this TFG, we explore how well time series can be reconstructed from different image encoding techniques, as well as the intricacies of generating time-series data with diffusion models. As an integral part of this work, this repository provides access to the datasets, recurrence plots, Gramian angular fields, Markov transition fields, and other resources that were instrumental in our research.

We used the WISDM dataset, which focuses on data from smartwatch wearables. It records 51 subjects performing 18 daily activities, such as "walking" and "jogging". We focus on five non-hand-oriented activities: "walking", "jogging", "stairs" (both ascending and descending), "sitting", and "standing", amounting to a total of 1,053,141 instances. Data were collected with the smartwatch's accelerometer at a sampling frequency of 20 Hz. A visual representation of the acceleration waveforms for each activity is also available.

| Activity | Instances | Percentage |
|---|---|---|
| Standing | 216,529 | 20.6% |
| Sitting | 213,018 | 20.2% |
| Walking | 210,495 | 20.0% |
| Stairs | 207,312 | 19.7% |
| Jogging | 205,787 | 19.5% |
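As a quick consistency check, the total and the percentages in the table can be recomputed from the per-activity counts. This small Python snippet only reproduces that arithmetic; it is not part of the repository's code.

```python
# Instance counts per activity, taken from the table above.
counts = {
    "Standing": 216_529,
    "Sitting": 213_018,
    "Walking": 210_495,
    "Stairs": 207_312,
    "Jogging": 205_787,
}

# Total number of instances across the five selected activities.
total = sum(counts.values())

# Share of each activity in percent, rounded to one decimal place.
percentages = {activity: round(100 * n / total, 1) for activity, n in counts.items()}
```

All five shares are close to 20%, so the selected subset is nearly class-balanced.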

As is common in studies [1] that use this dataset for classification tasks, we segmented the data into full non-overlapping windowed segments (FNOW). Each segment contains 129 data points. The choice of 129, while seemingly unusual, is intentional: one data point beyond the typical 128 [1] allows us to create recurrence plots of 128x128 pixels.
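FNOW segmentation can be sketched in a few lines of NumPy. This is a minimal sketch, not the repository's implementation: fnow_segments is a hypothetical helper, each recording is assumed to be an array of shape (T,) or (T, 3) for the three accelerometer axes, and trailing samples that do not fill a whole window are dropped. Applying it separately to each trial (one subject performing one activity) is also an assumption, made so that no window mixes two recordings.

```python
import numpy as np

WINDOW = 129  # 128 + 1 points per segment, as described above


def fnow_segments(signal, window=WINDOW):
    """Split a (T,) or (T, C) array into full non-overlapping windows.

    Hypothetical helper: returns an array of shape (n_windows, window, ...)
    and discards the trailing samples that do not fill a complete window.
    """
    signal = np.asarray(signal)
    n_windows = len(signal) // window
    # Keep only the samples that belong to complete windows, then reshape.
    return signal[: n_windows * window].reshape(n_windows, window, *signal.shape[1:])
```

For example, a 3-axis trial with 300 samples yields two windows of shape (129, 3), and the remaining 42 samples are discarded.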

You can run the scripts with "nohup", which ignores the hangup signal (SIGHUP). This means that you can close the terminal without stopping the execution. Also, don’t forget to add "&" so that the script runs in the background:

$ nohup accelerate launch train.py --config CONFIG_FILE > your.log &

In addition, to close a remote terminal safely, run the "exit" command instead of closing the terminal manually:

$ exit

Finally, you can identify the running processes of the training script with:

$ ps ax | grep train.py

or list all running Python processes:

$ ps -fA | grep python

Then, kill the desired one:

$ kill PID

In all bash commands, you can combine "nohup", to run a script without interruptions (e.g. terminal disconnections), with the "&" symbol at the end of the command, for background execution. You can also add "> filename.log" to redirect the output to a log file.
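If you prefer to launch runs from Python, the "nohup ... > your.log &" pattern can be approximated with the standard subprocess module. This is a hedged alternative, not part of the repository: launch_detached is a hypothetical helper, and start_new_session only takes effect on POSIX systems, where it calls setsid() in the child so that the process is detached from the terminal and keeps running after the terminal is closed.

```python
import subprocess


def launch_detached(cmd, log_path):
    """Start cmd in the background with stdout and stderr written to log_path.

    Hypothetical helper. Returns the Popen object; its .pid is the PID you
    would later pass to "kill".
    """
    with open(log_path, "w") as log:
        proc = subprocess.Popen(
            cmd,
            stdout=log,                 # like "> your.log"
            stderr=subprocess.STDOUT,   # like nohup, stderr goes to the same log
            stdin=subprocess.DEVNULL,   # never wait for terminal input
            start_new_session=True,     # setsid() in the child (POSIX only)
        )
    # The parent's file handle is closed here; the child keeps its own copy.
    return proc


# Hypothetical usage:
# proc = launch_detached(["accelerate", "launch", "train.py", "--config", "CONFIG_FILE"], "your.log")
# print(proc.pid)
```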

Sampling techniques

- The Leave-One-Trial-Out (LOTO) approach is a sampling method for generating the training and test samples. Each trial contains a unique raw activity signal of a single subject, which ensures an impartial evaluation while still producing a sufficient number of samples. Additionally, this technique prevents trials with identical raw signals (trials of the same label) from appearing in both the training and test datasets.

- The Leave-One-Subject-Out (LOSO) approach is a sampling technique inspired by the Leave-One-Trial-Out method. In this approach, all trials belonging to a single subject are treated as an indivisible unit, so no subject has trials in both the training and test datasets. This maintains data integrity and prevents potential biases caused by the same subject appearing in both datasets, allowing for a more robust and reliable evaluation of the model's performance. It is the stricter of the two techniques, since it follows a subject-wise rather than a record-wise approach, and it is not commonly assessed in the literature, possibly because it yields lower accuracy.
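The difference between the two schemes comes down to which key defines an indivisible group when splitting into folds. A minimal sketch, assuming NumPy and one subject ID and one activity label per window: grouping by subject gives LOSO, and grouping by the (subject, activity) pair approximates LOTO, under the assumption that one trial corresponds to one subject performing one activity. grouped_kfold is a hypothetical helper; it balances folds by number of groups rather than by number of samples, so its fold sizes will not necessarily match the ones reported in this README.

```python
import numpy as np


def grouped_kfold(groups, n_folds=3, seed=0):
    """Yield (train_idx, test_idx) pairs in which no group is split across train and test."""
    groups = np.asarray(groups)
    unique, inverse = np.unique(groups, return_inverse=True)
    rng = np.random.default_rng(seed)
    # Randomly assign each distinct group (subject or trial) to one fold.
    fold_of_group = np.empty(len(unique), dtype=int)
    fold_of_group[rng.permutation(len(unique))] = np.arange(len(unique)) % n_folds
    fold_ids = fold_of_group[inverse]
    for k in range(n_folds):
        yield np.flatnonzero(fold_ids != k), np.flatnonzero(fold_ids == k)


# LOSO: groups = subject IDs.
# LOTO (assumed trial key): groups = [f"{s}-{a}" for s, a in zip(subjects, activities)]
```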

We performed two experiments: one using LOTO, to compare our results with previous work, and the other using LOSO.

The table below presents the 3-fold data distribution for each sampling approach:


| 3-Folds | FNOW + LOTO train samples | FNOW + LOTO test samples | FNOW + LOTO total | FNOW + LOSO train samples | FNOW + LOSO test samples | FNOW + LOSO total |
|---|---|---|---|---|---|---|
| Fold-1 | 5392 | 2672 | 8064 | 5408 | 2688 | 8096 |
| Fold-2 | 5392 | 2688 | 8080 | 5344 | 2768 | 8112 |
| Fold-3 | 5392 | 2672 | 8064 | 5456 | 2640 | 8096 |

From the WISDM dataset, we generated recurrence plots from segments of 129 points (128x128 pixels) for each of the five selected classes across every fold. These plots, inspired by the work of Lu and Tong in "Robust Single Accelerometer-Based Activity Recognition Using Modified Recurrence Plot", are available for download on the Hugging Face platform.
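To illustrate how a 129-point segment can yield a 128x128 image, here is a sketch of a plain, unthresholded recurrence plot. It is not the modified recurrence plot of Lu and Tong, and it is not taken from this repository. The time-delay embedding with dimension 2 and delay 1 is an assumption chosen to show one way the arithmetic works out: N points give N - 1 two-dimensional state vectors, so 129 points give 128 states and a 128x128 distance matrix. recurrence_plot is a hypothetical helper name.

```python
import numpy as np


def recurrence_plot(x, dim=2, delay=1):
    """Unthresholded recurrence plot of a 1-D series.

    Entry (i, j) is the Euclidean distance between the time-delay embedded
    state vectors i and j. With len(x) == 129, dim=2 and delay=1 the result
    is 128 x 128.
    """
    x = np.asarray(x, dtype=float)
    n_states = len(x) - (dim - 1) * delay
    # Row i of `states` is (x[i], x[i + delay], ..., x[i + (dim - 1) * delay]).
    states = np.stack([x[k * delay : k * delay + n_states] for k in range(dim)], axis=1)
    # Pairwise differences via broadcasting: shape (n_states, n_states, dim).
    diff = states[:, None, :] - states[None, :, :]
    return np.linalg.norm(diff, axis=-1)
```

The result is symmetric with a zero diagonal; a binary recurrence plot would additionally threshold these distances.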

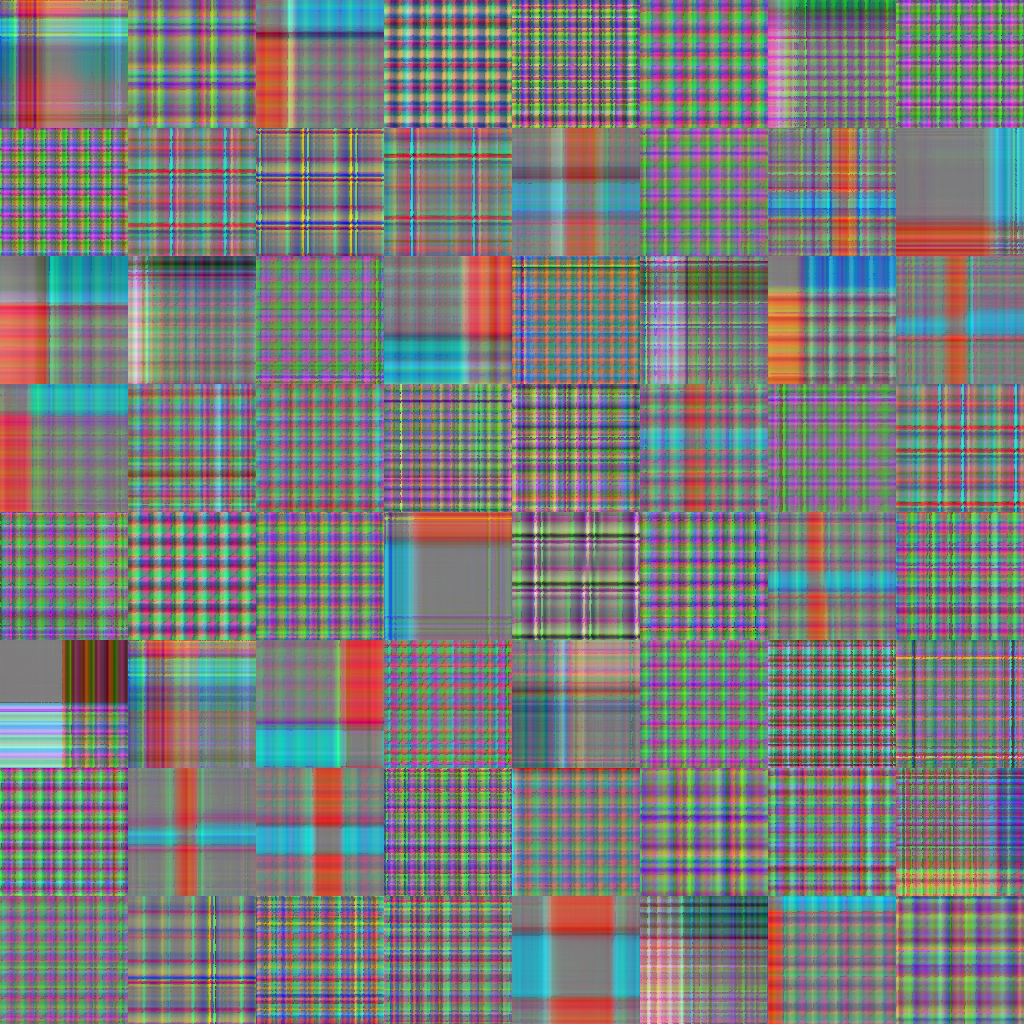

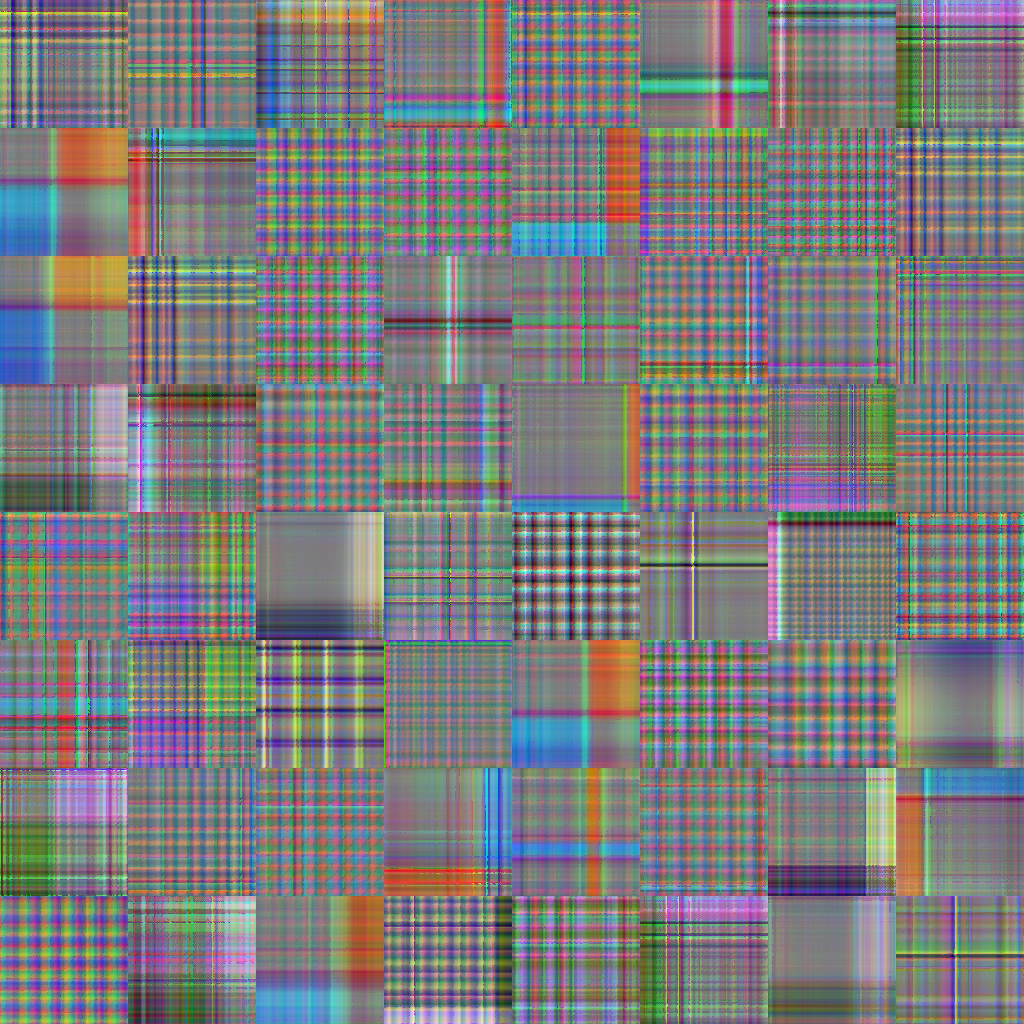

Here is an example from the recurrence plots dataset:

Example panels, one per class: Class 0 (Walking), Class 1 (Jogging), Class 2 (Stairs), Class 3 (Sitting), Class 4 (Standing).

If you want to create recurrence plots:

- "--create-numpies" creates the NumPy arrays; use it the first time you run the script.

- "--sampling" selects the sampling method: "--sampling loto" or "--sampling loso".

- "--image-type" selects the image construction or reconstruction method (for example, "--image-type GAF").

For the LOTO approach:

$ nohup ./generate_images.py --data-name WISDM --n-folds 3 --image-type GAF --data-folder /home/adriano/Escritorio/TFG/data/WISDM/ --sampling loto > generate_images.log &

Then, once the images have been generated, run the reconstruction of the time series:

$ nohup ./generate_time_series.py --data-name WISDM --n-folds 3 --image-type GAF --data-folder /home/adriano/Escritorio/TFG/data/WISDM/ --sampling loto > ts_plots_loto.log &
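The reconstruction step depends on the image encoding, and generate_time_series.py is not reproduced here. As a hedged illustration of why some encodings can be inverted at all, the following NumPy sketch builds a Gramian Angular Summation Field (GASF) and recovers the series from its main diagonal. Assumptions: the series is min-max scaled to [0, 1] (many GAF formulations use [-1, 1], where the sign of each value cannot be recovered from the diagonal), and the original minimum and maximum are stored alongside the image, because the image alone does not retain the scale. gasf and inverse_gasf are hypothetical names.

```python
import numpy as np


def gasf(x):
    """GASF image of a 1-D series, plus the min/max needed to undo the scaling."""
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    span = (hi - lo) or 1.0  # avoid division by zero for a constant series
    # Scale to [0, 1] so that phi = arccos(x) lies in [0, pi/2] and is invertible.
    phi = np.arccos(np.clip((x - lo) / span, 0.0, 1.0))
    # G[i, j] = cos(phi_i + phi_j)
    return np.cos(phi[:, None] + phi[None, :]), lo, hi


def inverse_gasf(g, lo, hi):
    """Recover the series from the GASF diagonal and the stored min/max."""
    # G[i, i] = cos(2 * phi_i) = 2 * x_i**2 - 1, so x_i = sqrt((G[i, i] + 1) / 2) for x_i >= 0.
    scaled = np.sqrt(np.clip((np.diag(g) + 1.0) / 2.0, 0.0, 1.0))
    span = (hi - lo) or 1.0
    return scaled * span + lo
```

With a 128-point series, gasf returns a 128x128 image, and inverse_gasf recovers the original values up to floating-point error.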