Code for our paper GOAT-Bench: A Benchmark for Multi-Modal Lifelong Navigation.

Mukul Khanna*, Ram Ramrakhya*, Gunjan Chhablani, Sriram Yenamandra, Theophile Gervet, Matthew Chang, Zsolt Kira, Devendra Singh Chaplot, Dhruv Batra, Roozbeh Mottaghi

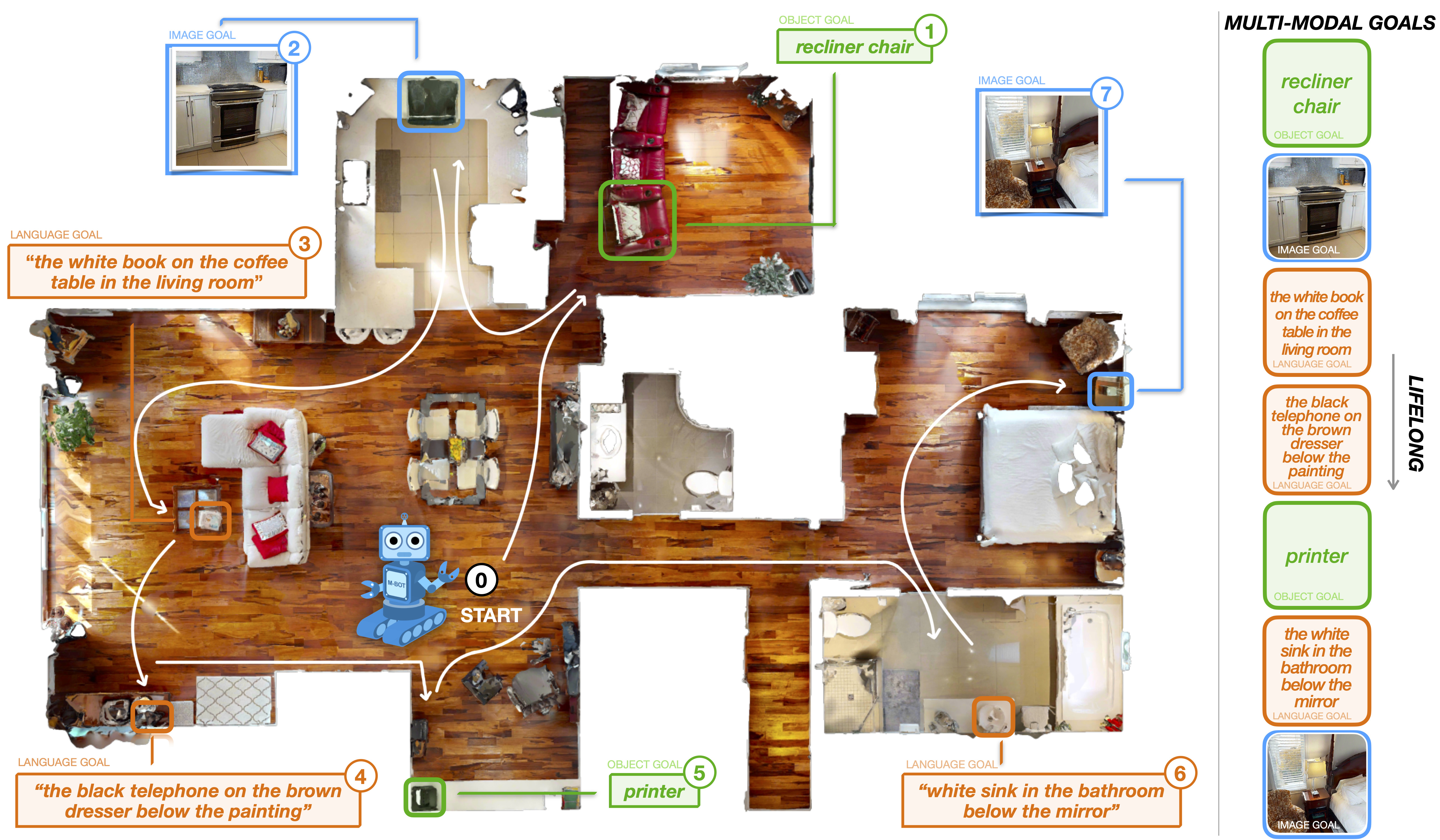

Sample episode from GOAT-Bench

GOAT-Bench is a benchmark for the Go to Any Thing (GOAT) task, in which an agent is spawned at a random location in an unseen indoor environment and must navigate sequentially to a variable number (5 to 10) of goal objects. Each goal is described by the object's category name (e.g. couch), a language description (e.g. a black leather couch next to coffee table), or an image of the object that uniquely identifies the goal instance in the environment. We refer to finding each goal in a GOAT episode as a subtask, so each GOAT episode comprises 5 to 10 subtasks. We set up the GOAT task in an open-vocabulary setting: unlike many prior works, the agent is not restricted to navigating to a predetermined, closed set of object categories. The agent is expected to reach the goal object.

Create the conda environment and install all of the dependencies. Mamba is recommended for faster installation:

```bash
# Create conda environment
conda_env_name=goat
mamba create -n "$conda_env_name" python=3.7 cmake=3.14.0 -y
mamba install -n "$conda_env_name" \
  habitat-sim=0.2.3 headless pytorch cudatoolkit=11.3 \
  -c pytorch -c nvidia -c conda-forge -c aihabitat -y

# Install this repo as a package
mamba activate "$conda_env_name"
pip install -e .

# Install habitat-lab
git clone --branch v0.2.3 git@github.com:facebookresearch/habitat-lab.git
cd habitat-lab
pip install -e habitat-lab
pip install -e habitat-baselines
pip install -r requirements.txt
pip install git+https://github.com/openai/CLIP.git
pip install ftfy regex tqdm GPUtil trimesh seaborn timm scikit-learn einops transformers
```
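The installation commands assume `mamba`, `git` and `pip` are available on your `PATH`; checking this first can save a partially completed install. The helper below is a minimal sketch and is not part of this repository; the function name `require_cmds` is a hypothetical choice.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the GOAT-Bench repo): print every command
# from the argument list that is not found on PATH, and return non-zero if
# any are missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Tools used by the installation commands:
# require_cmds mamba git pip
```

For example, `require_cmds mamba git pip && echo ok` prints `ok` only when all three tools are found; otherwise each missing tool is reported on stderr.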

- Download the HM3D dataset using the instructions here (download the full HM3D dataset for use with Habitat).
- Move the HM3D scene dataset to, or create a symlink at, `data/scene_datasets/hm3d`.
- Download the GOAT-Bench episode dataset from here.
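If HM3D is already downloaded elsewhere, a symlink avoids copying the scenes. The function below is a minimal sketch of that step, not part of the repository; `link_hm3d` and its arguments (the HM3D directory and an optional repo root) are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: link an existing HM3D download to
# <repo_root>/data/scene_datasets/hm3d, the location the code expects.
# Usage: link_hm3d /path/to/your/hm3d [repo_root]
link_hm3d() {
  local src="$1" root="${2:-.}"
  if [ ! -d "$src" ]; then
    echo "HM3D directory not found: $src" >&2
    return 1
  fi
  mkdir -p "$root/data/scene_datasets"
  # -s: create a symbolic link (to the absolute path of $src).
  # -f -n: if hm3d is already a symlink, replace it instead of creating
  #        a nested link inside the directory it points to.
  ln -sfn "$(cd "$src" && pwd)" "$root/data/scene_datasets/hm3d"
}
```

Run from the repository root, `link_hm3d /path/to/your/hm3d` creates the link; rerunning it with a different path simply repoints the link.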

The code expects the datasets to be placed in the `data` folder with the following layout:

```
├── goat-bench/
│   ├── data
│   │   ├── scene_datasets/
│   │   │   ├── hm3d/
│   │   │   │   ├── JeFG25nYj2p.glb
│   │   │   │   └── JeFG25nYj2p.navmesh
│   │   ├── datasets
│   │   │   ├── goat_bench/
│   │   │   │   ├── hm3d/
│   │   │   │   │   ├── v1/
│   │   │   │   │   │   ├── train/
│   │   │   │   │   │   ├── val_seen/
│   │   │   │   │   │   ├── val_seen_synonyms/
│   │   │   │   │   │   ├── val_unseen/
```

Run the following to train:

```bash
sbatch scripts/train/2-goat-ver.sh
```

Run the following to evaluate:

```bash
sbatch scripts/eval/2-goat-eval.sh
```
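Before submitting a training or evaluation job, it may help to confirm that the data directories from the layout above exist, so a job does not fail after waiting in the queue. This is a minimal sketch, not part of the repository; `check_goat_data` is a hypothetical name, and it only checks that the directories are present, not their contents.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of the GOAT-Bench repo): verify that
# the data directories from the documented layout exist under the repo root.
# Usage: check_goat_data [repo_root]
check_goat_data() {
  local root="${1:-.}" status=0 d
  for d in \
    data/scene_datasets/hm3d \
    data/datasets/goat_bench/hm3d/v1/train \
    data/datasets/goat_bench/hm3d/v1/val_seen \
    data/datasets/goat_bench/hm3d/v1/val_seen_synonyms \
    data/datasets/goat_bench/hm3d/v1/val_unseen; do
    # -d follows symlinks, so a symlinked hm3d directory also passes.
    if [ ! -d "$root/$d" ]; then
      echo "missing directory: $d" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_goat_data && sbatch scripts/train/2-goat-ver.sh` submits the training job only if every directory is found.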