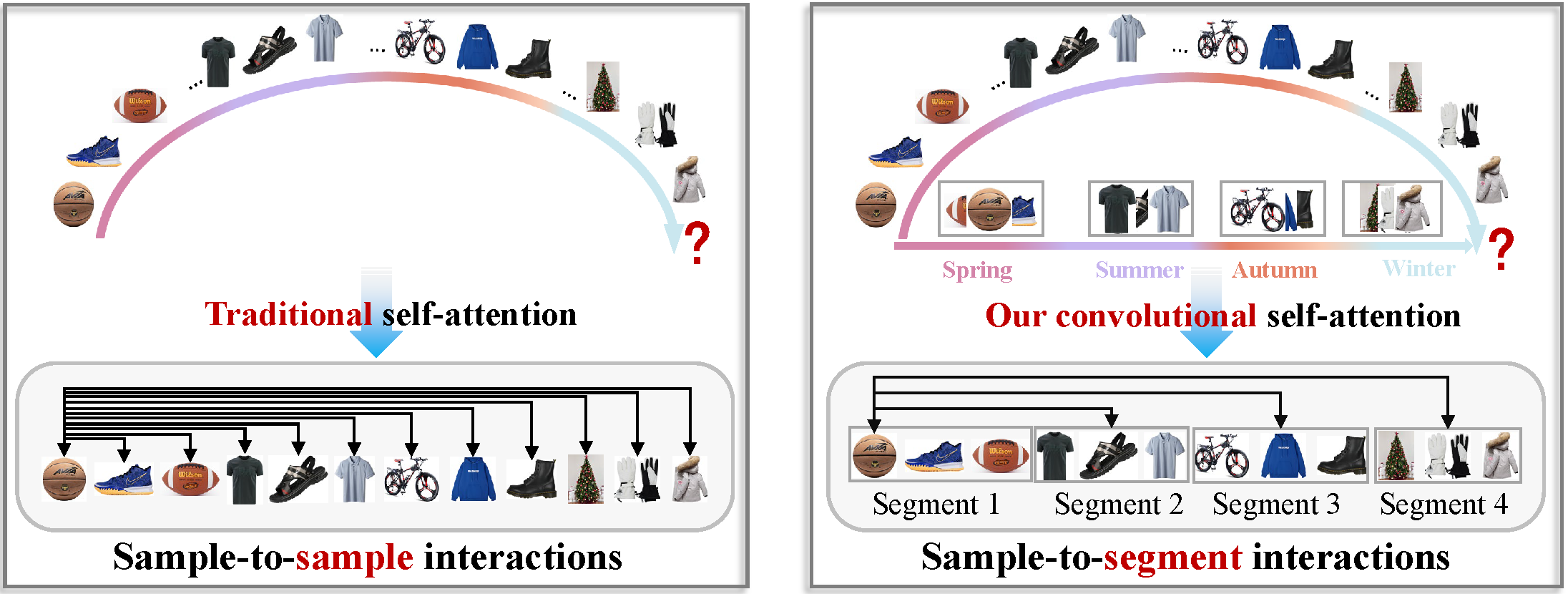

This is the official PyTorch code for the SIGIR 2022 paper "Progressive Self-Attention Network with Unsymmetrical Positional Encoding for Sequential Recommendation". In this paper, to simultaneously capture the long-term and short-term dependencies among items (as shown in the figure above), we propose a novel convolutional self-attention network (see Figure 1 in our paper), namely PAUP, which progressively extracts sequential patterns. Our 5-minute video talk about this work is available in the Supplemental Material.

We recommend the following versions of the main dependencies:

- python==3.7.7

- pytorch==1.3.1
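As a quick sanity check before training, the recommended versions above can be compared against the current environment. The following is only a minimal sketch: the helper names (`version_tuple`, `matches_recommended`, `installed_versions`) are hypothetical and not part of this repository, and a mismatch is reported rather than treated as an error, since other versions may also work.

```python
import re
import sys

# Versions recommended in this README.
RECOMMENDED = {"python": "3.7.7", "pytorch": "1.3.1"}


def version_tuple(version):
    """Parse the leading 'major.minor.patch' of a version string into ints."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError("unrecognised version string: %r" % version)
    return tuple(int(part) for part in match.groups())


def matches_recommended(installed, package):
    """True if `installed` has the same major.minor.patch as the recommendation."""
    return version_tuple(installed) == version_tuple(RECOMMENDED[package])


def installed_versions():
    """Collect the running Python version and, if importable, the torch version."""
    found = {"python": "%d.%d.%d" % sys.version_info[:3]}
    try:
        import torch
        found["pytorch"] = torch.__version__
    except ImportError:
        found["pytorch"] = None  # PyTorch is not installed in this environment
    return found


if __name__ == "__main__":
    for package, version in installed_versions().items():
        if version is None:
            print("%s: not installed (recommended %s)" % (package, RECOMMENDED[package]))
        elif matches_recommended(version, package):
            print("%s: %s (matches)" % (package, version))
        else:
            print("%s: %s (recommended %s)" % (package, version, RECOMMENDED[package]))
```

Build suffixes such as `+cu100` are ignored by the comparison, so a CUDA build of the recommended release still counts as a match.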

We use three real-world benchmark datasets: Yelp, Amazon Books, and ML-1M. Details about the full versions of these datasets are available on RecSysDatasets. Download the three processed benchmarks from RecSysDatasets and put them in .\dataset.
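To confirm the datasets ended up in the right place, a small check like the one below may help. It is a sketch under assumptions: it presumes the RecBole atomic-file layout in which each dataset lives in `dataset/<name>/<name>.inter`, and the folder names `yelp` and `Amazon_Books` are guesses that should be adjusted to whatever the downloaded folders are actually called. The function `missing_datasets` is hypothetical and not part of this repository.

```python
from pathlib import Path

# Folder names are assumptions, apart from ml-1m, which this README mentions.
ASSUMED_NAMES = ["ml-1m", "yelp", "Amazon_Books"]


def missing_datasets(root, names):
    """Return the names whose <root>/<name>/<name>.inter file does not exist."""
    root = Path(root)
    return [
        name for name in names
        if not (root / name / (name + ".inter")).is_file()
    ]


if __name__ == "__main__":
    missing = missing_datasets("dataset", ASSUMED_NAMES)
    if missing:
        print("Missing interaction files for: " + ", ".join(missing))
    else:
        print("All expected interaction files were found.")
```

Only the interaction file is checked here; any additional item or user files a dataset provides are not verified.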

Here, we have already downloaded the ml-1m dataset, so the PAUP model can be trained with the script run_recbole.py using a simple call:

    python run_recbole.py

Our work is inspired by many recent Transformer-based methods, including LightSANs, Synthesizer, LinTrans, Performer, Linformer, BERT4Rec, and SASRec. Thanks for their great work!

We adopt LightSANs as our baseline model and keep most parameter settings unchanged to ensure fair comparisons. We mainly tune the parameters "m" and "k1" in "PAUP.yaml" to achieve better performance. We encourage further experimentation with m and k1, which may result in better overall performance than that reported in the paper.
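One way to organise experiments over "m" and "k1" is to enumerate the combinations up front and then copy each one into "PAUP.yaml" before a run. The sketch below only illustrates that bookkeeping: the candidate values are placeholders chosen for illustration, not settings from the paper, and the helpers `build_grid` and `as_yaml_lines` are hypothetical.

```python
from itertools import product

# Placeholder candidates for illustration only; they are NOT values from the paper.
M_CANDIDATES = [2, 4, 8]
K1_CANDIDATES = [3, 5]


def build_grid(m_values, k1_values):
    """Return one {'m': ..., 'k1': ...} dict per combination of the inputs."""
    return [{"m": m, "k1": k1} for m, k1 in product(m_values, k1_values)]


def as_yaml_lines(setting):
    """Format a setting as 'key: value' lines that could be pasted into PAUP.yaml."""
    return ["%s: %s" % (key, value) for key, value in setting.items()]


if __name__ == "__main__":
    for index, setting in enumerate(build_grid(M_CANDIDATES, K1_CANDIDATES), start=1):
        print("# run %d" % index)
        print("\n".join(as_yaml_lines(setting)))
```

Each printed pair can replace the corresponding entries in "PAUP.yaml" before calling run_recbole.py again; all other settings stay as they are.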

If you find our paper or this project helpful for your research, please consider citing our work:

@inproceedings{Zhu2022PAUP,

title={Progressive Self-Attention Network with Unsymmetrical Positional Encoding for Sequential Recommendation},

author={Yuehua Zhu and Bo Huang and Shaohua Jiang and Muli Yang and Yanhua Yang and Wenliang Zhong},

booktitle={SIGIR},

year={2022}

}