In recent years, various LLMs, embedding models, and LLM flows utilizing them have been proposed, making it difficult to manually verify which flow or component is optimal.

This repository aims to treat LLMs and embeddings as hyperparameters, with the goal of automatically searching for the optimal hyperparameters of an LLM flow.

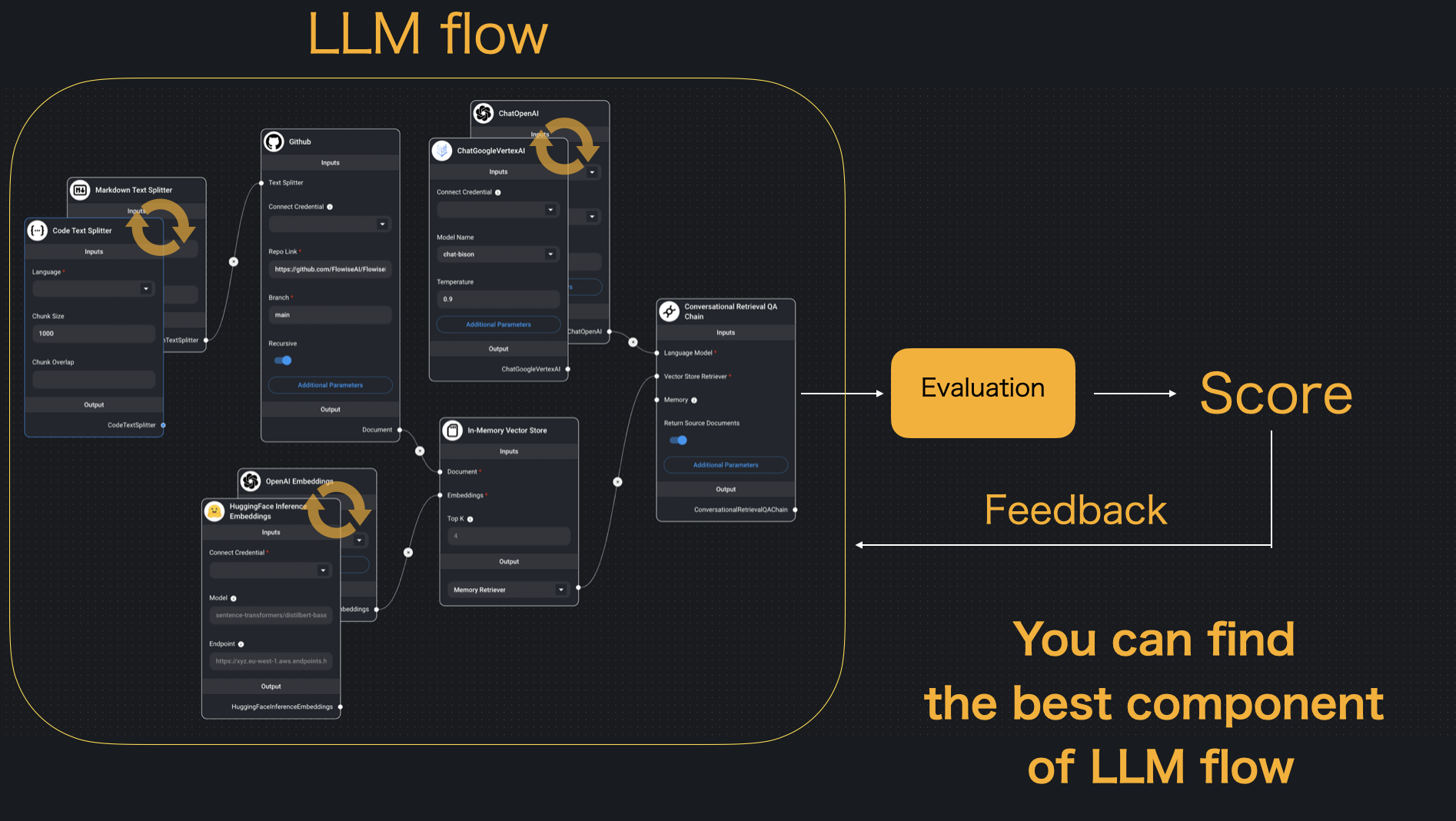

This image illustrates the concept of this repository (it is taken from Flowise and slightly modified). LangChain components such as an LLM or an embedding model can be treated as hyperparameters. From the various candidates, you can find the components that optimize the score.

This repository is strongly inspired by lightning-hydra-template🎉

Any feedback, bug reports, and suggestions are appreciated!

- Hydra: Hydra is an open-source Python framework that simplifies the development of research and other complex applications. Its key feature is the ability to dynamically create a hierarchical configuration system.

- Optuna: Optuna is an open-source Python library for hyperparameter optimization. It offers a define-by-run API that allows users to construct search spaces, a mixture of efficient search and pruning algorithms to improve the cost-effectiveness of optimization, and a web dashboard for real-time visualization and evaluation of studies.

I will explain how to use this repository, using question answering as an example. In this example, we will use the following technologies.

- LangChain for the model: LangChain is a framework for developing applications powered by large language models. It is divided conceptually into components (such as the LLM component and the embedding component), which are well abstracted and easy to switch. This is why we can treat each component as a hyperparameter.

- ragas for scoring: Ragas is an evaluation framework for Retrieval Augmented Generation (RAG) pipelines. It provides tools based on the latest research for evaluating LLM-generated text, which give insights into the RAG pipeline.
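To make the idea concrete, here is a minimal plain-Python sketch of why swappable components behave like hyperparameters. It does not use LangChain: the two splitter functions, the `SPLITTERS` table, and `build_chunks` are hypothetical stand-ins that share one call signature, so choosing between them is just a parameter value.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for interchangeable components (not LangChain classes).
Splitter = Callable[[str, int], List[str]]


def split_by_characters(text: str, chunk_size: int) -> List[str]:
    """Cut the text into fixed-width character windows."""
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def split_by_words(text: str, chunk_size: int) -> List[str]:
    """Group whole words into chunks of roughly chunk_size characters.

    A single word longer than chunk_size is kept whole.
    """
    chunks: List[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if current and len(candidate) > chunk_size:
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


# The component name becomes a categorical hyperparameter.
SPLITTERS: Dict[str, Splitter] = {
    "characters": split_by_characters,
    "words": split_by_words,
}


def build_chunks(text: str, splitter: str, chunk_size: int) -> List[str]:
    """Run the flow with the component selected by name."""
    return SPLITTERS[splitter](text, chunk_size)
```

Because both splitters accept the same arguments, the rest of the flow does not change when `splitter` changes, which is the property that lets a search tool vary it like any other parameter.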

You can try the quick start in the Colab notebook above.

Click "Use this template" to use this repository as a template.

- Clone your repository and move into it with the following commands.

      git clone git@github.com:<YOUR_GITHUB_ID>/<YOUR_REPOSITORY_NAME>.git
      cd <YOUR_REPOSITORY_NAME>

- We use Poetry for package management. You can install Poetry and the Python packages with the following commands.

      pip install poetry
      poetry install

- Rename `.env.example` to `.env` and set your environment variables to use external APIs such as OpenAI ChatGPT.

- Define the model architecture, as in `llmflowoptimizer/component/model/sample_qa.py`.

  The arguments of `__init__()` can be used as hyperparameters and can be optimized.

- Define the model config under `configs/model`, as in the example.

  Example model config:

      defaults:
        - _self_
        - embedding: OpenAI
        - text_splitter: RecursiveCharacter
        - llm: OpenAI

      _target_: llmflowoptimizer.component.model.sample_qa.SampleQA # what we defined on llmflowoptimizer/component/model/sample_qa.py

      data_path: ${paths.reference_data_dir}/nyc_wikipedia.txt

- Then you can check your model and config with the following command.

      poetry run python llmflowoptimizer/run.py extras.evaluation=false

- Define the evaluation system, as in `llmflowoptimizer/model/evaluation.py`, and set its arguments under `configs/evaluation`.

  Optuna will optimize the components based on the return value of this evaluation system.

- You can check your evaluation system with the following command.

      poetry run python llmflowoptimizer/run.py

- Since we use Hydra, you can change each LLM flow component with a command-line argument. For more details, see the Override section.

- Define the search requirements under `configs/hparams_search`, as in the example.

      model/text_splitter: choice(RecursiveCharacter, CharacterTextSplitter)
      model.text_splitter.chunk_size: range(500, 1500, 100)
      model/llm: choice(OpenAI, GPTTurbo, GPT4)

  This example is a part of `configs/hparams_search/optuna.yaml`. It means that the system will search for the best hyperparameters among `RecursiveCharacter` or `CharacterTextSplitter` for the `model.text_splitter` component, a `chunk_size` between 500 and 1500, and `OpenAI`, `GPTTurbo`, or `GPT4` for the `model.llm` component.

  More complicated search ranges can also be defined in Python, as in `configs/hparams_search/custom-search-space-objective.py`.

- You can start the hyperparameter search with the following command.

      poetry run python llmflowoptimizer/run.py hparams_search=optuna

  Then you can see the best parameters in `logs/{task_name}/multiruns/{timestamp}/optimization_results.yaml`.
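The steps above can be sketched in plain Python as follows. This is only an illustration under stated assumptions: `ToyQA`, `evaluate`, and `grid_search` are hypothetical stand-ins, the score is a made-up formula, and the loop is an exhaustive grid search rather than Optuna's samplers. The candidate values mirror the names in the example search config, but the endpoint handling of the real sweeper may differ from Python's `range`. The sketch shows the shape of the workflow: the `__init__` arguments are the hyperparameters, the evaluation returns one number, and the search keeps the configuration with the best number.

```python
from itertools import product
from typing import Dict, Tuple


class ToyQA:
    """Hypothetical model: every __init__ argument is a tunable hyperparameter."""

    def __init__(self, text_splitter: str, chunk_size: int, llm: str) -> None:
        self.text_splitter = text_splitter
        self.chunk_size = chunk_size
        self.llm = llm


def evaluate(model: ToyQA) -> float:
    """Made-up score standing in for a real evaluation; higher is better."""
    llm_bonus = {"OpenAI": 0.0, "GPTTurbo": 0.1, "GPT4": 0.2}[model.llm]
    splitter_bonus = 0.05 if model.text_splitter == "RecursiveCharacter" else 0.0
    # Arbitrary assumption for this toy: chunk sizes near 1000 score best.
    size_penalty = abs(model.chunk_size - 1000) / 10000
    return llm_bonus + splitter_bonus - size_penalty


# Candidate values mirroring the names in the example search config.
SEARCH_SPACE = {
    "text_splitter": ["RecursiveCharacter", "CharacterTextSplitter"],
    "chunk_size": list(range(500, 1500, 100)),  # Python range: 1500 itself is excluded
    "llm": ["OpenAI", "GPTTurbo", "GPT4"],
}


def grid_search() -> Tuple[Dict[str, object], float]:
    """Try every combination and return the best parameters and score."""
    best_params: Dict[str, object] = {}
    best_score = float("-inf")
    names = list(SEARCH_SPACE)
    for values in product(*(SEARCH_SPACE[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(ToyQA(**params))
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

Calling `grid_search()` returns the best parameter dictionary together with its score. In the real workflow the evaluation calls the LLM flow, so each trial costs time and API usage, which is why a sampler that avoids trying every combination is useful.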

You can modify components simply by adding command-line arguments, without altering the code.

When you want to change a single parameter, use `.` between the parameter names.

Example:

    poetry run python llmflowoptimizer/run.py model.text_splitter.chunk_size=1000

By doing this, you change the `chunk_size` parameter of `text_splitter` to 1000.
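As a rough mental model of what a dotted override does, the sketch below applies a `key.path=value` string to a nested dictionary. `apply_override` is a hypothetical helper written for illustration, not Hydra's implementation; it only distinguishes integers from strings, and the default value in the config tree is a placeholder.

```python
from typing import Any, Dict


def apply_override(config: Dict[str, Any], override: str) -> None:
    """Set one leaf of a nested dict from an 'a.b.c=value' string (in place)."""
    path, raw_value = override.split("=", 1)
    *parents, leaf = path.split(".")
    node = config
    for key in parents:
        node = node[key]  # raises KeyError if the path does not exist
    # Keep integers as integers; everything else stays a string.
    try:
        node[leaf] = int(raw_value)
    except ValueError:
        node[leaf] = raw_value


# Hypothetical config tree; the default value is a placeholder.
config = {"model": {"text_splitter": {"chunk_size": 500}}}
apply_override(config, "model.text_splitter.chunk_size=1000")
```

Each `.` in the override walks one level down the config hierarchy, and only the final key is replaced.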

When you want to swap a whole component, define the component config in the same folder and use `/` instead of `.` in the parameter name.

    poetry run python llmflowoptimizer/run.py model/llm_for_answer=OpenAI

By doing this, the LLM flow uses the `OpenAI.yaml` model instead of the `ChatOpenAI.yaml` model.
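A component-level override can be pictured as replacing a whole subtree of the config with another named option from the same group. The sketch below is a hypothetical illustration, not Hydra's implementation; `select_option`, `LLM_OPTIONS`, and the `class_name` entries are placeholders rather than the contents of the repository's YAML files.

```python
from typing import Any, Dict

# Hypothetical config group: option name -> component config (placeholder contents).
LLM_OPTIONS: Dict[str, Dict[str, Any]] = {
    "ChatOpenAI": {"class_name": "ChatOpenAI"},
    "OpenAI": {"class_name": "OpenAI"},
}


def select_option(
    config: Dict[str, Any],
    override: str,
    groups: Dict[str, Dict[str, Dict[str, Any]]],
) -> None:
    """Replace a whole component from a 'group/name=option' string (in place)."""
    group_path, option = override.split("=", 1)
    parent, name = group_path.split("/", 1)
    # Copy so later edits to the config do not mutate the shared option table.
    config[parent][name] = dict(groups[group_path][option])


config = {"model": {"llm_for_answer": dict(LLM_OPTIONS["ChatOpenAI"])}}
select_option(config, "model/llm_for_answer=OpenAI", {"model/llm_for_answer": LLM_OPTIONS})
```

Unlike a dotted override, which changes one value, this form swaps every setting of the component at once.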

You can also save an experiment config under `configs/experiment`.

After you save a config under `configs/experiment`, you can apply it as shown below.

    poetry run python llmflowoptimizer/run.py experiment=example

Before you open a PR, test your code with the following command. The sample test only checks that the model and evaluation classes can be initialized.

    make test

If you want automatic code formatting, you can install it with

    make fix-lint-install

The code format will then be fixed automatically when you commit.

You can also format the code manually with

    make fix-lint

DELETE EVERYTHING ABOVE FOR YOUR PROJECT

What it does and what the purpose of the project is.

How to install the project.

How to run the project.