A LiDAR processing pipeline built on the ROS2 Humble node system. It is an improved version of https://github.com/YevgeniyEngineer/LiDAR-Processing.

See execution times captured with gprof in analysis.txt.

- KDTree

- Dynamic Radius Outlier Removal (DROR) filter

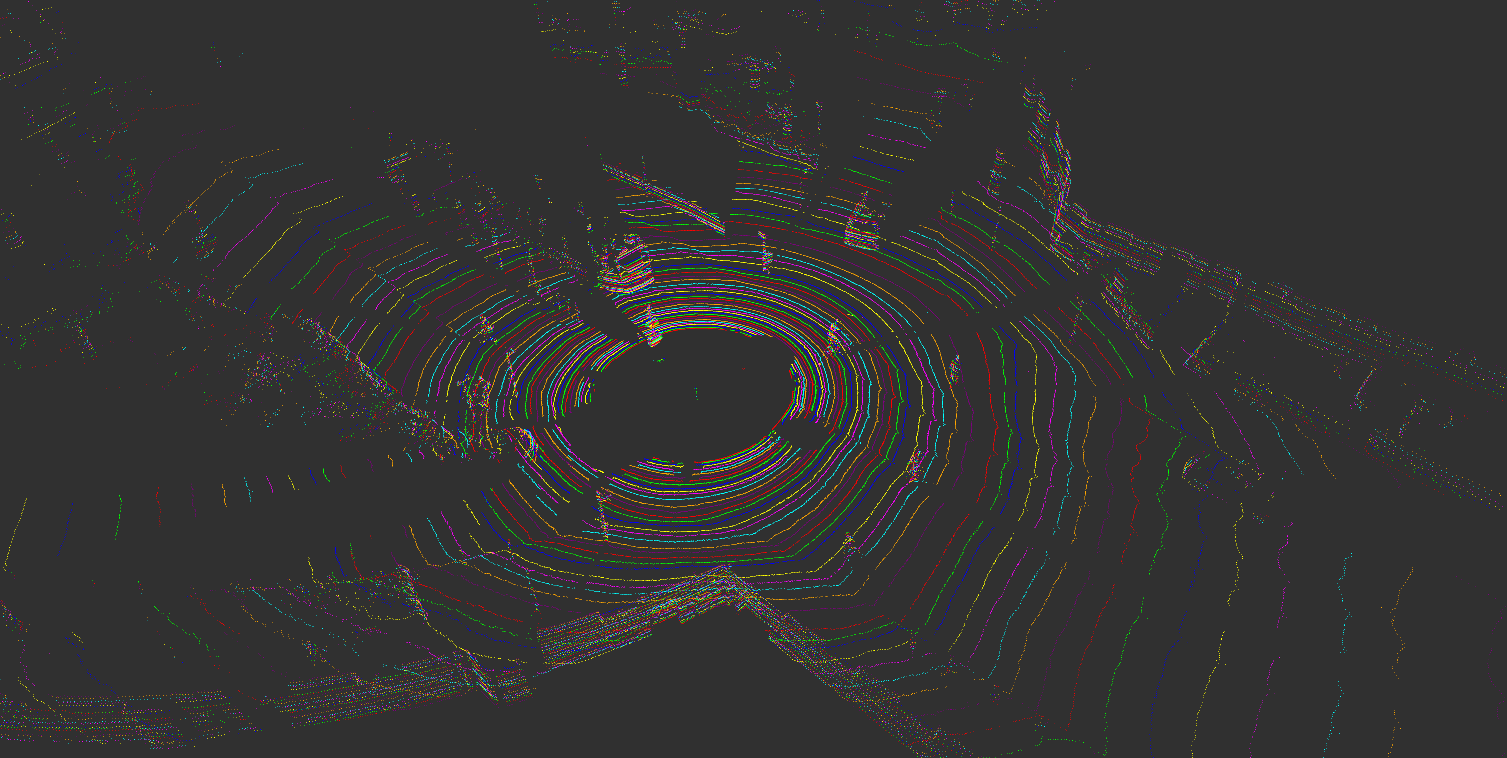

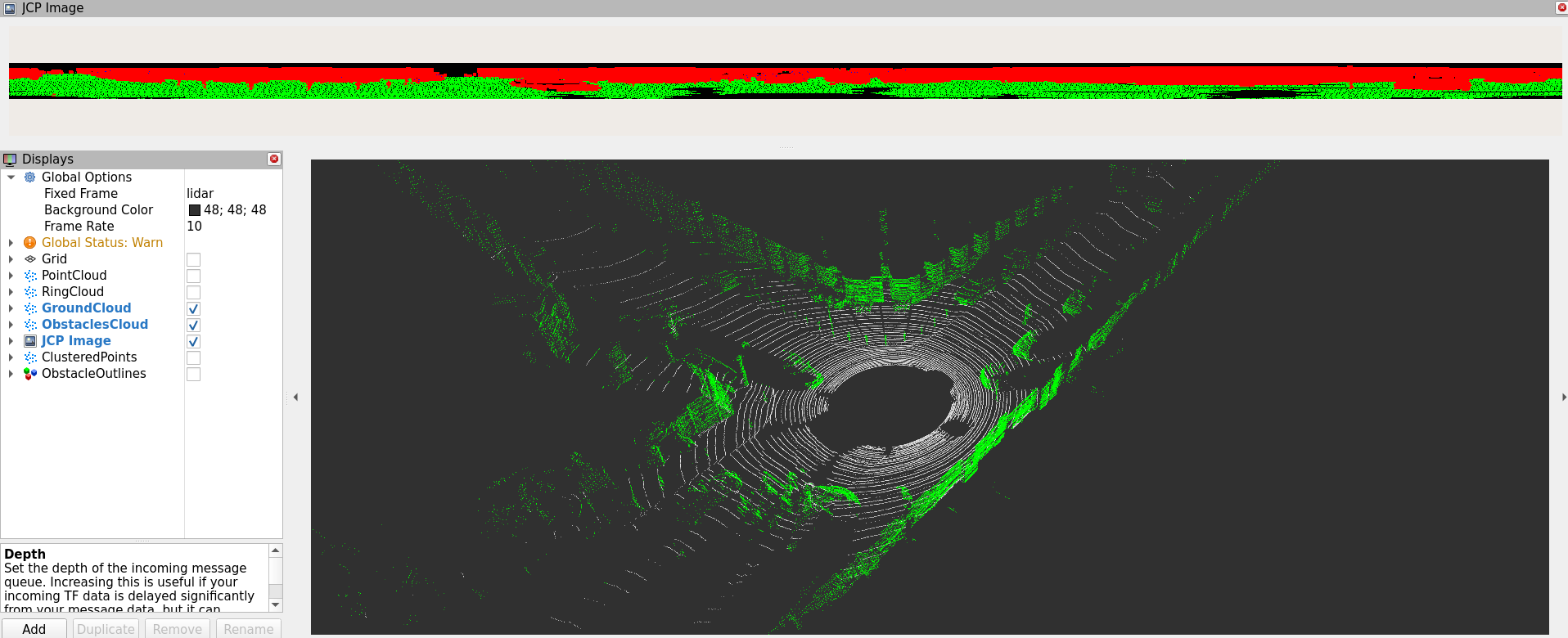

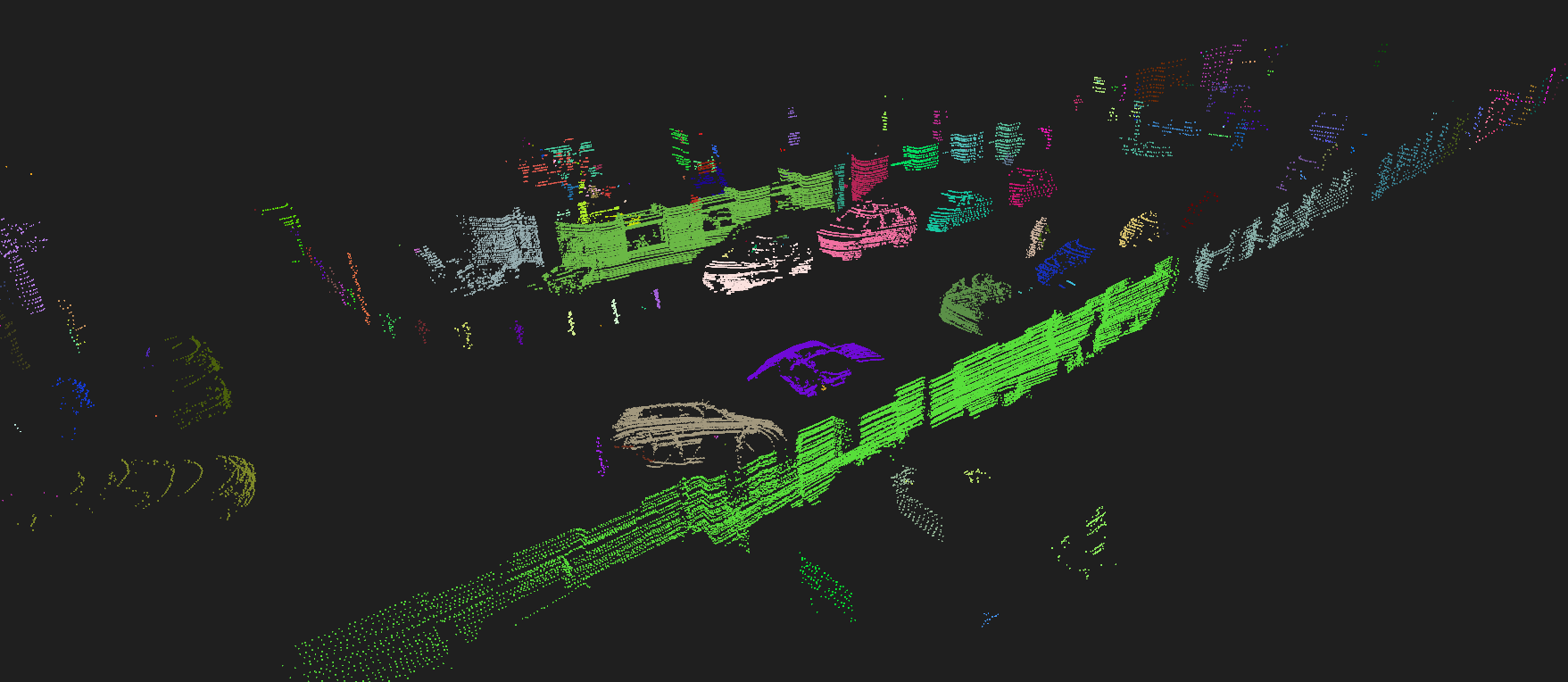

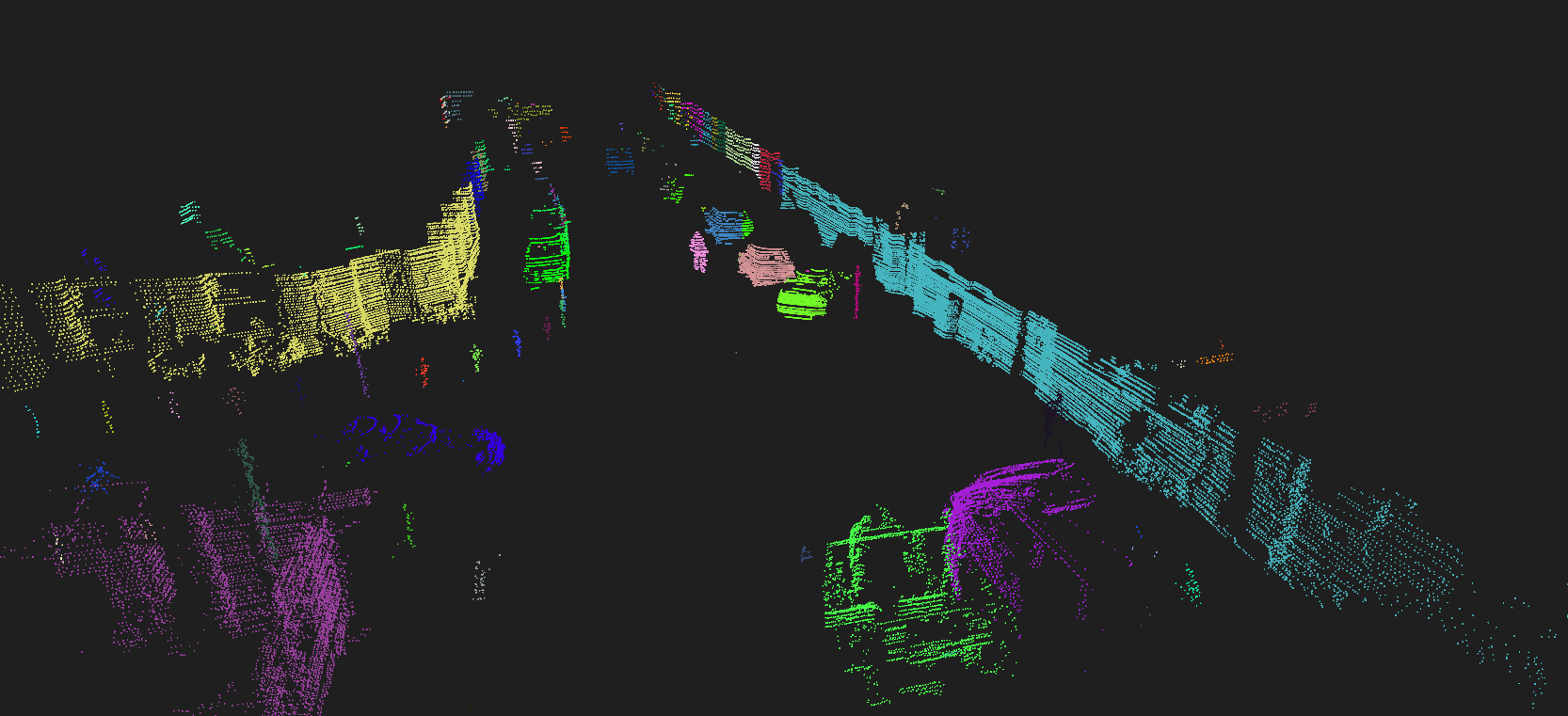

- Segmentation based on Jump-Convolution-Process and Ring-Shaped Elevation Conjunction Map

- Curved Voxel Clustering

- Andrew's Monotone Chain Convex Hull

- Shamos Algorithm for finding antipodal vertex pairs of a convex polygon

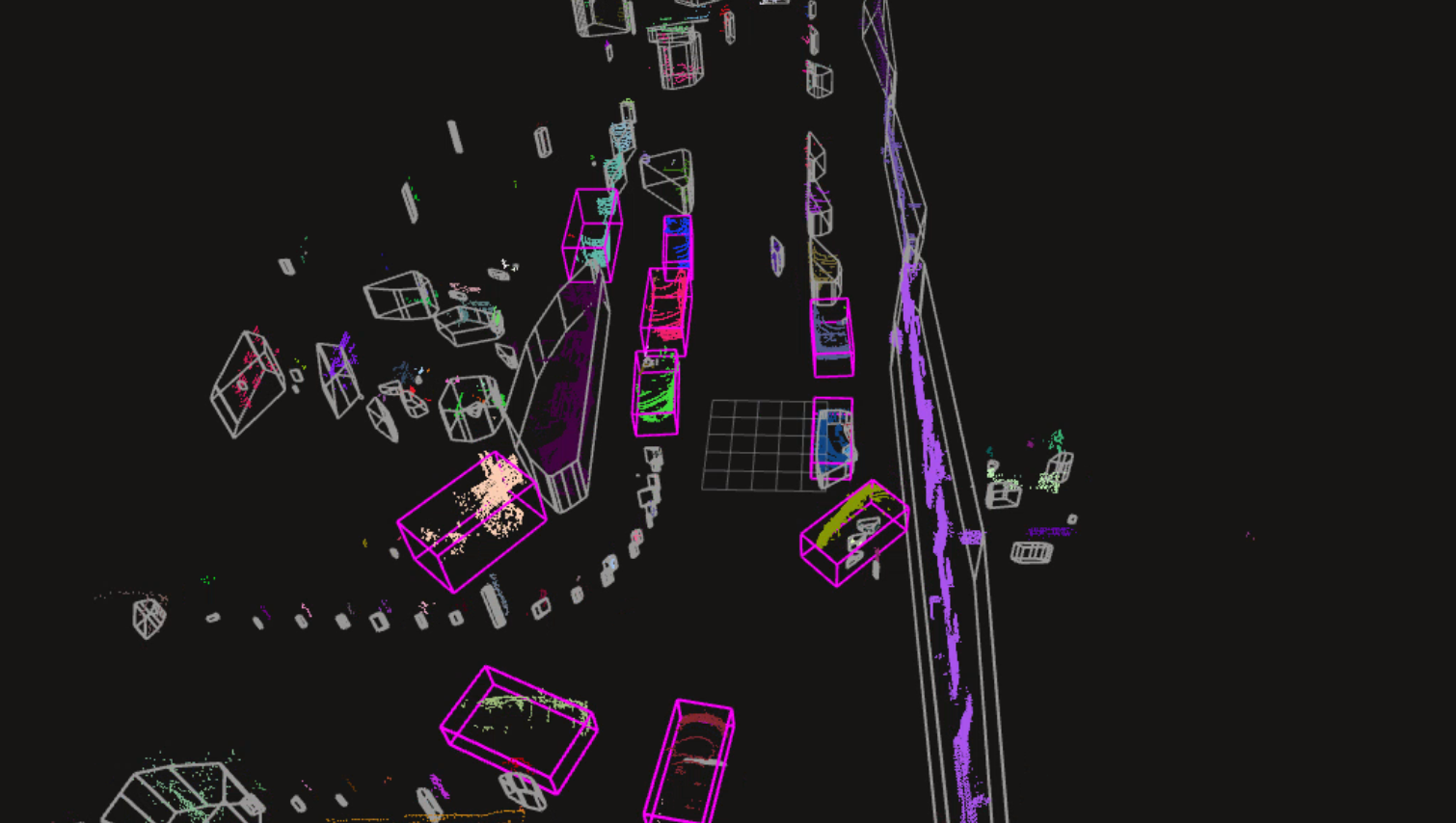

- Bounding Box fitting using Rotating Calipers and Shamos acceleration

- Bounding Box fitting using Principal Component Analysis

- Rudimentary vehicle classification based on shape matching
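A minimal sketch of the DROR filter listed above, assuming the usual formulation: each point's search radius grows linearly with its horizontal range (radius = beta × range × alpha, where alpha is the horizontal angular resolution), is clamped to a minimum, and points with fewer than `min_neighbors` neighbors inside that radius are discarded. The names `Point`, `DrorParams` and `drorFilter` are hypothetical, and the O(n²) neighbor scan stands in for the KD-tree radius search a real implementation would use.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point {
    double x, y, z;
};

// Hypothetical parameter set for this sketch; values must be tuned per sensor.
struct DrorParams {
    double alpha;               // horizontal angular resolution [rad]
    double beta;                // search radius multiplier
    double min_radius;          // lower bound on the search radius [m]
    std::size_t min_neighbors;  // points with fewer neighbors are removed
};

// Dynamic Radius Outlier Removal with a brute-force O(n^2) neighbor scan.
// A KD-tree radius query would replace the inner loop in practice.
std::vector<Point> drorFilter(const std::vector<Point>& cloud, const DrorParams& p) {
    assert(p.alpha > 0.0 && p.beta > 0.0 && p.min_radius >= 0.0);
    std::vector<Point> kept;
    kept.reserve(cloud.size());
    for (std::size_t i = 0; i < cloud.size(); ++i) {
        const Point& q = cloud[i];
        // Point spacing grows with range, so the search radius does too.
        const double range_xy = std::hypot(q.x, q.y);
        const double radius = std::max(p.min_radius, p.beta * range_xy * p.alpha);
        const double radius_sq = radius * radius;
        std::size_t neighbors = 0;
        // Stop counting as soon as the point is known to be an inlier.
        for (std::size_t j = 0; j < cloud.size() && neighbors < p.min_neighbors; ++j) {
            if (j == i) continue;
            const double dx = cloud[j].x - q.x;
            const double dy = cloud[j].y - q.y;
            const double dz = cloud[j].z - q.z;
            if (dx * dx + dy * dy + dz * dz <= radius_sq) ++neighbors;
        }
        if (neighbors >= p.min_neighbors) kept.push_back(q);
    }
    return kept;
}
```

For example, with `alpha = 0.0035` rad, `beta = 3` and a 0.1 m floor, a point 10 m away is searched within about 0.105 m, so a tight group of returns survives while an isolated return several meters from any other point is removed.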

The clustering algorithm uses the unordered_dense library to speed up calculations and reduce memory usage.

To update the submodules, run `git submodule update --init --recursive`.

If you prefer not to use the Devcontainer, install ROS2 by following https://docs.ros.org/en/humble/Installation/Ubuntu-Install-Debians.html#ubuntu-debian-packages.

I included Devcontainer setup instructions in the Docker Setup section below.

Dependencies:

- build-essential

- cmake

- ros-humble-desktop

- libpcl-dev

- libopencv-dev

Once you are inside the Devcontainer, you can build and launch the nodes from the main directory:

```bash
./scripts/clean.sh
./scripts/build.sh
./scripts/launch.sh
```

It is recommended to set up GDB Dashboard: https://github.com/cyrus-and/gdb-dashboard.

By convention, I assigned one node per package, so each node name matches its package name. This simplifies the launch scripts and the setup of VS Code Tasks.

```bash
# Build a single package in Debug mode
./scripts/build.sh -d processor

# Launch an individual node
./scripts/launch.sh dataloader
./scripts/launch.sh processor
./scripts/launch.sh rviz2

# Launch a GDB Debugger VS Code task
# Ctrl + Shift + P -> Tasks: Run Task -> Launch ROS2 Node with GDB
# When prompted, enter package name processor
```

Prior to processing, the point cloud is converted from unorganized to organized form. Strictly speaking, this is not necessary, because the segmentation function is templated to accept unorganized point clouds too. An approximate ring partitioning scheme is used to group points into rings, approximating the native Velodyne HDL-64E scan pattern as closely as possible. If the cloud is provided in unorganized form, the row (height) index of each point in the image is determined by a linear mapping over the vertical field of view of the Velodyne sensor.
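A minimal sketch of the linear row mapping described above, assuming 64 rows and an HDL-64E-like vertical field of view of roughly +2.0° to -24.8°. These constants and the function name `ringIndex` are assumptions for illustration, not values taken from the repository. Row 0 is the topmost beam, and points slightly outside the nominal field of view are clamped to the edge rows, which is one possible policy among several.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Assumed HDL-64E-like vertical field of view (degrees); not taken from the repository.
constexpr double kFovUpDeg = 2.0;
constexpr double kFovDownDeg = -24.8;
constexpr int kNumRings = 64;

// Maps a point to a row (ring) of the range image by spreading the elevation
// angle linearly over the vertical field of view. Row 0 is the top beam.
int ringIndex(double x, double y, double z) {
    assert(std::isfinite(x) && std::isfinite(y) && std::isfinite(z));
    constexpr double kPi = 3.14159265358979323846;
    const double elevation_deg = std::atan2(z, std::hypot(x, y)) * 180.0 / kPi;
    const double fraction = (kFovUpDeg - elevation_deg) / (kFovUpDeg - kFovDownDeg);
    const int row = static_cast<int>(std::floor(fraction * kNumRings));
    // Clamp returns that fall slightly outside the nominal field of view.
    return std::clamp(row, 0, kNumRings - 1);
}
```

With these constants, a point at zero elevation such as (10, 0, 0) maps to row 4, since 2.0 / 26.8 × 64 ≈ 4.78.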

The ground segmentation implementation is based on "Fast Ground Segmentation for 3D LiDAR Point Cloud Based on Jump-Convolution-Process". It follows most of the steps outlined in the original paper, except that I added an intermediate RANSAC filter to correct misclassifications close to the vehicle. These are caused by pitch deviation of the vehicle, uncertainty in the sensor mounting position, or reflective LiDAR artifacts that appear below the ground surface.
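The details of the intermediate RANSAC correction are not given here, so the following is only a generic RANSAC plane fit illustrating the idea: repeatedly pick three points, build a candidate plane from their cross product, count the points within `distance_threshold` of it, and keep the plane with the most inliers. The names `ransacPlane`, `Vec3` and `Plane`, the fixed iteration count and the seed are assumptions; how the repository combines this step with the jump-convolution labels is not shown.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Vec3 {
    double x, y, z;
};

// Plane n.p + d = 0 with unit normal n.
struct Plane {
    Vec3 n;
    double d;
};

static Vec3 sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
static double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Generic RANSAC plane fit (sketch); not the repository's implementation.
// Returns the candidate plane supported by the most inliers.
Plane ransacPlane(const std::vector<Vec3>& pts, double distance_threshold,
                  int iterations, unsigned seed = 42) {
    assert(pts.size() >= 3);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, pts.size() - 1);
    Plane best{{0.0, 0.0, 1.0}, 0.0};
    std::size_t best_inliers = 0;
    for (int it = 0; it < iterations; ++it) {
        const Vec3& a = pts[pick(rng)];
        const Vec3& b = pts[pick(rng)];
        const Vec3& c = pts[pick(rng)];
        Vec3 n = cross(sub(b, a), sub(c, a));
        const double len = std::sqrt(dot(n, n));
        if (len < 1e-9) continue;  // degenerate sample: repeated or collinear points
        n = {n.x / len, n.y / len, n.z / len};
        const double d = -dot(n, a);
        std::size_t inliers = 0;
        for (const Vec3& p : pts) {
            if (std::abs(dot(n, p) + d) <= distance_threshold) ++inliers;
        }
        if (inliers > best_inliers) {
            best_inliers = inliers;
            best = {n, d};
        }
    }
    return best;
}
```

The sign of the returned normal is arbitrary, so a caller would typically orient it upward (for example, negate `n` and `d` when `n.z < 0`) before using point-to-plane distances as heights.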

The clustering implementation is based on "Curved-Voxel Clustering for Accurate Segmentation of 3D LiDAR Point Clouds with Real-Time Performance".
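The core idea of curved-voxel clustering is to bin points in spherical rather than Cartesian coordinates, so that voxels grow with range the way LiDAR point spacing does, and then to connect occupied neighboring voxels. The sketch below is not the paper's exact algorithm: the bin sizes (`d_range`, `d_azimuth`, `d_elevation`) and the function name are hypothetical, `std::unordered_map` stands in for unordered_dense, and azimuth wrap-around at ±π is ignored for brevity.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <unordered_map>
#include <vector>

struct Pt {
    double x, y, z;
};

// Packs three signed voxel indices into one 64-bit key (21 bits each).
static std::uint64_t voxelKey(int r, int a, int e) {
    auto enc = [](int v) { return static_cast<std::uint64_t>(v + (1 << 20)) & 0x1FFFFFu; };
    return (enc(r) << 42) | (enc(a) << 21) | enc(e);
}

// Sketch only: bin sizes are hypothetical, std::unordered_map stands in for
// unordered_dense, and azimuth wrap-around at +/-pi is ignored.
// Returns one cluster label per point.
std::vector<int> curvedVoxelCluster(const std::vector<Pt>& pts, double d_range,
                                    double d_azimuth, double d_elevation) {
    assert(d_range > 0.0 && d_azimuth > 0.0 && d_elevation > 0.0);
    const std::size_t n = pts.size();
    std::vector<int> ri(n), ai(n), ei(n);
    std::unordered_map<std::uint64_t, std::vector<std::size_t>> voxels;
    for (std::size_t i = 0; i < n; ++i) {
        const Pt& q = pts[i];
        const double range = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z);
        const double azimuth = std::atan2(q.y, q.x);
        const double elevation = std::atan2(q.z, std::hypot(q.x, q.y));
        // Voxels are fixed in angle, so their metric size grows with range.
        ri[i] = static_cast<int>(std::floor(range / d_range));
        ai[i] = static_cast<int>(std::floor(azimuth / d_azimuth));
        ei[i] = static_cast<int>(std::floor(elevation / d_elevation));
        voxels[voxelKey(ri[i], ai[i], ei[i])].push_back(i);
    }
    std::vector<int> label(n, -1);
    int next_label = 0;
    for (std::size_t seed = 0; seed < n; ++seed) {
        if (label[seed] != -1) continue;
        // Breadth-first flood fill through the point's own voxel and its 26 neighbors.
        std::queue<std::size_t> frontier;
        label[seed] = next_label;
        frontier.push(seed);
        while (!frontier.empty()) {
            const std::size_t i = frontier.front();
            frontier.pop();
            for (int dr = -1; dr <= 1; ++dr)
                for (int da = -1; da <= 1; ++da)
                    for (int de = -1; de <= 1; ++de) {
                        const auto it = voxels.find(voxelKey(ri[i] + dr, ai[i] + da, ei[i] + de));
                        if (it == voxels.end()) continue;
                        for (const std::size_t j : it->second) {
                            if (label[j] == -1) {
                                label[j] = next_label;
                                frontier.push(j);
                            }
                        }
                    }
        }
        ++next_label;
    }
    return label;
}
```

A production version would flood-fill over voxels rather than points, so that each occupied voxel is expanded only once.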

Obstacle clusters are further simplified by computing the outer contour (polygon) of each point cluster.

Three main types of simple polygons are considered:

- Convex hull (Andrew's Monotone Chain) [Implemented]

- Oriented bounding box (Rotating Calipers with Shamos acceleration and Principal Component Analysis) [Implemented]

- Concave hull (X-Shape Concave Hull) [Not implemented]
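For reference, Andrew's monotone chain algorithm sorts the points lexicographically and builds the lower and upper hulls with a cross-product turn test, in O(n log n) time. The sketch below assumes 2D input (for example, cluster points projected onto the ground plane, which is an assumption about how the hull is used here); `P2` and `convexHull` are hypothetical names. It returns the hull in counter-clockwise order with collinear points removed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// 2D point; input is assumed to be cluster points projected onto the ground plane.
struct P2 {
    double x, y;
    bool operator<(const P2& o) const { return x < o.x || (x == o.x && y < o.y); }
};

// z-component of (a - o) x (b - o); positive for a counter-clockwise turn.
static double cross(const P2& o, const P2& a, const P2& b) {
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Andrew's monotone chain: returns hull vertices in counter-clockwise order.
std::vector<P2> convexHull(std::vector<P2> pts) {
    std::sort(pts.begin(), pts.end());
    pts.erase(std::unique(pts.begin(), pts.end(),
                          [](const P2& a, const P2& b) { return a.x == b.x && a.y == b.y; }),
              pts.end());
    if (pts.size() < 3) return pts;
    std::vector<P2> hull(2 * pts.size());
    std::size_t k = 0;
    // Lower hull, left to right: pop while the last turn is not counter-clockwise.
    for (const P2& p : pts) {
        while (k >= 2 && cross(hull[k - 2], hull[k - 1], p) <= 0) --k;
        hull[k++] = p;
    }
    // Upper hull, right to left; t protects the finished lower hull from popping.
    for (std::size_t i = pts.size() - 1, t = k + 1; i-- > 0;) {
        while (k >= t && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    hull.resize(k - 1);  // the last point repeats the first
    assert(hull.size() >= 2);
    return hull;
}
```

For example, the square (0,0), (2,0), (2,2), (0,2) with the extra points (1,1) and (1,0) yields the four corners in counter-clockwise order starting at (0,0); the interior point and the point on an edge are dropped.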

The simplified obstacle contours can be used for:

- Filtering obstacles by size

- Obstacle tracking

- Collision detection

- Dynamic path planning
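Filtering obstacles by size needs the dimensions of an oriented bounding box. The minimum-area rectangle enclosing a convex polygon always has one side collinear with a polygon edge, so testing every edge direction finds it. The sketch below does exactly that in O(n²); it is a deliberately simpler substitute for the rotating-calipers method with Shamos acceleration listed above, which reaches O(n). The `Box` struct and `minAreaRect` are hypothetical names, and the input is assumed to be a convex hull with vertices in order.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct V2 {
    double x, y;
};

struct Box {
    double area;
    double length;  // extent along the chosen edge direction
    double width;   // extent perpendicular to it
    double angle;   // edge direction [rad]
};

// Brute-force O(n^2) search over hull edge directions; a simpler stand-in
// for rotating calipers. Expects convex hull vertices in order.
Box minAreaRect(const std::vector<V2>& hull) {
    assert(hull.size() >= 3);
    Box best{std::numeric_limits<double>::infinity(), 0.0, 0.0, 0.0};
    const std::size_t n = hull.size();
    for (std::size_t i = 0; i < n; ++i) {
        const V2& a = hull[i];
        const V2& b = hull[(i + 1) % n];
        const double len = std::hypot(b.x - a.x, b.y - a.y);
        if (len == 0.0) continue;
        // Unit axis along the edge (u) and perpendicular to it (v).
        const double ux = (b.x - a.x) / len, uy = (b.y - a.y) / len;
        const double vx = -uy, vy = ux;
        double min_u = std::numeric_limits<double>::infinity(), max_u = -min_u;
        double min_v = min_u, max_v = -min_u;
        // Project every vertex onto both axes to get the rectangle extents.
        for (const V2& p : hull) {
            const double pu = p.x * ux + p.y * uy;
            const double pv = p.x * vx + p.y * vy;
            min_u = std::min(min_u, pu);
            max_u = std::max(max_u, pu);
            min_v = std::min(min_v, pv);
            max_v = std::max(max_v, pv);
        }
        const double area = (max_u - min_u) * (max_v - min_v);
        if (area < best.area) best = {area, max_u - min_u, max_v - min_v, std::atan2(uy, ux)};
    }
    return best;
}
```

For the diamond (1,0), (0,1), (-1,0), (0,-1), this returns an area of 2 (a square with side √2), whereas the axis-aligned box would have area 4; the `length` and `width` fields are what a size filter would compare against thresholds.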

I attempted to extract vehicles from the scene using classical processing techniques, without relying on neural networks, and achieved reasonable success. However, this approach appears to have limits on how reliably vehicles can be extracted from the scene.

To enable code profiling, add the following lines to `CMakeLists.txt`:

```cmake
set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -pg")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} -pg")
```

To generate the profiler analysis information:

```bash
gprof ./install/processor/lib/processor/processor ./gmon.out > analysis.txt
```

To use the Devcontainer, install the VS Code Dev Containers and Docker extensions:

```bash
code --install-extension ms-vscode-remote.remote-containers --force && \
code --install-extension ms-azuretools.vscode-docker --force
```

Update the apt package index and install the packages that allow apt to use a repository over HTTPS:

```bash
sudo apt-get update
sudo apt-get install --fix-missing apt-transport-https ca-certificates curl software-properties-common
```

Add Docker’s official GPG key:

```bash
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg
```

Set up the stable repository:

```bash
echo "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
```

Install the Docker engine:

```bash
sudo apt-get update
sudo apt-get install docker-ce docker-ce-cli containerd.io
```

Start the Docker engine and confirm the installation:

```bash
sudo systemctl start docker
sudo systemctl status docker
```

Enable Docker to start at boot:

```bash
sudo systemctl enable docker.service
sudo systemctl enable containerd.service
```

Create the docker group:

```bash
sudo groupadd docker
```

Add your user to the docker group:

```bash
sudo usermod -aG docker $USER
```

For the group change to take effect, log out and log back in, or restart your computer. This is necessary because group membership is only re-evaluated at login. Alternatively, you can apply the change in the current shell without logging out:

```bash
newgrp docker
```

Set up a Docker account at https://hub.docker.com/signup to be able to pull Docker images.

Install pass:

```bash
sudo apt-get install pass
```

Download and install the credential helper package:

```bash
wget https://github.com/docker/docker-credential-helpers/releases/download/v0.6.0/docker-credential-pass-v0.6.0-amd64.tar.gz && \
  tar -xf docker-credential-pass-v0.6.0-amd64.tar.gz && \
  chmod +x docker-credential-pass && \
  sudo mv docker-credential-pass /usr/local/bin/
```

Create a new GPG key:

```bash
gpg2 --gen-key
```

Initialize pass using the newly created key:

```bash
pass init "<Your Name>"
```

Open the Docker config:

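```bash
nano ~/.docker/config.json
```

Change the config to the following; the `credsStore` entry tells Docker to store credentials through the pass helper:

```json
{
  "auths": {
    "https://index.docker.io/v1/": {}
  },
  "credsStore": "pass"
}
```

Log in to Docker with your own credentials (when prompted, enter your username and password):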

```bash
docker login
```

Allow Docker containers to display GUI applications on your host’s X server:

```bash
xhost +local:docker
```

Alternatively, you might need to allow the root user on local connections:

```bash
xhost +local:root
```

The following steps are optional and only apply if you have an NVIDIA GPU.

Open a terminal and add the NVIDIA package repositories:

```bash
distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -
curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
```

Update your package list and install the NVIDIA Docker package:

```bash
sudo apt-get update
sudo apt-get install -y nvidia-docker2
```

Restart the Docker daemon to apply the changes:

```bash
sudo systemctl restart docker
```

Optionally, you can configure Docker to use the NVIDIA runtime by default so that every container you launch uses the GPU.

Open or create the Docker daemon configuration file in your editor:

```bash
sudo nano /etc/docker/daemon.json
```

Add or modify the file to set the NVIDIA runtime as the default:

```json
{
  "runtimes": {
    "nvidia": {
      "path": "nvidia-container-runtime",
      "runtimeArgs": []
    }
  },
  "default-runtime": "nvidia"
}
```

Restart the Docker service to apply these configuration changes:

```bash
sudo systemctl restart docker
```

If you use this software, please cite it as follows:

```bibtex
@software{simonov_lidar_pipeline_2024,
  author = {Simonov, Yevgeniy},
  title = {{LiDAR Processing Pipeline}},
  url = {https://github.com/YevgeniyEngineer/LiDAR-Processing-V2},
  version = {0.2.0},
  date = {2024-06-09},
  license = {GPL-3.0}
}
```