This is a clean and robust PyTorch implementation of Soft Actor-Critic (SAC) for discrete action spaces.
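For orientation, the key difference from continuous SAC is that with a finite action set the entropy-regularized state value can be computed exactly as a sum over actions instead of being estimated from sampled actions (Christodoulou, 2019). The snippet below is a minimal, dependency-free sketch of that quantity, V(s) = Σ_a π(a|s) [Q(s,a) − α log π(a|s)]. The function names and the example numbers are illustrative assumptions and are not taken from this repository's code.

```python
import math


def softmax(logits):
    """Turn raw policy logits into action probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_state_value(probs, q_values, alpha):
    """Exact soft value for a discrete policy.

    V(s) = sum_a pi(a|s) * (Q(s, a) - alpha * log pi(a|s))
    Actions with zero probability contribute nothing and are skipped
    to avoid evaluating log(0).
    """
    return sum(
        p * (q - alpha * math.log(p))
        for p, q in zip(probs, q_values)
        if p > 0.0
    )


# Hypothetical two-action state: uniform policy, Q-values 1.0 and 3.0.
probs = softmax([0.0, 0.0])
value = soft_state_value(probs, [1.0, 3.0], alpha=0.2)
```

With a uniform policy the entropy bonus is α·ln 2, so `value` equals the mean Q-value plus that bonus. An actual agent would typically evaluate this with neural-network outputs in batched tensor form.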


All experiments were trained with the same hyperparameters. Other RL algorithms implemented in PyTorch can be found here.

gymnasium==0.29.1

numpy==1.26.1

pytorch==2.1.0

python==3.11.5

python main.py

where the default environment is 'CartPole'.

python main.py --EnvIdex 0 --render True --Loadmodel True --ModelIdex 50

which will render the 'CartPole'.
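The flags in the command above take `True`/`False` as text, which needs some care with `argparse`: `type=bool` would treat any non-empty string, including `"False"`, as true. The sketch below shows one common way such flags could be parsed. It is an assumption about how a script like this might be written, not a copy of `main.py`; the `str2bool` helper, the `ENV_NAMES` list, and all default values are hypothetical, and the index-to-environment order follows the list given in this README.

```python
import argparse

# Index-to-environment mapping as listed in this README (name is hypothetical).
ENV_NAMES = ["CartPole-v1", "LunarLander-v2"]


def str2bool(value):
    """Parse 'True'/'False'-style text into a real bool for argparse."""
    if isinstance(value, bool):
        return value
    text = value.strip().lower()
    if text in ("true", "yes", "1"):
        return True
    if text in ("false", "no", "0"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser():
    parser = argparse.ArgumentParser()
    # Defaults below are assumptions made for this sketch.
    parser.add_argument("--EnvIdex", type=int, default=0,
                        choices=range(len(ENV_NAMES)))
    parser.add_argument("--render", type=str2bool, default=False)
    parser.add_argument("--Loadmodel", type=str2bool, default=False)
    parser.add_argument("--ModelIdex", type=int, default=0)
    return parser


# Parse the same arguments as the command above, passed as an explicit list.
args = build_parser().parse_args(
    ["--EnvIdex", "0", "--render", "True", "--Loadmodel", "True", "--ModelIdex", "50"]
)
env_name = ENV_NAMES[args.EnvIdex]
```

Restricting `--EnvIdex` with `choices` makes an out-of-range index fail at parse time rather than later, when the environment is created.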

If you want to train on a different environment:

python main.py --EnvIdex 1

The --EnvIdex can be set to 0 or 1, where

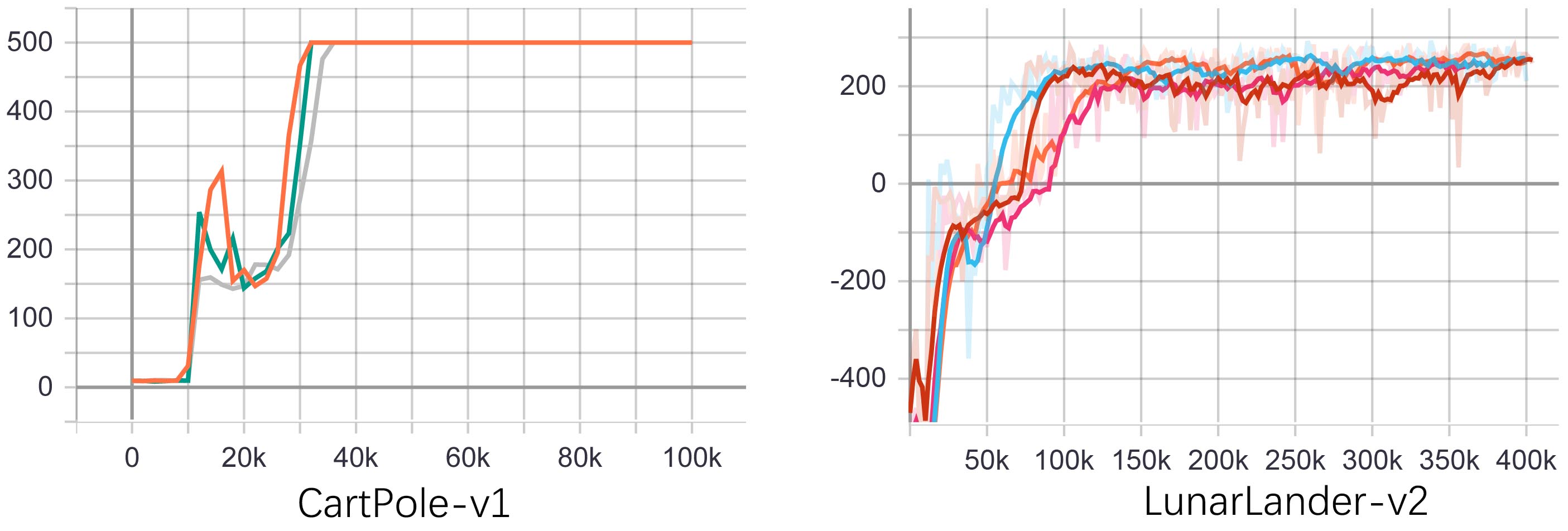

'--EnvIdex 0' for 'CartPole-v1'

'--EnvIdex 1' for 'LunarLander-v2'

Note: if you want to train on LunarLander-v2, you need to install box2d-py first. You can install box2d-py via:

pip install gymnasium[box2d]

You can use TensorBoard to record and visualize the training curve.

- Installation (please make sure PyTorch is already installed):

pip install tensorboard

pip install packaging

- Record (the training curves will be saved in the 'runs' directory):

python main.py --write True

- Visualization:

tensorboard --logdir runs

For more details on the hyperparameter settings, please check 'main.py'.

Christodoulou P. Soft actor-critic for discrete action settings[J]. arXiv preprint arXiv:1910.07207, 2019.

Haarnoja T, Zhou A, Abbeel P, et al. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor[C]//International conference on machine learning. PMLR, 2018: 1861-1870.

Haarnoja T, Zhou A, Hartikainen K, et al. Soft actor-critic algorithms and applications[J]. arXiv preprint arXiv:1812.05905, 2018.