Yinwei Wei, Xiaohao Liu, Yunshan Ma, Xiang Wang, Liqiang Nie and Tat-Seng Chua, SIGIR'23

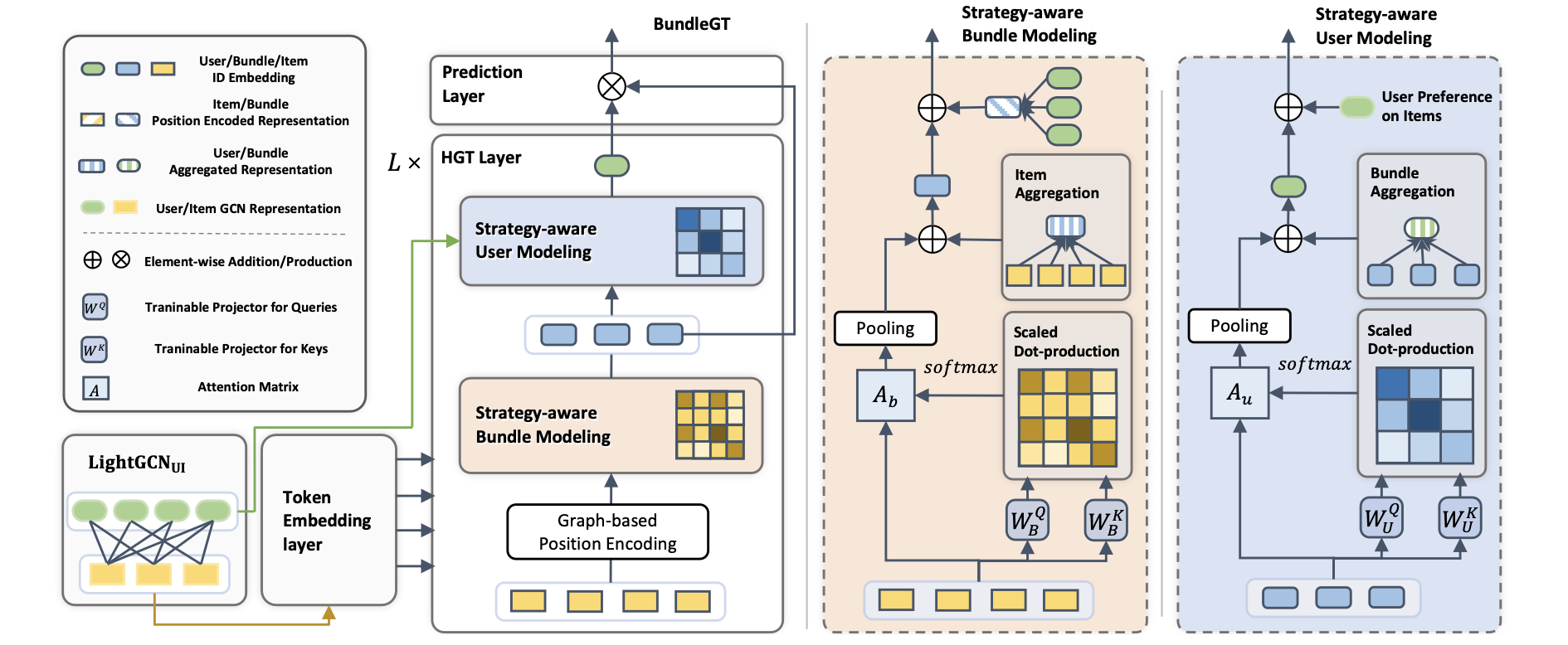

Bundle Graph Transformer, termed BundleGT, is a novel model for bundle recommendation that explores strategy-aware user and bundle representations for user-bundle interaction prediction. BundleGT consists of a token embedding layer, a hierarchical graph transformer (HGT) layer, and a prediction layer.

If you use our code and datasets in your research, please cite:

@inproceedings{BundleGT2023,
  author    = {Yinwei Wei and
               Xiaohao Liu and
               Yunshan Ma and
               Xiang Wang and
               Liqiang Nie and
               Tat{-}Seng Chua},
  title     = {Strategy-aware Bundle Recommender System},
  booktitle = {{SIGIR}},
  publisher = {{ACM}},
  year      = {2023}
}

- Python >= 3.7.3

- Supported (tested) CUDA version: 10.2

- PyTorch >= 1.9.0
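The version requirements above can be checked before training with a short script. This is a minimal sketch, not part of the repository: the helper names `parse_version`, `meets_minimum`, and `check_environment` are illustrative, and the check covers only the Python and PyTorch versions, not CUDA.

```python
import re
import sys

# Minimum versions taken from the requirements list above.
MIN_PYTHON = (3, 7, 3)
MIN_TORCH = (1, 9, 0)


def parse_version(text):
    """Turn a string such as '1.9.0+cu102' into the tuple (1, 9, 0)."""
    core = text.split("+")[0]  # drop a local build suffix such as '+cu102'
    numbers = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)  # keep only the leading digits
        numbers.append(int(match.group()) if match else 0)
    while len(numbers) < 3:  # pad '2.0' to (2, 0, 0)
        numbers.append(0)
    return tuple(numbers)


def meets_minimum(found, minimum):
    """Return True if the version string `found` is at least `minimum`."""
    return parse_version(found) >= minimum


def check_environment():
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    if sys.version_info[:3] < MIN_PYTHON:
        problems.append("Python %d.%d.%d is older than 3.7.3" % sys.version_info[:3])
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(torch.__version__, MIN_TORCH):
            problems.append("PyTorch %s is older than 1.9.0" % torch.__version__)
    return problems


for problem in check_environment():
    print("WARNING:", problem)
```

An empty result only means the minimum versions are met; it does not guarantee that a newer PyTorch release behaves identically to the tested setup.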

- The entry script for training and evaluation is: main.py.

- The config file is: config.yaml.

- The script for data preprocessing and the dataloader is: utility.py.

- The model folder is: ./models.

- The experimental logs in TensorBoard format are saved in ./runs.

- The experimental logs in txt format are saved in ./log.

- The best model and the associated config file for each experimental setting are saved in ./checkpoints.

To facilitate understanding and implementation of our model code, we have divided it into four parts:

- BundleGT.py, which contains the main Bundle Graph Transformer model,

- LGCN.py, which includes the LightGCN module,

- LiT.py, which contains the Light self-attention module, and

- HGT.py, which includes the Hierarchical Graph Transformer module.


Download and then decompress the dataset file into the current folder:

tar -zxvf dataset.tgz

We use the Youshu, NetEase, and iFashion datasets with the same settings as CrossCBR. The datasets are provided as dataset.tgz in the CrossCBR repository, and we recommend citing the CrossCBR paper if you use them.
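Where the `tar` command is unavailable, the same decompression step can be done with Python's standard-library `tarfile` module. This is a minimal sketch: the function name `extract_dataset` and its defaults are illustrative, and nothing is assumed about the folder layout inside dataset.tgz.

```python
import tarfile
from pathlib import Path


def extract_dataset(archive="dataset.tgz", target="."):
    """Extract a gzip-compressed tar archive into `target`.

    Returns the list of member names found in the archive.
    """
    archive_path = Path(archive)
    if not archive_path.is_file():
        raise FileNotFoundError("archive not found: %s" % archive_path)
    with tarfile.open(archive_path, "r:gz") as tar:
        names = tar.getnames()
        tar.extractall(path=target)  # only extract archives from a trusted source
    return names


# Usage, run from the repository root after downloading dataset.tgz:
#     for name in extract_dataset():
#         print(name)
```

Printing the returned member names is a quick way to confirm which dataset folders were unpacked.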


Train BundleGT on the dataset Youshu:

python main.py -g 0 -m BundleGT -d Youshu --info="" --batch_size_test=2048 --batch_size_train=2048 --lr=1e-3 --l2_reg=1e-5 --num_ui_layer=4 --gcn_norm=0 --num_trans_layer=3 --num_token=70 --folder="train" --early_stopping=40 --ub_alpha=0.5 --bi_alpha=0.5

Hyperparameters can be configured in two ways: 1) editing the file config.yaml, or 2) passing command-line arguments, which are defined in main.py. We strongly recommend reading the paper to better understand the effect of the key hyperparameters.
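When comparing several settings through command-line arguments, it can help to generate the commands programmatically. The sketch below only builds and prints command lines using flags that appear in the training command above. The helper `build_command` is hypothetical, and the grid values (`1e-4` for `--lr`, `35` for `--num_token`) are illustrative rather than recommended settings.

```python
import shlex


def build_command(overrides, dataset="Youshu", gpu=0):
    """Build an argument list for main.py from a dict of hyperparameters.

    Keys in `overrides` must match long options accepted by main.py,
    for example 'lr' or 'num_token'.
    """
    cmd = ["python", "main.py", "-g", str(gpu), "-m", "BundleGT", "-d", dataset]
    for name, value in overrides.items():
        cmd.append("--%s=%s" % (name, value))
    return cmd


# Illustrative grid: two learning rates crossed with two token counts.
grid = [
    {"lr": lr, "num_token": tokens}
    for lr in ("1e-3", "1e-4")
    for tokens in (35, 70)
]

for overrides in grid:
    # Quote each part so the printed line can be pasted into a shell.
    print(" ".join(shlex.quote(part) for part in build_command(overrides)))
```

To launch the runs rather than print them, each list returned by `build_command` can be passed to `subprocess.run`. Any option not listed in `overrides` falls back to whatever default main.py and config.yaml define.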