This repository contains the system demo code and examples for the paper *Evaluating Reading Comprehension Exercises Generated by LLMs: A Showcase of ChatGPT in Education Applications*, presented at the BEA Workshop at ACL 2023.

In this work, we implement a reading comprehension exercise generation system that provides high-quality and personalized reading materials for middle school English learners in China.

Our study makes three contributions:

- We fully leverage the capabilities of state-of-the-art LLMs to tackle complex, compound tasks and integrate them into a carefully designed educational system. The reading passages and exercise questions generated by our system are of significantly higher quality than those produced by previous models, and some even exceed the standard of human-written textbook exercises.

- To the best of our knowledge, our reading exercise generation system is among the first applications of ChatGPT in education. The system has been used by middle school English teachers and is making a real impact in schools.

- We gather feedback from both experts and general users on the efficacy of our system. We believe this feedback is valuable, as there are few instances of ChatGPT applications deployed in real-world educational settings. Our findings offer insights for future researchers and practitioners developing more effective AI-driven educational systems.

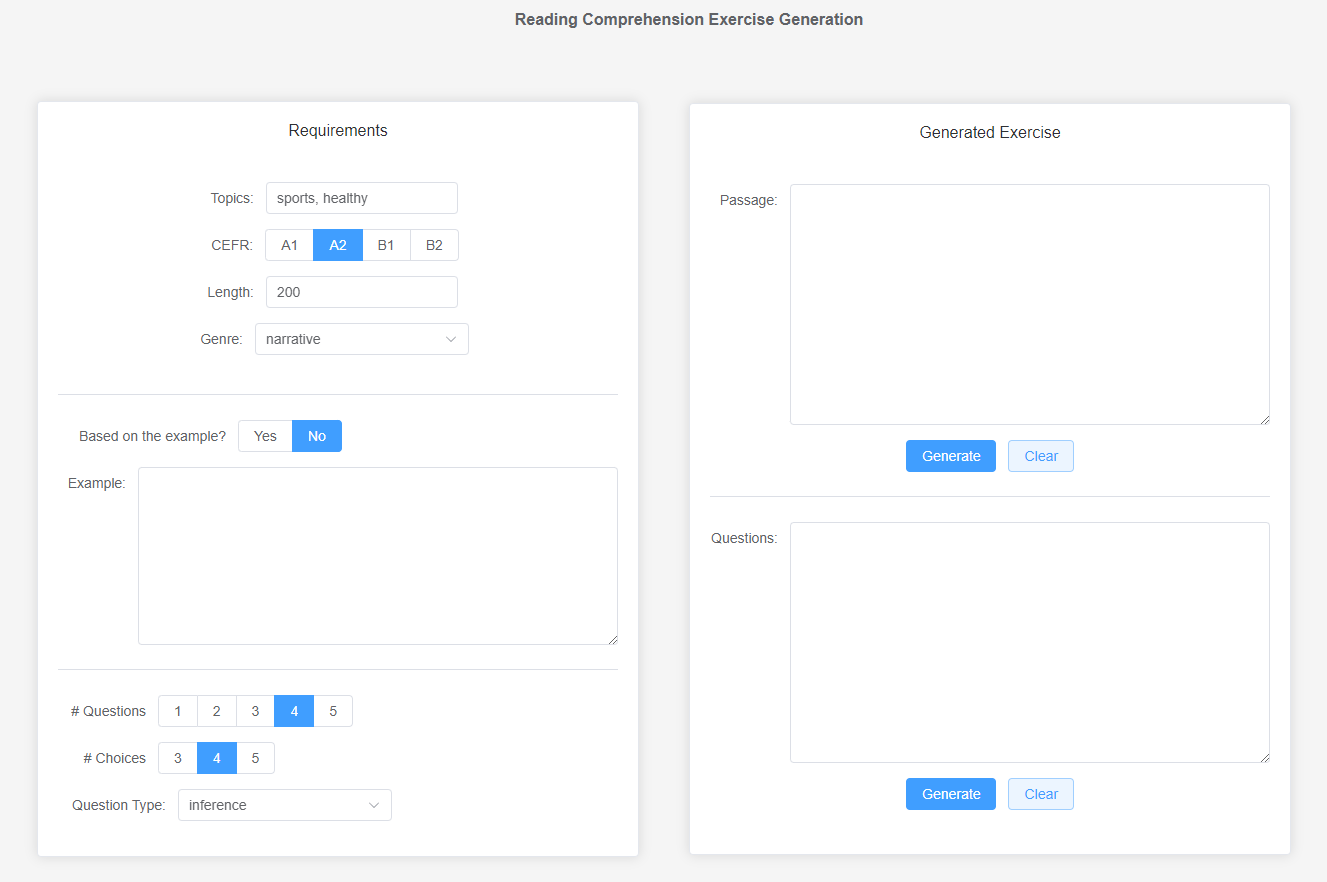

Our reading comprehension exercise generation system provides two main features: reading passage generation and multiple-choice exercise question generation.

- Reading passage generation: generate passages that meet given requirements.
  - Basic requirements: topics, difficulty level, length, genre
  - In-context learning: generate or rewrite a passage based on a given example text
- Exercise question generation: generate multiple-choice questions based on a passage (either input by a human or generated by the system).
  - Basic requirements: number of questions, number of choices, question type
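The basic passage requirements map onto the placeholders of the passage-generation prompt shown in this README (topics, length, genre, and a CEFR level standing in for difficulty). The sketch below collects and checks them before a prompt is built. `PassageRequest`, its fields, and the comma-joining of topics are hypothetical illustrations, not the repository's actual data model; only the prompt template text is copied from this README.

```python
from dataclasses import dataclass
from typing import List

# CEFR proficiency levels, from basic user (A1) to proficient user (C2).
CEFR_LEVELS = ("A1", "A2", "B1", "B2", "C1", "C2")

# Template text copied from the passage-generation prompt in this README.
PASSAGE_PROMPT = """
Please generate a writing (without a title) satisfying the following requirements:
Topics: {}
Length: no more than {} words
Genre: {}
CEFR level: {}
"""


@dataclass
class PassageRequest:
    """Hypothetical container for the basic passage requirements."""
    topics: List[str]
    max_words: int
    genre: str
    cefr: str

    def validate(self) -> None:
        # Reject requests that the prompt template cannot express sensibly.
        if not self.topics:
            raise ValueError("at least one topic is required")
        if self.max_words <= 0:
            raise ValueError("max_words must be positive")
        if self.cefr not in CEFR_LEVELS:
            raise ValueError(f"unknown CEFR level: {self.cefr!r}")

    def to_prompt(self) -> str:
        self.validate()
        # Assumption: multiple topics are joined into one comma-separated string.
        return PASSAGE_PROMPT.format(
            ", ".join(self.topics), str(self.max_words), self.genre, self.cefr
        )
```

For example, `PassageRequest(["sports", "friendship"], 300, "narrative", "B1").to_prompt()` yields a prompt containing `CEFR level: B1`, while an unknown level such as `"D1"` raises `ValueError` before any API call is made.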

An online demo is available here.

We have also made the system code public, so you can build the system on your own device by following the simple steps below.

We use Vue.js to build the system interface. First, install Vue's prerequisites (Node.js, npm, etc.). The `node_modules` folder is not included in this repository, so the required packages (element-plus, vue-axios, etc.) must be installed before running the front-end service.

Running the front-end service:

```shell
cd ./reading-demo
npm install
npm run serve
```

We implement the backend using Django. The OpenAI API key is not included in this repository; you need to enter your own key in `.\readingback\app\views.py`.
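As an alternative to pasting the key directly into `views.py`, it can be read from an environment variable so it never ends up in version control. This is a minimal sketch; the helper name `load_openai_key` and the variable name `OPENAI_API_KEY` are assumptions, not part of the repository.

```python
import os


def load_openai_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the OpenAI API key stored in an environment variable.

    Hypothetical helper: reading the key from the environment keeps it
    out of the source file and out of version control.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Please set the {env_var} environment variable before starting the backend."
        )
    return key
```

With the legacy openai SDK used in `views.py`, the key could then be set once at module level with `openai.api_key = load_openai_key()`.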

Running the backend service:

```shell
cd ./readingback
python manage.py runserver
```

We use ChatGPT (GPT-3.5) as the LLM in our system to generate both reading passages and exercise questions, and an earlier fine-tuned GPT-2 + PPLM approach as the baseline for comparison.

The code for the fine-tuned GPT-2 + PPLM baseline described in the paper is in the `.\gpt2_baseline\` folder, including:

- `gpt2_finetune.py`: fine-tuning GPT-2 medium with the reading material datasets

- `pplm_tune.py`: tune PPLM to find its optimal hyper-parameters for different topic keyword lists in

- `passage_gen_example.py`: generating example passages
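Hyper-parameter tuning of this kind can be organised as a grid search over `run_pplm.py` runs. The sketch below writes a topic keyword list in PPLM's bag-of-words file format (one word per line) and builds one command line per combination of `--stepsize`, `--kl_scale` and `--gm_scale`, which are options of `run_pplm.py` in the original PPLM repository. The helper names, grid values, and the choice of these three parameters are assumptions for illustration; how `pplm_tune.py` actually searches and scores candidates is not shown here.

```python
import itertools
from pathlib import Path
from typing import Iterable, List


def write_bow_file(keywords: Iterable[str], path: Path) -> Path:
    """Save a topic keyword list as a PPLM bag-of-words file (one word per line)."""
    words = [w.strip() for w in keywords if w.strip()]
    path.write_text("\n".join(words) + "\n", encoding="utf-8")
    return path


def pplm_grid(bow_path: Path, model_path: str, cond_text: str,
              stepsizes: Iterable[float], kl_scales: Iterable[float],
              gm_scales: Iterable[float], length: int = 200) -> List[List[str]]:
    """Build one run_pplm.py command line per hyper-parameter combination.

    Hypothetical helper: model_path may be a model name or a local directory
    holding the fine-tuned GPT-2 (assumption).
    """
    commands = []
    for stepsize, kl_scale, gm_scale in itertools.product(stepsizes, kl_scales, gm_scales):
        commands.append([
            "python", "run_pplm.py",
            "--pretrained_model", model_path,
            "--cond_text", cond_text,
            "--bag_of_words", str(bow_path),
            "--length", str(length),
            "--stepsize", str(stepsize),
            "--kl_scale", str(kl_scale),
            "--gm_scale", str(gm_scale),
            "--sample",
        ])
    return commands
```

Each command could then be executed from the cloned `PPLM` directory with `subprocess.run(cmd, cwd="PPLM", check=True)`, and the resulting samples compared using whatever topic-relevance and fluency criteria you choose.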

Please install PPLM (see the original PPLM repository for more guidance) and the other required packages:

```shell
git clone https://github.com/uber-research/PPLM.git
pip install -r requirements.txt
```

Due to the confidentiality of educational resources, we are not able to offer public access to the dataset. Nonetheless, our fine-tuned GPT-2 model is available through this Google Drive link.

The prompts we used in the system:

system_content = """

You are a helpful assistant to generate reading comprehension materials for Chinese middle school English learners. Your responses should not include any toxic content.

"""

prompt = """

Please generate a writing (without a title) satisfying the following requirements:

Topics: {}

Length: no more than {} words

Genre: {}

CEFR level: {}

""".format(topics, str(essay_len), genres, cefr)

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": system_content},

{"role": "user", "content": prompt}

]

)system_content = """

You are a helpful assistant to generate reading comprehension exercise questions for Chinese middle school English learners. Your responses should not include any toxic content.

"""

prompt = """

Please generate {} multiple choice questions (each question with {} choices), the corresponding answers and explanations for the following reading comprehension exercise. The type of questions should be {}.

Exercise: {}

""".format(q_num, a_num, q_type, essay)

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": system_content},

{"role": "user", "content": prompt}

]

)We conduct extensive evaluations of the generated passages, questions and the usage of our reading exercise generation system, which are visually depicted in the following figure.

Some examples of the generated passages and questions are in the `.\examples\` folder.
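The prompt snippets in this README call `openai.ChatCompletion.create`, which exists only in the legacy openai Python SDK (< 1.0). The sketch below sends the same system and user messages through the openai >= 1.0 client interface (`client.chat.completions.create`) and returns the generated text. The wrapper name `chat` is hypothetical; the client is passed in so the function can be exercised without network access.

```python
from typing import Any


def chat(client: Any, system_content: str, prompt: str,
         model: str = "gpt-3.5-turbo") -> str:
    """Send one system + user message pair and return the reply text.

    Hypothetical wrapper: `client` is expected to be an openai>=1.0
    `OpenAI()` instance, but any object exposing the same
    `chat.completions.create` method works.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system_content},
            {"role": "user", "content": prompt},
        ],
    )
    # The generated passage or question set is the first choice's message content.
    return response.choices[0].message.content
```

With the real SDK this would be called as `chat(OpenAI(), system_content, prompt)` after `from openai import OpenAI`; `OpenAI()` reads the key from the `OPENAI_API_KEY` environment variable by default.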

If you find our work useful, please consider citing:

```bibtex
@inproceedings{xiao-etal-2023-evaluating,
    title = "Evaluating Reading Comprehension Exercises Generated by {LLM}s: A Showcase of {C}hat{GPT} in Education Applications",
    author = "Xiao, Changrong and
      Xu, Sean Xin and
      Zhang, Kunpeng and
      Wang, Yufang and
      Xia, Lei",
    booktitle = "Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2023)",
    month = jul,
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.bea-1.52",
    pages = "610--625",
}
```