This experimental repository studies learning from datasets with a high proportion of noisy labels (50% and above). It contains experiments on the FashionMNIST and CIFAR datasets, using ResNet-34 as the baseline classifier.

The repository explores several training strategies (trainers): ForwardLossCorrection, CoTeaching, JoCoR, and O2UNet. For datasets whose transition matrix is unknown, DualT is used as the Transition Matrix Estimator. Because of computational cost and practical performance, our experiments focus mainly on ForwardLossCorrection and CoTeaching, which we compare over multiple runs with different random seeds.

Initial explorations on FashionMNIST0.5 with JoCoR and O2UNet have shown promising results. This repository serves as a resource for those interested in robust machine learning techniques under challenging conditions of high label noise.

A brief pipeline:

Datasets (3 classes: 0, 1, 2; instead of 10):

- FashionMNIST0.5
- FashionMNIST0.6
- CIFAR

Base Classifier:

- ResNet-34

Basic Robust Method(s):

- Data Augmentation

Robust Trainers:

- Loss correction: ForwardLossCorrection
  - Includes: SymmetricCrossEntropyLoss
- Multi-network learning: CoTeaching
- Multi-network learning: JoCoR
- Multi-round learning: O2UNet

Transition Matrix Estimator:

- Dual-T
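To make the loss-correction step of the pipeline concrete, here is a minimal, framework-free sketch of forward loss correction. It is not the repository's implementation: the function names, the example logits, and the 3-class transition matrix `T_EXAMPLE` are hypothetical, and the sketch assumes `T[i][j]` is the probability that a clean label `i` is observed as noisy label `j`. The idea is to push the classifier's clean-class probabilities through `T` and compute the negative log-likelihood against the noisy label.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward_corrected_nll(logits, noisy_label, T):
    """Negative log-likelihood of the noisy label under forward correction.

    Assumption: T[i][j] = P(noisy label = j | clean label = i),
    so every row of T sums to 1.
    """
    clean_probs = softmax(logits)
    num_classes = len(clean_probs)
    # Map the clean-class posterior to a noisy-class posterior: p_noisy = T^T p_clean.
    noisy_probs = [
        sum(clean_probs[i] * T[i][j] for i in range(num_classes))
        for j in range(num_classes)
    ]
    return -math.log(noisy_probs[noisy_label])


# Hypothetical symmetric transition matrix with 50% label noise (illustration only).
T_EXAMPLE = [
    [0.50, 0.25, 0.25],
    [0.25, 0.50, 0.25],
    [0.25, 0.25, 0.50],
]

if __name__ == "__main__":
    loss = forward_corrected_nll([2.0, 0.5, -1.0], noisy_label=1, T=T_EXAMPLE)
    print(f"forward-corrected loss: {loss:.4f}")
```

With an identity matrix for `T`, the function reduces to ordinary cross-entropy, which is a quick sanity check. When the matrix is unknown, an estimate (for example from Dual-T) would take the place of `T_EXAMPLE`.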

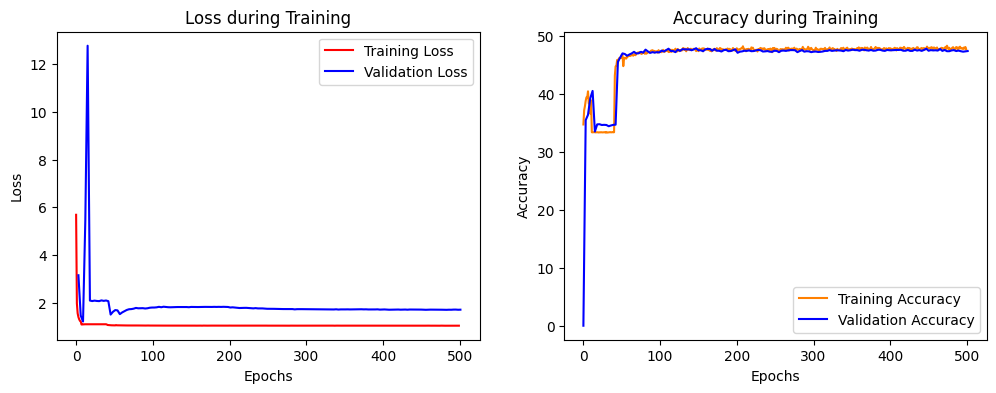

According to the loss trends, our robust trainers may also act as regularizers that help avoid overfitting.

ForwardLossCorrection

CoTeaching
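One plausible reason CoTeaching can behave like a regularizer is its small-loss selection: in each mini-batch, each of the two networks keeps only the samples with the smallest loss and hands them to its peer for the update, so likely-mislabeled samples are dropped. The sketch below illustrates only that selection step. The function names and loss values are hypothetical, and `forget_rate` is assumed to be a fixed fraction here, whereas in practice it is typically ramped up over the first epochs.

```python
def small_loss_indices(losses, keep_ratio):
    """Return indices of the keep_ratio fraction of samples with the smallest loss."""
    num_keep = max(1, int(keep_ratio * len(losses)))
    ranked = sorted(range(len(losses)), key=lambda i: losses[i])
    return ranked[:num_keep]


def co_teaching_exchange(losses_a, losses_b, forget_rate):
    """Choose which samples each network trains on for one mini-batch.

    Network A is updated on the samples network B considers low-loss,
    and network B on the samples network A considers low-loss.
    """
    keep_ratio = 1.0 - forget_rate
    update_a_on = small_loss_indices(losses_b, keep_ratio)
    update_b_on = small_loss_indices(losses_a, keep_ratio)
    return update_a_on, update_b_on


if __name__ == "__main__":
    # Hypothetical per-sample losses from the two networks on a batch of four.
    losses_a = [0.2, 2.5, 0.1, 1.9]
    losses_b = [0.3, 0.2, 2.2, 2.0]
    print(co_teaching_exchange(losses_a, losses_b, forget_rate=0.5))
```

Exchanging the selected samples between the two networks, rather than letting each network train on its own selection, is what distinguishes this scheme from simple self-paced filtering.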

We evaluated ForwardLossCorrection and CoTeaching over 10 distinct random seeds. The table below compares their performance.

| Dataset | Metric | ForwardLossCorrection | CoTeaching |
| --- | --- | --- | --- |
| FashionMNIST0.5 | Accuracy | 77.47% (± 6.33%) | 90.33% (± 3.34%) |
| FashionMNIST0.5 | Precision | 78.87% (± 5.75%) | 90.93% (± 2.49%) |
| FashionMNIST0.5 | Recall | 77.47% (± 6.33%) | 90.33% (± 3.34%) |
| FashionMNIST0.5 | F1 Score | 77.53% (± 6.54%) | 90.29% (± 3.46%) |
| FashionMNIST0.6 | Accuracy | 77.05% (± 6.61%) | 80.25% (± 12.44%) |
| FashionMNIST0.6 | Precision | 80.08% (± 3.64%) | 75.28% (± 20.81%) |
| FashionMNIST0.6 | Recall | 77.05% (± 6.61%) | 80.25% (± 12.44%) |
| FashionMNIST0.6 | F1 Score | 76.27% (± 8.55%) | 76.92% (± 17.83%) |
| CIFAR | Accuracy | 49.81% (± 12.58%) | 47.28% (± 4.09%) |
| CIFAR | Precision | 50.11% (± 12.06%) | 33.41% (± 3.73%) |
| CIFAR | Recall | 49.81% (± 12.58%) | 47.28% (± 4.09%) |
| CIFAR | F1 Score | 49.09% (± 12.27%) | 38.04% (± 3.69%) |
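Each cell in the table above reports a value together with a ± spread over the 10 seeds. As a minimal sketch of how such a cell could be produced, the snippet below assumes the central value is the mean and the spread is the sample standard deviation; the source does not state which spread statistic was used. The function name `summarize` and the example accuracies are hypothetical.

```python
import statistics


def summarize(values):
    """Format per-seed scores (fractions in [0, 1]) as 'mean(± spread)'."""
    mean = statistics.mean(values)
    # Assumption: sample standard deviation (n - 1 in the denominator).
    spread = statistics.stdev(values)
    return f"{mean:.2%}(± {spread:.2%})"


if __name__ == "__main__":
    # Hypothetical accuracies from three seeds, for illustration only.
    per_seed_accuracy = [0.78, 0.84, 0.81]
    print(summarize(per_seed_accuracy))  # 81.00%(± 3.00%)
```

Swapping `statistics.stdev` for `statistics.pstdev` would give the population standard deviation instead, which is slightly smaller for a small number of seeds.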

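Our analysis shows that CoTeaching performs better at the lower noise level: on FashionMNIST0.5 it leads ForwardLossCorrection by roughly 12 to 13 percentage points on every metric. As the noise level rises, ForwardLossCorrection proves more robust. On FashionMNIST0.6, CoTeaching's accuracy is still slightly higher (80.25% vs. 77.05%), but its precision falls below ForwardLossCorrection's (75.28% vs. 80.08%) and its run-to-run spread grows to between ±12% and ±21%. On CIFAR, ForwardLossCorrection is ahead on all four metrics, most clearly on precision and F1 score, although its own spread there is larger (about ±12%).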
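When reading the results table, note that Accuracy and Recall are identical in every row, including the ± values. This is what one would expect if recall is averaged over classes using support weights, because support-weighted recall reduces algebraically to accuracy. The source does not say which averaging was used, so treat this as an assumption. The sketch below, with hypothetical function names and labels, demonstrates the identity on a small 3-class example.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose prediction matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_recall(y_true, y_pred):
    """Per-class recall averaged with weights proportional to class support."""
    support = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    n = len(y_true)
    return sum((support[c] / n) * (correct[c] / support[c]) for c in support)


if __name__ == "__main__":
    # Hypothetical labels for illustration only.
    y_true = [0, 0, 0, 1, 1, 2]
    y_pred = [0, 1, 0, 1, 1, 0]
    print(accuracy(y_true, y_pred), weighted_recall(y_true, y_pred))
```

Under the same assumption, precision is not tied to accuracy, which is consistent with the table's precision column diverging from accuracy, for example for CoTeaching on CIFAR.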

In our preliminary experiments, both JoCoR and O2UNet showed promising results on the FashionMNIST0.5 dataset. Nevertheless, due to the substantial computational demands and the marginal improvements they offered over CoTeaching, we decided not to proceed with extensive experimentation on these methods.

- Estimation on FashionMNIST0.5

- Estimation on FashionMNIST0.6

- Estimation on CIFAR