
🚀 Dive into the world of CAT! Imagine if computers could understand and combine the essence of both pictures and words, just as we humans naturally do. By marrying the strengths of Convolutions (think of them as the magic behind image filters) and Transformers (the genius tech behind language models), our CAT framework acts as a bridge that seamlessly blends the visual and textual realms. So whether you're marveling at a sunset photo or reading a poetic description, CAT seeks to decode, understand, and bring the two together in harmony.

Looking for a swift kick-off? Explore our Jupyter Notebook directly in Google Colab!

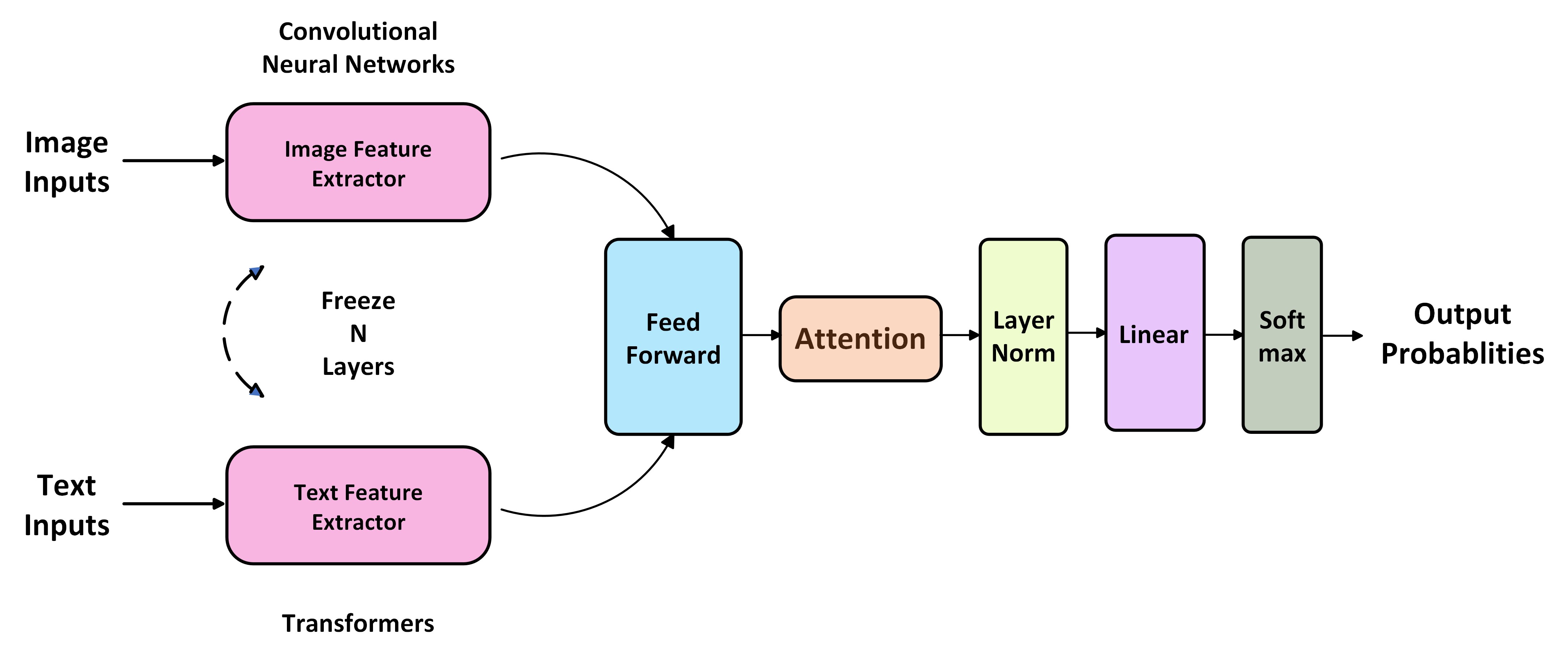

This experimental project proposes a model architecture that uses Convolutional Neural Networks (CNNs) to extract salient features from images and Transformer-based models to capture intricate patterns in text. Named CAT (Convolutions, Attention & Transformers), the framework places attention mechanisms between the two branches, where they act as an intermediate conduit that fuses the visual and textual modalities.
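To make "attention as a fusion conduit" concrete, here is a minimal, dependency-free sketch of scaled dot-product self-attention over a two-token sequence made of one image feature and one text feature. It illustrates the technique under stated assumptions and is not CAT's actual implementation: the feature values are made up, and the learned query/key/value projections that a real attention layer applies are omitted for brevity.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a softmax(q.k / sqrt(d_k))-weighted sum of the values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d_k) for k in keys])
        outputs.append([sum(w * v[i] for w, v in zip(weights, values))
                        for i in range(len(values[0]))])
    return outputs


# Hypothetical 4-d vectors standing in for projected image and text features.
image_feat = [0.5, -1.0, 0.25, 2.0]
text_feat = [1.0, 0.0, -0.5, 0.5]

# Self-attention over the two-token sequence [image, text]: each token
# attends to both modalities, so every output mixes visual and textual features.
tokens = [image_feat, text_feat]
fused = scaled_dot_product_attention(tokens, tokens, tokens)
```

Because the softmax weights sum to 1, each row of `fused` is a weighted average of the image and text vectors. In a real model these would be the 896-dimensional branch outputs, and the computation would typically be delegated to a library layer such as PyTorch's `nn.MultiheadAttention`.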

**Architecture**

*Extractor*

| Modality | Module | Number of Unfrozen Blocks |
|---|---|---|
| Image | DenseNet-121 | 2 |
| Text | TinyBert | 2 |

*Parallelism*

| Property | Modules | Number of Input Dimensions |
|---|---|---|
| Fully-connected | Batch Normalization, ReLU, Dropout, Attention | 896 |

*Classifier*

| Module | Number of Input Dimensions |
|---|---|
| Linear | 896 × 2 |
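The classifier row means the linear layer takes `896*2` inputs: the two 896-dimensional branch outputs concatenated side by side. The dependency-free sketch below walks through that bookkeeping. `NUM_LABELS` is a hypothetical placeholder because this README does not state the label count, and the MB figure assumes float32 parameters.

```python
FEATURE_DIM = 896   # per-branch dimension listed in the architecture table
NUM_LABELS = 10     # hypothetical placeholder: the real label count is not stated here


def concat_features(image_vec, text_vec):
    # The classifier consumes both branches side by side: 896 + 896 = 1792 inputs.
    assert len(image_vec) == FEATURE_DIM and len(text_vec) == FEATURE_DIM
    return list(image_vec) + list(text_vec)


def linear(x, weight, bias):
    # weight is [out_features][in_features]; one raw logit per output row.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def linear_param_count(in_features, out_features):
    # Weight matrix plus one bias term per output.
    return in_features * out_features + out_features


def size_in_mb(num_params, bytes_per_param=4):
    # float32 parameters occupy 4 bytes each; MB here means 1024 ** 2 bytes.
    return num_params * bytes_per_param / 1024 ** 2


fused = concat_features([0.0] * FEATURE_DIM, [1.0] * FEATURE_DIM)
weight = [[0.001] * len(fused) for _ in range(NUM_LABELS)]  # toy weights
bias = [0.0] * NUM_LABELS
logits = linear(fused, weight, bias)
head_params = linear_param_count(len(fused), NUM_LABELS)
```

Here `len(fused)` is 1792 and `head_params` is 17,930, or about 0.07 MB in float32. A head this small is negligible next to the roughly 100 MB totals reported below, so the backbones account for nearly all of the model size.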

**Training procedure**

| Class | Detail | Value |
|---|---|---|
| Strategy | Batch size | 16 |
| | Number of epochs | 50 |
| Optimization | Loss function | Binary cross-entropy with logits |
| | Optimizer | AdamW |
| | Learning rate | 1e-5 |
| | Bias correction | False |
| Auxiliary | Learning rate scheduler | Linear |
| | Number of warmup steps | 0 |
| | Number of training steps | Total number of batches |
| Prediction | Output threshold | 0.39 |
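The recipe above combines a linear learning-rate scheduler (0 warmup steps) with binary cross-entropy on logits and a 0.39 output threshold. Below is a minimal sketch of both pieces. It assumes that "Total number of batches" means batches per epoch times the number of epochs, and it uses a hypothetical dataset size. The schedule formula mirrors the one used by Hugging Face's `get_linear_schedule_with_warmup`.

```python
import math

BASE_LR = 1e-5
BATCH_SIZE = 16
NUM_EPOCHS = 50
NUM_WARMUP_STEPS = 0
THRESHOLD = 0.39

NUM_SAMPLES = 30_000  # hypothetical training-set size, for illustration only
batches_per_epoch = math.ceil(NUM_SAMPLES / BATCH_SIZE)
# Assumption: "Total number of batches" = batches per epoch x epochs.
num_training_steps = batches_per_epoch * NUM_EPOCHS


def linear_lr_factor(step, num_warmup_steps, num_training_steps):
    # Linear ramp-up during warmup, then linear decay to 0 at the final step.
    if step < num_warmup_steps:
        return step / max(1, num_warmup_steps)
    return max(0.0, (num_training_steps - step) / max(1, num_training_steps - num_warmup_steps))


def lr_at(step):
    return BASE_LR * linear_lr_factor(step, NUM_WARMUP_STEPS, num_training_steps)


def predict(logits, threshold=THRESHOLD):
    # The model emits raw logits (it is trained with BCE on logits), so apply a
    # sigmoid to get an independent probability per label, then binarize it.
    return [1 if 1 / (1 + math.exp(-z)) >= threshold else 0 for z in logits]
```

With zero warmup, the learning rate starts at 1e-5 and falls linearly to 0 at the final step. Thresholding the sigmoid at 0.39 instead of 0.5 predicts a label whenever its logit exceeds ln(0.39 / 0.61) ≈ -0.447, which trades some precision for recall.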

💡 How should multimodal data be processed? The experiments below explore that question by comparing unimodal and multimodal models on two criteria:

- F1 score

- Model size
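Each table below reports an F1 score per model at a per-model threshold. The sketch below assumes a multi-label setting (suggested by the BCE-with-logits loss) and micro-averaged F1; the competition's exact averaging may differ. `best_threshold` is a hypothetical helper showing one common way to pick a threshold on validation data. It is not necessarily how the thresholds in these tables were chosen.

```python
def micro_f1(y_true, y_pred):
    """Micro-averaged F1: pool TP/FP/FN over every (sample, label) cell."""
    tp = fp = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t == 1 and p == 1:
                tp += 1
            elif t == 0 and p == 1:
                fp += 1
            elif t == 1 and p == 0:
                fn += 1
    if tp == 0:
        return 0.0
    # Harmonic mean of precision and recall, i.e. 2TP / (2TP + FP + FN).
    return 2 * tp / (2 * tp + fp + fn)


def binarize(probs, threshold):
    return [[1 if p >= threshold else 0 for p in row] for row in probs]


def best_threshold(y_true, probs, candidates):
    """Hypothetical helper: return the (threshold, F1) pair with the highest F1."""
    scored = [(th, micro_f1(y_true, binarize(probs, th))) for th in candidates]
    return max(scored, key=lambda pair: pair[1])


# Toy validation data: 2 samples, 3 labels.
y_true = [[1, 0, 1], [0, 1, 0]]
probs = [[0.9, 0.2, 0.35], [0.1, 0.6, 0.3]]
threshold, score = best_threshold(y_true, probs, [0.3, 0.33, 0.5])
```

On this toy data, 0.5 misses one positive (F1 = 0.8) and 0.3 adds one false positive (F1 ≈ 0.857). Only 0.33 reproduces the labels exactly, so it scores 1.0.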

**Image classifiers**

| Model | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|
| ResNet-18 | 42.74 | 0.5 | 20 | 0.6712 | 0.6612 | 199.75 |
| ResNet-34 | 81.36 | 0.5 | 20 | 0.6406 | 0.6304 | 157.99 |
| DenseNet-201 | 70.45 | 0.5 | 20 | 0.6728 | 0.6594 | 179.58 |
| ResNet-50 | 90.12 | 0.5 | 20 | 0.7090 | 0.7063 | 175.89 |
| ResNet-50 | 90.12 | 0.5 | 50 | 0.7283 | 0.7260 | 163.43 |

**Text classifiers**

| Model | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|
| Tiny Bert | 54.79 | 0.5 | 50 | 0.5955 | 0.5975 | 53.76 |
| Bert Tiny | 16.76 | 0.635 | 50 | 0.5960 | 0.5989 | 17.30 |

**Unimodal models (optimal)**

| Model | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|
| ResNet-50 | 90.12 | 0.5 | 50 | 0.7283 | 0.7260 | 163.43 |
| Bert Tiny | 16.76 | 0.635 | 50 | 0.5960 | 0.5989 | 17.30 |

**Multimodal models (baseline)**

| Model | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|
| DensityBert (DenseNet-121 + TinyBert) | 97.71 | 0.35 | 50 | 0.8173 | 0.8173 | 191.48 |
| Bensity (DenseNet-201 + BertTiny) | 100.83 | 0.33 | 50 | 0.7980 | 0.7980 | 190.01 |
| ResT (ResNet-34 + BertTiny) | 100.92 | 0.38 | 50 | 0.7836 | 0.7766 | 170.06 |

**Self-attention mechanism**

| Model | Query | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|---|
| DensityBert | N/A | 97.71 | 0.35 | 50 | 0.8173 | 0.8173 | 191.48 |
| Bensity | N/A | 100.83 | 0.33 | 50 | 0.7980 | 0.7980 | 190.01 |

**Cross-attention mechanism**

| Model | Query | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|---|
| CDBert | Text | 93.81 | 0.29 | 50 | 0.7599 | 0.7564 | 181.51 |
| IMCDBert | Image | 91.02 | 0.46 | 50 | 0.8026 | 0.7985 | 147.75 |
| Censity | Text | 90.89 | 0.33 | 50 | 0.7905 | 0.7901 | 183.50 |
| IMCensity | Image | 81.14 | 0.38 | 50 | 0.7869 | 0.7801 | 174.49 |
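The Query column distinguishes the two cross-attention variants. With Query = Text, text tokens attend over image features; with Query = Image, the roles swap. The minimal, dependency-free sketch below uses made-up 3-dimensional tokens and omits learned projections. It shows that the output contains one vector per query token and is built from the other modality's features. This illustrates the general technique, not the exact layers used in CDBert or IMCDBert.

```python
import math


def cross_attention(query_tokens, context_tokens):
    """Scaled dot-product attention: queries from one modality,
    keys/values from the other (no learned projections, for brevity)."""
    d = len(query_tokens[0])
    outputs = []
    for q in query_tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in context_tokens]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        # Each output is a weighted average of the *context* modality's tokens.
        outputs.append([sum(w * v[i] for w, v in zip(weights, context_tokens))
                        for i in range(d)])
    return outputs


# Hypothetical token features, same width for both modalities.
text_tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]                     # e.g. 2 word embeddings
image_tokens = [[1.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, 0.5, 0.5]]  # e.g. 3 region features

text_query = cross_attention(text_tokens, image_tokens)    # "Query: Text"
image_query = cross_attention(image_tokens, text_tokens)   # "Query: Image"
```

`text_query` has two rows (one per text token) drawn from image features, and `image_query` has three rows drawn from text features. The choice of query therefore determines which modality's sequence length and viewpoint the fused representation inherits.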

**Variations**

| Model | Size (MB) | Threshold | Epochs | Training F1 | Validation F1 | Efficiency (s/epoch) |
|---|---|---|---|---|---|---|
| DensityBert | 97.71 | 0.35 | 50 | 0.8173 | 0.8173 | 191.48 |
| MoDensityBert | 97.72 | 0.38 | 50 | 0.8622 | 0.8179 | 178.95 |
| WarmDBert | 97.72 | 0.38 | 50 | 0.8505 | 0.8310 | 204.09 |
| WarmerDBert | 97.72 | 0.39 | 50 | 0.8567 | 0.8345 | 258.34 |
| WWDBert | 99.77 | 0.40 | 100 | 0.8700 | 0.8464 | 269.93 |

- Competition link: Here

- My ranking

├── `cat`

│ ├── `attentions.py`

│ ├── `datasets.py`

│ ├── `evaluator.py`

│ ├── `multimodal.py`

│ ├── `predict.py`

│ ├── `trainer.py`

│ └── `__init__.py`

├── `data`

│ ├── `data/`

│ │ └── `*.jpg`

│ ├── `train.csv`

│ └── `test.csv`

├── `model_hub`

│ └── `*.pth`

├── `outcomes/`

│ └── `*.jpg/*.png/*.jpeg`

├── `LICENSE`

├── `notebook.ipynb`

├── `README.md`

├── `README.zh-CN.md`

└── `requirements.txt`