This repository contains the implementation of the text-to-image generation system I developed as the final project for the DL4NLP (Deep Learning for Natural Language Processing) course of the HAP/LAP master's programme.

| Cyberpunk Samurai | Dark Matter | Flying penguin | Las Meninas |
| :---: | :---: | :---: | :---: |

The table above shows several inferences; you can find more of them in the `inferences`, `poster` and `poster-session` folders. The latter contains the inferences made by the people who attended the poster session in situ.

|

| System Architecture |

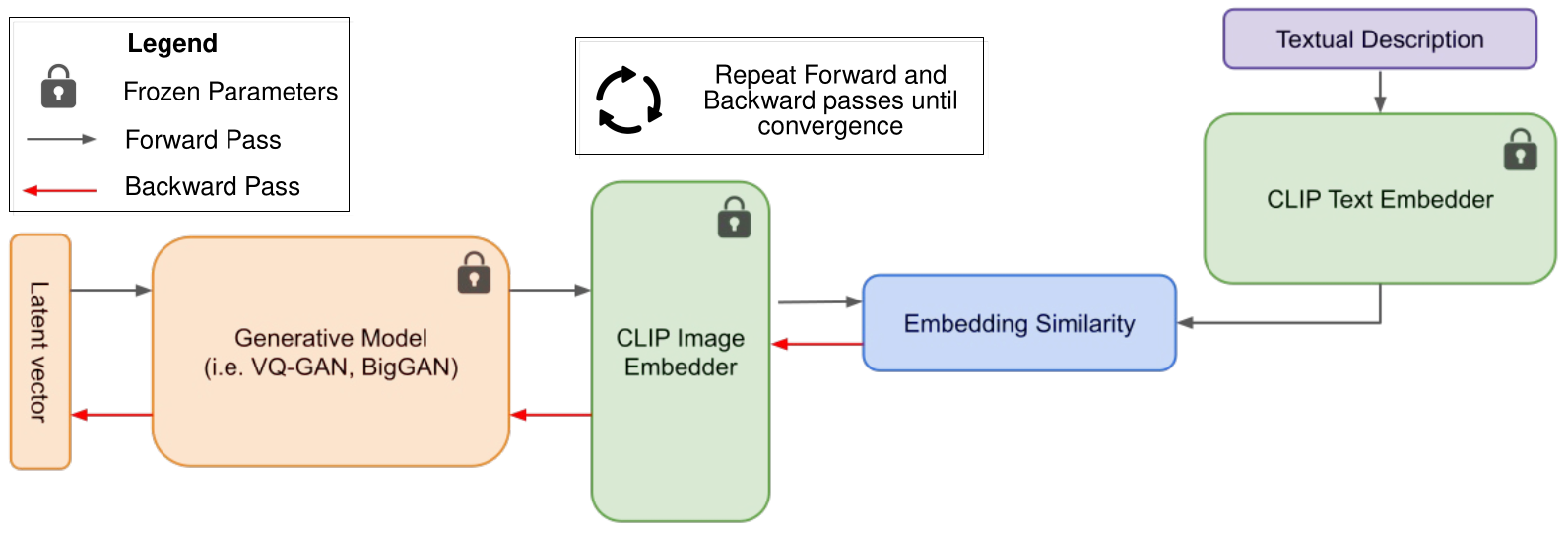

The implemented system is composed of two main modules:

- Generative Module: A generative network is used to generate an image from a latent vector.

- Language-Image Matching Module: CLIP is used as the model to steer the image generation towards the provided textual description.

Both modules are kept frozen: the only parameters that are updated are those of the latent vector, which is optimized to maximize the cosine similarity between the CLIP image embedding of the generated image and the CLIP text embedding of the provided textual description. This procedure is repeated for a predefined number of iterations, or until convergence.
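In our own notation, the loop performs gradient ascent on $\cos\big(E_I(G(z)), E_T(y)\big)$ with respect to the latent vector $z$ only, where $G$ is the generator, $E_I$ and $E_T$ are the CLIP image and text encoders, and $y$ is the prompt. The sketch below is a toy illustration, not this project's code: the generator and the image encoder are replaced by hypothetical fixed random matrices so that the gradient can be written by hand with NumPy, while a real implementation would typically let PyTorch autograd propagate the gradient through the frozen networks.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two 1-D vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def optimize_latent(generator, image_encoder, text_emb, z0,
                    lr=0.05, max_steps=3000, tol=1e-8):
    """Gradient ascent on the latent vector only.

    Toy stand-ins (hypothetical, not the real models):
      generator     -- (img_dim, latent_dim) matrix playing the generative network
      image_encoder -- (emb_dim, img_dim) matrix playing CLIP's image encoder
      text_emb      -- (emb_dim,) fixed text embedding of the prompt
    Both matrices are frozen: they are read, never updated.
    """
    t = text_emb / np.linalg.norm(text_emb)
    z = np.array(z0, dtype=float)            # copy: z is the only trainable parameter
    prev = None
    for _ in range(max_steps):               # predefined number of iterations...
        v = image_encoder @ (generator @ z)  # "image embedding" of G(z)
        norm_v = np.linalg.norm(v)
        sim = float(v @ t) / norm_v
        if prev is not None and abs(sim - prev) < tol:
            break                            # ...or until convergence
        prev = sim
        # Gradient of cos(v, t) with respect to v (t has unit norm).
        grad_v = t / norm_v - sim * v / norm_v**2
        # Chain rule back through the frozen linear maps to z.
        grad_z = generator.T @ (image_encoder.T @ grad_v)
        z += lr * grad_z
    return z, cosine_similarity(image_encoder @ (generator @ z), text_emb)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    G = rng.normal(size=(16, 8)) / np.sqrt(8)   # hypothetical frozen "generator"
    E = rng.normal(size=(4, 16)) / np.sqrt(16)  # hypothetical frozen "image encoder"
    text = rng.normal(size=4)                   # hypothetical text embedding
    z0 = rng.normal(size=8)
    before = cosine_similarity(E @ (G @ z0), text)
    _, after = optimize_latent(G, E, text, z0)
    print(f"cosine similarity: {before:.3f} -> {after:.3f}")
```

`max_steps` and `tol` mirror the two stopping criteria described above; their values here are arbitrary.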

The Streamlit web application was built as a demo for the poster presentation session (you can check my poster here). At that time, the web app was running on my personal computer at home (for this reason, do not expect the QR code in the poster to work anymore). In order to handle concurrent text-to-image generation requests, an SQLite3-based job queue was implemented from scratch. This way, two processes run at the same time: the producer (the Streamlit web app) and the consumer (`job_executor.py`).
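A minimal sketch of such a queue, using only Python's standard `sqlite3` module, is shown below. The table layout, the status values and the function names (`enqueue`, `claim_next`, `finish`) are hypothetical and not taken from `job_executor.py`. The key idea is that the consumer claims a job inside a `BEGIN IMMEDIATE` transaction, so reading the next pending job and marking it as running happen under a single write lock.

```python
import sqlite3
import time

SCHEMA = """
CREATE TABLE IF NOT EXISTS jobs (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    prompt     TEXT NOT NULL,
    status     TEXT NOT NULL DEFAULT 'pending',  -- pending -> running -> done
    result     TEXT,
    created_at REAL NOT NULL
)
"""


def connect(path):
    # isolation_level=None: autocommit mode, transactions are opened explicitly.
    conn = sqlite3.connect(path, isolation_level=None, timeout=30)
    conn.execute(SCHEMA)
    return conn


def enqueue(conn, prompt):
    """Producer side (e.g. the web app): add a pending job, return its id."""
    cur = conn.execute(
        "INSERT INTO jobs (prompt, created_at) VALUES (?, ?)", (prompt, time.time())
    )
    return cur.lastrowid


def claim_next(conn):
    """Consumer side: atomically mark the oldest pending job as running.

    Returns (id, prompt), or None if the queue is empty.
    """
    conn.execute("BEGIN IMMEDIATE")  # acquire the write lock before reading
    try:
        row = conn.execute(
            "SELECT id, prompt FROM jobs WHERE status = 'pending' ORDER BY id LIMIT 1"
        ).fetchone()
        if row is not None:
            conn.execute("UPDATE jobs SET status = 'running' WHERE id = ?", (row[0],))
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return row


def finish(conn, job_id, result):
    """Consumer side: store the result and mark the job as done."""
    conn.execute(
        "UPDATE jobs SET status = 'done', result = ? WHERE id = ?", (result, job_id)
    )


if __name__ == "__main__":
    conn = connect(":memory:")
    for prompt in ["Cyberpunk Samurai", "Flying penguin"]:
        enqueue(conn, prompt)
    # Hypothetical consumer loop; a real executor would generate the image here.
    while (job := claim_next(conn)) is not None:
        job_id, prompt = job
        finish(conn, job_id, f"image for {prompt!r}")
    print(conn.execute("SELECT id, status, result FROM jobs").fetchall())
```

In a real deployment the producer and the consumer would open the same database file from separate processes rather than sharing an in-memory connection.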

A major advantage of this design is that it scales both vertically and horizontally, simply by launching multiple `job_executor.py` instances.
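To illustrate the scaling claim, the sketch below starts several consumers against the same database file and checks that every job is processed exactly once. Threads, each with its own connection, stand in for separate `job_executor.py` processes (SQLite's locking applies to separate connections whether they live in one process or in many); the schema and the helper names are hypothetical.

```python
import os
import sqlite3
import tempfile
import threading


def _claim(conn, worker):
    """Atomically claim one pending job for `worker`; return its id or None."""
    conn.execute("BEGIN IMMEDIATE")  # only one connection at a time gets past here
    try:
        row = conn.execute(
            "SELECT id FROM jobs WHERE status = 'pending' ORDER BY id LIMIT 1"
        ).fetchone()
        if row is not None:
            conn.execute(
                "UPDATE jobs SET status = 'running', worker = ? WHERE id = ?",
                (worker, row[0]),
            )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    return None if row is None else row[0]


def executor(path, name, processed):
    """Hypothetical stand-in for one executor instance: drain the queue, then exit."""
    conn = sqlite3.connect(path, isolation_level=None, timeout=30)
    processed[name] = 0
    while (job_id := _claim(conn, name)) is not None:
        # The expensive image generation would run here, outside the lock.
        conn.execute("UPDATE jobs SET status = 'done' WHERE id = ?", (job_id,))
        processed[name] += 1
    conn.close()


def run_executors(n_executors=3, n_jobs=20):
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "queue.db")
        conn = sqlite3.connect(path, isolation_level=None)
        conn.execute(
            "CREATE TABLE jobs (id INTEGER PRIMARY KEY, "
            "status TEXT NOT NULL DEFAULT 'pending', worker TEXT)"
        )
        for _ in range(n_jobs):
            conn.execute("INSERT INTO jobs DEFAULT VALUES")
        processed = {}
        threads = [
            threading.Thread(target=executor, args=(path, f"executor-{i}", processed))
            for i in range(n_executors)
        ]
        for th in threads:
            th.start()
        for th in threads:
            th.join()
        statuses = dict(
            conn.execute("SELECT status, COUNT(*) FROM jobs GROUP BY status").fetchall()
        )
        conn.close()
    return processed, statuses


if __name__ == "__main__":
    processed, statuses = run_executors()
    print(processed, statuses)
```

Because the lock is held only while a job is being claimed, adding consumers increases throughput as long as the generation itself, not the queue, is the bottleneck.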

| Text-to-Image Generation Page View | Previous Inferences Page View | Vote Images Page View |
| :---: | :---: | :---: |

First, you will need to install PyTorch with GPU support. Follow the installation instructions here, and then run the commands below:

```bash
git clone --recurse-submodules https://github.com/Xabilahu/Text-to-Image-Generation.git
cd Text-to-Image-Generation
pip3 install -r requirements.txt
```

WARNING: if you plan to run this app, take into account that it has been implemented so that every tensor computation is done on the GPU. With the default settings, GPU memory usage is around 5.9 GiB, but you can tweak it by changing the number of data augmentations performed (32 by default) or the resolution of the generated image (300x300 by default).
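A plausible reason the number of augmentations drives memory usage is that every augmented view of the image has to go through CLIP's image encoder, typically as one batch. The sketch below assumes random square crops as the augmentation (a common choice in CLIP-guided generation, not necessarily what this repository does), a hypothetical 224x224 crop size and a toy `encode` function in place of CLIP. It averages the cosine similarity over the augmented batch and reports the size of that batch, which halves when `n_crops` goes from 32 to 16.

```python
import numpy as np


def random_crops(image, n_crops, crop_size, rng):
    """Cut n_crops random square patches out of an (H, W, C) image."""
    h, w, _ = image.shape
    if crop_size > min(h, w):
        raise ValueError("crop_size must not exceed the image size")
    crops = []
    for _ in range(n_crops):
        top = rng.integers(0, h - crop_size + 1)
        left = rng.integers(0, w - crop_size + 1)
        crops.append(image[top:top + crop_size, left:left + crop_size])
    return np.stack(crops)  # (n_crops, crop_size, crop_size, C)


def augmented_similarity(image, encode, text_emb, n_crops=32, crop_size=224, seed=0):
    """Mean cosine similarity over a batch of augmented views.

    `encode` is a hypothetical stand-in for CLIP's image encoder: it maps a
    (n_crops, crop_size, crop_size, C) batch to (n_crops, emb_dim) embeddings.
    Also returns the size in bytes of the input batch, which grows linearly
    with n_crops and quadratically with crop_size.
    """
    rng = np.random.default_rng(seed)
    batch = random_crops(image, n_crops, crop_size, rng)
    img_embs = encode(batch)
    img_embs = img_embs / np.linalg.norm(img_embs, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb)
    return float((img_embs @ t).mean()), batch.nbytes


def mean_colour(batch):
    """Hypothetical toy encoder: the mean colour of each crop."""
    return batch.mean(axis=(1, 2))


if __name__ == "__main__":
    image = np.random.default_rng(1).random((300, 300, 3), dtype=np.float32)
    for n in (32, 16):
        sim, nbytes = augmented_similarity(image, mean_colour, np.ones(3), n_crops=n)
        print(n, round(sim, 3), f"{nbytes / 2**20:.1f} MiB")
```

This only measures the input batch; the encoder activations kept for backpropagation are usually much larger, but they also grow roughly linearly with the batch size.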

NOTE: If you have installed the requirements inside a virtual environment, you need to activate it inside `set_up_env.sh` and `run_executor.sh`.

In order to run the system manually, you need to launch both the Streamlit web app and a `job_executor.py` instance:

```bash
streamlit run webapp.py &
chmod u+x run_executor.sh
./run_executor.sh &
```

Alternatively, you can launch everything at once with the provided script:

```bash
chmod u+x set_up_env.sh run_executor.sh
./set_up_env.sh
```

NOTE 2: the `set_up_env.sh` script creates a TMUX session and launches both the Streamlit web app and the `job_executor.py` instance. It also creates a pane to monitor NVIDIA GPU usage by running `watch -n 0.1 nvidia-smi`.

- Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., ... & Sutskever, I. (2021, July). Learning transferable visual models from natural language supervision. In International Conference on Machine Learning (pp. 8748-8763). PMLR.

- Esser, P., Rombach, R., & Ommer, B. (2021). Taming transformers for high-resolution image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 12873-12883).

- Brock, A., Donahue, J., & Simonyan, K. (2018). Large scale GAN training for high fidelity natural image synthesis. arXiv preprint arXiv:1809.11096.