The official implementation of the paper SeTAR: Out-of-Distribution Detection with Selective Low-Rank Approximation.

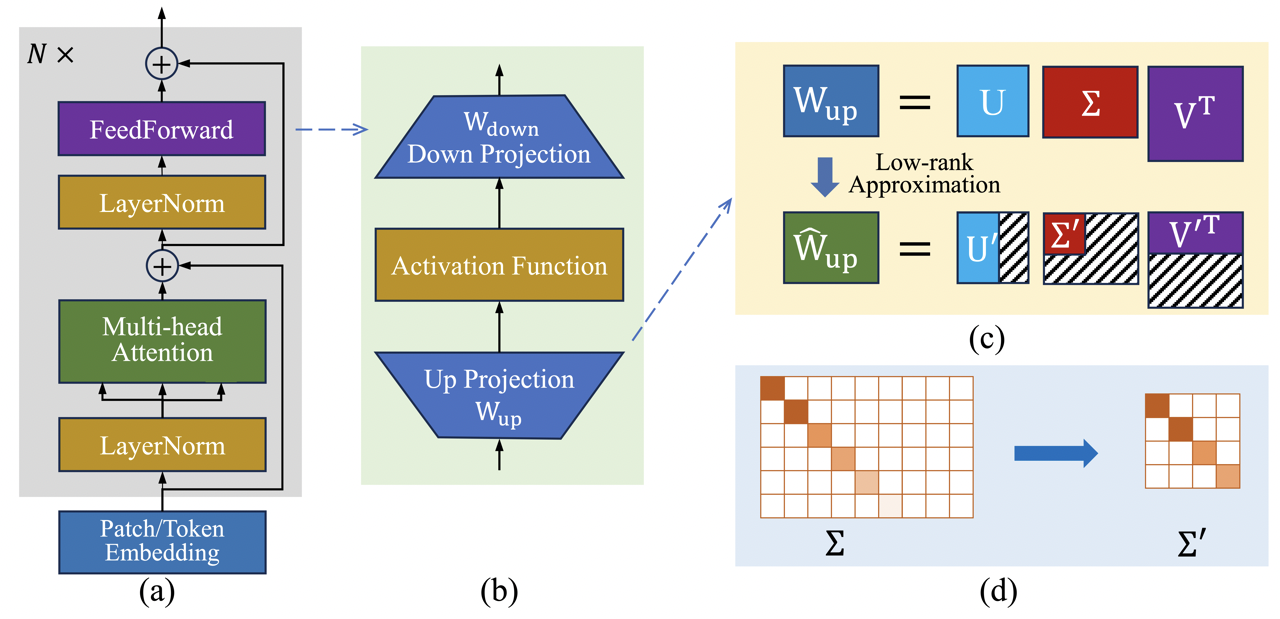

Out-of-distribution (OOD) detection is crucial for the safe deployment of neural networks. Existing CLIP-based approaches perform OOD detection by devising novel scoring functions or sophisticated fine-tuning methods. In this work, we propose SeTAR, a novel, training-free OOD detection method that leverages selective low-rank approximation of weight matrices in vision-language and vision-only models. SeTAR enhances OOD detection via post-hoc modification of the model's weight matrices using a simple greedy search algorithm. Based on SeTAR, we further propose SeTAR+FT, a fine-tuning extension that optimizes model performance for OOD detection tasks. Extensive evaluations on the ImageNet1K and Pascal-VOC benchmarks show SeTAR's superior performance, reducing the false positive rate by up to 18.95% and 36.80% compared to zero-shot and fine-tuning baselines, respectively. Ablation studies further validate our approach's effectiveness, robustness, and generalizability across different model backbones. Our work offers a scalable, efficient solution for OOD detection, setting a new state of the art in this area.
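The general idea of selective low-rank approximation with a greedy search can be illustrated in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the repository's implementation (which is run through `search.py`): `low_rank_approx` keeps the top singular components of a weight matrix, and `greedy_select` visits each matrix once and keeps whichever candidate keep-ratio most lowers a caller-supplied `objective`. Both function names, the candidate ratios, and the objective are hypothetical placeholders.

```python
import math
from typing import Callable, Dict, List

import numpy as np


def low_rank_approx(W: np.ndarray, keep_ratio: float) -> np.ndarray:
    """Best rank-k approximation of W in the Frobenius norm (truncated SVD).

    k = ceil(keep_ratio * min(W.shape)), so keep_ratio=1.0 reconstructs W.
    """
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    k = max(1, math.ceil(keep_ratio * len(S)))
    # Scale the first k left singular vectors by their singular values, then project back.
    return (U[:, :k] * S[:k]) @ Vt[:k, :]


def greedy_select(
    weights: Dict[str, np.ndarray],
    candidates: List[float],
    objective: Callable[[Dict[str, np.ndarray]], float],
) -> Dict[str, float]:
    """Hypothetical greedy search: visit each matrix once, in the given order,
    and keep the candidate keep-ratio that most lowers the objective.
    A ratio of 1.0 means the matrix is left unchanged."""
    current = dict(weights)
    best = objective(current)
    chosen: Dict[str, float] = {}
    for name, W in weights.items():
        chosen[name] = 1.0
        for ratio in candidates:
            trial = dict(current)
            trial[name] = low_rank_approx(W, ratio)
            score = objective(trial)
            if score < best:
                best, chosen[name] = score, ratio
        # Commit the best choice for this matrix before moving on to the next one.
        if chosen[name] < 1.0:
            current[name] = low_rank_approx(W, chosen[name])
    return chosen
```

As a toy usage example, passing the total nuclear norm (`sum(np.linalg.norm(w, "nuc") for w in ws.values())`) as the objective makes the search always prefer the smallest candidate ratio; it is only a stand-in for a real validation objective. Visiting matrices one at a time keeps the cost linear in the number of matrices times the number of candidates, at the price of not exploring joint choices.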

We run the experiments on a single NVIDIA RTX 4090 GPU with 24 GB of memory. The code is developed and tested on Ubuntu 20.04.6 with Python 3.10 and CUDA 11.8.

To install the required packages, please follow the instructions below.

```bash
conda create -n svd_ood python=3.10 -y
conda activate svd_ood

# Install dependencies
pip install torch==2.0.1 torchvision transformers==4.37.2 datasets scipy scikit-learn matplotlib seaborn pandas tqdm ftfy timm tensorboard

# Install SVD_OOD
cd src/svd_ood
pip install -e .

# Install peft-0.10.1.dev0 from source (this also installs accelerate)
cd ../peft
pip install -e .
```

For `peft`, we implement `modified_lora`, which applies LoRA with a specified rank at each layer. Refer to `test.py` for a simple example.
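To make the notion of a per-layer rank concrete, here is a NumPy sketch of standard LoRA factors whose rank varies by layer. It does not use or reproduce the repository's `modified_lora` code (see `test.py` for that); `build_lora`, `lora_forward`, the layer names, and the `alpha` default are illustrative assumptions.

```python
from typing import Dict, Tuple

import numpy as np


def build_lora(
    shapes: Dict[str, Tuple[int, int]],  # layer name -> (out_features, in_features)
    ranks: Dict[str, int],               # layer name -> LoRA rank for that layer
    seed: int = 0,
) -> Dict[str, Tuple[np.ndarray, np.ndarray]]:
    """Create (A, B) factors per layer; B starts at zero so the update is initially zero."""
    rng = np.random.default_rng(seed)
    factors = {}
    for name, (d_out, d_in) in shapes.items():
        r = ranks[name]
        A = rng.standard_normal((r, d_in)) / np.sqrt(d_in)  # shape (r, d_in)
        B = np.zeros((d_out, r))                              # shape (d_out, r)
        factors[name] = (A, B)
    return factors


def lora_forward(x: np.ndarray, W: np.ndarray, A: np.ndarray, B: np.ndarray,
                 alpha: float = 16.0) -> np.ndarray:
    """y = x W^T + (alpha / r) * x A^T B^T for a batch x of shape (n, d_in)."""
    r = A.shape[0]
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T
```

Because `B` is zero-initialized, `lora_forward` initially returns exactly `x @ W.T`; the layers differ only in the rank of their trainable update.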

Please follow these instructions to download and preprocess the datasets.

We provide all experiment settings in the `configs` folder; refer to `configs/README.md` for more details. Before running the experiments, change `data_root` in the config file to the path of your datasets.

- To run the training-free SeTAR on ImageNet1K as the in-distribution (ID) dataset with CLIP-base, run the following command. We provide the output in the `results` folder.

  ```bash
  python search.py --config_file "configs/training_free/clip_base/ID_ImageNet/SeTAR.json"
  ```

- To run the fine-tuning SeTAR+FT on ImageNet1K as the in-distribution (ID) dataset with CLIP-base, run the following command. We provide the output for seed 5 in the `results` folder. You may change the seed to reproduce the average results.

  ```bash
  python finetune.py --config_file "configs/finetune/clip_base/ID_ImageNet1K/SeTAR+FT.json"
  ```
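To average over several seeds, one option is to generate a per-seed copy of the fine-tuning config and launch `finetune.py` on each. This is a sketch under two assumptions: the seed is stored under a top-level `"seed"` key in the JSON config (this README does not say where it lives), and `finetune.py` is invoked only with `--config_file` as shown above. `make_seed_configs`, `run_all`, the seed values, and the output directory are hypothetical.

```python
import json
import subprocess
import sys
from pathlib import Path
from typing import List


def make_seed_configs(base_config: str, seeds: List[int], out_dir: str) -> List[Path]:
    """Write one copy of base_config per seed, with an assumed top-level "seed" key set."""
    base = json.loads(Path(base_config).read_text())
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for seed in seeds:
        cfg = dict(base, seed=seed)  # copy of the base config with the seed overridden
        path = out / f"{Path(base_config).stem}_seed{seed}.json"
        path.write_text(json.dumps(cfg, indent=4))
        paths.append(path)
    return paths


def run_all(config_paths: List[Path], dry_run: bool = True) -> List[List[str]]:
    """Build one finetune.py command per config; execute them only if dry_run is False."""
    commands = [[sys.executable, "finetune.py", "--config_file", str(p)] for p in config_paths]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop at the first failing run
    return commands
```

Keeping `dry_run=True` by default lets you inspect the generated commands before committing GPU time to them.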

The code is based on the following repositories; we greatly appreciate the authors' contributions.

- CLIP (commit a1d0717): a neural network model by OpenAI for image-text tasks, trained jointly on text and image data.

- MCM (commit 640657e): proposes Maximum Concept Matching (MCM), a zero-shot OOD detection method based on aligning visual features with textual concepts.

- GL-MCM (commit dfcbdda): proposes Global-Local Maximum Concept Matching (GL-MCM), based on both global and local visual-text alignments of CLIP features.

- LoCoOp (commit d4c7ca8): proposes Local regularized Context Optimization (LoCoOp), which performs OOD regularization by treating portions of CLIP local features as OOD features during training.
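For reference, the MCM score and its global-local extension can be written in a few lines. This is a NumPy sketch of the scoring rules as described in those works, not code taken from their repositories: features are assumed to be precomputed CLIP embeddings, `tau` is the softmax temperature, and the function names are illustrative. Higher scores indicate that an input is more likely to be in-distribution.

```python
import numpy as np


def _normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize along the last axis so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def _softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def mcm_score(image_feat: np.ndarray, text_feats: np.ndarray, tau: float = 1.0) -> float:
    """MCM: maximum over ID classes of softmax(cosine similarity / tau).

    image_feat: (d,) global image embedding; text_feats: (K, d), one row per ID class prompt.
    """
    sims = _normalize(text_feats) @ _normalize(image_feat)  # (K,)
    return float(_softmax(sims / tau).max())


def gl_mcm_score(global_feat: np.ndarray, local_feats: np.ndarray,
                 text_feats: np.ndarray, tau: float = 1.0) -> float:
    """GL-MCM: global MCM score plus the largest MCM-style score over local (patch) features.

    local_feats: (P, d), one embedding per image patch.
    """
    local_sims = _normalize(local_feats) @ _normalize(text_feats).T  # (P, K)
    local_score = _softmax(local_sims / tau).max()  # max over patches and classes
    return mcm_score(global_feat, text_feats, tau) + float(local_score)
```

An image embedding equally similar to all K class prompts gets the minimum MCM score of 1/K, while one that matches a single prompt closely approaches 1 as `tau` shrinks.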

This project is licensed under the MIT License.

If you find this code useful, please consider citing our paper:

```bibtex
@misc{li2024setar,
  title={SeTAR: Out-of-Distribution Detection with Selective Low-Rank Approximation},
  author={Yixia Li and Boya Xiong and Guanhua Chen and Yun Chen},
  year={2024},
  eprint={2406.12629},
  archivePrefix={arXiv}
}
```