Stephane Vujasinovic, Sebastian Bullinger, Stefan Becker, Norbert Scherer-Negenborn, Michael Arens, Rainer Stiefelhagen

ICIP 2022

📰 New Project (18/09/2023):

READMem: Robust Embedding Association for a Diverse Memory in Unconstrained Video Object Segmentation

TL;DR: We manage the memory of STM-like sVOS methods to better handle long videos. To attain long-term performance, we estimate the inter-frame diversity of the base memory and integrate the embeddings of an incoming frame into the memory only if they enhance that diversity. This lets us limit the number of memory slots and handle unconstrained video sequences without hindering performance on short sequences, and it removes the need for a sampling interval.

Abstract

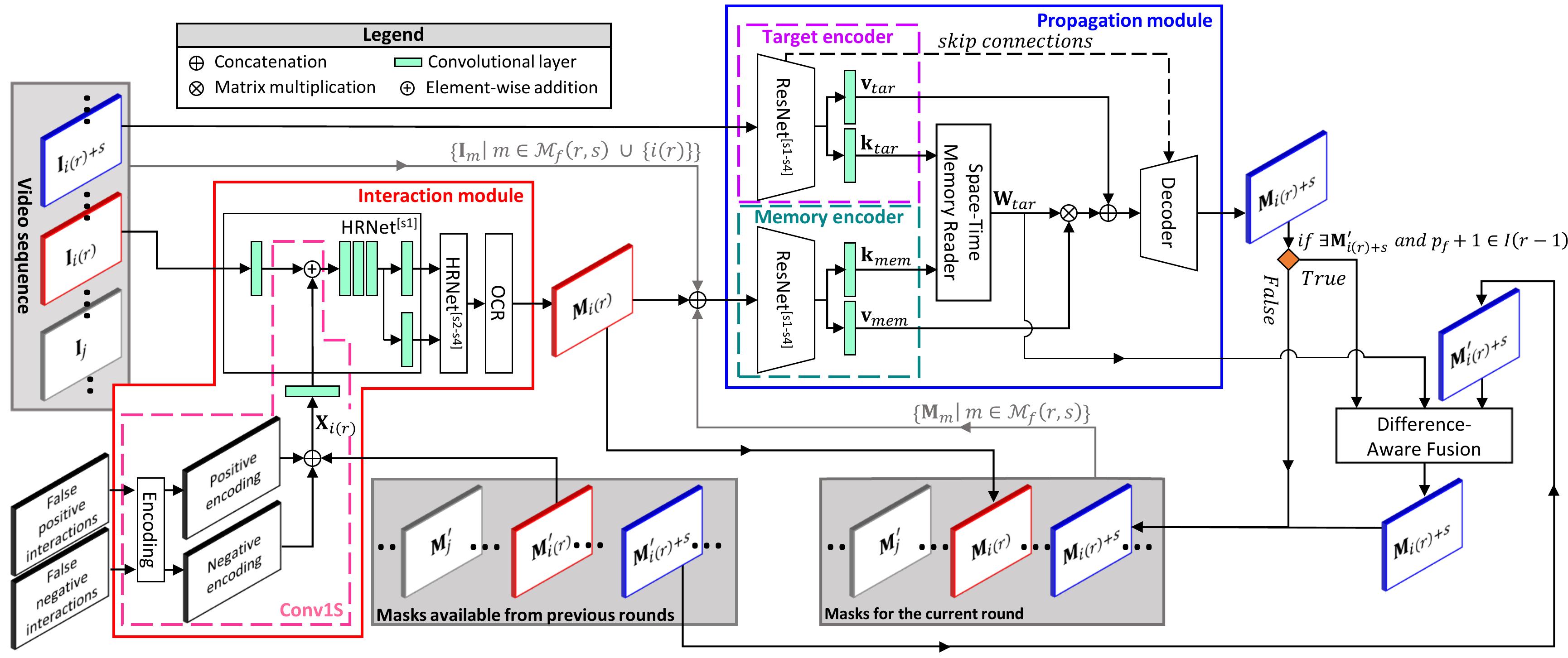

While current methods for interactive Video Object Segmentation (iVOS) rely on scribble-based interactions to generate precise object masks, we propose a Click-based interactive Video Object Segmentation (CiVOS) framework to simplify the required user workload as much as possible. CiVOS builds on decoupled modules reflecting user interaction and mask propagation. The interaction module converts click-based interactions into an object mask, which the propagation module then propagates to the remaining frames. Additional user interactions allow for a refinement of the object mask. The approach is extensively evaluated on the popular interactive DAVIS dataset, with the necessary replacement of scribble-based interactions by click-based counterparts. We consider several strategies for generating clicks during our evaluation to reflect various user inputs and adjust the DAVIS performance metric to perform a hardware-independent comparison. The presented CiVOS pipeline achieves competitive results while requiring a lower user workload.
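The decoupled design described in the abstract can be illustrated with a minimal sketch. Everything here is hypothetical: the `Click` type, the `run_round` function, and the toy `toy_interact` / `toy_propagate` stand-ins are not taken from the CiVOS code base, and the real modules are neural networks rather than the trivial functions used below. The sketch only shows the data flow of one interaction round: clicks on a frame become a mask, and that mask is propagated to all frames.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Mask = List[List[int]]   # toy stand-in for a binary object mask
Frame = List[List[int]]  # toy stand-in for an image


@dataclass(frozen=True)
class Click:
    frame_idx: int
    x: int
    y: int
    positive: bool  # True = on the object, False = on the background


def run_round(
    frames: Sequence[Frame],
    clicks: Sequence[Click],
    interact: Callable[[Frame, Sequence[Click]], Mask],
    propagate: Callable[[Sequence[Frame], int, Mask], List[Mask]],
) -> List[Mask]:
    """One interaction round: clicks on the annotated frame -> mask -> all frames."""
    frame_idx = clicks[-1].frame_idx  # frame of the most recent click
    frame_clicks = [c for c in clicks if c.frame_idx == frame_idx]
    mask = interact(frames[frame_idx], frame_clicks)
    return propagate(frames, frame_idx, mask)


def toy_interact(frame: Frame, clicks: Sequence[Click]) -> Mask:
    # Hypothetical stand-in: mark only the pixels hit by positive clicks.
    mask = [[0] * len(frame[0]) for _ in frame]
    for c in clicks:
        mask[c.y][c.x] = 1 if c.positive else 0
    return mask


def toy_propagate(frames: Sequence[Frame], ref_idx: int, ref_mask: Mask) -> List[Mask]:
    # Hypothetical stand-in: copy the reference mask to every frame.
    return [[row[:] for row in ref_mask] for _ in frames]
```

Because the two modules only communicate through the mask, either one can be swapped out independently, and a refinement round amounts to calling `run_round` again with the extended click list.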

@INPROCEEDINGS{Vujasinović_2021_ICIP,
  author={Vujasinović, Stéphane and Bullinger, Sebastian and Becker, Stefan and Scherer-Negenborn, Norbert and Arens, Michael and Stiefelhagen, Rainer},
  booktitle={2022 IEEE International Conference on Image Processing (ICIP)},
  title={Revisiting Click-Based Interactive Video Object Segmentation},
  year={2022},
  pages={2756-2760},
  doi={10.1109/ICIP46576.2022.9897460}}

- The framework is built with Python 3.7 and relies on the following packages:
  - NumPy `1.21.4`
  - SciPy `1.7.2`
  - PyTorch `1.10.0`
  - torchvision `0.11.1`
  - OpenCV `4.5.4` (opencv-python-headless if you don't want to use the demo)
  - Cython `0.29.24`
  - scikit-learn `0.20.3`
  - scikit-image `0.18.3`
  - Pillow `8.4.0`
  - imgaug `0.4.0`
  - albumentations `1.10`
  - tqdm `4.62.3`
  - PyYaml `6.0`
  - easydict `1.9`
  - future `0.18.2`
  - cffi `1.15.0`
  - davis-interactive `1.0.4`
  - networkx `2.6.3` for DAVIS
  - gdown `4.2.0` for downloading pretrained models
  - tensorboard `2.4.1`
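To confirm that an environment matches the versions listed above, a small check along the following lines may help. It is only a sketch: `check_versions` and the `PINNED` subset are illustrative names that are not part of the repository, and the distribution names (for example `torch` for PyTorch) are the usual PyPI names rather than something this README states. On Python 3.7 the standard library has no `importlib.metadata`, so the sketch falls back to the `importlib-metadata` backport, which would need to be installed separately.

```python
try:
    from importlib.metadata import PackageNotFoundError, version  # Python >= 3.8
except ImportError:
    # Python 3.7: requires the separately installed importlib-metadata backport.
    from importlib_metadata import PackageNotFoundError, version

# Illustrative subset of the pinned versions listed above.
PINNED = {
    "numpy": "1.21.4",
    "scipy": "1.7.2",
    "torch": "1.10.0",
    "torchvision": "0.11.1",
    "tqdm": "4.62.3",
}


def check_versions(pinned, lookup=version):
    """Return {name: (wanted, found)} for every package that does not match.

    `found` is None when the package is not installed. A local build suffix
    such as "+cu113" is ignored when comparing.
    """
    mismatches = {}
    for name, wanted in pinned.items():
        try:
            found = lookup(name)
        except PackageNotFoundError:
            found = None
        if found is None or found.split("+")[0] != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in check_versions(PINNED).items():
        print(f"{name}: expected {wanted}, found {found}")
```

An empty result means every checked package matches its pinned version.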

- Download the DAVIS dataset with `download_datasets.py`
- Download the pretrained models with `download_models.py`

- Adapt the paths and variables in `Demo.yml`
- Launch `CiVOS_Demo.py` (Nota bene: only 1 object can be segmented in the demo)
- Mouse and keyboard bindings:
  - Positive interaction: left mouse click
  - Negative interaction: right mouse click
  - Predict a mask of the object of interest for the video sequence: space bar
  - Visualize the results with the keys `x` (forward direction) and `y` (backward direction)
  - Quit the demo with key `q`
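The bindings above can be summarized as a small dispatch table. This is a hypothetical sketch, not the code in `CiVOS_Demo.py`: the action names, `action_for_key`, and `step_frame` are invented for illustration. It assumes key codes arrive as integers in the style returned by OpenCV's `cv2.waitKey`, where a negative value means no key was pressed.

```python
from typing import Optional

# Hypothetical action names; only the keys and mouse buttons come from the list above.
KEY_ACTIONS = {
    " ": "propagate",        # space bar: predict masks for the whole sequence
    "x": "next_frame",       # browse results forward
    "y": "previous_frame",   # browse results backward
    "q": "quit",
}
MOUSE_ACTIONS = {"left": "positive_click", "right": "negative_click"}


def action_for_key(key_code: int) -> Optional[str]:
    """Map an integer key code to a demo action, or None if the key is unbound."""
    if key_code < 0:  # no key pressed
        return None
    return KEY_ACTIONS.get(chr(key_code & 0xFF))


def step_frame(idx: int, action: Optional[str], n_frames: int) -> int:
    """Move the displayed frame index, clamped to the valid range."""
    if action == "next_frame":
        return min(idx + 1, n_frames - 1)
    if action == "previous_frame":
        return max(idx - 1, 0)
    return idx
```

Keeping the mapping in plain dictionaries makes it easy to rebind keys without touching the event loop.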

- Adapt the paths and variables of `EXAMPLE_DEBUGGING.yml`
- Adapt and launch the bash file `CiVOS_evaluation_script_example.sh`
- Read the .csv result files with `Summarize_with_DAVIS_arbitrary_report.py`

Quantitative evaluation on the interactive DAVIS 2017 validation set.

| Methods | Training interaction | Testing interaction | R-AUC-J&F | AUC-J&F | J&F@60s |
|---|---|---|---|---|---|
| MANet | Scribbles | Scribbles | 0.72 | 0.79 | 0.79 |
| ATNet | Scribbles | Scribbles | 0.75 | 0.80 | 0.80 |
| MiVOS | Scribbles | Scribbles | 0.81 | 0.87 | 0.88 |
| GIS-RAmap | Scribbles | Scribbles | 0.79 | 0.86 | 0.87 |
| MiVOS | Clicks | Clicks | 0.70 | 0.78 | 0.79 |
| CiVOS | Clicks | Clicks | 0.76 | 0.83 | 0.84 |
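For readers unfamiliar with area-under-curve metrics, the following sketch shows one plausible way to compute a hardware-independent score of this kind. It assumes, and this is an assumption rather than the definition used in the paper, that the J&F score is recorded once per interaction round and that the curve is integrated over the interaction index instead of wall-clock time, then normalized to the range of the scores. The function name `normalized_auc` is illustrative.

```python
from typing import Sequence


def normalized_auc(scores: Sequence[float]) -> float:
    """Trapezoidal area under a score curve sampled at unit-spaced interactions.

    The area is divided by the length of the x-axis (number of intervals),
    so a constant curve of value v yields v.
    """
    if len(scores) < 2:
        raise ValueError("need at least two interaction rounds")
    area = sum((a + b) / 2.0 for a, b in zip(scores, scores[1:]))
    return area / (len(scores) - 1)
```

Integrating over interaction rounds rather than seconds means the value does not depend on how fast the evaluation machine is.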

R-AUC-J&F results on the DAVIS 2017 validation set for CiVOS by generating clicks in three different ways.

| Maximal Number of Clicks | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Interaction Strategy 1 | 0.69 | - | - | - | - | - | - |
| Interaction Strategy 2 | 0.72 | 0.76 | 0.76 | 0.75 | 0.75 | 0.75 | 0.76 |
| Interaction Strategy 3 | 0.74 | 0.77 | 0.78 | 0.78 | 0.78 | 0.78 | 0.78 |