The program here is an adaptation of Josh Starmer's video:

Because I am stuck coding the backpropagation algorithm from this video:

To be 100% sure I understand gradient descent, I will try to explain it.

But I will also try to make some hypotheses about what should be added to the gradient descent algorithm for it to work in a neural network, at least the one in the video mentioned earlier.

The goal of gradient descent:

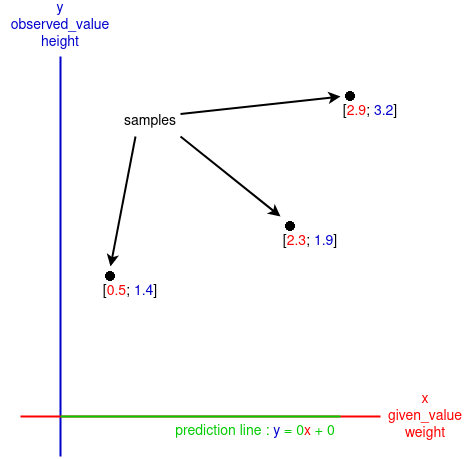

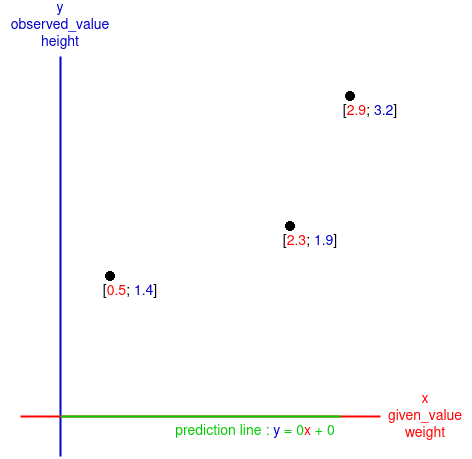

The goal of gradient descent is to determine the intercept and the slope of a "prediction line"; here the line predicts the

But the algorithm needs some samples to get a sense of how this line must look, in terms of intercept and slope; those samples are the given

First, the algorithm starts from an initial guess; these values are stored in slope_intercept and are initialised as two

With the guessed value of the slope (

The goal of this algorithm is to change the position of the for each sample:

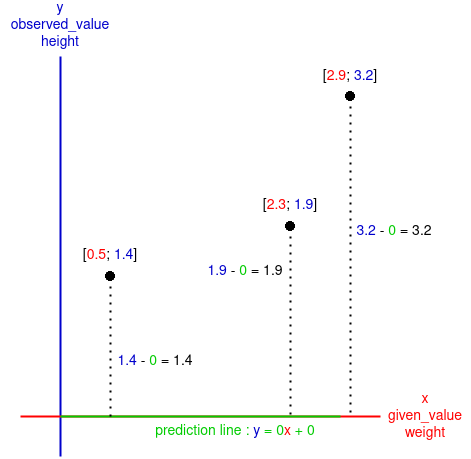
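A minimal Rust sketch of this step, assuming each sample is a pair made of an input `x` and an observed value `y`, and that the difference is computed as observed minus predicted. The struct `Sample`, the functions `predict` and `residuals`, the sample values and the initial guess are all hypothetical, chosen for illustration, not taken from the original program.

```rust
/// One sample: an input `x` and the observed value `y` the line should predict.
/// (Hypothetical layout; the original program may store samples differently.)
#[derive(Clone, Copy, Debug)]
struct Sample {
    x: f64,
    y: f64,
}

/// Value predicted by the line: intercept + slope * x.
fn predict(intercept: f64, slope: f64, x: f64) -> f64 {
    intercept + slope * x
}

/// Difference between the observed and the predicted value, for each sample.
fn residuals(samples: &[Sample], intercept: f64, slope: f64) -> Vec<f64> {
    samples
        .iter()
        .map(|s| s.y - predict(intercept, slope, s.x))
        .collect()
}

fn main() {
    // Hypothetical samples, for illustration only.
    let samples = [
        Sample { x: 0.5, y: 1.4 },
        Sample { x: 2.3, y: 1.9 },
        Sample { x: 2.9, y: 3.2 },
    ];
    // Hypothetical initial guess: intercept = 0, slope = 1.
    for (sample, r) in samples.iter().zip(residuals(&samples, 0.0, 1.0)) {
        println!("x = {}, observed = {}, difference = {:.2}", sample.x, sample.y, r);
    }
}
```

With these hypothetical values the second difference is negative (1.9 − 2.3 = −0.4), because that sample lies below the guessed line.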

Now we know, for each sample, how much the

Note:

Sometimes the

If we add up the differences, we get:

But that doesn't make sense, because the result is lower than the difference between

The solution is to make sure the differences are positive by squaring the negative ones:

But we also need to square the positive differences, to keep the differences proportional to each other:

The sum of squares for the main example:

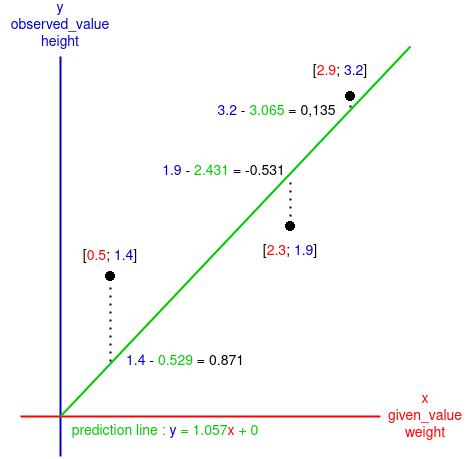
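A sketch comparing the plain sum of the differences with the sum of their squares, in Rust. The `Sample` layout, the sample values and the initial guess (intercept 0, slope 1) are hypothetical assumptions, not the original program's data.

```rust
/// Hypothetical sample layout: input `x`, observed value `y`.
#[derive(Clone, Copy)]
struct Sample {
    x: f64,
    y: f64,
}

/// Sum of squared differences between observed and predicted values.
/// Squaring makes every difference positive, and large differences
/// weigh proportionally more than small ones.
fn sum_of_squares(samples: &[Sample], intercept: f64, slope: f64) -> f64 {
    samples
        .iter()
        .map(|s| {
            let difference = s.y - (intercept + slope * s.x);
            difference * difference
        })
        .sum()
}

fn main() {
    // Hypothetical samples, for illustration only.
    let samples = [
        Sample { x: 0.5, y: 1.4 },
        Sample { x: 2.3, y: 1.9 },
        Sample { x: 2.9, y: 3.2 },
    ];
    let (intercept, slope) = (0.0, 1.0); // hypothetical initial guess

    // Plain sum: negative differences cancel part of the positive ones.
    let plain_sum: f64 = samples
        .iter()
        .map(|s| s.y - (intercept + slope * s.x))
        .sum();
    let ssr = sum_of_squares(&samples, intercept, slope);

    println!("plain sum of differences: {plain_sum:.2}");
    println!("sum of squares: {ssr:.2}");
}
```

With these values the plain sum prints 0.80 (0.9 − 0.4 + 0.3) while the sum of squares prints 1.06 (0.81 + 0.16 + 0.09).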

Now that we have the

Note

In the gradient descent calculation, the

To be efficient, we need to calculate the

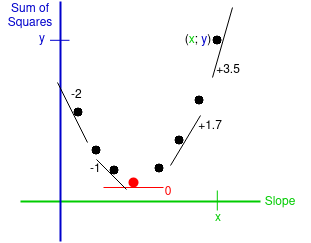

As mentioned before, we want to reduce as much as possible the sum of the differences between

To know which

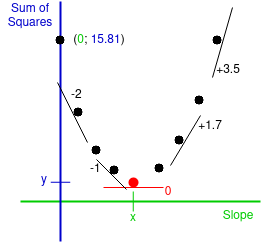

Here we can see the lowest sum of differences,

So far so good: we have found, theoretically, the best value for the

- This method could take an infinite amount of time if we don't choose how close to $0$ we want the $\textrm{\color{blue}Sum of Squares}$ to be, in terms of the number of $0$s after the decimal point: $0.\textrm{\color{blue}y}$ or $0.00\textrm{\color{blue}y}$?
- Testing all the values of the $\textrm{\color{green}Slope}$ to find the lowest value of the $\textrm{\color{blue}Sum of Squares}$ would take a monstrous amount of time.
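To make the second point concrete, here is a hypothetical brute-force search over the slope (intercept kept fixed) in Rust. The function name `brute_force_slope`, the tested range, the spacing between tested slopes and the data are assumptions for illustration; the point is that every extra decimal of precision multiplies the number of tries by roughly 10.

```rust
/// Hypothetical sample layout: input `x`, observed value `y`.
#[derive(Clone, Copy)]
struct Sample {
    x: f64,
    y: f64,
}

fn sum_of_squares(samples: &[Sample], intercept: f64, slope: f64) -> f64 {
    samples
        .iter()
        .map(|s| (s.y - (intercept + slope * s.x)).powi(2))
        .sum()
}

/// Try every slope in [min, max], spaced by `step`, and keep the one with
/// the lowest sum of squares. Returns (best slope, its sum of squares, tries).
fn brute_force_slope(
    samples: &[Sample],
    intercept: f64,
    min: f64,
    max: f64,
    step: f64,
) -> (f64, f64, usize) {
    let n_steps = ((max - min) / step).round() as usize;
    let mut best = (min, f64::INFINITY);
    for k in 0..=n_steps {
        let slope = min + k as f64 * step;
        let ssr = sum_of_squares(samples, intercept, slope);
        if ssr < best.1 {
            best = (slope, ssr);
        }
    }
    (best.0, best.1, n_steps + 1)
}

fn main() {
    // Hypothetical samples, for illustration only.
    let samples = [
        Sample { x: 0.5, y: 1.4 },
        Sample { x: 2.3, y: 1.9 },
        Sample { x: 2.9, y: 3.2 },
    ];
    // Finer spacing = more precision, but many more sums of squares to compute.
    for step in [0.1, 0.01, 0.001] {
        let (slope, ssr, tries) = brute_force_slope(&samples, 0.0, -2.0, 2.0, step);
        println!("spacing {step}: best slope {slope:.3}, SSR {ssr:.4}, {tries} tries");
    }
}
```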

In Josh's video about gradient descent (link in the introduction), there is a number that represents how much precision we want; in this program it is called precision_success.

If we want to reduce the number of tests to guess the

This variable is the derivative of the

Here we can see the lowest value of the

That means the further the derivative is from

With the current example:

Note

Here is a recap before calculating the derivative of

To calculate the

More generally:

```rust
let mut sum = 0.0;
for i in 1..=N {
    let x = f(i); // f can be any calculation that involves i
    sum += x;
}
```

But if

```rust
let mut sum = 0.0;
for i in 0..=N - 1 {
    let x = f(i); // f can be any calculation that involves i
    sum += x;
}
```

With this information we can rewrite the formula of

But as

We will calculate the derivatives of the

Here is the math to calculate the derivative of

We can't do it directly; we have to use the chain rule, noting that the

Since the derivative is with respect to the

And:

Note: Here

Finally:
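For reference, the same chain-rule derivation written out in generic notation (an assumption: $N$ samples $(x_i, y_i)$ and the prediction $\textrm{intercept} + \textrm{slope}\times x_i$; the document's own colored notation may differ). The outer function is the square, whose derivative is $2(\dots)$; the inner function $y_i - (\textrm{intercept} + \textrm{slope}\times x_i)$ has derivative $-1$ with respect to the intercept:

$$
\textrm{SSR} = \sum_{i=1}^{N} \Big(y_i - (\textrm{intercept} + \textrm{slope}\times x_i)\Big)^2
$$

$$
\frac{\partial\,\textrm{SSR}}{\partial\,\textrm{intercept}} = \sum_{i=1}^{N} 2\Big(y_i - (\textrm{intercept} + \textrm{slope}\times x_i)\Big)\times(-1) = \sum_{i=1}^{N} -2\Big(y_i - (\textrm{intercept} + \textrm{slope}\times x_i)\Big)
$$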

Note: for

With the samples from the example:

The goal is to find a way to decrease this value until it reaches precision_success, 0.001.
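A minimal Rust sketch of evaluating the derivative of the sum of squares with respect to the intercept, plus a check against precision_success. The function names `d_ssr_d_intercept` and `is_precise_enough`, the sample values and the guess are hypothetical; the check assumes "reaching" precision_success means the absolute value drops below it.

```rust
/// Hypothetical sample layout: input `x`, observed value `y`.
#[derive(Clone, Copy)]
struct Sample {
    x: f64,
    y: f64,
}

/// d(SSR)/d(intercept) = sum of -2 * (observed - predicted)  (chain rule).
fn d_ssr_d_intercept(samples: &[Sample], intercept: f64, slope: f64) -> f64 {
    samples
        .iter()
        .map(|s| -2.0 * (s.y - (intercept + slope * s.x)))
        .sum()
}

/// Assumed stopping rule: the value is close enough to 0
/// once its absolute value is below precision_success.
fn is_precise_enough(value: f64, precision_success: f64) -> bool {
    value.abs() < precision_success
}

fn main() {
    let precision_success = 0.001;
    // Hypothetical samples and guess (intercept = 0, slope = 1).
    let samples = [
        Sample { x: 0.5, y: 1.4 },
        Sample { x: 2.3, y: 1.9 },
        Sample { x: 2.9, y: 3.2 },
    ];
    let derivative = d_ssr_d_intercept(&samples, 0.0, 1.0);
    println!(
        "derivative = {derivative:.3}, precise enough: {}",
        is_precise_enough(derivative, precision_success)
    );
}
```

Here the derivative is −2 × (0.9 − 0.4 + 0.3) = −1.6, still far from 0, so the intercept has to change.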

The

But

This is why there is something called

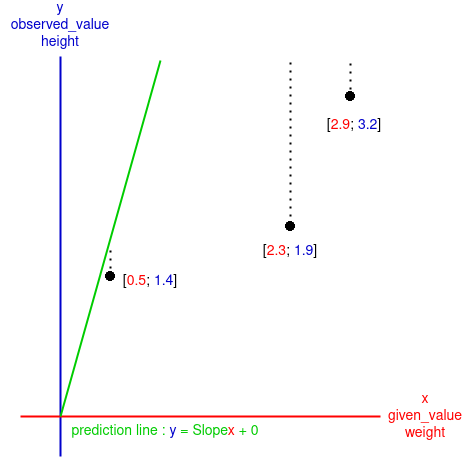
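A small Rust sketch of the step size as the derivative multiplied by the learning rate. The learning rate value and the derivatives below are hypothetical; the sketch only shows that the step shrinks as the derivative gets closer to 0.

```rust
/// Step size = derivative * learning rate.
/// A small learning rate keeps the steps small, so we are less likely
/// to jump over the lowest point of the curve.
fn step_size(derivative: f64, learning_rate: f64) -> f64 {
    derivative * learning_rate
}

fn main() {
    // Hypothetical learning rate, for illustration only.
    let learning_rate = 0.1;
    // The further the derivative is from 0, the bigger the step.
    for derivative in [-8.0, -1.6, -0.2] {
        println!(
            "derivative {derivative}: step size {:.2}",
            step_size(derivative, learning_rate)
        );
    }
}
```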

Now we know how much we have to change the value of the

But should we add the

For now, the

If we want the

Since

Note

This also works if the value of

In that case we would like to lower the
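A minimal Rust sketch of the update rule, new value = old value − step size, showing both signs of the derivative. The function name `update` and the numbers are hypothetical.

```rust
/// New value = old value - step size, with step size = derivative * learning rate.
/// Negative derivative -> negative step -> the value goes up.
/// Positive derivative -> positive step -> the value goes down.
fn update(old_value: f64, derivative: f64, learning_rate: f64) -> f64 {
    let step_size = derivative * learning_rate;
    old_value - step_size
}

fn main() {
    let learning_rate = 0.1; // hypothetical
    let intercept = 0.0; // hypothetical current value
    println!("negative derivative: {}", update(intercept, -1.6, learning_rate)); // goes up
    println!("positive derivative: {}", update(intercept, 1.6, learning_rate)); // goes down
}
```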

We now have the new value of the

First we calculate the

Since the derivative is with respect to the

And:

Finally:
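Written out in generic notation (an assumption: $N$ samples $(x_i, y_i)$ and the prediction $\textrm{intercept} + \textrm{slope}\times x_i$), the only change from the intercept case is the inner derivative: $y_i - (\textrm{intercept} + \textrm{slope}\times x_i)$ has derivative $-x_i$ with respect to the slope:

$$
\frac{\partial\,\textrm{SSR}}{\partial\,\textrm{slope}} = \sum_{i=1}^{N} 2\Big(y_i - (\textrm{intercept} + \textrm{slope}\times x_i)\Big)\times(-x_i) = \sum_{i=1}^{N} -2\,x_i\Big(y_i - (\textrm{intercept} + \textrm{slope}\times x_i)\Big)
$$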

We can now calculate the

Then we use a

And finally we use the

The new formula of the

And we use this new
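Putting the steps together, here is a minimal Rust sketch of the whole loop on both the intercept and the slope. It is not the original program: the function name `gradient_descent`, the separate learning rates for slope and intercept, the `max_steps` safety limit and the data are assumptions. It also assumes the loop stops when both step sizes fall below precision_success, which is how I understand the stopping rule in Josh's video; stopping on the derivatives instead would work the same way with a different threshold.

```rust
/// Hypothetical sample layout: input `x`, observed value `y`.
#[derive(Clone, Copy)]
struct Sample {
    x: f64,
    y: f64,
}

/// Gradient descent on the intercept and the slope at the same time.
/// Returns (intercept, slope, number of steps taken).
fn gradient_descent(
    samples: &[Sample],
    mut intercept: f64,
    mut slope: f64,
    learning_rate_intercept: f64,
    learning_rate_slope: f64,
    precision_success: f64,
    max_steps: usize, // hypothetical safety limit
) -> (f64, f64, usize) {
    for step in 1..=max_steps {
        // Both derivatives use the *current* values, before any update.
        let (mut d_intercept, mut d_slope) = (0.0, 0.0);
        for s in samples {
            let residual = s.y - (intercept + slope * s.x);
            d_intercept += -2.0 * residual;
            d_slope += -2.0 * s.x * residual;
        }
        // Step size = derivative * learning rate; new value = old value - step size.
        let step_intercept = d_intercept * learning_rate_intercept;
        let step_slope = d_slope * learning_rate_slope;
        intercept -= step_intercept;
        slope -= step_slope;
        // Assumed stopping rule: both steps are smaller than precision_success.
        if step_intercept.abs() < precision_success && step_slope.abs() < precision_success {
            return (intercept, slope, step);
        }
    }
    (intercept, slope, max_steps)
}

fn main() {
    // Hypothetical samples and settings, for illustration only.
    let samples = [
        Sample { x: 0.5, y: 1.4 },
        Sample { x: 2.3, y: 1.9 },
        Sample { x: 2.9, y: 3.2 },
    ];
    let precision_success = 0.001;
    let (intercept, slope, steps) =
        gradient_descent(&samples, 0.0, 1.0, 0.01, 0.01, precision_success, 1000);
    println!("prediction line: y = {intercept:.2} + {slope:.2} * x ({steps} steps)");
}
```

A looser precision_success stops the loop earlier, a little short of the best line; a smaller one gets closer at the cost of more steps.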

Here is a little GIF showing the process in action:

Additional note:

Values where a good intercept and slope are found (approximately):

(note: the prediction line found by Josh is:

| Learning rate slope, weight | Learning rate intercept, bias | Number of tries | Prediction line |
|---|---|---|---|