In today’s digital landscape, hate speech is an escalating concern, often fueling division and unrest across communities. DweshaMukt is an Advanced Multilingual and Multimodal Hate Speech Detection System designed to counteract this issue by harnessing the power of Bidirectional Encoder Representations from Transformers (BERT) alongside cutting-edge Deep Learning and Natural Language Processing (NLP) techniques.

Our system tackles a unique challenge: detecting hate speech within Hinglish—a dynamic blend of Hindi and English—while also supporting Hindi and English languages individually. DweshaMukt leverages a pre-trained BERT model, specially optimized for real-time scenarios, offering robust analysis across a range of media. Its multilingual and multimodal architecture enables hate speech detection across diverse content types: text, audio, video, images, GIFs, and YouTube comments.

With an accuracy of 88%, DweshaMukt stands as a promising solution for real-world hate speech detection applications, bridging language and media barriers to ensure safer, more inclusive online spaces.

Index Terms: Hate Speech Detection, BERT, Deep Learning, Natural Language Processing, Multilingual, Multimodal, Hinglish, Real-Time Analysis

- Start Date: 15th February 2023

- End Date: 20th October 2024

- Total Time Required: 1 Year, 8 Months, and 5 Days

| Team Members | GitHub Profile | LinkedIn Profile |
|---|---|---|
| Yash Suhas Shukla | GitHub | |
| Tanmay Dnyaneshwar Nigade | GitHub | |
| Suyash Vikas Khodade | GitHub | |
| Prathamesh Dilip Pimpalkar | GitHub | |

| Guide | GitHub Profile | LinkedIn Profile |
|---|---|---|
| Prof. Rajkumar Panchal | GitHub | |

The backend development was an intricate journey, involving months of rigorous research, experimentation, and iterative coding. Each phase contributed to refining the system’s ability to detect hate speech across various input types and languages.

Our Mark Model Index Document provides a comprehensive overview of this journey, showcasing each model’s evolution, from early concepts to the final optimized versions. Dive into the document to see how each model was crafted, tested, and fine-tuned to tackle the challenges of multilingual, multimodal hate speech detection.

The backend architecture of DweshaMukt classifies several forms of input (text, audio, video, images, GIFs, and live YouTube comments) by first converting each to text and then applying the hate speech detection model. Here are the main scenarios handled by the system:

- Text Input: Processes user-entered text directly.

- Audio Input: Converts audio to text using the Google Speech to Text API, then classifies it.

- Image Input: Extracts text from images via the Google Cloud Vision API.

- GIF Input: Analyzes GIFs using the Google Video Intelligence API for text extraction.

- Video Input: Extracts audio from videos, transcribes it, and classifies it.

- Live YouTube Video: Fetches live comments using the pytchat library, then classifies them.
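The "convert to text, then classify" flow described above can be pictured as a small dispatcher. The following is only a minimal sketch, not the project's actual code: the `DetectionPipeline` class, the `placeholder_classifier` function, and the stub extractors are all hypothetical names introduced for illustration. In a real deployment the extractors would wrap the external services listed above, and the classifier would be the fine-tuned BERT model.

```python
from typing import Any, Callable, Dict

# An extractor turns a raw payload into plain text;
# a classifier turns plain text into a label.
Extractor = Callable[[Any], str]
Classifier = Callable[[str], str]


class DetectionPipeline:
    """Hypothetical dispatcher: route each input type to a text extractor,
    then pass the extracted text to a single shared classifier."""

    def __init__(self, classifier: Classifier) -> None:
        self._classifier = classifier
        self._extractors: Dict[str, Extractor] = {}

    def register(self, input_type: str, extractor: Extractor) -> None:
        self._extractors[input_type] = extractor

    def classify(self, input_type: str, payload: Any) -> str:
        if input_type not in self._extractors:
            raise ValueError(f"unsupported input type: {input_type}")
        text = self._extractors[input_type](payload)
        return self._classifier(text)


def placeholder_classifier(text: str) -> str:
    # Assumption: a trivial keyword rule standing in for the BERT model.
    return "hate" if "hate" in text.lower() else "non-hate"


pipeline = DetectionPipeline(placeholder_classifier)

# Text needs no conversion.
pipeline.register("text", lambda payload: str(payload))

# Stub: pretends a speech-to-text step has already produced a transcript.
pipeline.register("audio", lambda payload: payload["transcript"])

print(pipeline.classify("text", "I HATE this"))
print(pipeline.classify("audio", {"transcript": "good morning"}))
```

Keeping one classifier behind several extractors means a new media type only needs a new extractor; the detection model itself stays untouched.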

The combined code integrates all these scenarios into a unified detection system, including added emoticon-based classification for enhanced accuracy.

The DweshaMukt Dataset is a curated collection of comments carefully selected to support research on hate speech detection. This dataset includes multilingual data in Hinglish, Hindi, and English, capturing various instances of hate and non-hate speech. It is a valuable resource for researchers and developers working on projects aimed at building safer online communities.

- Datasets Used: CONSTRAINT 2021 and Hindi Hate Speech Detection (HHSD)

- Total Comments: 22,977

- Hate Comments: 9,705

- Non-Hate Comments: 13,272
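The counts above can be checked with a few lines of arithmetic, which also show the class balance. This is an illustrative sketch; the variable names are assumptions and do not come from the project code.

```python
# Counts as stated in the dataset summary above.
total_comments = 22_977
hate_comments = 9_705
non_hate_comments = 13_272

# The two classes should account for every comment.
assert hate_comments + non_hate_comments == total_comments

hate_share = hate_comments / total_comments
non_hate_share = non_hate_comments / total_comments

print(f"hate: {hate_share:.1%}, non-hate: {non_hate_share:.1%}")
```

The dataset is moderately imbalanced, with roughly 42% hate and 58% non-hate comments. A model that always predicted "non-hate" would therefore be right on about 58% of the full dataset, which is a useful reference point when reading any accuracy figure.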

To ensure responsible and secure usage, access to the DweshaMukt dataset is granted upon request. Please complete the form below to submit your application. We review each request to verify alignment with our project’s objectives.

Note: Approved requests will receive an email with download instructions within 2-3 business days.

By requesting access to this dataset, you agree to the following:

- The dataset is strictly for non-commercial, research, or educational purposes.

- You will cite the DweshaMukt project in any publications or presentations that use this dataset.

- Redistribution or sharing of the dataset with unauthorized parties is strictly prohibited.

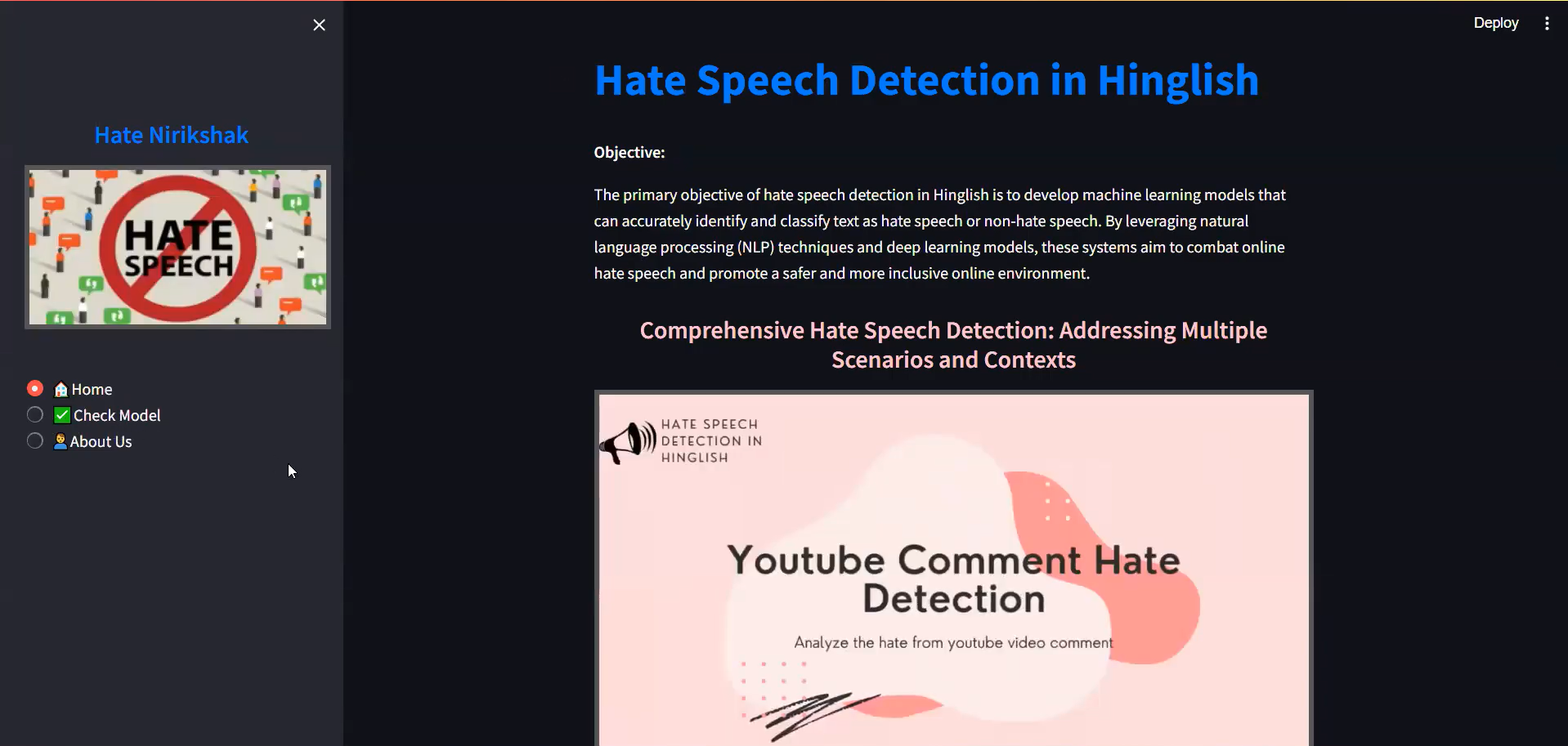

The frontend for this project is built as a Streamlit application, allowing users to interact seamlessly with our hate speech detection models across various input formats. This interface makes it easy to submit and analyze text, audio, video, images, GIFs, and YouTube comments.

- Multi-format Detection: Supports text, audio, video, images, GIFs, and YouTube comments.

- Real-time Analysis: Provides immediate feedback on uploaded content.

- User-friendly Interface: Simple navigation with clear instructions and dynamic visual feedback.

- Emoji Detection: Enhanced detection with emoticon analysis.

The DweshaMukt project is integrated with Telegram through a series of specialized bots, each designed to handle a different type of input. This allows users to classify text, audio, images, GIFs, video, and YouTube comments directly within the Telegram platform.

Each bot seamlessly interacts with the backend, delivering real-time classification results to users. Whether you're analyzing text, multimedia, or live YouTube comments, these bots ensure a versatile and accessible experience for hate speech detection.

To view the code for these Telegram bots, press the button below.

The DweshaMukt project was proudly showcased at the Nexus 1.0 State Level Project Competition on 15th April 2024. Held at the Army Institute of Technology, Pune, this prestigious event was organized by the Department of Information Technology and Computer Engineering under the AIT ACM Student Chapter.

Representing this project at Nexus 1.0 allowed our team to not only share our research and technical achievements but also to raise awareness about the importance of addressing hate speech in today’s digital world. Competitions like these offer valuable platforms for knowledge exchange, constructive feedback, and networking with other innovators, researchers, and industry experts.

Below are the participation certificates awarded to our team members for presenting DweshaMukt at Nexus 1.0.

Presenting this project on an international platform has been a milestone achievement for our team. Our research was showcased at the CVMI-2024 IEEE International Conference on Computer Vision and Machine Intelligence, hosted by IIIT Allahabad, Prayagraj on 19th and 20th October 2024. The conference offered a valuable opportunity for knowledge exchange with global experts and researchers, fostering discussions on the latest advancements in computer vision and machine learning.

- Research Paper Submission: Our research paper on this project was successfully submitted to IEEE.

- Conference Attendance: Yash Shukla and Prof. Rajkumar Panchal represented the team at the conference, which strengthened our network and insights within the academic community.

The conference report details our experiences and learnings from the event, including keynote sessions and other relevant presentations on emerging research trends. To read the Conference Report, press the button below.

To read our Research Paper, press the button below.

The following certificate was awarded for my participation and representation at CVMI-2024:

Showcasing our work at a conference of this caliber allowed us to share our vision for hate speech detection with an international audience, gaining invaluable feedback and recognition.

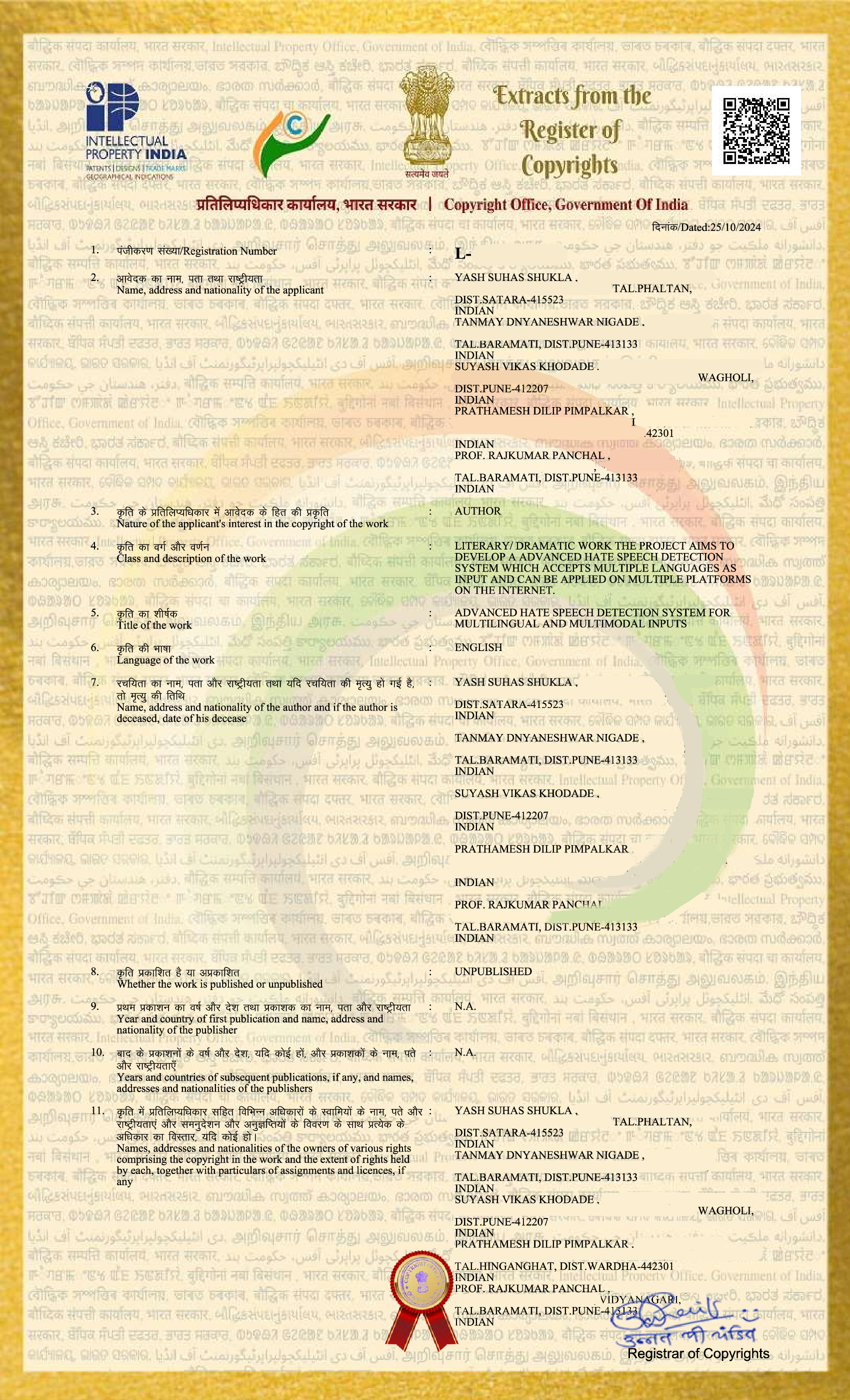

Securing copyright for this project marked an important milestone in safeguarding our innovation and intellectual property. Copyrighting our project not only protects the unique aspects of our hate speech detection system but also reinforces our commitment to responsible AI research. By copyrighting this idea, we ensure that the methods, models, and technological advances developed through this project remain attributed to our team.

Establishing copyright protection is a proactive step towards fostering innovation, ensuring recognition, and laying a foundation for future advancements in hate speech detection.

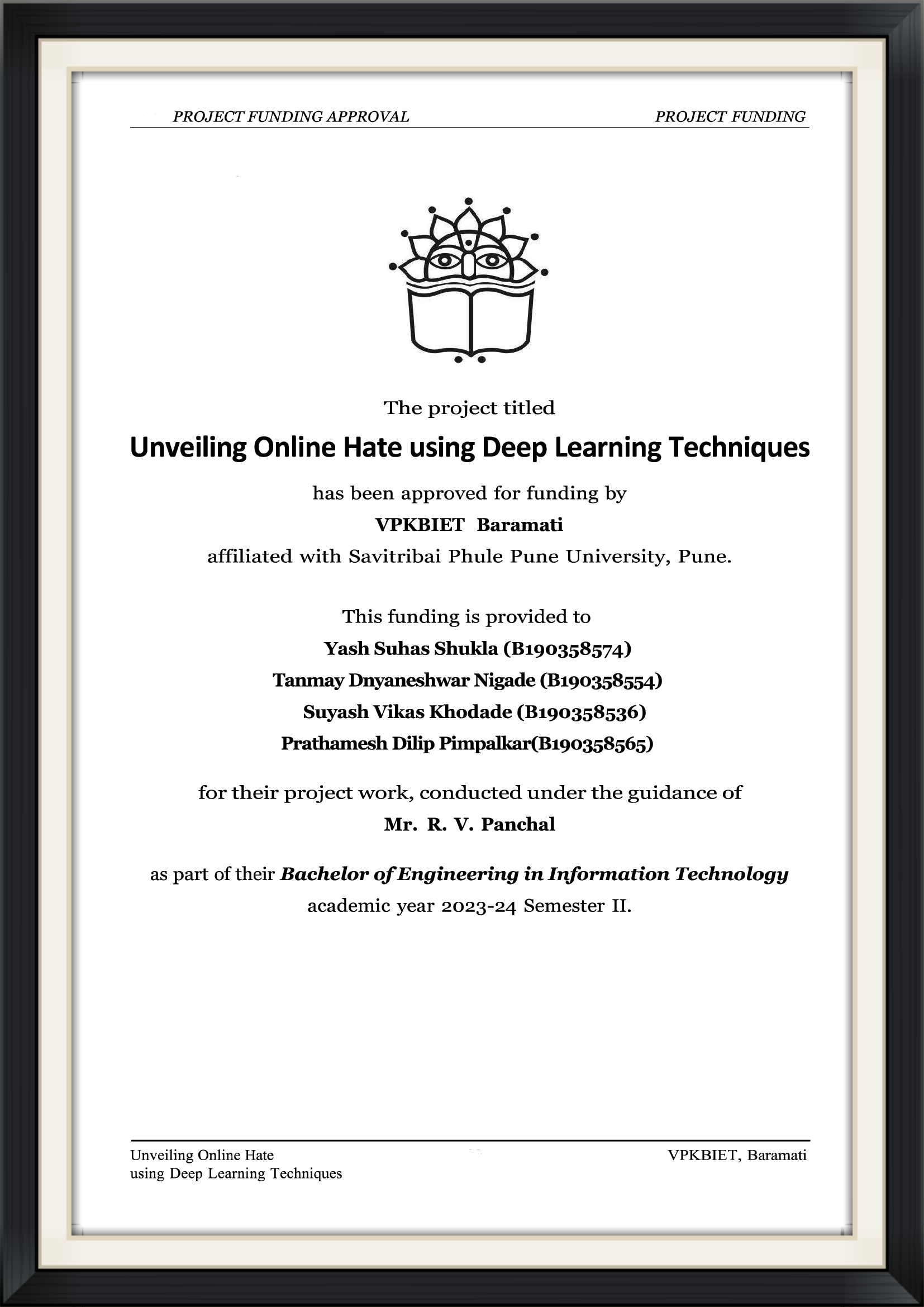

This project was generously funded by Vidya Pratishthan's Kamalnayan Bajaj Institute of Engineering and Technology College, whose support played a pivotal role in enabling our team to bring this ambitious vision to life. This funding allowed us to access essential resources, collaborate with experts, and ensure high-quality development across every phase of the project.

| Sr No | Demand Reason | Demand Cost |
|---|---|---|
| 1 | Google Colab Pro | 1025 |
| 2 | Online Courses | 2684 |
| 3 | Project Presentation Competition | 500 |
| 4 | Stationery Cost | 500 |
| Total | | 4709 |

The certificate shown above is custom designed and was not officially issued by the college. We extend our heartfelt gratitude to VPKBIET College for their trust and support. Their investment in this project has been invaluable in pushing the boundaries of AI-driven hate speech detection.

The project report documents in detail all progress made on the project to date. It can be viewed by pressing the button below.

This project is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. For more details, please refer to the LICENSE file in the repository.

By using this project, you agree to give appropriate credit, not use the material for commercial purposes without permission, and share any adaptations under the same license.

Attribution should be given as: "DweshaMukt Project by DweshaMukt Team (https://github.com/StudiYash/DweshaMukt)"

A quick overview of the usage permissions for this project can be found in the license deed: CC BY-NC-SA 4.0

Contributions are welcome! Feel free to open an issue or submit a pull request.

- Contributor License Agreement (CLA): By submitting a pull request, you confirm that you have read and agree to the terms of the Contributor License Agreement (CLA).

- Code of Conduct: This project and everyone participating in it are governed by the DweshaMukt Code of Conduct.

- Contributors: See the list of contributors here.

Made with ❤️ by DweshaMukt Team