This repository collects the methodology literature on Transformer architecture upgrades that enable Large Language Models to handle extensive context windows, and it is updated continuously with newly published works.

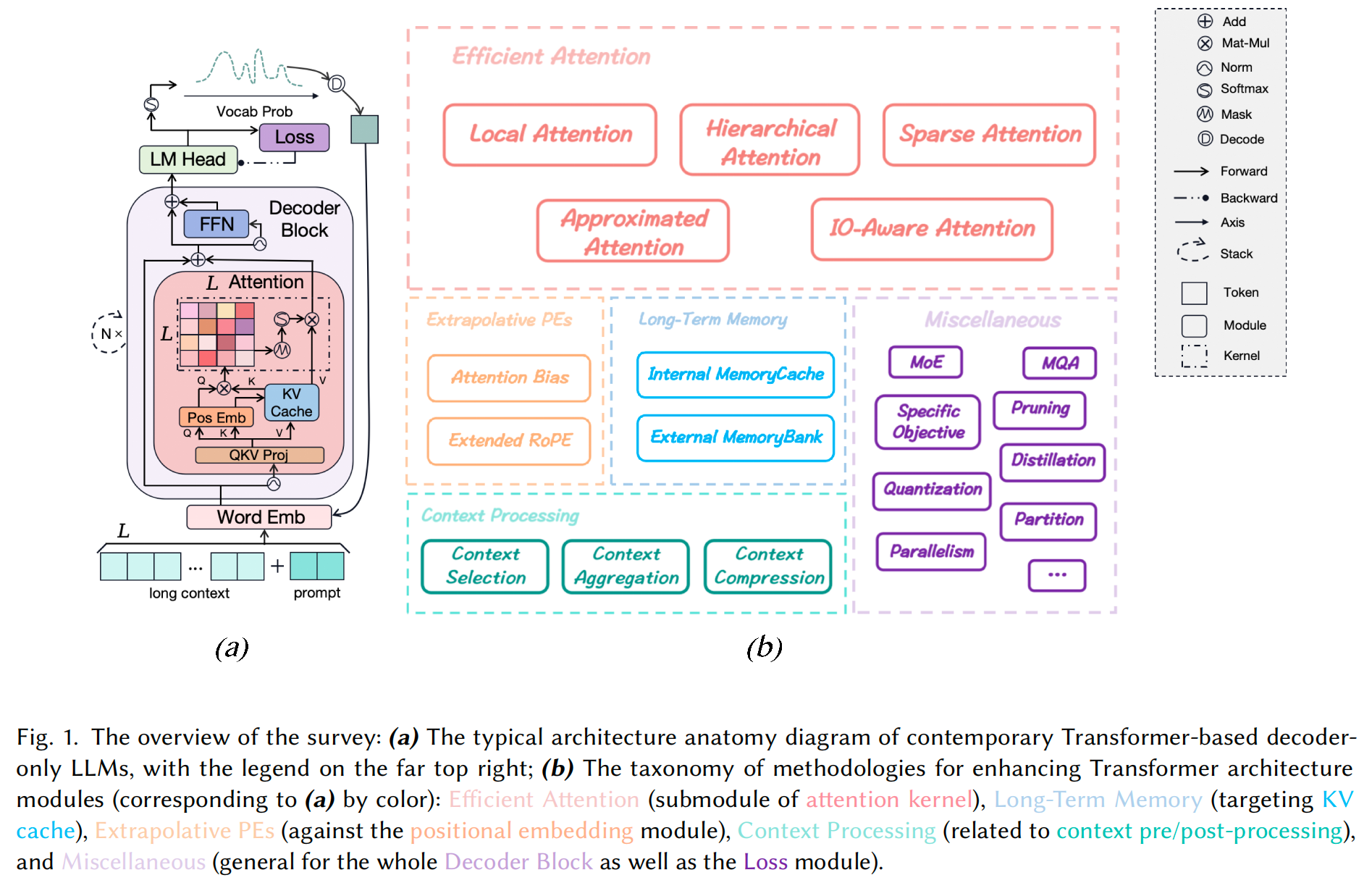

For a clear taxonomy and more insights into the methodology, you can refer to our survey: Advancing Transformer Architecture in Long-Context Large Language Models: A Comprehensive Survey, whose overview is shown in the accompanying figure.

We have augmented Su's great work rerope with the flash-attn kernel, combining rerope's infinite positional extrapolation capability with flash-attn's efficiency. The result is named flash-rerope.

You can find and use the implementation as a flash-attn-like interface function here, with a simple precision and FLOPs test script here.

You can also see how to implement the llama attention module with flash-rerope here.
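As a rough illustration of the positional rule behind rerope (not the flash-rerope kernel in this repo), the sketch below builds the causal relative-position matrix in plain Python. It assumes the usual description of rerope: within a window `window` the ordinary relative position `i - j` is used, and anything farther away is clamped to `window`. The function name and parameters are hypothetical and chosen only for this example.

```python
def rerope_relative_positions(seq_len, window):
    """Illustrative only: relative positions under a rerope-style clamp.

    Entry [i][j] is min(i - j, window) for causal pairs (j <= i),
    and None for masked future positions (j > i).
    """
    return [
        [min(i - j, window) if j <= i else None for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    # With window=2, distances larger than 2 are all treated as 2.
    for row in rerope_relative_positions(seq_len=5, window=2):
        print(row)
```

Because no relative position ever exceeds `window`, the model never sees a distance larger than those covered in training, which is the intuition behind the extrapolation claim. A fused kernel would apply this rule inside the attention computation rather than materializing the matrix.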

- [2024.07]

  - FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision, located here in this repo.

  - MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention, located here in this repo.

- [2024.04] Linear Attention Sequence Parallelism, located here in this repo.

- [2024.02] Data Engineering for Scaling Language Models to 128K Context, located here in this repo.

- [2024.01] Lightning Attention-2: A Free Lunch for Handling Unlimited Sequence Lengths in Large Language Models, located here in this repo.

- [2024.3.18] Longer than long, the Kimi AI assistant launches 200w lossless context window, located here in this repo.

- [2024.01.30] chatglm-6b-128k with $L_{max}$ 128k, located here in this repo.

- [2024.01.25] gpt-4-turbo-preview with $L_{max}$ 128k, located here in this repo.

- [2023.12.19] InfiniteBench, located here in this repo.

- [2023.08.29] LongBench, located here in this repo.

- We've also released a repo that is still under construction, long-llms-evals, as a pipeline for evaluating various methods designed to enhance the long-context capabilities of general / specific LLMs on well-known long-context benchmarks.

- This repo is also a sub-track of another repo, llms-learning, where you can learn more about the technologies and applied tasks across the full stack of Large Language Models.

- Methodology

- Evaluation

- Toolkits

- Empirical Study & Survey

If you want to contribute to this repo, you can open a PR or email us with the link to the paper(s), or use the format below:

- (un)read paper format:

#### <paper title> [(UN)READ]

paper link: [here](<link address>)

xxx link: [here](<link address>)

citation:

<bibtex citation>

If you find the survey or this repo helpful in your research or work, you can cite our paper as below:

@misc{huang2024advancing,
  title={Advancing Transformer Architecture in Long-Context Large Language Models: A Comprehensive Survey},
  author={Yunpeng Huang and Jingwei Xu and Junyu Lai and Zixu Jiang and Taolue Chen and Zenan Li and Yuan Yao and Xiaoxing Ma and Lijuan Yang and Hao Chen and Shupeng Li and Penghao Zhao},
  year={2024},
  eprint={2311.12351},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}