TL;DR: repo2vec is a simple-to-use, modular library enabling you to chat with any public or private codebase.

Ok, but why chat with a codebase?

Sometimes you just want to learn how a codebase works and how to integrate it, without spending hours sifting through the code itself.

repo2vec is like GitHub Copilot but with the most up-to-date information about your repo.

Features:

- Dead-simple set-up. Run two scripts and you have a functional chat interface for your code. That's really it.

- Heavily documented answers. Every response shows where in the code the context for the answer was pulled from. Let's build trust in the AI.

- Runs locally or on the cloud.

- Plug-and-play. Want to improve the algorithms powering the code understanding/generation? We've made every component of the pipeline easily swappable. Google-grade engineering standards allow you to customize to your heart's content.

We currently support two options for indexing the codebase:

- Locally, using the open-source Marqo vector store. Marqo is both an embedder (you can choose your favorite embedding model from Hugging Face) and a vector store.

  You can bring up a Marqo instance using Docker:

      docker rm -f marqo
      docker pull marqoai/marqo:latest
      docker run --name marqo -it -p 8882:8882 marqoai/marqo:latest

  Then, to index your codebase, run:

      pip install -r requirements.txt
      # Replace github-repo-name with e.g. Storia-AI/repo2vec
      python src/index.py github-repo-name \
          --embedder_type=marqo \
          --vector_store_type=marqo \
          --index_name=your-index-name

- Using external providers (OpenAI for embeddings and Pinecone for the vector store). To index your codebase, run:

      pip install -r requirements.txt
      export OPENAI_API_KEY=...
      export PINECONE_API_KEY=...
      # Replace github-repo-name with e.g. Storia-AI/repo2vec
      python src/index.py github-repo-name \
          --embedder_type=openai \
          --vector_store_type=pinecone \
          --index_name=your-index-name

We are planning on adding more providers soon, so that you can mix and match them. Contributions are also welcome!

We provide a Gradio app where you can chat with your codebase. You can use either a local LLM (via Ollama) or a cloud provider like OpenAI or Anthropic.

To chat with a local LLM:

- Head over to ollama.com to download the appropriate binary for your machine.

- Pull the desired model, e.g. ollama pull llama3.1.

- Start the Gradio app:

      # Replace github-repo-name with e.g. Storia-AI/repo2vec; --vector_store_type can also be pinecone
      python src/chat.py github-repo-name \
          --llm_provider=ollama \
          --llm_model=llama3.1 \
          --vector_store_type=marqo \
          --index_name=your-index-name

To chat with a cloud-based LLM, for instance Anthropic's Claude:

    export ANTHROPIC_API_KEY=...
    # Replace github-repo-name with e.g. Storia-AI/repo2vec; --vector_store_type can also be pinecone
    python src/chat.py github-repo-name \
        --llm_provider=anthropic \
        --llm_model=claude-3-opus-20240229 \
        --vector_store_type=marqo \
        --index_name=your-index-name

To get a public URL for your chat app, set --share=true.

The src/index.py script performs the following steps:

- Clones a GitHub repository. See RepoManager.
  - Make sure to set the GITHUB_TOKEN environment variable for private repositories.

- Chunks files. See Chunker.
  - For code files, we implement a special CodeChunker that takes the parse tree into account.

- Batch-embeds chunks. See Embedder. We currently support:
  - Marqo as an embedder, which allows you to specify your favorite Hugging Face embedding model, and
  - OpenAI's batch embedding API, which is much faster and cheaper than the regular synchronous embedding API.

- Stores embeddings in a vector store. See VectorStore.
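To give a feel for what chunking along the parse tree can look like, here is a minimal sketch that uses Python's built-in ast module and handles Python source only. It is not the repo's CodeChunker: the function name chunk_python_source and the max_chars parameter are assumptions made up for this example.

```python
import ast


def chunk_python_source(source, max_chars=800):
    """Group top-level statements into chunks without splitting any of them.

    Hypothetical helper for illustration; not the repo's CodeChunker.
    """
    lines = source.splitlines()
    tree = ast.parse(source)

    chunks = []
    current, current_len = [], 0
    prev_end = 0  # index of the first line not yet assigned to a chunk

    for node in tree.body:
        # Take everything since the previous node, so decorators, comments
        # and blank lines stay attached to the statement that follows them.
        segment = lines[prev_end:node.end_lineno]
        prev_end = node.end_lineno
        seg_len = sum(len(line) + 1 for line in segment)

        # Start a new chunk if adding this statement would exceed the budget.
        if current and current_len + seg_len > max_chars:
            chunks.append("\n".join(current))
            current, current_len = [], 0

        current.extend(segment)
        current_len += seg_len

    # Trailing comments or blank lines after the last statement.
    current.extend(lines[prev_end:])
    if current:
        chunks.append("\n".join(current))
    return chunks
```

A single function or class larger than max_chars still ends up as one oversized chunk in this sketch; a fuller chunker would recurse into the node's children instead.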

Note you can specify an inclusion or exclusion set for the file extensions you want indexed. To specify an extension inclusion set, you can add the --include flag:

python src/index.py repo-org/repo-name --include=/path/to/file/with/extensions

Conversely, to specify an extension exclusion set, you can add the --exclude flag:

python src/index.py repo-org/repo-name --exclude=src/sample-exclude.txt

Extensions must be specified one per line, in the form .ext.
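For illustration, the extension file format and the filtering it implies can be sketched in a few lines of Python. The helper names parse_extensions and should_index are hypothetical, and the choices to match case-insensitively and to let the inclusion set take precedence are assumptions of this sketch, not documented behavior of src/index.py.

```python
from pathlib import Path


def parse_extensions(text):
    """Parse file contents with one extension per line, e.g. '.py'."""
    return {line.strip().lower() for line in text.splitlines() if line.strip()}


def should_index(file_path, include=None, exclude=None):
    """Decide whether a file is indexed, given optional extension sets."""
    ext = Path(file_path).suffix.lower()
    if include is not None:
        return ext in include      # only listed extensions are indexed
    if exclude is not None:
        return ext not in exclude  # everything except listed extensions
    return True                    # no filter given: index everything


extensions = parse_extensions(".py\n.md\n")
print(should_index("src/index.py", include=extensions))
print(should_index("assets/logo.png", include=extensions))
```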

The src/chat.py script brings up a Gradio app with a chat interface as shown above. We use LangChain to define a RAG chain which, given a user query about the repository:

- Rewrites the query to be self-contained based on previous queries

- Embeds the rewritten query using OpenAI embeddings

- Retrieves relevant documents from the vector store

- Calls a chat LLM to respond to the user query based on the retrieved documents.

The sources are conveniently surfaced in the chat and linked directly to GitHub.
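The four steps above can be sketched end to end in plain Python. This is a toy under loud assumptions: there is no LangChain, no OpenAI call and no LLM here. The query rewrite is a naive string concatenation, the "embedding" is a bag-of-words count, and every function name is hypothetical. It only shows how the pieces hand data to each other.

```python
import math
import re
from collections import Counter


def rewrite_query(history, query):
    # Stand-in for the LLM rewrite step: prepend the previous query for context.
    return f"{history[-1]} {query}" if history else query


def embed(text):
    # Toy bag-of-words "embedding"; the real chain uses a learned embedding model.
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a, b):
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    # Rank stored chunks by similarity to the query and keep the top k.
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c["text"])), reverse=True)
    return ranked[:k]


def build_prompt(query, retrieved):
    # The prompt a chat LLM would receive; each chunk keeps its source label.
    context = "\n\n".join(f"[{c['source']}]\n{c['text']}" for c in retrieved)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {query}"


chunks = [
    {"source": "src/index.py", "text": "indexing script: clones the repository, chunks each file, embeds chunks, stores vectors"},
    {"source": "src/chat.py", "text": "chat script: launches the gradio app and answers questions with an llm"},
    {"source": "README.md", "text": "installation instructions and requirements"},
]

history = ["How does indexing work?"]
query = rewrite_query(history, "Which file does it?")
top = retrieve(query, chunks, k=1)
prompt = build_prompt(query, top)
print(prompt)
```

Keeping the source label next to each retrieved chunk is what makes it possible to show the user where an answer came from.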

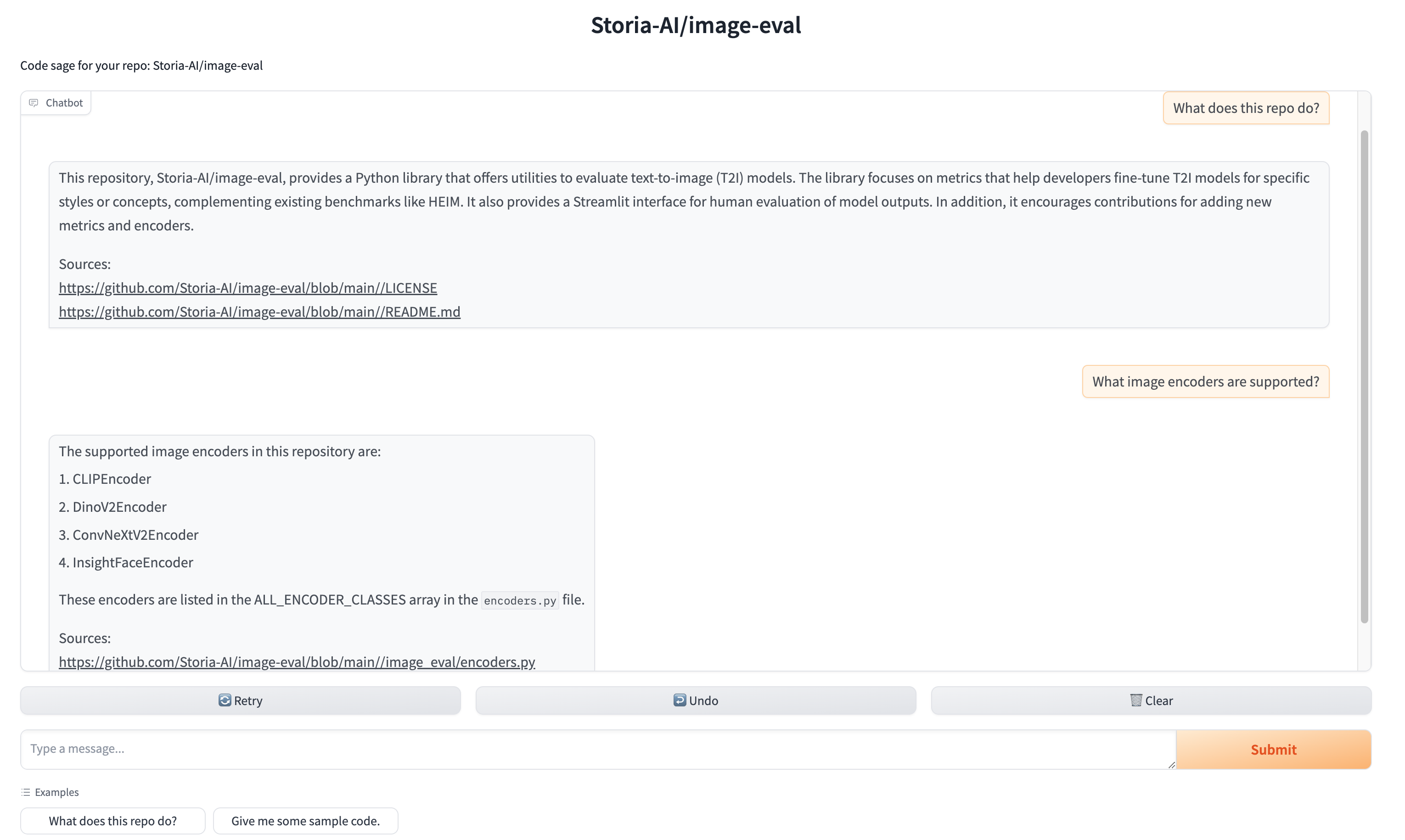

We're working to make all code on the internet searchable and understandable for devs. You can check out our early product, Code Sage. We pre-indexed a slew of OSS repos, and you can index your desired ones by simply pasting a GitHub URL.

If you're the maintainer of an OSS repo and would like a dedicated page on Code Sage (e.g. sage.storia.ai/your-repo), then send us a message at [email protected]. We'll do it for free!

We built the code purposefully modular so that you can plug in your desired embedding, LLM, and vector store providers by simply implementing the relevant abstract classes.
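As a sketch of what plugging in a provider through an abstract class can look like, the example below defines a small vector store interface and an in-memory implementation. The method names upsert and search, their signatures, and the InMemoryVectorStore class are assumptions for illustration; check the repo's actual VectorStore class for the real interface.

```python
from abc import ABC, abstractmethod


class VectorStore(ABC):
    """Hypothetical interface; the repo's real abstract class may differ."""

    @abstractmethod
    def upsert(self, items):
        """Store (id, vector, metadata) triples."""

    @abstractmethod
    def search(self, vector, top_k=3):
        """Return the (id, metadata) pairs closest to the given vector."""


class InMemoryVectorStore(VectorStore):
    """Toy provider that keeps everything in a dict and scores by dot product."""

    def __init__(self):
        self._items = {}

    def upsert(self, items):
        for item_id, vector, metadata in items:
            self._items[item_id] = (vector, metadata)

    def search(self, vector, top_k=3):
        def dot(a, b):
            return sum(x * y for x, y in zip(a, b))

        scored = sorted(
            self._items.items(),
            key=lambda kv: dot(vector, kv[1][0]),
            reverse=True,
        )
        return [(item_id, metadata) for item_id, (_, metadata) in scored[:top_k]]


store = InMemoryVectorStore()
store.upsert([
    ("a", [1.0, 0.0], {"file": "a.py"}),
    ("b", [0.0, 1.0], {"file": "b.py"}),
])
print(store.search([0.9, 0.1], top_k=1))
```

Code that depends only on the abstract class keeps working when one provider is swapped for another.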

Feel free to send feature requests to [email protected] or make a pull request!