This repository is the official PyTorch implementation of RGINP.

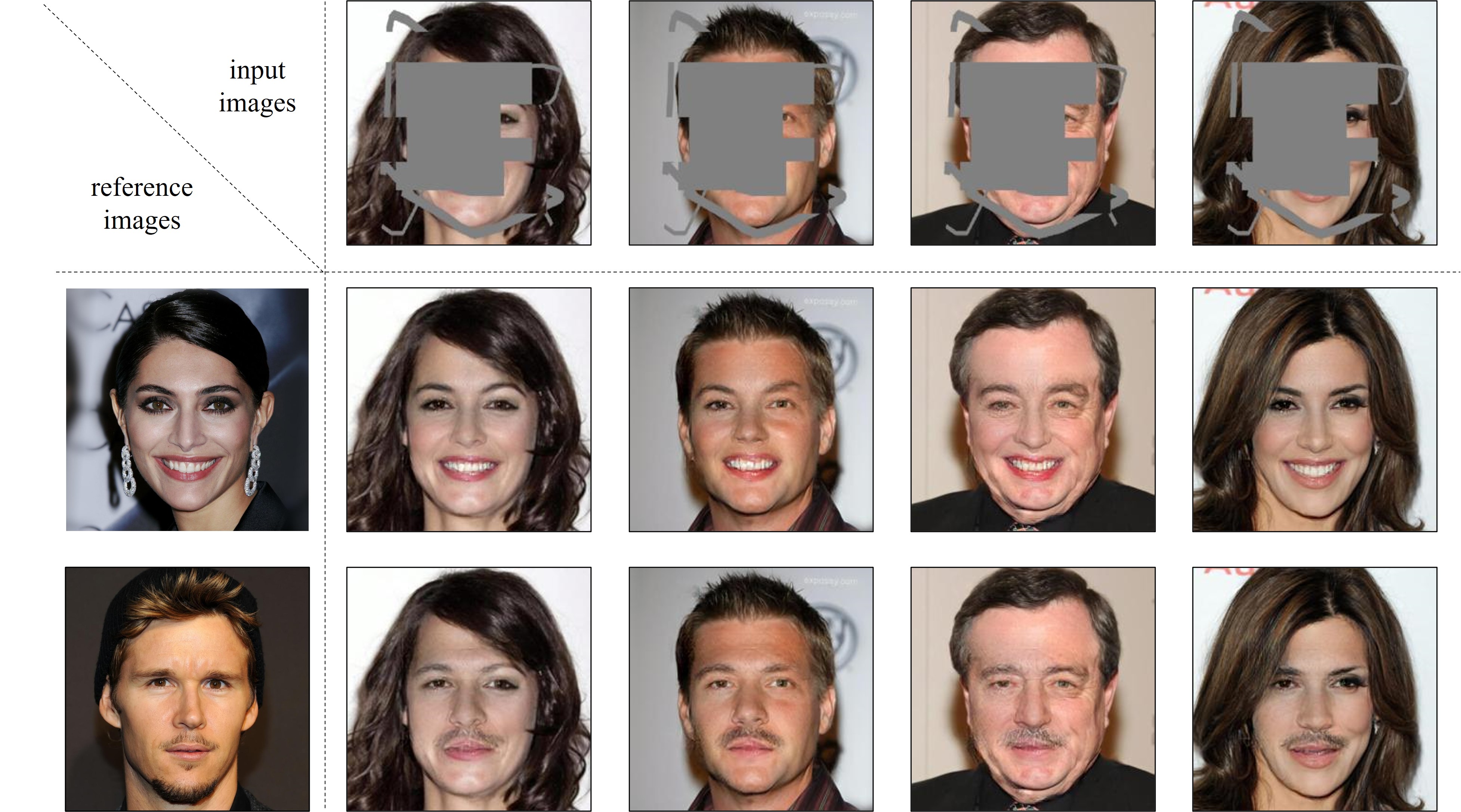

"Reference Guided Image Inpainting using Facial Attributes"

Dongsik Yoon, Jeong-gi Kwak, Yuanming Li, David K Han, Youngsaeng Jin and Hanseok Ko

British Machine Vision Conference (BMVC), 2021

- pytorch

- numpy

- Python3

- munch

- Pillow

All experiments in the paper use the CelebA-HQ dataset and the Quick Draw Irregular Mask dataset.

Please download the datasets and arrange them as shown below.

To train and evaluate on different images, modify dataloader.py.

    --data
        --train
            --CelebAMask-HQ-attribute-anno.txt
            --CelebA_HQ
                --0.jpg
                ⋮
                --27999.jpg
        --test
            --CelebA_HQ
                --28000.jpg
                ⋮
                --29999.jpg
        --masks
            --train
                --00000_train.png
                ⋮
            --test
                --00000_test.png
                ⋮
    --RGINP
        ⋮
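The train/test split in the listing above (images 0 to 27999 for training, 28000 to 29999 for testing) can be prepared with a short script. This is a minimal sketch, not part of the repository: the function names `target_dir` and `split_celeba_hq` are hypothetical, and it assumes the downloaded images are named `<index>.jpg` and that the `train` and `test` folders sit directly under `data`.

```python
from pathlib import Path
import shutil

TRAIN_COUNT = 28000  # 0.jpg .. 27999.jpg go to train, the rest to test


def target_dir(image_name: str, data_root: Path) -> Path:
    """Return the split folder for an image named like '123.jpg'."""
    index = int(Path(image_name).stem)  # raises ValueError for non-numeric names
    split = "train" if index < TRAIN_COUNT else "test"
    return data_root / split / "CelebA_HQ"


def split_celeba_hq(source_dir: Path, data_root: Path) -> int:
    """Copy every <index>.jpg from source_dir into the train/test folders."""
    copied = 0
    for image_path in sorted(source_dir.glob("*.jpg")):
        destination = target_dir(image_path.name, data_root)
        destination.mkdir(parents=True, exist_ok=True)
        shutil.copy2(image_path, destination / image_path.name)
        copied += 1
    return copied
```

For example, `split_celeba_hq(Path("downloads/CelebA-HQ-img"), Path("data"))` would fill `data/train/CelebA_HQ` and `data/test/CelebA_HQ`; the source path here is only a placeholder.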

Please select the desired attributes from the CelebAMask-HQ-attribute-anno.txt.
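Before choosing attributes, it can help to list the names the annotation file actually contains. The sketch below is not taken from the repository: `read_attribute_names` and `check_selected` are hypothetical helpers, and the file layout (image count on the first line, attribute names on the second) is an assumption based on the usual CelebA attribute list convention.

```python
from pathlib import Path


def read_attribute_names(anno_path: Path) -> list:
    """Return the attribute names from the header of the annotation file."""
    with open(anno_path, encoding="utf-8") as handle:
        handle.readline()                 # assumed: first line is the image count
        return handle.readline().split()  # assumed: second line lists the names


def check_selected(selected: list, available: list) -> list:
    """Return the selected names that do not appear in the annotation file."""
    return [name for name in selected if name not in available]
```

An empty result from `check_selected` means every chosen attribute exists in the file; any name it returns is likely a typo.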

The default attributes of this experiment are as follows.

    attrs_default = ['Bushy_Eyebrows',
                     'Mouth_Slightly_Open',
                     'Big_Lips',
                     'Male',
                     'Mustache',
                     'Young',
                     'Smiling',
                     'Wearing_Lipstick',
                     'No_Beard']

To train the model:

    python main.py --mode train

To resume training from a saved iteration:

    python main.py --mode train --resume_iter 52500

To validate the model:

    python main.py --mode val --resume_iter 200000

To test the model, you need to provide an input image, a reference image, and a mask file.

Please make sure that the mask file covers the entire mask region in the input image.

The three files must share the same file name and be arranged as shown below.

    --RGINP
        ⋮
        --user_test
            --test_result
            --user_input
                --image
                    --ref
                        --sample.jpg # reference image
                    --src
                        --sample.jpg # input image
                --mask
                    --sample.jpg # mask image
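Since the input image, reference image, and mask must share one file name, a quick check before testing can catch mismatches. This is a minimal sketch rather than repository code: `unmatched_names` is a hypothetical helper, and the three folders are passed in explicitly so that it makes no assumption about how they are nested.

```python
from pathlib import Path


def unmatched_names(src_dir: Path, ref_dir: Path, mask_dir: Path) -> dict:
    """Map each folder label to the file names missing from at least one other folder."""
    found = {
        "src": {p.name for p in src_dir.iterdir() if p.is_file()},
        "ref": {p.name for p in ref_dir.iterdir() if p.is_file()},
        "mask": {p.name for p in mask_dir.iterdir() if p.is_file()},
    }
    # Names present in all three folders form complete test samples.
    shared = found["src"] & found["ref"] & found["mask"]
    return {label: sorted(names - shared) for label, names in found.items()}
```

If every list in the returned dictionary is empty, each sample has its input, reference, and mask file.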

To test the model:

    python main.py --mode test --resume_iter 200000

Citation:

    @article{bmvc2021_RGINP,
      title={Reference Guided Image Inpainting using Facial Attributes},
      author={Yoon, Dongsik and Kwak, Jeonggi and Li, Yuanming and Han, David and Jin, Youngsaeng and Ko, Hanseok},
      journal={arXiv preprint arXiv:2301.08044},
      year={2023}
    }

RGINP is built upon the LBAM implementation and inspired by MLGN.

We appreciate the authors' excellent work!