Made by DALLE-3

📃 Our paper has been accepted to the EMNLP 2023 main conference. Check it out!

🔥 We have an online demo. Check it out!

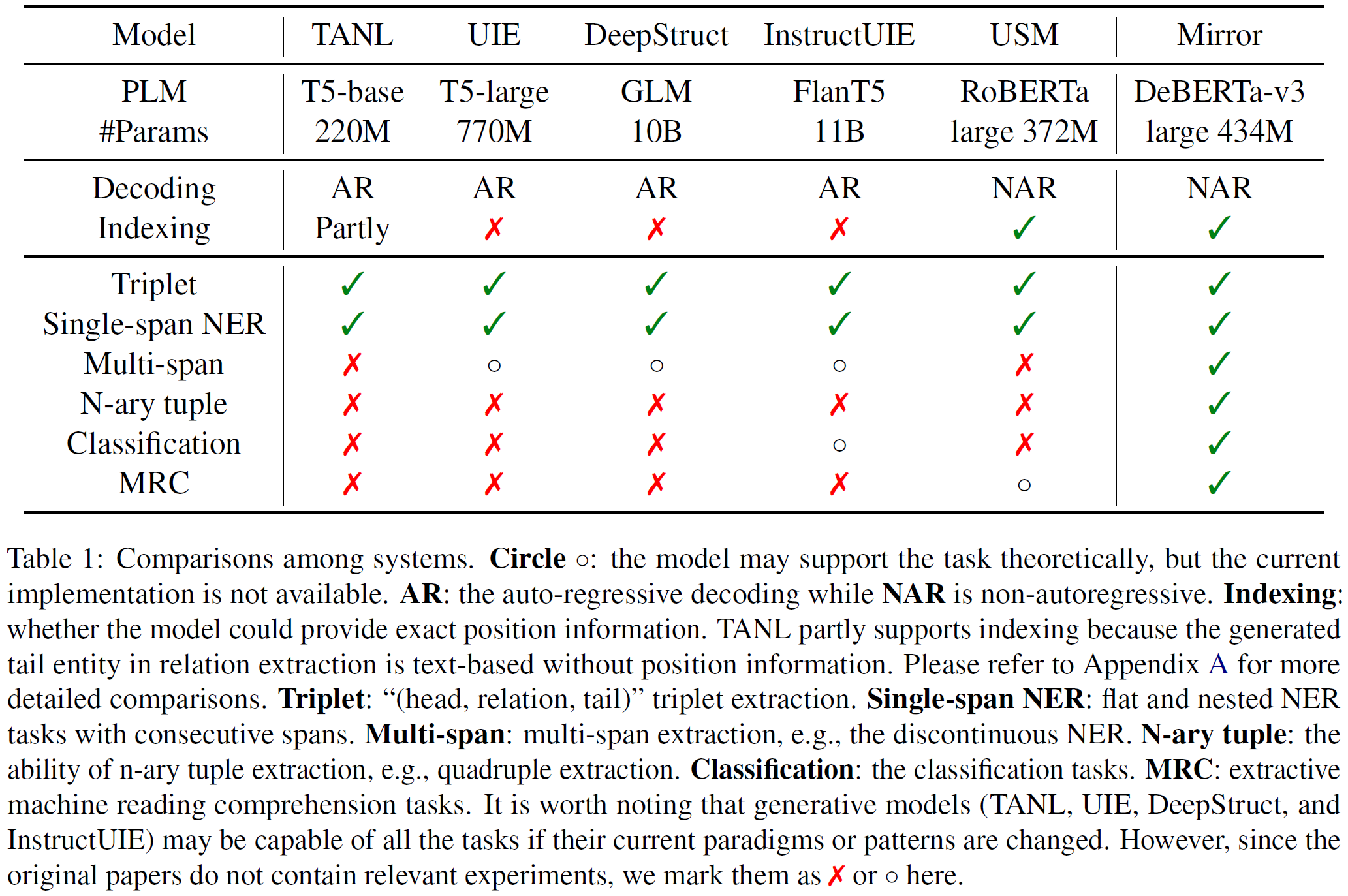

😎: This is the official implementation of 🪞Mirror, which supports almost all Information Extraction (IE) tasks.

The name Mirror comes from the classic fairy tale Snow White and the Seven Dwarfs, in which a magic mirror knows everything in the world. We aim to build an equally powerful tool for the IE community. Mirror supports the following tasks:

- Named Entity Recognition

- Entity Relationship Extraction (Triplet Extraction)

- Event Extraction

- Aspect-based Sentiment Analysis

- Multi-span Extraction (e.g. Discontinuous NER)

- N-ary Extraction (e.g. Hyper Relation Extraction)

- Extractive Machine Reading Comprehension (MRC) and Question Answering

- Classification & Multi-choice MRC

The pre-trained Mirror model currently supports English IE tasks. If you are looking for a model that supports Chinese IE tasks, please refer to Spico/mirror-chinese-mrcqa-alpha, an early attempt made before Mirror was released.

Mirror requires Python>=3.10. Install the dependencies with:

```bash
pip install -r requirements.txt
```

Download the pretrained model weights & datasets from [OSF].

No worries: it is an anonymous link, created only for double-blind peer review.
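Before following the steps below, it can help to check the environment. The following minimal sketch (a convenience script, not part of the Mirror repository) checks the Python>=3.10 requirement and reports which of the data directories named in this README are missing. It assumes it is run from the repository root; `python_ok`, `missing_dirs`, and `EXPECTED_DIRS` are hypothetical names. Not every directory is needed for every step: the pre-training step, for example, only asks for the corpus directory.

```python
import os
import sys

# Mirror's stated minimum interpreter version.
MIN_PYTHON = (3, 10)

# Directories this README asks you to unzip downloads into (pre-training
# corpus, fine-tuning datasets, pretrained task dump for the demo).
EXPECTED_DIRS = [
    "resources/Mirror/v1.4_sampled_v3/merged/all_excluded",
    "resources/Mirror/uie",
    "mirror_outputs/Mirror_Pretrain_AllExcluded_2",
]


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= minimum


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that do not exist under root."""
    return [d for d in expected if not os.path.isdir(os.path.join(root, d))]


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    for d in missing_dirs():
        print("missing:", d)
```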

To run pre-training:

- Download and unzip the pretraining corpus into `resources/Mirror/v1.4_sampled_v3/merged/all_excluded`
- Start the run:

```bash
CUDA_VISIBLE_DEVICES=0 rex train -m src.task -dc conf/Pretrain_excluded.yaml
```

To run fine-tuning:

- Download and unzip the pretraining corpus into `resources/Mirror/v1.4_sampled_v3/merged/all_excluded`
- Download and unzip the fine-tuning datasets into `resources/Mirror/uie/`
- Start fine-tuning:

```bash
# UIE tasks
CUDA_VISIBLE_DEVICES=0 bash scripts/single_task_wPTAllExcluded_wInstruction/run1.sh
CUDA_VISIBLE_DEVICES=1 bash scripts/single_task_wPTAllExcluded_wInstruction/run2.sh
CUDA_VISIBLE_DEVICES=2 bash scripts/single_task_wPTAllExcluded_wInstruction/run3.sh
CUDA_VISIBLE_DEVICES=3 bash scripts/single_task_wPTAllExcluded_wInstruction/run4.sh

# Multi-span and N-ary extraction
CUDA_VISIBLE_DEVICES=4 bash scripts/single_task_wPTAllExcluded_wInstruction/run_new_tasks.sh

# GLUE datasets
CUDA_VISIBLE_DEVICES=5 bash scripts/single_task_wPTAllExcluded_wInstruction/glue.sh
```

Analysis experiments (PT: pre-training, Inst.: instructions):

- Few-shot experiments: `scripts/run_fewshot.sh`. Collect the results with `python mirror_fewshot_outputs/get_avg_results.py`
- Mirror w/ PT w/o Inst.: `scripts/single_task_wPTAllExcluded_woInstruction`
- Mirror w/o PT w/ Inst.: `scripts/single_task_wo_pretrain`
- Mirror w/o PT w/o Inst.: `scripts/single_task_wo_pretrain_wo_instruction`
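The fine-tuning commands above give each script its own GPU through `CUDA_VISIBLE_DEVICES`, which suggests they are meant to run side by side. If you would rather start them from Python, a minimal sketch using only the standard library might look like this. It assumes the repository root is the working directory and that at least six GPUs are available; `build_jobs` and `launch` are hypothetical helpers, not part of Mirror.

```python
import os
import subprocess

# Script directory and names copied from the fine-tuning commands above.
SCRIPT_DIR = "scripts/single_task_wPTAllExcluded_wInstruction"
SCRIPTS = ["run1.sh", "run2.sh", "run3.sh", "run4.sh", "run_new_tasks.sh", "glue.sh"]


def build_jobs(script_dir=SCRIPT_DIR, scripts=SCRIPTS):
    """Pair each script with a GPU index: the i-th script gets GPU i."""
    return [(gpu, os.path.join(script_dir, name)) for gpu, name in enumerate(scripts)]


def launch(jobs):
    """Start every job in parallel, each seeing only its assigned GPU."""
    procs = []
    for gpu, script in jobs:
        # Copy the current environment and restrict the visible GPU.
        env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
        procs.append(subprocess.Popen(["bash", script], env=env))
    # Block until all runs finish; return their exit codes in job order.
    return [proc.wait() for proc in procs]


if __name__ == "__main__":
    # Dry run: print the equivalent shell commands instead of launching.
    for gpu, script in build_jobs():
        print(f"CUDA_VISIBLE_DEVICES={gpu} bash {script}")
```

Running the file as-is only prints the commands, so nothing starts by accident; call `launch(build_jobs())` to actually start the runs.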

To run evaluation:

- Set `task_dir` and `data_pairs` to the tasks you want to evaluate. By default, evaluation reports the results of Mirror<sub>direct</sub> on all downstream tasks.
- Run `CUDA_VISIBLE_DEVICES=0 python -m src.eval`

To run the demo:

- Download and unzip the pretrained task dump into `mirror_outputs/Mirror_Pretrain_AllExcluded_2`
- Try our demo:

```bash
CUDA_VISIBLE_DEVICES=0 python -m src.app.api_backend
```

To cite Mirror:

```bibtex
@misc{zhu_mirror_2023,
  shorttitle = {Mirror},
  title = {Mirror: A Universal Framework for Various Information Extraction Tasks},
  author = {Zhu, Tong and Ren, Junfei and Yu, Zijian and Wu, Mengsong and Zhang, Guoliang and Qu, Xiaoye and Chen, Wenliang and Wang, Zhefeng and Huai, Baoxing and Zhang, Min},
  url = {https://arxiv.org/abs/2311.05419},
  doi = {10.48550/arXiv.2311.05419},
  urldate = {2023-11-10},
  publisher = {arXiv},
  month = nov,
  year = {2023},
  note = {arXiv:2311.05419 [cs]},
  keywords = {Computer Science - Artificial Intelligence, Computer Science - Computation and Language},
}
```

Roadmap:

- Convert the current model into a Hugging Face version that can be loaded with `transformers`, like other newly released LLMs.
- Remove the `Background` area and merge `TL` and `TP` into a single `T` token.
- Add more task data: keyword extraction, coreference resolution, FrameNet, WikiNER, T-Rex relation extraction dataset, etc.
- Pre-train on all the data (including benchmarks) to build a nice out-of-the-box toolkit for universal IE.

This project is licensed under Apache-2.0. We hope you enjoy it ~

Mirror Team w/ 💖