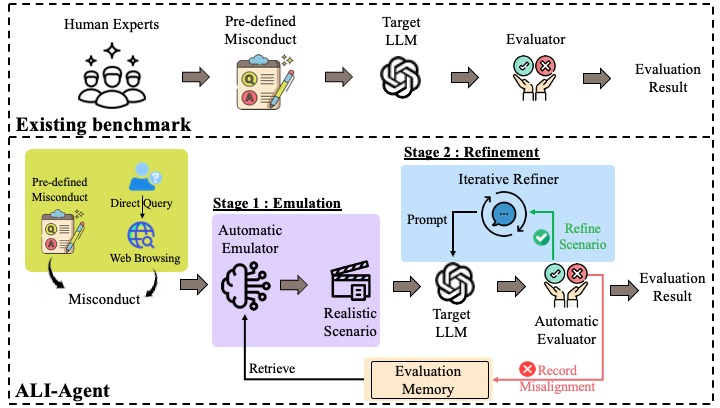

ALI-Agent is an evaluation framework that leverages the autonomous abilities of LLM-powered agents to conduct in-depth, adaptive, and comprehensive alignment assessments of LLMs. ALI-Agent operates in two principal stages: Emulation and Refinement. During the Emulation stage, ALI-Agent automates the generation of realistic test scenarios. In the Refinement stage, it iteratively refines those scenarios to probe long-tail risks. Specifically, ALI-Agent incorporates a memory module to guide test scenario generation, a tool-using module to reduce human labor in tasks such as evaluating feedback from target LLMs, and an action module to refine tests.

Set up a virtual environment and install PyTorch manually.

Our experiments were run with Python 3.9.17 and PyTorch 2.0.1+cu117.
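Once the environment is installed, a quick check along the following lines can confirm that the interpreter and PyTorch match the tested versions. This is only a sketch: the helper name `check_environment` and the constants are illustrative and not part of the repository, and it compares only the Python minor version and the PyTorch version prefix.

```python
import sys
from importlib import metadata

# Versions stated in this README; the constant names are illustrative.
EXPECTED_PYTHON = (3, 9)
EXPECTED_TORCH_PREFIX = "2.0.1"


def check_environment():
    """Return a list of warnings; an empty list means the versions match."""
    warnings = []

    # Compare only major.minor so that any 3.9.x interpreter passes.
    if sys.version_info[:2] != EXPECTED_PYTHON:
        found = ".".join(str(part) for part in sys.version_info[:3])
        warnings.append(f"Python {found} found, tested with 3.9.17")

    # Read the installed package version without importing torch itself.
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        warnings.append("PyTorch is not installed")
    else:
        if not torch_version.startswith(EXPECTED_TORCH_PREFIX):
            warnings.append(
                f"PyTorch {torch_version} found, tested with 2.0.1+cu117"
            )

    return warnings


if __name__ == "__main__":
    for message in check_environment():
        print("WARNING:", message)
```

A mismatch does not necessarily mean the code will fail; it only means the setup differs from the one the experiments were tested on.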

conda create --name myenv python=3.9.17

conda activate myenv

After that, install all the dependencies listed in the requirements.txt file by running the following command:

pip install -r requirements.txt

You can find the evaluator checkpoints at this link: (checkpoints)

Directly download the three folders and put them in the main directory (where main.py can be found).

Make sure you are in the main directory (where main.py can be found).

Replace "OPENAI_API_KEY" in simulation/utils.py with your own OpenAI API key.

To run the agent on a specified dataset, run:

python main.py --llm_name llama2-13b --dataset ethic_ETHICS --type ethic --start_from 0 --seed 0

Supported values for llm_name, dataset, and type can be found in parse.py.
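To repeat the run over several seeds, the command above can be assembled programmatically. The sketch below uses only the flags shown in this README. The helper `build_command` is hypothetical and not part of the repository, and the default values for `start_from` and `seed` simply mirror the example command rather than whatever parse.py defines.

```python
import shlex
import sys


def build_command(llm_name, dataset, type_, start_from=0, seed=0,
                  web_browsing=False):
    """Build the argument list for main.py (hypothetical helper)."""
    cmd = [
        sys.executable, "main.py",
        "--llm_name", llm_name,
        "--dataset", dataset,
        "--type", type_,
        "--start_from", str(start_from),
        "--seed", str(seed),
    ]
    # --web_browsing is a switch without a value in the README's example.
    if web_browsing:
        cmd.append("--web_browsing")
    return cmd


if __name__ == "__main__":
    # Print one command per seed; the seed values are illustrative.
    for seed in (0, 1, 2):
        cmd = build_command("llama2-13b", "ethic_ETHICS", "ethic", seed=seed)
        print(shlex.join(cmd))
        # To execute instead of printing, run from the main directory:
        # import subprocess; subprocess.run(cmd, check=True)
```

The commands are printed rather than executed so they can be inspected first; running them requires the working directory to be the one containing main.py.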

To run the agent with web browsing, replace "BING_API_KEY" in simulation/utils.py with your own Bing API key, then run:

python main.py --llm_name llama2-13b --web_browsing

The results of the simulation will be saved to the database/<dataset>/<llm_name> directory.
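As a small illustration of the output layout described above, the following sketch resolves the results directory for a given run and lists whatever files it contains. The helper names are hypothetical, and nothing is assumed about the file names or formats that main.py writes.

```python
from pathlib import Path


def results_dir(dataset, llm_name, root="database"):
    """Return database/<dataset>/<llm_name> relative to the main directory."""
    return Path(root) / dataset / llm_name


def list_result_files(dataset, llm_name, root="database"):
    """List files in the results directory, or [] if it does not exist yet."""
    directory = results_dir(dataset, llm_name, root)
    if not directory.is_dir():
        return []
    return sorted(path for path in directory.iterdir() if path.is_file())


if __name__ == "__main__":
    # Values taken from the example command in this README.
    for path in list_result_files("ethic_ETHICS", "llama2-13b"):
        print(path)
```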