This repo is forked from the DPO repo. However, this repo is NOT to explore alignment of LLMs, but rather to explore what the LLMs are learning during SFT.

It has long been argued that SFT is enough for alignment, i.e. LLMs can generate "PROPER RESPONSES" after SFT. Yet, in a previous experiment of my own, I observed that the likelihood of the "negative responses" (or "improper responses") also keeps increasing during SFT, even though the learning target is ONLY the positive/preferred responses. This leads to the question behind this repo: what is the LLM really learning during SFT?

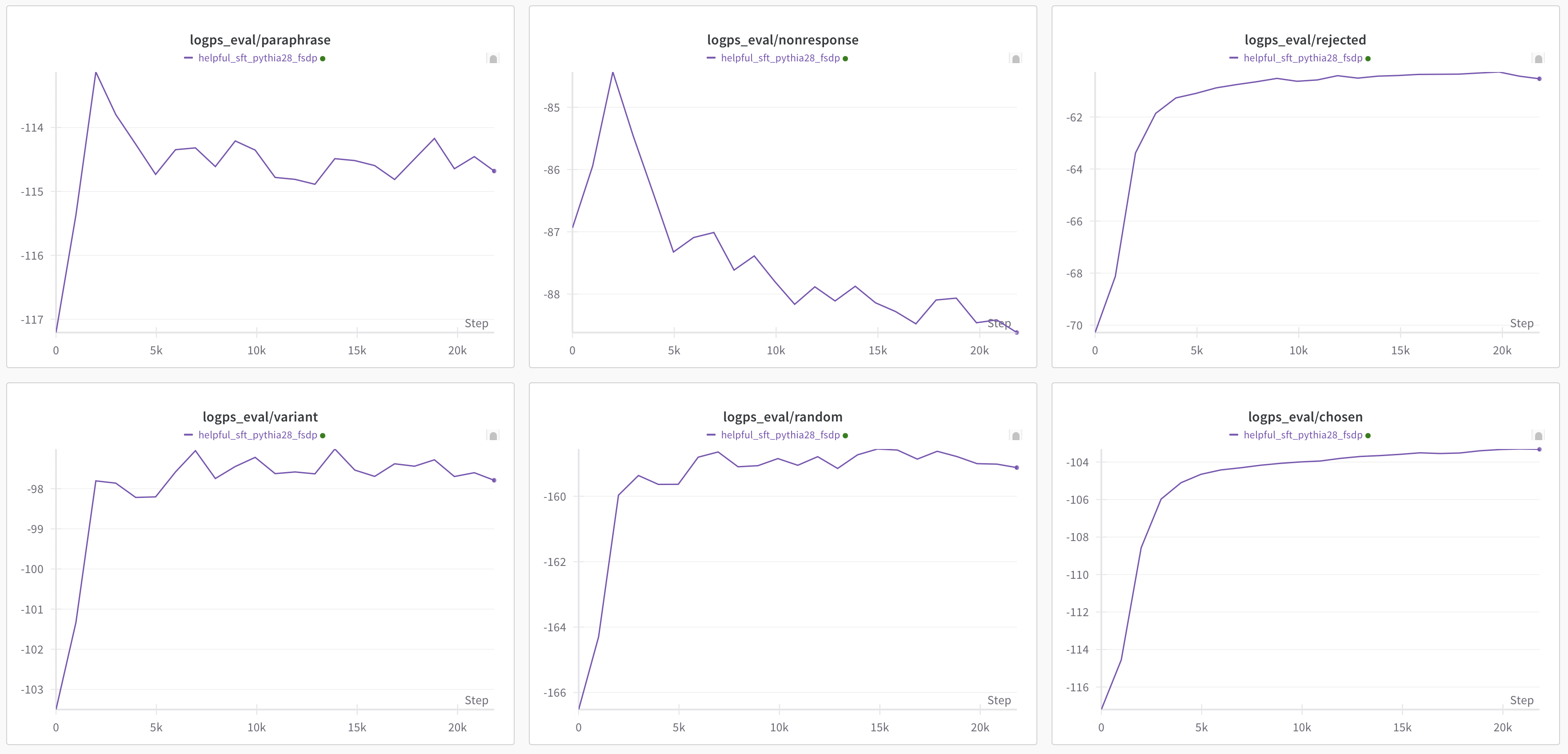

More specifically, I wonder whether the LLM is learning to generate the "proper responses" or just "responses". To answer this question, I construct synthetic data following the method introduced in the next section, and then train the LLMs on only the "proper responses" with SFT. The likelihood of the various kinds of responses over SFT is then recorded and shown at the end of this README.

More broadly, the key hypothesis can be generalised as:

Hypothesis: given a set of samples $\mathbb{D}=\{(x_i,y_i)\}_{i=1}^N$ generated by a target function $f_{tgt}$, if we train a model on $\mathbb{D}$, the model is not necessarily learning $f_{tgt}$, but rather a simpler function $f_{smp}$ that can also generate $\mathbb{D}$.

The above hypothesis says that the dataset

So far, the unclear and interesting part is how we can tell the exact function that the model is learning through SFT on $\mathbb{D}$.

To answer the question above, beyond the "proper/chosen responses" to the prompts in the HH/helpful-base dataset from Anthropic, I also need to synthesise more data on top of it.

Following the function hypothesis above, I hereby consider from the perspective that

For a given prompt $x_i$, besides the proper (chosen) response $y_i^+$, the following kinds of responses are considered:

- Rejected response $y_i^-$: a response that is also sampled from $f_{tgt}(x_i)$, but is less preferred by the user than $y_i^+$.

- Paraphrase of rejected response $\tilde{y}_i^-$: a paraphrase of the REJECTED response $y_i^-$, done by Gemini-Pro. $\tilde{y}_i^-$ should be in $\mathcal{F}$, but is further away from the distribution specified by $f_{tgt}(x_i)$ than $y_i^+$ and $y_i^-$.

- Variant response $y_i'$: a response generated by Gemini-Pro to $x_i$, thus $y_i'\in\mathcal{F}$, but it should be out of the distribution specified by $f_{tgt}(x_i)$.

- Random response $y_j^+$: the preferred response to a randomly selected prompt $x_j$. N.B. the index is $j$ here instead of $i$, thus $y_j^+\in\mathcal{F}$ but is mapped from a different prompt $x_j$. $y_j^+$ should be totally out of the distribution specified by $f_{tgt}(x_i)$.

- Non-response $\bar{y}_i$: a random sentence generated by Gemini-Pro, thus not a response, i.e. $\bar{y}_i\notin\mathcal{F}$.
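As an illustration of the "random response" construction, here is a minimal sketch that pairs each prompt $x_i$ with the preferred response of a different, randomly chosen prompt $x_j$ ($j\neq i$). The function name `sample_random_responses` and the `"prompt"` / `"chosen"` keys are assumptions made for this example only, not the actual data format or code used in this repo.

```python
import random


def sample_random_responses(examples, seed=0):
    """Pair every prompt x_i with the chosen response y_j^+ of another prompt.

    `examples` is assumed to be a list of dicts with hypothetical keys
    "prompt" and "chosen"; the index j is drawn uniformly from all j != i.
    """
    n = len(examples)
    if n < 2:
        raise ValueError("need at least two examples to pick j != i")
    rng = random.Random(seed)
    paired = []
    for i in range(n):
        # Draw from n - 1 slots, then skip over i so that j != i always holds.
        j = rng.randrange(n - 1)
        if j >= i:
            j += 1
        paired.append(
            {
                "prompt": examples[i]["prompt"],
                "random_response": examples[j]["chosen"],
                "source_index": j,
            }
        )
    return paired
```

Keeping `source_index` makes it easy to check afterwards that no prompt was paired with its own response.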

Now, let's see how the likelihood of the above kinds of responses changes over SFT.

N.B. the learning target is ONLY the "proper responses" $y_i^+$.
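For reference, the log-probability of a response can be computed from per-token logits as sketched below. This README does not say whether the log-probabilities are summed or averaged over tokens; the sketch assumes a plain sum over the response tokens, with the logits already conditioned on the prompt and the preceding response tokens. The names `log_softmax` and `response_log_prob` are illustrative, not functions from this repo.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one vocabulary-sized list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def response_log_prob(step_logits, response_token_ids):
    """Sum of log p(y_t | x, y_<t) over the response tokens.

    step_logits[t] holds the model's logits for response position t
    (assumed to be conditioned on the prompt and earlier response tokens);
    response_token_ids[t] is the token actually observed at that position.
    """
    if len(step_logits) != len(response_token_ids):
        raise ValueError("one logits vector is required per response token")
    total = 0.0
    for logits, token_id in zip(step_logits, response_token_ids):
        total += log_softmax(logits)[token_id]
    return total
```

Evaluating this quantity for each kind of response at several checkpoints gives one curve per response type over the course of SFT.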

As can be seen from the above figure, the log-probabilities of various kinds of responses change in different ways over SFT.

Briefly speaking, all of them increase over SFT, except for the "non-response" $\bar{y}_i$.

The results above suggest that SFT is actually learning to generate "responses" in general, rather than only the "responses" to the given prompt $x_i$.

More interestingly, the log-probability of "rejected responses" GitHub-Copilot)

Overall, the results suggest that SFT alone is not enough for alignment, and that a separate alignment step is still necessary.

- Discuss the degrees of the changes of log-probabilities of various responses.

- Figure out how to define the "simplicity" of a function for a given model.

- Figure out how to construct a dataset $\mathbb{D}$ that can represent only $f_{tgt}$, i.e. $\mathcal{F}=\{f_{tgt}\}$.