The problem of global motion estimation (GME) deals with the separation, in a video sequence, of two different types of motion: the egomotion of the camera recording the video, and the actual motion of the objects recorded. The literature presents a number of possible approaches to the GME problem; here, we aim to combine some of the most effective strategies to compute the motion of the camera.

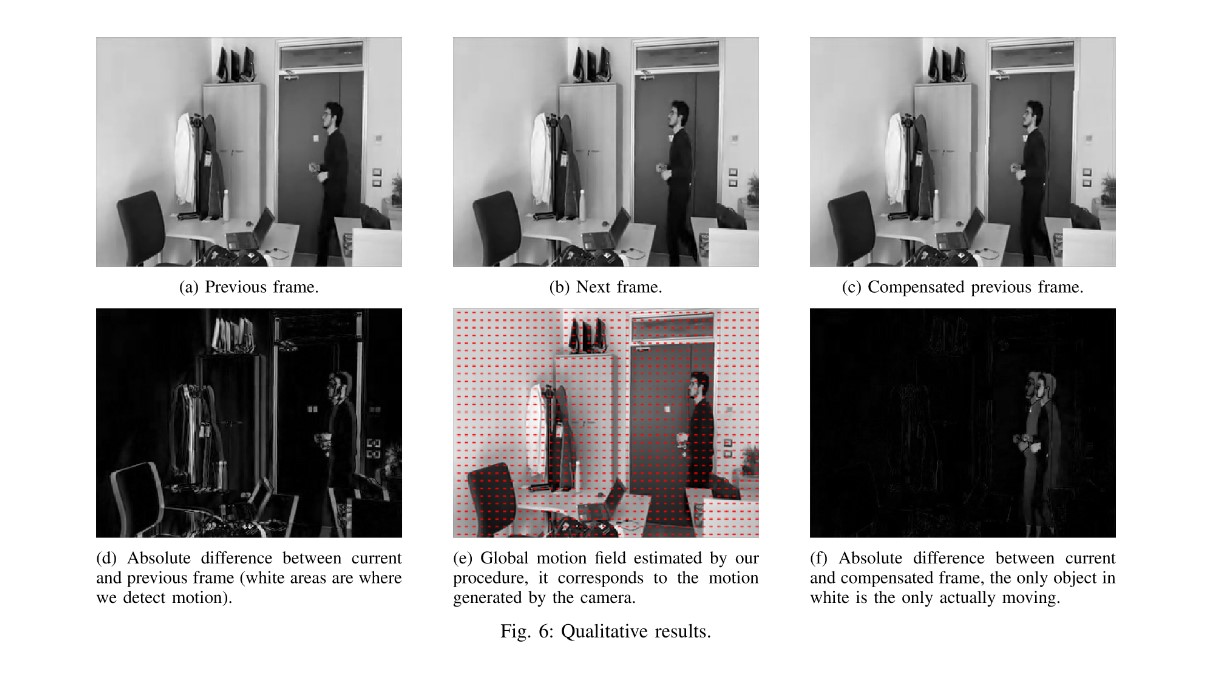

We adopt an indirect approach based on the affine motion model. Following a multi-resolution approach, at each pyramid level we compute the parameters of the motion model that minimize the error with respect to the motion field. We then use those parameters to detect the outliers of the motion field at the next level, and we exclude the detected outliers when computing the optimal parameters at that level, which makes the estimation robust. Results are reported both qualitatively and quantitatively.
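To make the affine model concrete, here is a minimal NumPy sketch, not the code in `motion.py`. It assumes the common six-parameter form `u = a0 + a1*x + a2*y`, `v = a3 + a4*x + a5*y` (the parameter ordering used by the project may differ) and fits the parameters by ordinary least squares to a sampled motion field, such as one block matching vector per block, skipping the samples flagged as outliers. The function names `affine_field` and `fit_affine` are hypothetical.

```python
import numpy as np


def affine_field(a, xs, ys):
    """Motion vectors (u, v) predicted by the six-parameter affine model.

    Assumed (hypothetical) parameter ordering:
        u = a[0] + a[1]*x + a[2]*y
        v = a[3] + a[4]*x + a[5]*y
    """
    u = a[0] + a[1] * xs + a[2] * ys
    v = a[3] + a[4] * xs + a[5] * ys
    return u, v


def fit_affine(xs, ys, us, vs, outliers=None):
    """Least-squares affine parameters from sampled motion vectors.

    xs, ys: sample positions; us, vs: measured motion (e.g. from BBME);
    outliers: optional boolean mask of samples to leave out of the fit.
    """
    xs, ys, us, vs = (np.asarray(t, dtype=float).ravel() for t in (xs, ys, us, vs))
    if outliers is None:
        keep = np.ones(xs.shape, dtype=bool)
    else:
        keep = ~np.asarray(outliers, dtype=bool).ravel()
    # Design matrix [1, x, y], shared by the u and v equations.
    A = np.column_stack([np.ones(keep.sum()), xs[keep], ys[keep]])
    params_u, *_ = np.linalg.lstsq(A, us[keep], rcond=None)
    params_v, *_ = np.linalg.lstsq(A, vs[keep], rcond=None)
    return np.concatenate([params_u, params_v])


# Synthetic check: a small zoom plus pan, with one vector corrupted as if it
# belonged to an independently moving object.
ys, xs = np.mgrid[0:64:8, 0:64:8]
true_a = np.array([2.0, 0.01, 0.0, -1.0, 0.0, 0.01])
us, vs = affine_field(true_a, xs, ys)
us[0, 0] += 50.0
mask = np.zeros(us.shape, dtype=bool)
mask[0, 0] = True
print(np.allclose(fit_affine(xs, ys, us, vs, outliers=mask), true_a))  # True
print(np.allclose(fit_affine(xs, ys, us, vs), true_a))  # False
```

The second call shows why outlier removal matters: a single foreign vector is enough to bias a plain least-squares fit.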

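```python
# Pseudocode: the helper functions are part of the project and not shown here.
# Input: previous_frame, current_frame
# Output: a (parameter vector of the affine motion model)
def global_motion_estimation(previous_frame, current_frame):
    # image pyramids of the two frames (assumed to yield coarsest level first)
    prev_pyrs = get_pyr(previous_frame)
    cur_pyrs = get_pyr(current_frame)

    # first estimate of the parameters at the coarsest level
    a = first_estimation(next(prev_pyrs), next(cur_pyrs))

    # refine the estimate on each remaining level
    for prev_frame, cur_frame in zip(prev_pyrs, cur_pyrs):
        # reference motion field computed by block matching
        ground_truth_mfield = BBME(prev_frame, cur_frame)
        # motion field predicted by the current parameters
        estimated_mfield = affine(a)
        # vectors that disagree with the model are treated as outliers
        outliers = detect_outliers(ground_truth_mfield, estimated_mfield)
        # new parameters, estimated without the outliers
        a = minimize_error(prev_frame, cur_frame, outliers)

    return a
```

The project report (in the `docs` folder) gives more details on both the theoretical foundations and the implementation.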
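The `detect_outliers` step of the pseudocode can be implemented in many ways. As one hedged sketch, assuming both motion fields are stored as arrays of shape (rows, cols, 2) holding one (u, v) vector per block, a block can be flagged when the distance between its block matching vector and the model prediction exceeds `k` times the median distance. The rule itself, `k = 2` and the half-pixel floor `min_thr` are illustrative assumptions, not values taken from `motion.py`.

```python
import numpy as np


def detect_outliers(bbme_field, model_field, k=2.0, min_thr=0.5):
    """Boolean mask (rows, cols) of blocks that do not follow the global motion.

    bbme_field, model_field: arrays of shape (rows, cols, 2) with one (u, v)
    vector per block. A block is an outlier when its Euclidean error exceeds
    k times the median error; min_thr (in pixels) keeps a near-perfect fit
    from turning rounding noise into outliers.
    """
    diff = np.asarray(bbme_field, dtype=float) - np.asarray(model_field, dtype=float)
    err = np.linalg.norm(diff, axis=-1)
    threshold = max(k * np.median(err), min_thr)
    return err > threshold


# Usage: uniform pan of 1 pixel, with one block moving independently.
model = np.zeros((4, 4, 2))
model[..., 0] = 1.0
bbme = model.copy()
bbme[1, 2] = [10.0, 0.0]
print(np.argwhere(detect_outliers(bbme, model)))  # [[1 2]]
```

Using the median rather than the mean keeps the threshold from being inflated by the very outliers it is meant to catch.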

The package is organized as follows:

- `bbme.py`: module containing all the functions related to the various block matching motion estimation procedures we tested;
- `motion.py`: module containing all the functions and constants needed to perform camera motion estimation and motion compensation;
- `results.py`: an example of how to use the package modules; it produces the results used in this report.

First of all, create a virtualenv and install the requirements:

```shell
python3 -m venv venv
source venv/bin/activate
pip install -r requirements
```

To run the full pipeline, use the `results.py` module as follows:

- pick a video and put it in the `videos` folder
- move into the module directory: `cd ./global_motion_estimation`
- run the script with `python3 ./results.py`
- select the video with `-v` and, optionally, set the frame distance with `-f` (default is 3), e.g. `python3 ./results.py -v pan240.mp4 -f 5`

The BBME module `bbme.py` can be run independently of the main pipeline and accepts a variety of parameters:

```
usage: bbme.py [-h] -p PATH -fi FI [-pn PNORM] [-bs BLOCK_SIZE] [-sw SEARCH_WINDOW]
               [-sp SEARCHING_PROCEDURE]

Computes motion field between two frames using block matching algorithms

optional arguments:
  -h, --help            show this help message and exit
  -p PATH, --video-path PATH
                        path of the video to analyze
  -fi FI, --frame-index FI
                        index of the current frame to analyze in the video
  -pn PNORM, --p-norm PNORM
                        0: 1-norm (mae), 1: 2-norm (mse)
  -bs BLOCK_SIZE, --block-size BLOCK_SIZE
                        size of the block
  -sw SEARCH_WINDOW, --search-window SEARCH_WINDOW
                        size of the search window
  -sp SEARCHING_PROCEDURE, --searching-procedure SEARCHING_PROCEDURE
                        0: Exhaustive search,
                        1: Three Step search,
                        2: 2D Log search,
                        3: Diamond search
```
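To illustrate what the exhaustive search (`-sp 0`) and the `-pn` option compute, here is a minimal NumPy sketch, independent of `bbme.py`: for each block of the current frame it tests every displacement within ±`search_window` pixels and keeps the one that minimizes the MAE (`pnorm=0`) or the MSE (`pnorm=1`) against the previous frame. Grayscale frames, skipping candidates that fall outside the previous frame, the sign convention (each vector points from a block of the current frame to its best match in the previous frame) and the function names are all assumptions.

```python
import numpy as np


def block_cost(a, b, pnorm=0):
    """Matching cost between two blocks: MAE if pnorm == 0, MSE otherwise."""
    diff = a.astype(float) - b.astype(float)
    return np.mean(np.abs(diff)) if pnorm == 0 else np.mean(diff ** 2)


def exhaustive_search(prev, cur, block_size=8, search_window=8, pnorm=0):
    """Motion field with one (dy, dx) vector per block, by full search."""
    h, w = cur.shape
    rows, cols = h // block_size, w // block_size
    field = np.zeros((rows, cols, 2), dtype=int)
    for r in range(rows):
        for c in range(cols):
            y, x = r * block_size, c * block_size
            block = cur[y:y + block_size, x:x + block_size]
            best_cost, best = np.inf, (0, 0)
            for dy in range(-search_window, search_window + 1):
                for dx in range(-search_window, search_window + 1):
                    yy, xx = y + dy, x + dx
                    # skip candidates falling outside the previous frame
                    if yy < 0 or xx < 0 or yy + block_size > h or xx + block_size > w:
                        continue
                    cand = prev[yy:yy + block_size, xx:xx + block_size]
                    cost = block_cost(block, cand, pnorm)
                    if cost < best_cost:
                        best_cost, best = cost, (dy, dx)
            field[r, c] = best
    return field


# Usage: the current frame is the previous one shifted by 2 rows and -3 columns.
rng = np.random.default_rng(0)
prev = rng.random((32, 32))
cur = np.roll(prev, shift=(2, -3), axis=(0, 1))  # cur[y, x] = prev[y - 2, x + 3]
field = exhaustive_search(prev, cur, block_size=8, search_window=4)
print(field[1, 1].tolist())  # [-2, 3]
```

Full search evaluates (2·`search_window` + 1)² candidates per block, which is what the faster procedures try to avoid.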

Basic usage:

- pick a video to analyze (`-p`)
- set the index of the anchor frame (`-fi`)
- adjust the p-norm, searching procedure, search window and block size (`-pn`, `-sp`, `-sw`, `-bs`)
- run `python3 ./global_motion_estimation/bbme.py` with those arguments
- find the results in the `resources` folder
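The faster procedures (`-sp 1` to `3`) evaluate only a subset of the candidate displacements. As an example, here is a sketch of the classic three-step search for a single block (our own illustration, not the code of `bbme.py`, using MAE as the cost): it starts with a step of roughly half the search window (4 pixels for the classic ±7 window), moves to the best of the nine points around the current centre, halves the step and repeats until a step of 1 has been evaluated. Like every fast search, it assumes the cost decreases smoothly towards the true displacement, so it can get trapped in a local minimum where the exhaustive search would not. The function names and the blob used in the usage example are hypothetical.

```python
import numpy as np


def mae(a, b):
    return np.mean(np.abs(a.astype(float) - b.astype(float)))


def three_step_search(prev, cur, y, x, block_size=8, search_window=7):
    """(dy, dx) for the block of `cur` at (y, x), found by three-step search."""
    h, w = prev.shape
    block = cur[y:y + block_size, x:x + block_size]

    def cost(dy, dx):
        yy, xx = y + dy, x + dx
        if yy < 0 or xx < 0 or yy + block_size > h or xx + block_size > w:
            return np.inf  # candidate outside the previous frame
        return mae(block, prev[yy:yy + block_size, xx:xx + block_size])

    best = (0, 0)
    step = max(1, (search_window + 1) // 2)  # 4 for the classic +/-7 window
    while step >= 1:
        cy, cx = best
        # the current centre and its 8 neighbours at distance `step`
        candidates = [(cy + sy * step, cx + sx * step)
                      for sy in (-1, 0, 1) for sx in (-1, 0, 1)]
        best = min(candidates, key=lambda d: cost(*d))
        step //= 2
    return best


# Usage: a smooth Gaussian blob displaced by (7, -6) between the two frames.
ys, xs = np.mgrid[0:64, 0:64]


def gaussian_blob(cy, cx, sigma=4.0):
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2 * sigma ** 2))


print(three_step_search(gaussian_blob(39, 26), gaussian_blob(32, 32), 16, 16, block_size=32))  # (7, -6)
```

Only 25 of the 225 candidates of a ±7 window are evaluated here (fewer distinct ones, since centres repeat), at the price of the smoothness assumption.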

Example:

```shell
python3 ./global_motion_estimation/bbme.py -p ./global_motion_estimation/resources/videos/pan240.mp4 -fi 10 -bs 12 -sw 12 -sp 3
```

The videos we used to test the pipeline are available in this Google Drive folder. Feel free to try it out with your own datasets.

To reduce computation time, we suggest scaling higher-resolution videos down to a reasonable size, e.g.

```shell
ffmpeg -i full-res-video.mp4 -vf scale=720:480 scaled-video.mp4
```

An example of the results is reported below; refer to the project report for a detailed explanation.

Running the `results.py` script on the video `pan240.mp4` produces the following output:

```
📁 ./global_motion_estimation/results/pan240
├── 📁 compensated
│   ├── 🖼️ 0000.png
│   ├── 🖼️ ........
│   └── 🖼️ XXXX.png
├── 📁 curr_comp_diff
│   ├── 🖼️ 0000.png
│   ├── 🖼️ ........
│   └── 🖼️ XXXX.png
├── 📁 curr_prev_diff
│   ├── 🖼️ 0000.png
│   ├── 🖼️ ........
│   └── 🖼️ XXXX.png
├── 📁 frames
│   ├── 🖼️ 0000.png
│   ├── 🖼️ ........
│   └── 🖼️ XXXX.png
├── 📁 motion_model_field
│   ├── 🖼️ 0000.png
│   ├── 🖼️ ........
│   └── 🖼️ XXXX.png
└── 📄 psnr_records.json
```

- `compensated`: the compensated previous frames
- `curr_comp_diff`: images of the absolute difference between the current frame and the compensated previous frame
- `curr_prev_diff`: images of the absolute difference between the current frame and the previous frame
- `frames`: all the frames of the video
- `motion_model_field`: all the frames of the video, each overlaid with a needle diagram of the estimated global motion field
- `psnr_records.json`: a JSON object holding the PSNR value of each (compensated, current) frame pair
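The compensated frames, difference images and PSNR values listed above can be illustrated with a few lines of NumPy. The sketch below is an assumption-laden illustration rather than the code of `motion.py`: it warps the previous frame with a six-parameter affine model (hypothetical ordering `u = a0 + a1*x + a2*y`, `v = a3 + a4*x + a5*y`), so that the compensated frame at (x, y) takes the previous-frame pixel at (x + u, y + v), using nearest-neighbour sampling and clamping at the borders. The PSNR assumes 8-bit frames (peak value 255); all function names are hypothetical.

```python
import numpy as np


def compensate(prev, a):
    """Warp `prev` with the affine motion field of parameters `a`.

    compensated[y, x] = prev[y + v, x + u], rounded to the nearest pixel and
    clamped to the frame borders, with u = a[0] + a[1]*x + a[2]*y and
    v = a[3] + a[4]*x + a[5]*y (hypothetical parameter ordering).
    """
    h, w = prev.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    u = a[0] + a[1] * xs + a[2] * ys
    v = a[3] + a[4] * xs + a[5] * ys
    src_x = np.clip(np.rint(xs + u), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + v), 0, h - 1).astype(int)
    return prev[src_y, src_x]


def abs_diff(img1, img2):
    """Absolute difference image, as in curr_comp_diff and curr_prev_diff."""
    return np.abs(img1.astype(float) - img2.astype(float))


def psnr(reference, test, peak=255.0):
    """PSNR in dB between two images; infinite when they are identical."""
    mse = np.mean((reference.astype(float) - test.astype(float)) ** 2)
    return float("inf") if mse == 0 else 10 * np.log10(peak ** 2 / mse)


# Usage: a pure translation (u, v) = (3, 2) is compensated exactly away from
# the borders, so the PSNR on that region is infinite.
rng = np.random.default_rng(0)
prev = rng.integers(0, 256, size=(20, 30)).astype(np.uint8)
cur = np.zeros_like(prev)
cur[:-2, :-3] = prev[2:, 3:]  # cur[y, x] = prev[y + 2, x + 3]
comp = compensate(prev, [3, 0, 0, 2, 0, 0])
print(psnr(cur[:-2, :-3], comp[:-2, :-3]))  # inf
```

Comparing `psnr(cur, comp)` with `psnr(cur, prev)` on real footage gives a quick measure of how much of the frame difference the global motion model explains.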

To change the default configuration:

- `bbme.py`: run `python3 bbme.py -h` and set the CLI arguments accordingly;
- `results.py`: same as above;
- `motion.py`: open the file and edit the constants declared at the top of the module.

Better configuration management is soon to come.