This repository contains the code (and soon, also the results) of benchmarks for the SMPyBandits Python package, written with the airspeed velocity (asv) tool.

The (current) results are hosted on this page. I won't upload them to GitHub Pages for now.

This project is written by Lilian Besson, in Python (2 or 3), to test the quality of SMPyBandits, my open-source Python package for numerical simulations of 🎰 single-player and multi-player Multi-Armed Bandit (MAB) algorithms.

Complete Sphinx-generated documentation for SMPyBandits is available at SMPyBandits.GitHub.io.

I (Lilian Besson) started my PhD in October 2016, and this project has been part of my ongoing research since December 2016.

I launched the documentation in March 2017, wrote my first research articles using this framework in 2017, and decided to (finally) open-source my project in February 2018.


I wrote two benchmark scripts, one for single-player policies and one for multi-player policies (from the Policies and PoliciesMultiPlayers modules of SMPyBandits): see SMPyBandits_PoliciesSinglePlayer.py and SMPyBandits_PoliciesMultiPlayers.py.
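The two scripts are not reproduced here. As a rough, hypothetical sketch of how such a file is laid out for airspeed velocity: asv times every method whose name starts with `time_`, and when a class defines `params` as a list of lists, it repeats the benchmark for every combination (the Cartesian product) of the values, passing them as arguments to `setup` and to the timed method. The class name, the parameter values and the random-policy workload below are illustrative assumptions, not the repository's code.

```python
import random


class PoliciesSinglePlayerSketch:
    """Hypothetical asv benchmark suite (illustration only).

    asv runs each ``time_*`` method for every combination of ``params``.
    """
    # One list of values per argument, named by param_names (assumed values)
    params = [["RandomPolicy"], [2, 5, 10], [100, 1000]]
    param_names = ["algname", "nbArms", "horizon"]

    def setup(self, algname, nbArms, horizon):
        # Called by asv before timing, with the same parameters
        self.means = [random.random() for _ in range(nbArms)]

    def time_full_simulation(self, algname, nbArms, horizon):
        # Placeholder workload: a uniformly random policy on Bernoulli arms
        total = 0
        for _ in range(horizon):
            arm = random.randrange(nbArms)
            total += random.random() < self.means[arm]
        return total  # ignored by asv for time_ benchmarks
```

With these values, asv would time 1 × 3 × 2 = 6 parameter combinations for each commit it benchmarks.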

Roughly speaking, the simulation loops for both benchmarks look like this:


Single player (SMPyBandits_PoliciesSinglePlayer)

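```python
def full_simulation(self, algname, nbArms, horizon):
    # make_MAB and algorithm_map come from the benchmark script (not shown)
    MAB = make_MAB(nbArms)
    alg = algorithm_map[algname](nbArms)
    alg.startGame()
    for t in range(horizon):
        arm = alg.choice()          # the policy picks an arm
        reward = MAB.draw(arm)      # the problem samples a reward for it
        alg.getReward(arm, reward)  # the policy updates its statistics
```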
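The loop above depends on helpers from the benchmark script (`make_MAB`, `algorithm_map`) that are not shown. To run it in isolation, here is a self-contained sketch that assumes Bernoulli arms with evenly spaced means and swaps in a minimal UCB1 policy exposing the same `startGame` / `choice` / `getReward` interface. `BernoulliMAB`, this `make_MAB` and this `UCB1` are hypothetical stand-ins, not SMPyBandits classes, and `self` is dropped because the function is no longer a benchmark method.

```python
import math
import random


class BernoulliMAB:
    """Hypothetical stand-in for an SMPyBandits MAB problem."""

    def __init__(self, means):
        self.means = means

    def draw(self, arm):
        # Bernoulli reward: 1 with probability means[arm], else 0
        return 1.0 if random.random() < self.means[arm] else 0.0


def make_MAB(nbArms):
    # Hypothetical helper: evenly spaced means in (0, 1)
    return BernoulliMAB([(k + 1) / (nbArms + 1) for k in range(nbArms)])


class UCB1:
    """Minimal UCB1 with the startGame/choice/getReward interface."""

    def __init__(self, nbArms):
        self.nbArms = nbArms

    def startGame(self):
        self.t = 0
        self.pulls = [0] * self.nbArms
        self.rewards = [0.0] * self.nbArms

    def choice(self):
        # Play each arm once, then the arm with the largest UCB1 index
        for k in range(self.nbArms):
            if self.pulls[k] == 0:
                return k
        return max(range(self.nbArms), key=lambda k:
                   self.rewards[k] / self.pulls[k]
                   + math.sqrt(2 * math.log(self.t) / self.pulls[k]))

    def getReward(self, arm, reward):
        self.t += 1
        self.pulls[arm] += 1
        self.rewards[arm] += reward


algorithm_map = {"UCB1": UCB1}  # hypothetical, one entry only


def full_simulation(algname, nbArms, horizon):
    MAB = make_MAB(nbArms)
    alg = algorithm_map[algname](nbArms)
    alg.startGame()
    for t in range(horizon):
        arm = alg.choice()
        reward = MAB.draw(arm)
        alg.getReward(arm, reward)
    return alg  # returned so the final statistics can be inspected
```

For example, `full_simulation("UCB1", 3, 500).pulls` gives how many times each of the three arms was played.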

Multi players (SMPyBandits_PoliciesMultiPlayers)

```python
def full_simulation(self, algname, nbArms, nbPlayers, horizon):
    # make_MAB and algorithmMP_map come from the benchmark script (not shown)
    MAB = make_MAB(nbArms)
    my_policy_MP = algorithmMP_map[algname](nbPlayers, nbArms)
    children = my_policy_MP.children  # get a list of usable single-player policies
    for one_policy in children:
        one_policy.startGame()  # start the game
    for t in range(horizon):
        # choose one arm, for each player
        choices = [children[i].choice() for i in range(nbPlayers)]
        sensing = [MAB.draw(k) for k in range(nbArms)]  # sample one reward per arm
        for k in range(nbArms):
            players_who_played_k = [i for i in range(nbPlayers) if choices[i] == k]
            reward = sensing[k] if len(players_who_played_k) == 1 else 0
            for i in players_who_played_k:
                if len(players_who_played_k) > 1:
                    children[i].handleCollision(k, sensing[k])
                else:
                    children[i].getReward(k, reward)
```
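The essential part of the multi-player loop is the collision model: each arm's reward is sampled once per round, a player alone on its arm receives that reward, and players sharing an arm are notified through `handleCollision` instead. Below is a hypothetical self-contained sketch of just that model, assuming Bernoulli arms and players that choose uniformly at random; `RandomChild` and `full_simulation_mp` are illustrative names, not SMPyBandits code.

```python
import random


class RandomChild:
    """Hypothetical player that picks an arm uniformly at random."""

    def __init__(self, nbArms):
        self.nbArms = nbArms

    def startGame(self):
        self.rewards = 0.0
        self.collisions = 0

    def choice(self):
        return random.randrange(self.nbArms)

    def getReward(self, arm, reward):
        self.rewards += reward

    def handleCollision(self, arm, reward=None):
        # The colliding player gets no reward, only a notification
        self.collisions += 1


def full_simulation_mp(nbPlayers, means, horizon):
    """Collision model: a reward is only given to a player alone on its arm."""
    nbArms = len(means)
    children = [RandomChild(nbArms) for _ in range(nbPlayers)]
    for child in children:
        child.startGame()
    for _ in range(horizon):
        choices = [child.choice() for child in children]
        # One Bernoulli sample per arm, shared by everyone who played it
        sensing = [1.0 if random.random() < mu else 0.0 for mu in means]
        for k in range(nbArms):
            players_on_k = [i for i, c in enumerate(choices) if c == k]
            for i in players_on_k:
                if len(players_on_k) > 1:
                    children[i].handleCollision(k, sensing[k])
                else:
                    children[i].getReward(k, sensing[k])
    return children
```

With a single arm and two players, every round is a collision, which makes the model easy to check by hand.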


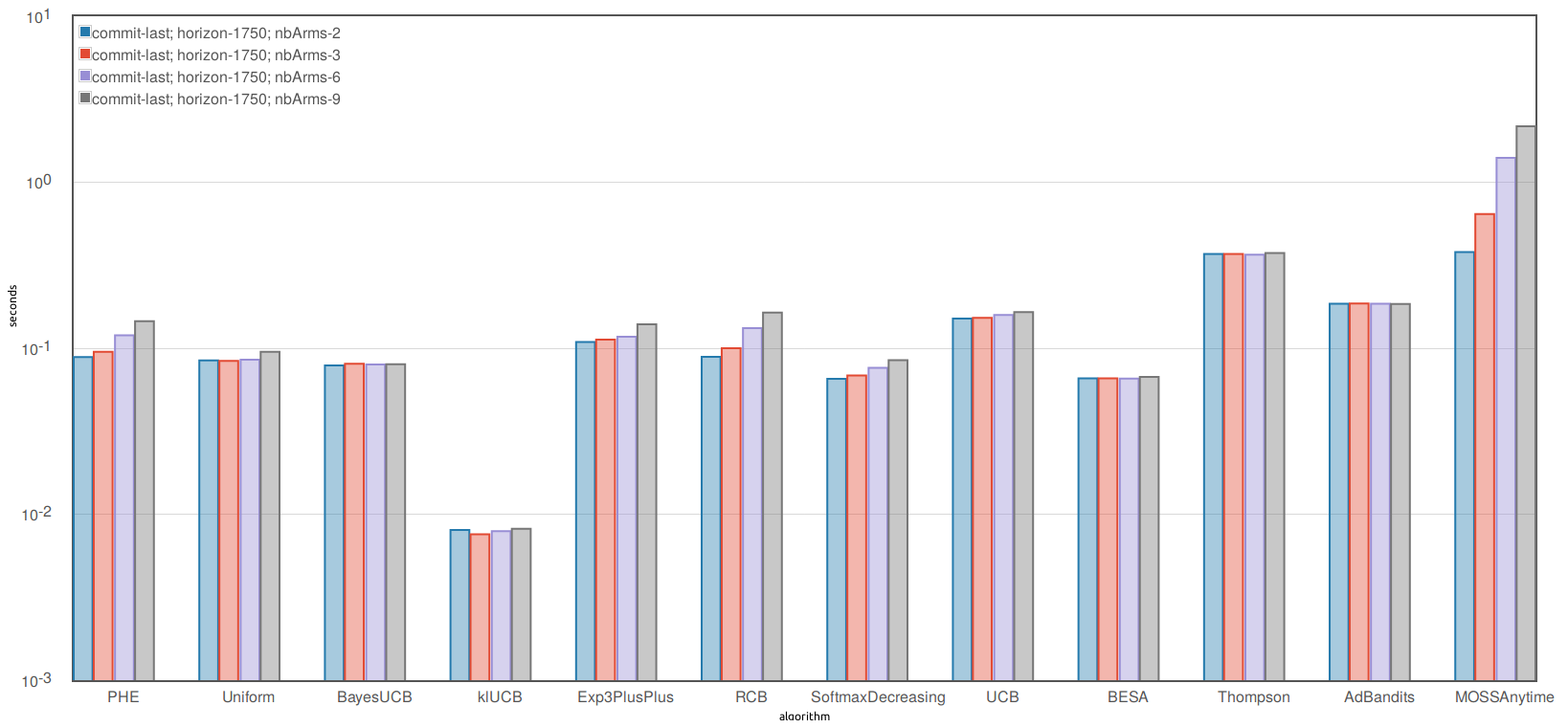

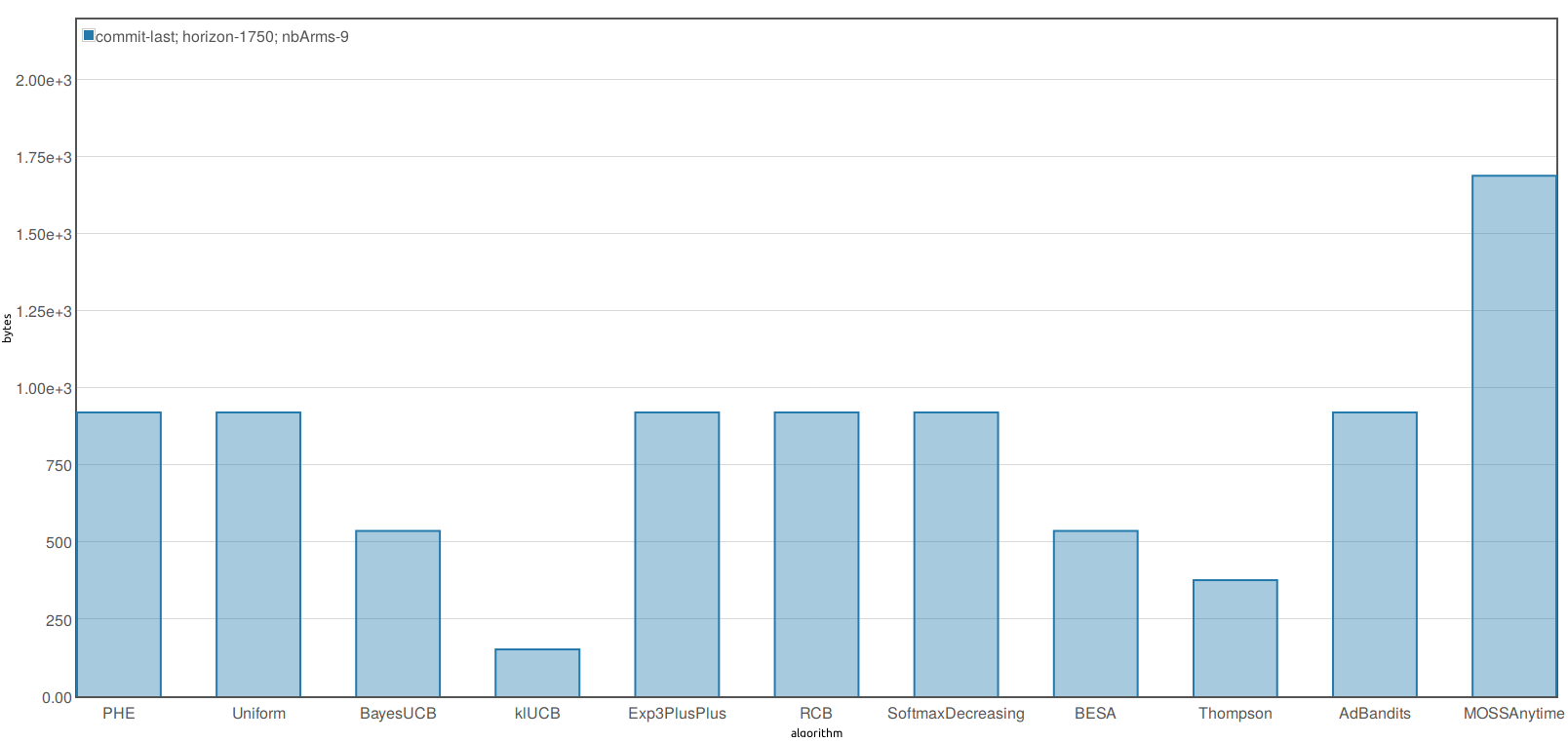

Here are some screenshots from quick simulations I ran as a first try of using airspeed velocity for benchmarking my 3-year-long implementation work on SMPyBandits:

As a function of the number of arms K (for a fixed horizon T)

As a function of the horizon T (for a fixed number of arms K)

(I am not sure yet whether I wrote this one correctly; I don't understand the plot.)

For three different values of K

(I also don't understand these results, and I need to check what I wrote quickly yesterday: klUCB should be very good, and BESA is usually awesome.)

It's all very impressive, right?

And the bonus is that the HTML files generated by asv can simply be hosted online, and anyone can then browse through the web interface… Incredible 💥! See this page.

If you use this package for your own work, please consider citing it with this piece of BibTeX:

```bibtex
@misc{SMPyBandits,
    title = {{SMPyBandits: an Open-Source Research Framework for Single and Multi-Players Multi-Arms Bandits (MAB) Algorithms in Python}},
    author = {Lilian Besson},
    year = {2018},
    url = {https://github.com/SMPyBandits/SMPyBandits/},
    howpublished = {Online at: \url{github.com/SMPyBandits/SMPyBandits}},
    note = {Code at https://github.com/SMPyBandits/SMPyBandits/, documentation at https://smpybandits.github.io/}
}
```

I also wrote a small paper presenting SMPyBandits, which I will submit to JMLR MLOSS. The paper can be consulted here on my website.

A DOI will be added as soon as possible! I tried to publish a paper in both JOSS and MLOSS.

MIT Licensed (file LICENSE).

© 2016-2019 Lilian Besson, with help from contributors.