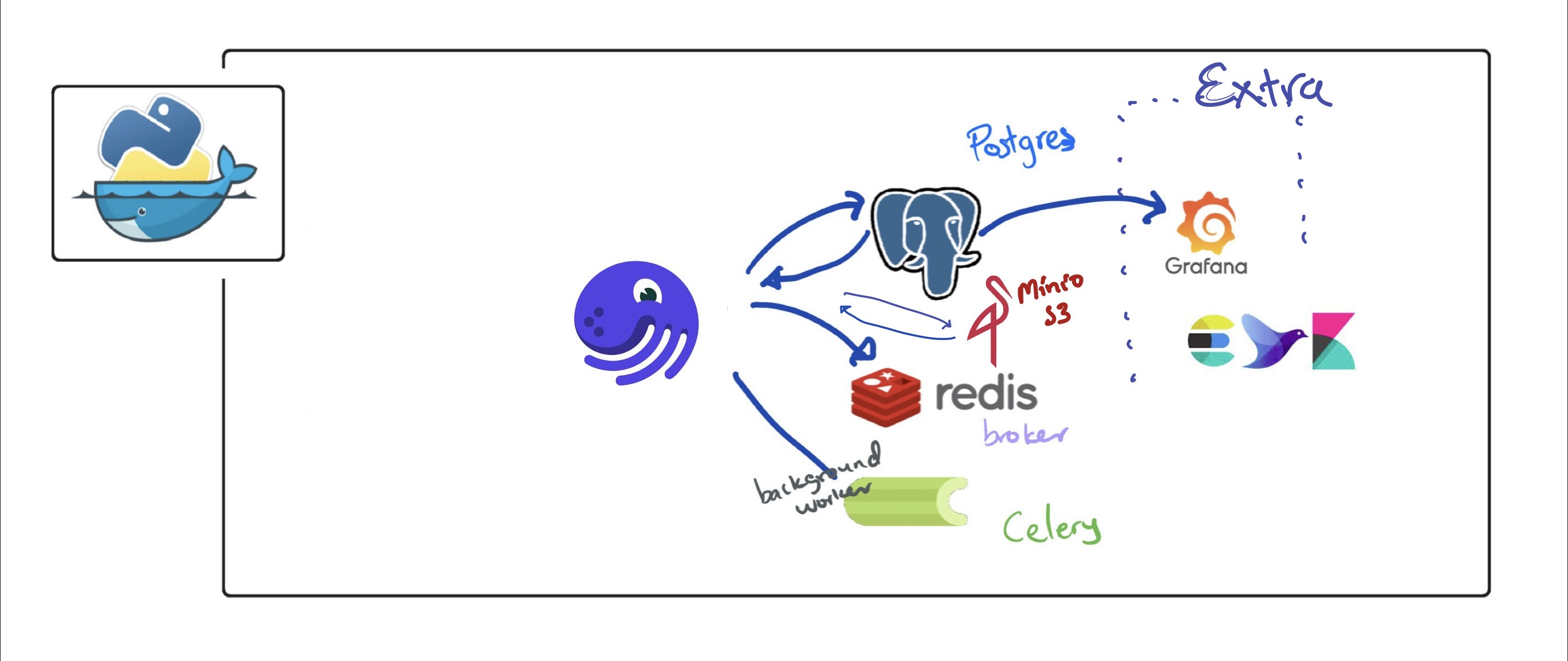

Dagster schedules, monitors, and logs the pipeline. Postgres serves as backend and data storage, and Celery[Redis] as the broker.

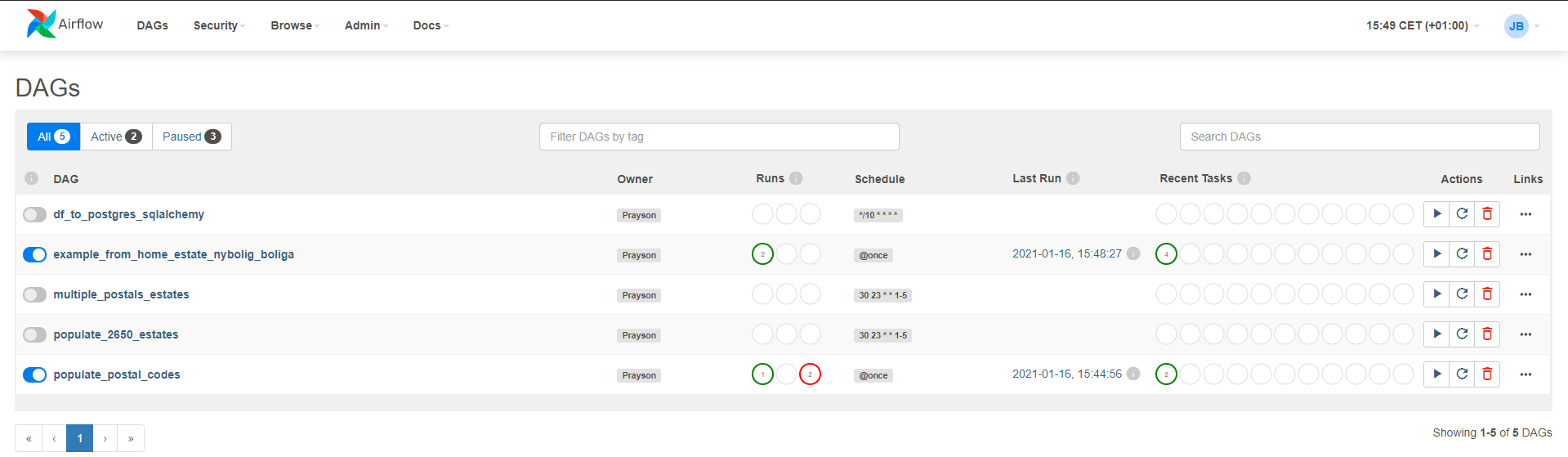

Migration from Airflow to Dagster is in progress ...

Checklist to start our services

- Make sure Docker is running and volume mounting is enabled.
- `git clone` the advance_scraping repository
- Set the environment variables
- Run the services with a single docker compose command

Real estate data gathered through the websites' APIs is for personal use only. This project demonstrates how I built a pipeline to help my family buy a house 🏡. Were you to use the data beyond private use, do contact the estate websites for API permission.

    git clone https://github.com/Proteusiq/advance_scraping.git
    cd advance_scraping

Set the correct environment variables in all *.env files. E.g. edit the .env_demo contents and save it as .env

Each service has service.env. Edit them as you see fit.

⚠️ WARNING: Remember to add *.env to .gitignore. Do not share your secrets. All *.env files are left here for demonstration.

    docker compose config

Make sure you can see the environment variables docker-compose fetches from .env
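Before starting the services, a quick check that the expected variables are actually set in .env can save a failed build. The following is a minimal sketch, not part of the repository: `parse_env` and `missing_keys` are hypothetical helpers, the parser only handles simple `KEY=VALUE` lines, and the list of required keys is an assumption based on the variables named in this README.

```python
# Hypothetical helper: checks a .env file's text for required variables.
# The key list is an assumption taken from variables mentioned in this README.
REQUIRED_KEYS = ("SLACK_TOKEN", "PGADMIN_DEFAULT_EMAIL", "PGADMIN_DEFAULT_PASSWORD")


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(text: str, required=REQUIRED_KEYS) -> list:
    """Return the required keys that are absent or empty."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]
```

For example, `missing_keys(open(".env").read())` returns an empty list when every required variable has a value.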

See: docker-compose options.

Slack Integration

Checklist to set slack integration

- Create Slack APP for a given channel

- In OAuth Tokens for Your Workspace, get the Bot User OAuth Token and set it as SLACK_TOKEN in .env
- Set Bot Token Scopes to chat:write.customize
- Extra: in Restrict API Token Usage, whitelist your IP address
- In a given channel, invite the bot, e.g. @BotName
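To verify the token and scope from the checklist above, a message can be posted through Slack's `chat.postMessage` Web API method. This is a minimal sketch using only the standard library, not the project's own integration code: `build_slack_request` is a hypothetical helper, and the channel and username values are placeholders.

```python
import json
import urllib.request

SLACK_POST_MESSAGE_URL = "https://slack.com/api/chat.postMessage"


def build_slack_request(text, channel, token, username=None):
    """Build (but do not send) a chat.postMessage request."""
    payload = {"channel": channel, "text": text}
    if username:
        # Overriding the display name requires the chat:write.customize scope.
        payload["username"] = username
    return urllib.request.Request(
        SLACK_POST_MESSAGE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )
```

Sending is then `urllib.request.urlopen(build_slack_request("pipeline finished", "#my-channel", os.environ["SLACK_TOKEN"]))`, where the channel name is a placeholder for the channel the bot was invited to.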

WARNING: The Postgres container has an issue with persisting data after a restart. Until that is fixed, we will use a named volume. Run

    docker volume create --name=pgdata

to create the named volume (to delete it: `docker volume rm pgdata`).

    docker compose up

Note: Only the initial build will take a while. Go grab a cup of coffee while Docker downloads and installs the necessary tools.

You can run the services in detached mode with the --detach or -d flag. This will leave the services running.

See: docker-compose up options

Head to localhost:3000 in your browser.

Examples: On Launchpad

Jobs Config!

Running make_service_job requires config:

    ops:
      estate_service:
        config:
          url: "https://www.estate.dk/Services/PropertySearch/Search"
      nybolig_service:
        config:
          url: "https://www.nybolig.dk/Services/PropertySearch/Search"
    resources:
      warehouse:
        config:
          table_name: services

example: make_boliga_job recent estates

    ops:
      get_boliga:
        config:
          start_page: 0
          end_page: 100
          pagesize: 800
          url: https://api.boliga.dk/api/v2/search/results
    resources:
      warehouse:
        config:
          table_name: boliga_current

example: make_boliga_job sold estates

    ops:
      get_boliga:
        config:
          start_page: 0
          end_page: 100
          pagesize: 800
    resources:
      warehouse:
        config:
          table_name: "boliga_sold"

Tools

-

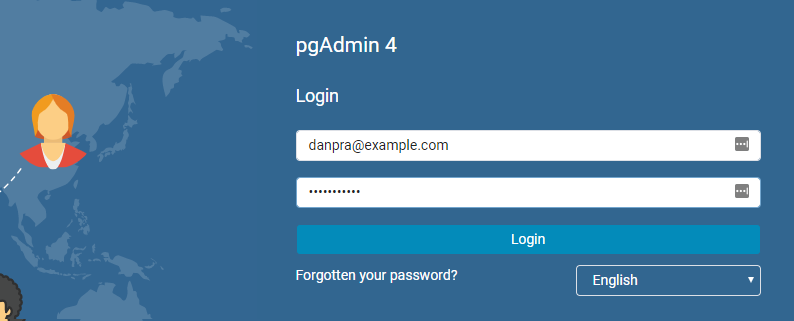

pgAdmin:

address:localhost:5050default_email:[email protected]default_pwd: admin

Head to localhost:5050. Log in with the credentials set in your PGADMIN_DEFAULT_EMAIL and PGADMIN_DEFAULT_PASSWORD environment variables. Example: [email protected] and password postgrespwd

To add a connection to the Postgres DB in pgAdmin, click Add New Server. Type any name (e.g. Boliga) and select Connection. Set Host name/address to postgres, enter your Postgres username and password, and click Save.

[Coming soon]

Press Ctrl + C to stop the services without destroying your volume data. Then run

    docker compose down

Use `docker compose down -v` to also remove the volumes.

WARNING: remember to back up your data before removing volumes.

    docker compose down -v

See: docker-compose down options

OOP Rumbling

A lazy programmer, like me, loves to write less yet comprehensive code. (Yes, I said it.) Design patterns are not as useful in Python as in languages like Java, C#, and C++, and in most cases they are overkill. To design a simple bolig (Danish for estate) scraping tool for different estate websites in Denmark, I decided to use the Abstract Factory pattern.

Bolig (webscrapers.abc_estates.Bolig) ensures a single instance and single object that can be used by all other bolig related classes. The form of singleton design is Early Instantiation. We create an instance at load time.

Bolig class also defines an interface[abstract class] for creating families of related objects without specifying their concrete sub-classes[functions]. This ensures consistency among all objects by isolating the client code from implementation. We want to use the same function but with different implementations. get_page and get_pages will always be called in the same way, but the implementation is different.
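The "same call, different implementation" idea can be sketched with Python's `abc` module. This is an illustrative outline only, not the code in `webscrapers.abc_estates`: the `store` attribute, the default arguments, and the `FakeBoliga` subclass are assumptions made for the example, and the singleton aspect is left out.

```python
from abc import ABC, abstractmethod


class Bolig(ABC):
    """Abstract base: fixes the interface every scraper must expose."""

    def __init__(self, url):
        self.url = url
        self.store = []  # hypothetical container for fetched results

    @abstractmethod
    def get_page(self, page=0, pagesize=100):
        """Fetch one page of results; each website implements its own logic."""

    def get_pages(self, start_page=0, end_page=1, pagesize=100):
        """Fetch pages start_page .. end_page - 1 through get_page."""
        for page in range(start_page, end_page):
            self.get_page(page=page, pagesize=pagesize)
        return self


class FakeBoliga(Bolig):
    """Hypothetical subclass; a real one would call the website's API."""

    def get_page(self, page=0, pagesize=100):
        # Stand-in for an HTTP request: record what would be fetched.
        self.store.append({"url": self.url, "page": page, "pagesize": pagesize})
        return self
```

Client code calls `get_pages` the same way on every subclass, and instantiating `Bolig` directly raises `TypeError` because `get_page` is abstract.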

    # inheritance tree
    Bolig                 # singleton and abstract
    Boliga(Bolig)         # overrides get_page and get_pages for boliga.dk api logic
    Services(Bolig)       # overrides get_page and get_pages for home.dk and estate.dk api logic
    BoligaRecent(Boliga)  # initiate with recent boliga as url
    BoligaSold(Boliga)    # initiate with sold boliga as url
    Home(Services)        # initiate with home recent home as url
    Estate(Services)      # initiate with estate recent home as url

- Add web-scraper examples

- Add simple Dagster examples

- Add custom error handling class

Docker Basics:

Kill all containers

    docker container ps | awk {' print $1 '} | tail -n+2 > tmp.txt; for line in $(cat tmp.txt); do docker container kill $line; done; rm tmp.txt

Steps for local setup: assuming pyenv -v 2.3.0 and poetry 1.1.13 or above

    pyenv install 3.10.4 && pyenv local 3.10.4
    poetry install && poetry shell  # or from scratch: poetry add pandas httpx sqlalchemy psycopg2-binary dagster dagit

    # create a new dagster template with:
    dagster new-project <project_name>

get tables

    -- get all tables
    SELECT table_name
    FROM information_schema.tables
    WHERE table_schema = 'public';

Get the columns and tables

    SELECT
        table_name
        ,column_name
        ,data_type
    FROM
        information_schema.columns
    WHERE
        table_schema = 'public'
        -- AND table_name = 'home';
    ORDER BY
        column_name ASC

drop table

    DROP TABLE "test"

scipy needs python <3.11

    SELECT price, solddate::date FROM boliga_sold
    WHERE saletype = 'Alm. Salg'
    AND city = 'Hvidovre'
    AND propertytype in (1, 2, 3) -- villa, jointhouse, apartment
    AND DATE_PART('year', solddate::date) > 2010
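To reuse the query above for other cities or years from Python, the literals can be turned into parameters. The sketch below assumes psycopg2-style named placeholders (`%(name)s`) and its adaptation of Python lists to Postgres arrays; `sold_prices_query` is a hypothetical helper that only builds the SQL text and parameters and does not touch the database.

```python
def sold_prices_query(city, min_year, property_types=(1, 2, 3)):
    """Return (sql, params) for sold prices in a city after min_year.

    property_types defaults to 1, 2, 3 (villa, jointhouse, apartment),
    as in the literal query above.
    """
    sql = """
        SELECT price, solddate::date
        FROM boliga_sold
        WHERE saletype = %(saletype)s
          AND city = %(city)s
          AND propertytype = ANY(%(property_types)s)
          AND DATE_PART('year', solddate::date) > %(min_year)s
    """
    params = {
        "saletype": "Alm. Salg",
        "city": city,
        "property_types": list(property_types),  # sent as a Postgres array
        "min_year": min_year,
    }
    return sql, params
```

With an open psycopg2 cursor this would run as `cursor.execute(*sold_prices_query("Hvidovre", 2010))`.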