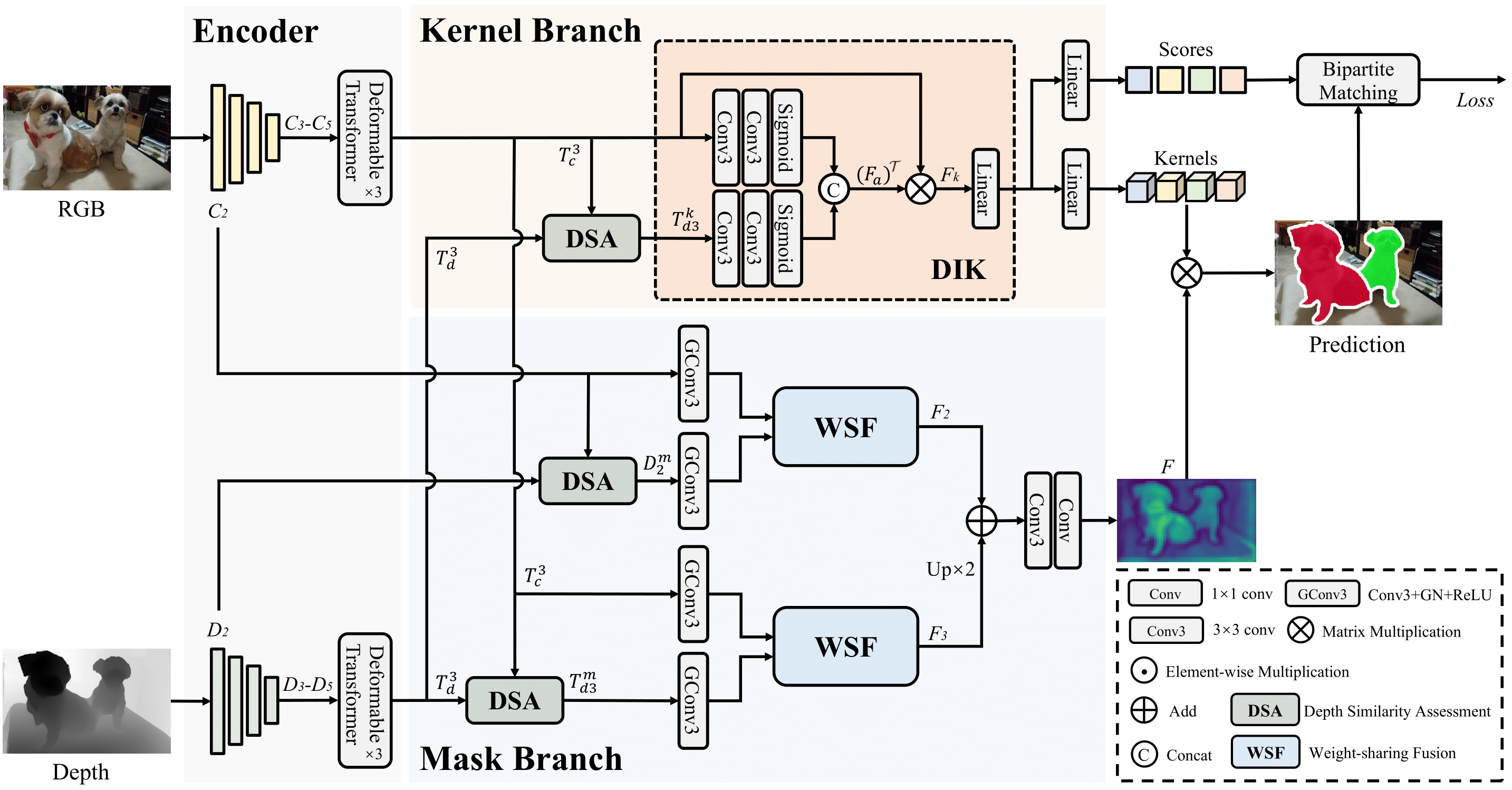

Official Implementation of "CalibNet: Dual-branch Cross-modal Calibration for RGB-D Salient Instance Segmentation"

Jialun Pei, Tao Jiang, He Tang, Nian Liu, Yueming Jin, Deng-Ping Fan✉, and Pheng-Ann Heng

👀 [Paper]; [Chinese Version]; [Official Version]

Contact: [email protected], [email protected]

- Linux with Python ≥ 3.8

- PyTorch ≥ 1.9 and torchvision that matches the PyTorch installation.

- Detectron2: follow Detectron2 installation instructions.

- OpenCV is optional but needed by demo and visualization.

pip install -r requirements.txt

After preparing the required environment, run the following command to compile the CUDA kernel for MSDeformAttn:

CUDA_HOME must be defined and point to the directory of the installed CUDA toolkit.
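Before building, the CUDA_HOME requirement can be checked with a few lines of Python. This is a minimal sketch, not part of the repository: the function name `check_cuda_home` is hypothetical, and it assumes a Linux toolkit layout where `nvcc` sits in `<CUDA_HOME>/bin`.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def check_cuda_home(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the directory named by CUDA_HOME, or raise RuntimeError.

    `env` defaults to the process environment; passing a mapping makes
    the check easy to try out without touching real variables.
    """
    env = os.environ if env is None else env
    cuda_home = env.get("CUDA_HOME")
    if not cuda_home:
        raise RuntimeError("CUDA_HOME is not set")
    root = Path(cuda_home)
    if not root.is_dir():
        raise RuntimeError(f"CUDA_HOME does not point to a directory: {root}")
    # Assumption: a Linux toolkit install ships the compiler as bin/nvcc.
    if not (root / "bin" / "nvcc").exists():
        raise RuntimeError(f"no nvcc found under {root / 'bin'}")
    return root
```

Calling `check_cuda_home()` with no arguments inspects the current environment and raises with a short message if the variable is missing or points to the wrong place.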

cd calibnet/trans_encoder/ops

sh make.sh

Our project is built upon detectron2. To accommodate the RGB-D SIS task, we have made some modifications to the framework so that it can handle dual-modality inputs. Replace the c2_model_loading.py in the framework with the one we provide at calibnet/c2_model_loading.py.
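When detectron2 was installed from a wheel, the file to replace lives somewhere in site-packages. The sketch below shows one way to find it using only the standard library. The helper name `find_package_file` is hypothetical, and the relative path `checkpoint/c2_model_loading.py` is taken from the copy command in this README rather than verified against every detectron2 release.

```python
import importlib.util
from pathlib import Path
from typing import Optional


def find_package_file(package: str, relative_path: str) -> Optional[Path]:
    """Locate a file inside an installed top-level package.

    Returns None if the package is not installed or the file is absent.
    """
    spec = importlib.util.find_spec(package)
    if spec is None or not spec.submodule_search_locations:
        return None
    # A package may span several directories; check each one in turn.
    for location in spec.submodule_search_locations:
        candidate = Path(location) / relative_path
        if candidate.is_file():
            return candidate
    return None
```

For example, `find_package_file("detectron2", "checkpoint/c2_model_loading.py")` would return the path to overwrite, or `None` if detectron2 is not installed in the active environment. Keeping a backup of the original file before copying is a sensible precaution.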

We give an example of how to set up the environment. The commands are verified on CUDA 11.1, PyTorch 1.9.1 and detectron2 0.6.0.

# an example

# create virtual environment

conda create -n calibnet python=3.8 -y

conda activate calibnet

# install pytorch

pip install torch==1.9.1+cu111 torchvision==0.10.1+cu111 torchaudio==0.9.1 -f https://download.pytorch.org/whl/torch_stable.html

# install detectron2, use the pre-built detectron2

python -m pip install detectron2 -f \
  https://dl.fbaipublicfiles.com/detectron2/wheels/cu111/torch1.9/index.html

# install required packages

pip install -r requirements.txt

# build CUDA kernel for MSDeformAttn

cd calibnet/trans_encoder/ops

sh make.sh

# replace the model loading code in detectron2. You should specify your own detectron2 path.

cp -i calibnet/c2_model_loading.py detectron2/checkpoint/c2_model_loading.py

- COME15K: Google Drive

- DSIS (Ours): Google Drive; Xunlei Drive (password: 7dif)

- SIP: Google Drive

- Download the datasets and put them in the same folder. The dataset mappers rely on the folder names, so do not rename them. The structure should look like:

DATASET_ROOT/
├── COME15K
    ├── train
        ├── imgs_right
        ├── depths
        ├── ...
    ├── COME-E
        ├── RGB
        ├── depth
        ├── ...
    ├── COME-H
        ├── RGB
        ├── depth
        ├── ...
    ├── annotations
        ├── ...
├── DSIS
    ├── RGB
    ├── depth
    ├── DSIS.json
    ├── ...
├── SIP
    ├── RGB
    ├── depth
    ├── SIP.json
    ├── ...
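The layout above can be sanity-checked before training. The following is a minimal sketch, not part of the repository: the function name `missing_dataset_paths` is hypothetical, and it assumes the nesting implied by the tree (train, COME-E, COME-H and annotations under COME15K). Entries shown as "..." are not checked.

```python
from pathlib import Path
from typing import List

# Relative paths read off the folder tree in this README (assumed nesting).
EXPECTED_PATHS = [
    "COME15K/train/imgs_right",
    "COME15K/train/depths",
    "COME15K/COME-E/RGB",
    "COME15K/COME-E/depth",
    "COME15K/COME-H/RGB",
    "COME15K/COME-H/depth",
    "COME15K/annotations",
    "DSIS/RGB",
    "DSIS/depth",
    "DSIS/DSIS.json",
    "SIP/RGB",
    "SIP/depth",
    "SIP/SIP.json",
]


def missing_dataset_paths(dataset_root: str) -> List[str]:
    """Return the expected entries that do not exist under dataset_root."""
    root = Path(dataset_root)
    return [p for p in EXPECTED_PATHS if not (root / p).exists()]
```

An empty list means every listed folder and annotation file was found; otherwise the returned entries show which names to fix.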

- Change the dataset root in

calibnet/register_rgbdsis_datasets.py

# calibnet/register_rgbdsis_datasets.py line 28

_root = os.getenv("DETECTRON2_DATASETS", "path/to/dataset/root")

Model weights: Google Drive

| Model | Config | COME15K-E-test AP | COME15K-H-test AP |
|---|---|---|---|
| ResNet-50 | config | 58.0 | 50.7 |
| ResNet-101 | config | 58.5 | 51.5 |
| Swin-T | config | 60.0 | 52.6 |
| PVT-v2 | config | 60.7 | 53.7 |
| P2T-Large | config | 61.8 | 54.4 |

To train CalibNet on a single GPU, specify the config file <CONFIG>.

python tools/train_net.py --config-file <CONFIG> --num-gpus 1

# example:

python tools/train_net.py --config-file configs/CalibNet_R50_50e_50q_320size.yaml --num-gpus 1 OUTPUT_DIR output/train

Before evaluating, specify the config file <CONFIG> and the model weights <WEIGHT_PATH>. The input size is set to 320 by default.

python tools/train_net.py --config-file <CONFIG> --num-gpus 1 --eval-only MODEL.WEIGHTS <WEIGHT_PATH>

# example:

python tools/train_net.py --config-file configs/CalibNet_R50_50e_50q_320size.yaml --num-gpus 1 --eval-only MODEL.WEIGHTS weights/calibnet_r50_50e.pth OUTPUT_DIR output/eval

We provide tools to measure the efficiency of CalibNet, including the number of parameters, GFLOPs, and inference FPS. Usage is as follows.

# fps

python tools/count_fps.py --config-file <CONFIG> INPUT.MIN_SIZE_TEST 320 MODEL.WEIGHTS <WEIGHT_PATH>

# Parameters

python tools/get_flops.py --tasks parameter --config-file <CONFIG> MODEL.WEIGHTS <WEIGHT_PATH>

# GFLOPS

python tools/get_flops.py --tasks flop --config-file <CONFIG> MODEL.WEIGHTS <WEIGHT_PATH>

This work is based on detectron2 and SparseInst. We sincerely thank the authors for their great work and contributions to the community!

If this helps you, please cite this work:

@article{pei2024calibnet,
  title={CalibNet: Dual-branch Cross-modal Calibration for RGB-D Salient Instance Segmentation},
  author={Pei, Jialun and Jiang, Tao and Tang, He and Liu, Nian and Jin, Yueming and Fan, Deng-Ping and Heng, Pheng-Ann},
  journal={IEEE Transactions on Image Processing},
  volume={33},
  pages={4348--4362},
  year={2024},
  publisher={IEEE}
}