Project Website | Paper | RSS 2024

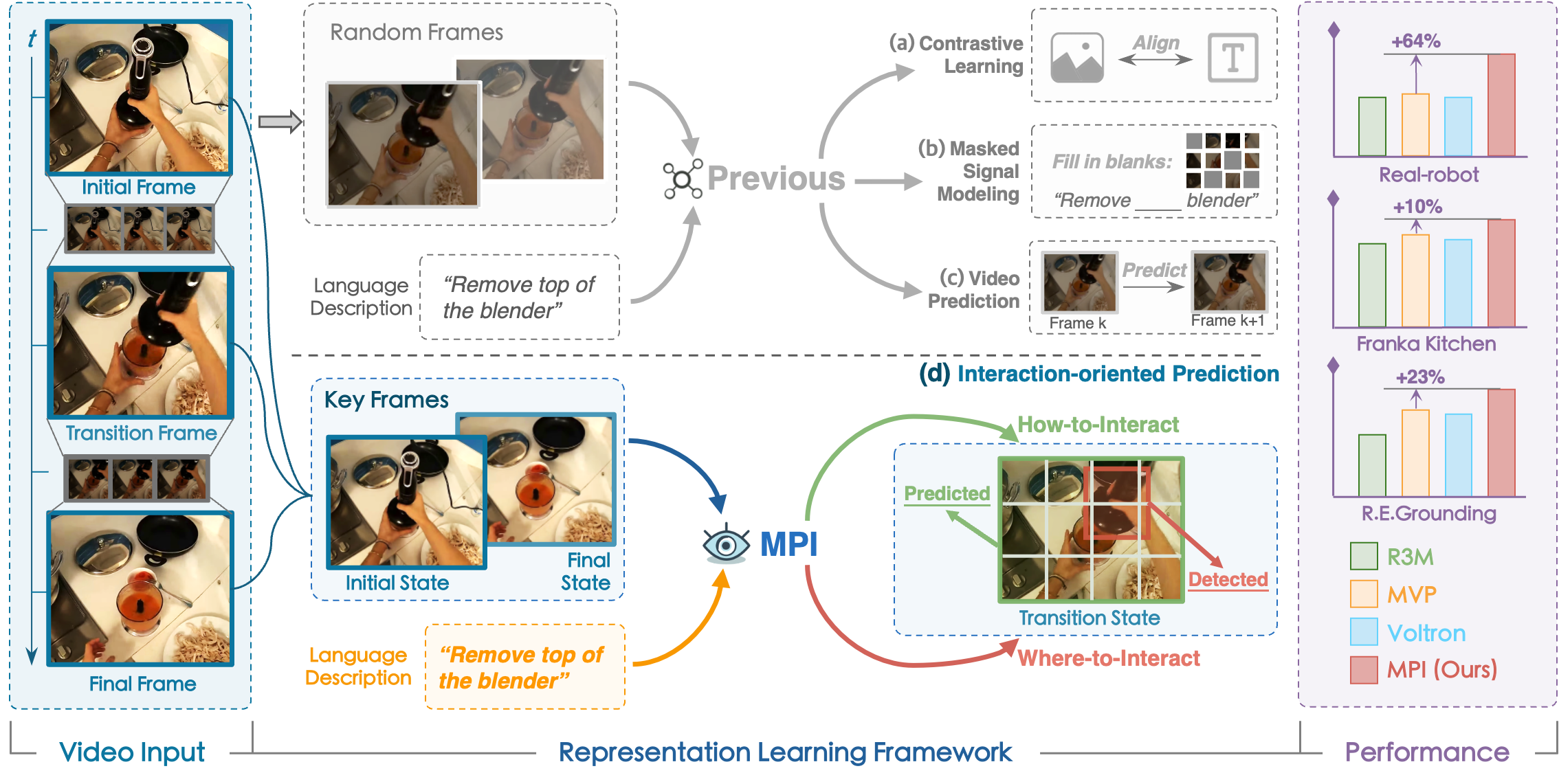

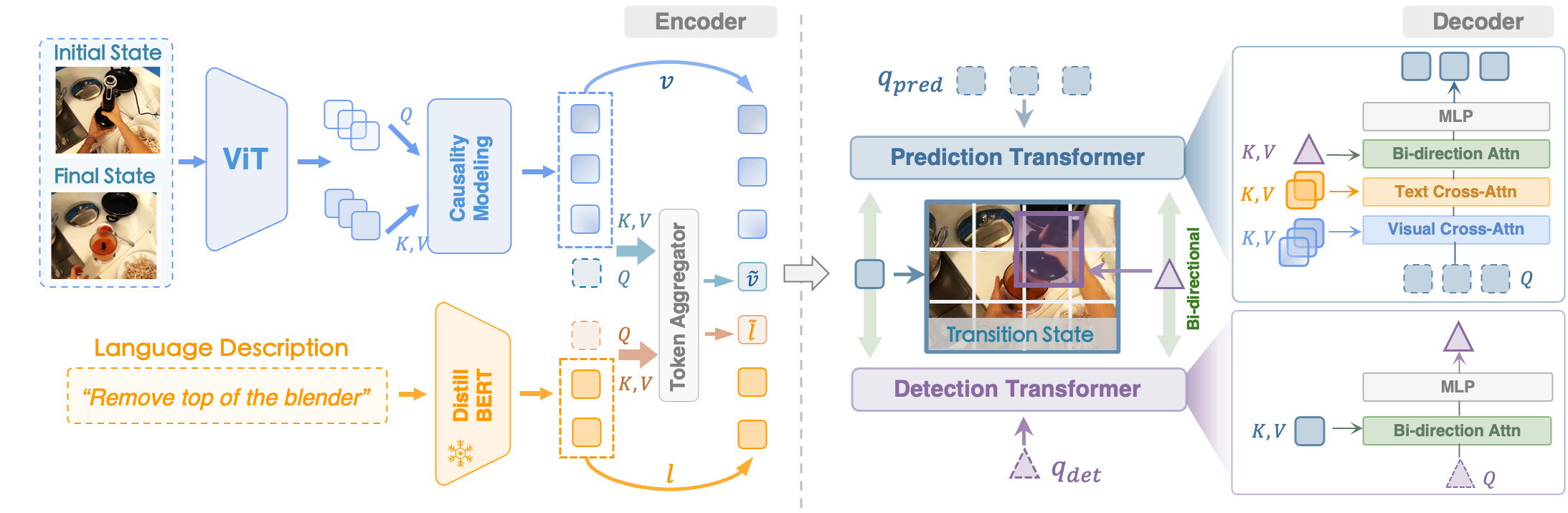

MPI is an interaction-oriented representation learning method for robot manipulation:

- Instructs the model to predict transition frames and detect manipulated objects using keyframes.
- Fosters a better understanding of “how-to-interact” and “where-to-interact”.
- Acquires more informative representations during pre-training and achieves clear improvements across downstream tasks.

Real-world robot experiments in a complex kitchen environment:

| Take the spatula off the shelf (2x speed) | Lift up the pot lid (2x speed) |
|---|---|
| take_the_spatula_2.mp4 | lift_up_the_pot_lid_2.mp4 |
| **Close the drawer (2x speed)** | **Put pot into sink (2x speed)** |
| close_drawer_2.mp4 | mov_pot_into_sink_2.mp4 |

- [2024/05/31] We released the implementation of pre-training and of evaluation on the Referring Expression Grounding task.
- [2024/06/04] We released our paper on arXiv.
- Model weights release.
- Evaluation code on the Franka Kitchen environment.

| Model | Checkpoint | Params. |
|---|---|---|
| MPI-Small | GoogleDrive | 22M |
| MPI-Base | GoogleDrive | 86M |
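The parameter counts above match those of the standard ViT-Small/16 and ViT-Base/16 image encoders. Assuming (this README does not state it) that MPI-Small and MPI-Base use those backbones with 224×224 inputs and 16×16 patches, the hypothetical helper `vit_param_count` below reproduces the figures by counting the weights of a plain ViT encoder. It excludes any decoder or task heads, so it is a sanity check under stated assumptions, not a description of the released checkpoints.

```python
def vit_param_count(dim: int, depth: int, mlp_dim: int,
                    patch: int = 16, img: int = 224, channels: int = 3) -> int:
    """Count the weights of a plain ViT encoder (hypothetical helper; no heads or decoder)."""
    tokens = (img // patch) ** 2 + 1                    # image patches plus one [CLS] token
    patch_embed = patch * patch * channels * dim + dim  # patch projection weight + bias
    per_block = (
        dim * 3 * dim + 3 * dim      # fused q/k/v projection
        + dim * dim + dim            # attention output projection
        + dim * mlp_dim + mlp_dim    # MLP first linear layer
        + mlp_dim * dim + dim        # MLP second linear layer
        + 2 * 2 * dim                # two LayerNorms, each with weight and bias
    )
    cls_token = dim
    pos_embed = tokens * dim         # learned positional embedding per token
    final_norm = 2 * dim             # LayerNorm after the last block
    return patch_embed + cls_token + pos_embed + depth * per_block + final_norm


# Assumed configurations: ViT-S/16 (width 384) and ViT-B/16 (width 768), both 12 blocks.
for name, dim, mlp in [("MPI-Small (assumed ViT-S/16)", 384, 1536),
                       ("MPI-Base (assumed ViT-B/16)", 768, 3072)]:
    print(f"{name}: {vit_param_count(dim, 12, mlp) / 1e6:.1f}M")
```

Under these assumptions, `vit_param_count(384, 12, 1536)` gives 21,665,664 (about 22M) and `vit_param_count(768, 12, 3072)` gives 85,798,656 (about 86M).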

Step 1. Clone and set up the MPI dependency:

```bash
git clone https://github.com/OpenDriveLab/MPI
cd MPI
pip install -e .
```

Step 2. Prepare the language model. You may download DistilBERT from HuggingFace.

Download the Ego4D Hand-and-Object dataset:

```bash
# Download the CLI
pip install ego4d

# Select the Hand-and-Object (FHO) subset
python -m ego4d.cli.cli --output_directory=<path-to-save-dir> --datasets clips annotations --metadata --version v2 --benchmarks FHO
```

Your directory tree should look like this:

```
$<path-to-save-dir>
├── ego4d.json
└── v2
    ├── annotations
    └── clips
```
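Before preprocessing, it can help to confirm that the download matches this layout. The sketch below uses only the standard library; `missing_ego4d_entries` is a hypothetical helper, not part of the MPI repository, and it only checks that the listed paths exist, not what they contain.

```python
from pathlib import Path

# Entries expected under <path-to-save-dir>, taken from the directory tree above.
EXPECTED_ENTRIES = ["ego4d.json", "v2/annotations", "v2/clips"]


def missing_ego4d_entries(root: str) -> list[str]:
    """Return the expected entries that do not exist under `root` (hypothetical helper)."""
    base = Path(root)
    return [rel for rel in EXPECTED_ENTRIES if not (base / rel).exists()]
```

For example, `missing_ego4d_entries("<path-to-save-dir>")` returns an empty list when the layout is complete, and otherwise lists what still needs to be downloaded.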

Preprocess the dataset for pre-training MPI:

```bash
python prepare_dataset.py --root_path <path-to-save-dir>/v2/
```

Then launch pre-training on a single node with 8 processes:

```bash
torchrun --standalone --nnodes 1 --nproc-per-node 8 pretrain.py
```

Step 1. Prepare the OCID-Ref dataset following this repo. Then put the dataset in:

```
./mpi_evaluation/referring_grounding/data/langref
```

Step 2. Initiate evaluation with:

```bash
python mpi_evaluation/referring_grounding/evaluate_refer.py test_only=False iou_threshold=0.5 lr=1e-3 \
    model=\"mpi-small\" \
    save_path=\"MPI-Small-IOU0.5\" \
    eval_checkpoint_path=\"path_to_your/MPI-small-state_dict.pt\" \
    language_model_path=\"path_to_your/distilbert-base-uncased\"
```

Or you can simply use:

```bash
bash mpi_evaluation/referring_grounding/eval_refer.sh
```

Franka Kitchen evaluation: TBD.
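Returning to the Referring Expression Grounding evaluation: the `iou_threshold=0.5` argument suggests the common grounding criterion, in which a predicted box counts as correct when its intersection-over-union (IoU) with the ground-truth box is at or above the threshold. The sketch below illustrates that generic metric, assuming boxes given as `(x1, y1, x2, y2)`; it is not the code in `evaluate_refer.py`, and `box_iou` and `grounding_accuracy` are hypothetical names.

```python
Box = tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: list[Box], gts: list[Box], iou_threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the matching ground truth reaches the threshold."""
    if len(preds) != len(gts):
        raise ValueError("preds and gts must have the same length")
    if not gts:
        return 0.0
    hits = sum(box_iou(p, g) >= iou_threshold for p, g in zip(preds, gts))
    return hits / len(gts)
```

Raising `iou_threshold` makes the metric stricter, since predicted boxes must align more tightly with the annotations to count as hits.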

If you find the project helpful for your research, please consider citing our paper:

```bibtex
@inproceedings{zeng2024mpi,
  title={Learning Manipulation by Predicting Interaction},
  author={Zeng, Jia and Bu, Qingwen and Wang, Bangjun and Xia, Wenke and Chen, Li and Dong, Hao and Song, Haoming and Wang, Dong and Hu, Di and Luo, Ping and Cui, Heming and Zhao, Bin and Li, Xuelong and Qiao, Yu and Li, Hongyang},
  booktitle={Proceedings of Robotics: Science and Systems (RSS)},
  year={2024}
}
```

The code of this work is built upon Voltron and R3M. Thanks for their open-source work!