A user-friendly wrapper around LLMs to help you find, understand, and query your files.

In order to set your environment up to run the code on your local machine, first install all requirements: `pip install -r requirements.txt`

Then, save your OpenAI API key to the environment variable named `OPENAI_API_KEY`.

If you don't have one, get one here.

For Bash: `export OPENAI_API_KEY=...`

For PowerShell: `$env:OPENAI_API_KEY="..."`
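Before running any command, it can help to confirm that the key is actually visible to Python. The snippet below is a minimal sketch and not part of this project; `require_api_key` is a hypothetical helper name used only for illustration.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise if it is missing.

    Hypothetical helper for illustration; this project does not ship it.
    """
    env = os.environ if env is None else env
    # Treat an empty or whitespace-only value the same as an unset variable.
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before running main.py")
    return key
```

Calling `require_api_key()` with no arguments reads from the real environment; passing a plain dictionary makes the check easy to try without touching your shell.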

Preprocess the files that you want AI to ingest.

- Run

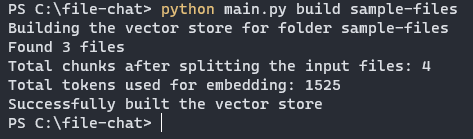

python main.py build {folder_path}. - This will ingest all the

.mdfiles under the given folder. - The result of the preprocessing will be saved to the folder named

cache. - All the subsequent commands depend on the preprocessing result.

- Run

-

You can have a trial run with the sample files under the folder

sample-filesthat come with this project.- Simply run

python main.py build sample-files - The sample files are:

books-i-have-read.mdis a fake note recording the list of books that somebody has read in 2023.Gettysburg-Address.mdis the famous Gettysburg Address by Abraham Lincoln.langchain-README.mdis the README file from LangChain.

- Simply run
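To preview which files a `build` run would pick up, the folder can be listed ahead of time. This is a rough sketch, not the project's actual ingestion code: `find_markdown_files` is a hypothetical name, and it assumes that "under the given folder" includes subfolders.

```python
from pathlib import Path


def find_markdown_files(folder):
    """Return every .md file under `folder`, in sorted order.

    Assumption: subfolders are searched too; the project may behave differently.
    """
    root = Path(folder)
    if not root.is_dir():
        raise NotADirectoryError(f"{folder} is not a folder")
    # rglob walks the folder recursively; is_file() skips directories named *.md.
    return sorted(p for p in root.rglob("*.md") if p.is_file())
```

For example, `find_markdown_files("sample-files")` would list the sample notes before you spend any API calls on preprocessing them.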

- Search for files semantically related to a topic.
  - Command: `python main.py search {topic}`

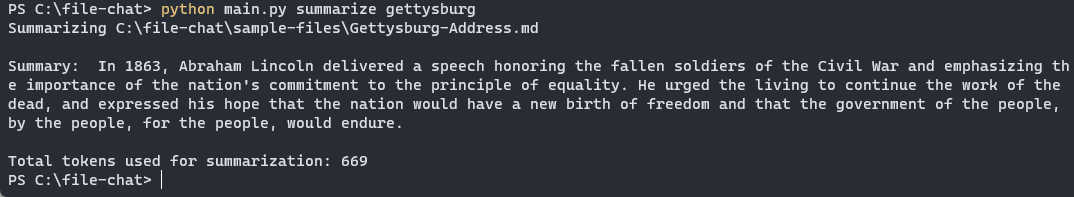

- Summarize a file.
  - Command: `python main.py summarize {file}`
  - `file` can be the file name or the absolute path to the file.
  - Note that `file` is fuzzily matched with the absolute paths, so you don't have to input the exact name or path.

- Query facts in your files using natural language.
  - Command: `python main.py query {question}`

- Chat with an AI assistant that can access your files.
  - Command: `python main.py chat`
  - Available features:
    - Find related files
    - Summarize a file
    - Query facts in the files
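The fuzzy matching of `file` against absolute paths mentioned for `summarize` can be pictured as follows. How this project actually scores matches is not stated here, so the sketch swaps in the standard library's `difflib`; `fuzzy_match_path` and the example paths are hypothetical.

```python
import difflib


def fuzzy_match_path(query, known_paths):
    """Return the path in `known_paths` most similar to `query`, or None if empty.

    Illustration only: uses difflib similarity, which may differ from the
    matching method this project really uses.
    """
    def score(path):
        # Case-insensitive similarity ratio between 0.0 and 1.0.
        return difflib.SequenceMatcher(None, query.lower(), path.lower()).ratio()

    return max(known_paths, key=score, default=None)


# Hypothetical absolute paths for the sample files.
paths = [
    "/home/me/sample-files/books-i-have-read.md",
    "/home/me/sample-files/Gettysburg-Address.md",
    "/home/me/sample-files/langchain-README.md",
]
best = fuzzy_match_path("gettysburg", paths)
```

With a scheme like this, a partial and differently cased name such as `gettysburg` still resolves to the full path of `Gettysburg-Address.md`.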

- This project is built with LangChain, an awesome tool that abstracts the way we invoke LLMs.

- This project is inspired by https://github.com/hwchase17/notion-qa.