The airline industry is undeniably massive, with annual revenue exceeding $800B and 6M travelers per day. A team of four computer engineers from Cairo University has taken it upon themselves to find a recipe for the perfect airline company by answering the paramount question: “What makes airline customers satisfied?” The question is posed as both a data analysis problem and a machine learning problem, which together answer it through exploratory analytics, association rule mining and predictive models.

Our approach to this problem used the following pipeline:

The project uses the following folder structure:

.
├── DataFiles
│   ├── airline-train.csv
│   └── airline-val.csv
├── DataPreparation
│   ├── DataPreparation.py
│   └── Visualization.ipynb
└── ModelPipelines
    ├── Apriori
    │   ├── Apriori.ipynb
    │   └── rules.txt
    ├── NaiveBayes
    │   ├── NaiveBayes.ipynb
    │   └── NaiveBayes.py
    ├── RandomForest
    │   ├── RandomForest.ipynb
    │   └── RandomForest.py
    └── SVC
        ├── SVC.ipynb
        └── SVC.py

Install the dependencies with:

pip install -r requirements.txt

To run any stage of the pipeline, open that stage's folder; each one contains a demonstration notebook. We have also run the project in the cloud via Azure ML Studio. Feel free to contact the developers if you run into any issues there.

We used Kaggle's airline satisfaction dataset; a sample is shown below:

| | Age | Flight Distance | Departure Delay in Minutes | Arrival Delay in Minutes | Gender | Customer Type | Type of Travel | Class | Inflight wifi service | Departure/Arrival time convenient | Ease of Online booking | Gate location | Food and drink | Online boarding | Seat comfort | Inflight entertainment | On-board service | Leg room service | Baggage handling | Checkin service | Inflight service | Cleanliness |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 13 | 460 | 25 | 18.0 | Male | Loyal Customer | Personal Travel | Eco Plus | 3 | 4 | 3 | 1 | 5 | 3 | 5 | 5 | 4 | 3 | 4 | 4 | 5 | 5 |
| 1 | 25 | 235 | 1 | 6.0 | Male | disloyal Customer | Business travel | Business | 3 | 2 | 3 | 3 | 1 | 3 | 1 | 1 | 1 | 5 | 3 | 1 | 4 | 1 |
| 2 | 26 | 1142 | 0 | 0.0 | Female | Loyal Customer | Business travel | Business | 2 | 2 | 2 | 2 | 5 | 5 | 5 | 5 | 4 | 3 | 4 | 4 | 4 | 5 |

Our data preparation module was implemented using Pandas and PySpark and supports the following:

- Reading a specific split of the data (training or validation)

- Reading specific column types from the data (numerical, ordinal or categorical)

- Frequency Encoding for categorical features

- Dropping missing values

- Imputing numerical outliers

Alternative versions of these functions were implemented as well, in case a model required further special preprocessing.
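As a rough, plain-Python illustration of three of these steps, here is a minimal sketch. The actual module is built on Pandas and PySpark; the function names below are hypothetical, and the outlier rule (values outside 1.5×IQR replaced by the median) is our assumption, since the exact imputation rule is not stated here.

```python
from collections import Counter
from statistics import median, quantiles


def frequency_encode(values):
    """Replace each category with its relative frequency in the column."""
    counts = Counter(values)
    n = len(values)
    return [counts[v] / n for v in values]


def drop_missing(rows):
    """Keep only rows (dicts) that contain no missing (None) values."""
    return [r for r in rows if all(v is not None for v in r.values())]


def impute_outliers_iqr(values, k=1.5):
    """Replace values outside [Q1 - k*IQR, Q3 + k*IQR] with the median.

    Assumed rule for illustration only."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    med = median(values)
    return [v if low <= v <= high else med for v in values]
```

For example, `impute_outliers_iqr([1, 2, 3, 4, 100])` keeps the first four values and replaces `100` with the median `3`.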

Rather than querying the dataset for specific facts, we prioritized letting it tell us all the facts. In other words, we let the data speak for itself, and to that end we designed the following analysis workflow:

| Analysis Stage | Components |

|---|---|

| Univariate Analysis | Prior Class Distribution |

| Basics Features Involved | |

| Missing Data Analysis | |

| Central Tendency & Spread | |

| Feature Distirbutions | |

| Feature Distributions per Class | |

| Correlations & Associations | Dependence between Categorical Features |

| Correlations between Categorical & Numerical Features | |

| Monotonic Association between Ordinal Variables | |

| Correlations between Numerical Features | |

| Naive Bayes Assumption | |

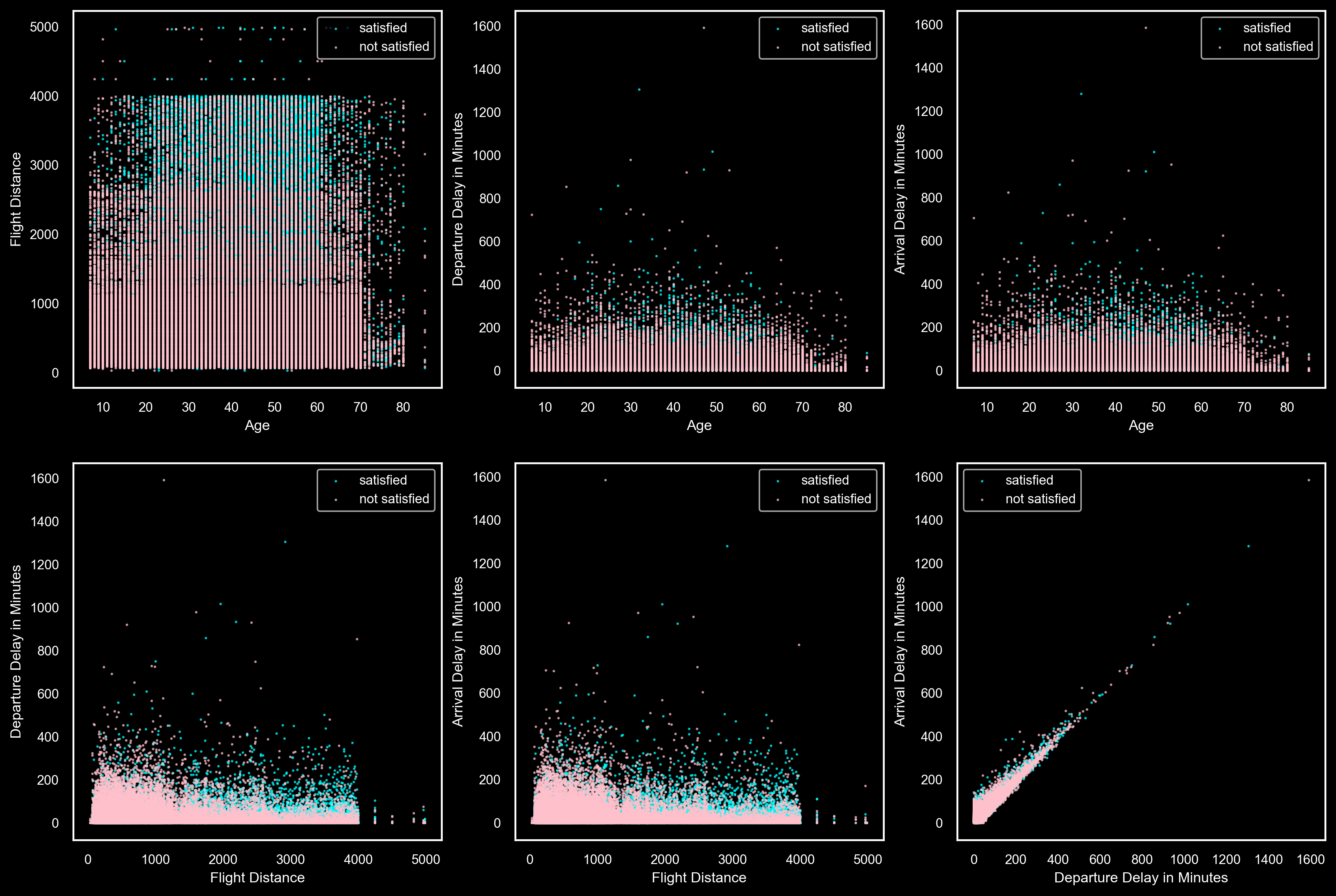

| Multivariate Analysis | Separability & Distribution of Numerical Features |

| Separability & Distribution of Numerical Feature Pairs | |

| Separability & Distribution of Numerical Feature Trios | |

| Seprability and Distribution of Numerical and Categorical Pairs | |

| Seprability and Distribution of Categorical Pairs |

Below, we give a cursory tour of the workflow. The insights corresponding to each visual (over 50 in total) can be found in the demonstration notebook or the report, along with full versions of the visuals and tables.

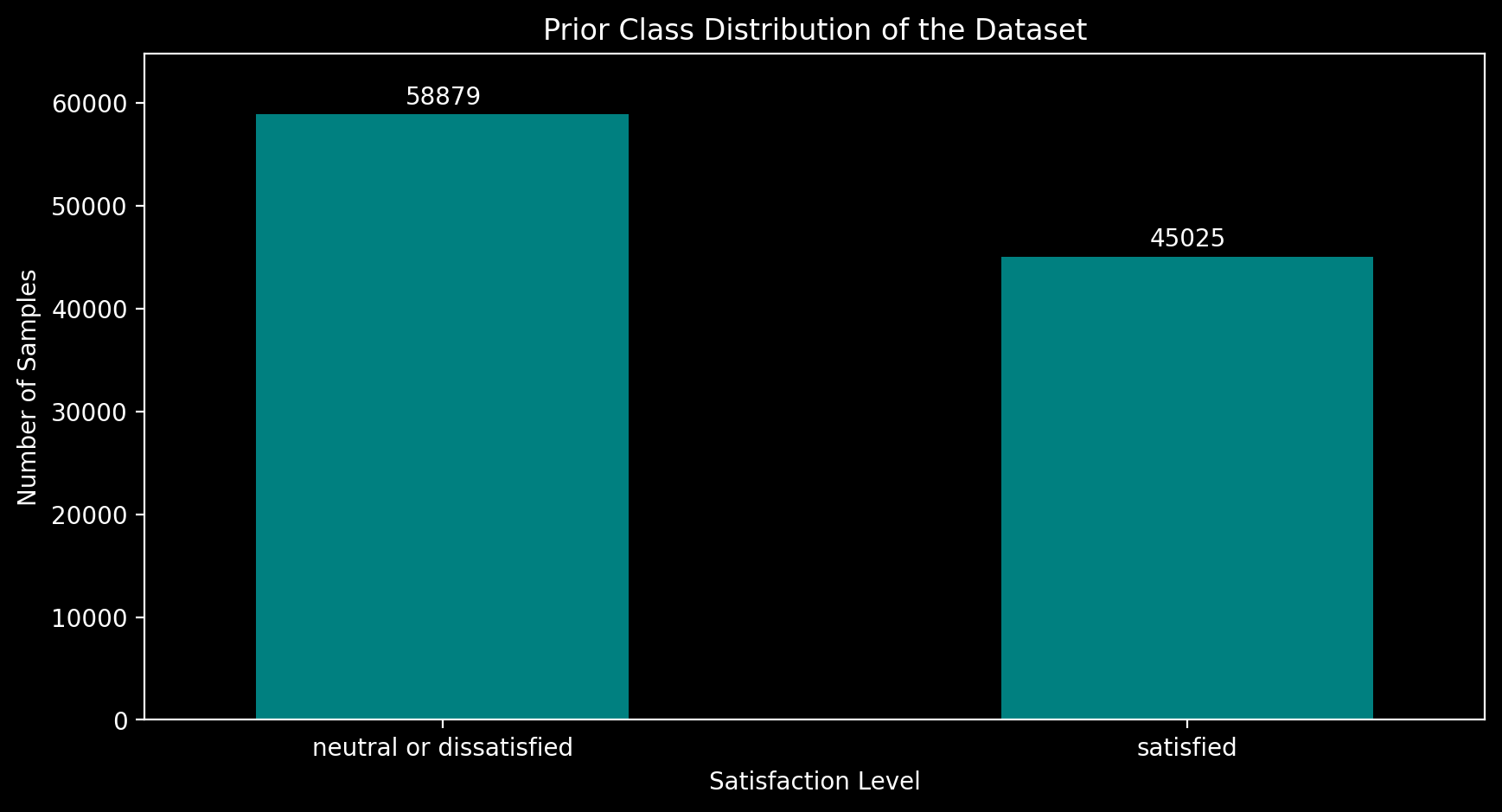

For the prior class distribution, we check whether the satisfaction classes are imbalanced across the passengers in the dataset.
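The prior class distribution is simply the share of samples in each satisfaction class. A minimal sketch on toy labels (the label strings follow the dataset's `satisfied` and `neutral or dissatisfied` values):

```python
from collections import Counter


def class_distribution(labels):
    """Return each class's share of the samples, to check for class imbalance."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


labels = ["satisfied"] + ["neutral or dissatisfied"] * 3
print(class_distribution(labels))
```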

For the basic features involved, the table below gives a description, type and possible values for each feature.

| Variable Name | Variable Description | Variable Type | Values |
|---|---|---|---|
| Gender | Gender of the passenger | Nominal | Female, Male |
| Customer Type | The customer type | Nominal | Loyal customer, Disloyal customer |
| Age | Age of the passenger | Numerical | - |
| Type of Travel | Purpose of the passenger's flight | Nominal | Personal Travel, Business Travel |
| Class | Travel class of the passenger in the plane | Nominal | Business, Eco, Eco Plus |
| Flight Distance | The flight distance of this journey | Numerical | - |
| Inflight wifi service | Satisfaction level with the inflight wifi service | Ordinal | 1, 2, 3, 4, 5 |
| Departure/Arrival time convenient | Satisfaction level with the convenience of the departure/arrival time | Ordinal | 1, 2, 3, 4, 5 |
| Ease of Online booking | Satisfaction level with online booking | Ordinal | 1, 2, 3, 4, 5 |
| Gate location | Satisfaction level with the gate location | Ordinal | 1, 2, 3, 4, 5 |
| Food and drink | Satisfaction level with food and drink | Ordinal | 1, 2, 3, 4, 5 |
| Online boarding | Satisfaction level with online boarding | Ordinal | 1, 2, 3, 4, 5 |
| Seat comfort | Satisfaction level with seat comfort | Ordinal | 1, 2, 3, 4, 5 |
| Inflight entertainment | Satisfaction level with inflight entertainment | Ordinal | 1, 2, 3, 4, 5 |
| On-board service | Satisfaction level with on-board service | Ordinal | 1, 2, 3, 4, 5 |
| Leg room service | Satisfaction level with leg room service | Ordinal | 1, 2, 3, 4, 5 |
| Baggage handling | Satisfaction level with baggage handling | Ordinal | 1, 2, 3, 4, 5 |
| Check-in service | Satisfaction level with check-in service | Ordinal | 1, 2, 3, 4, 5 |
| Inflight service | Satisfaction level with inflight service | Ordinal | 1, 2, 3, 4, 5 |
| Cleanliness | Satisfaction level with cleanliness | Ordinal | 1, 2, 3, 4, 5 |
| Departure Delay in Minutes | Minutes of delay at departure | Numerical | - |
| Arrival Delay in Minutes | Minutes of delay at arrival | Numerical | - |
| Satisfaction | Airline satisfaction level | Nominal | Satisfied, Neutral or dissatisfied |

For the missing data analysis, we check each feature for missing values; only Arrival Delay in Minutes has any (310 rows).

| | Age | Flight Distance | Departure Delay in Minutes | Arrival Delay in Minutes | Gender | Customer Type | Type of Travel | Class | Inflight wifi service | Departure/Arrival time convenient | Ease of Online booking | Gate location | Food and drink | Online boarding | Seat comfort | Inflight entertainment | On-board service | Leg room service | Baggage handling | Checkin service | Inflight service | Cleanliness |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Missing Count | 0 | 0 | 0 | 310 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
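With Pandas, counting missing values per column is a one-liner (`df.isna().sum()`). The plain-Python sketch below does the same for rows read as dictionaries; treating `None` or an empty string as "missing" is an assumption about how missing cells are represented after loading.

```python
def missing_counts(rows, columns):
    """Count missing entries (None or empty string) per column of dict rows."""
    return {col: sum(1 for r in rows if r.get(col) in (None, "")) for col in columns}


rows = [
    {"Age": 13, "Arrival Delay in Minutes": 18.0},
    {"Age": 25, "Arrival Delay in Minutes": None},  # a missing arrival delay
]
print(missing_counts(rows, ["Age", "Arrival Delay in Minutes"]))
```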

For each type of feature, we consider measures of central tendency and spread. The following example covers the numerical features.

| | Age | Flight Distance | Departure Delay in Minutes | Arrival Delay in Minutes |
|---|---|---|---|---|
| count | 103904.000000 | 103904.000000 | 103904.000000 | 103594.000000 |
| mean | 39.379706 | 1189.448375 | 14.815618 | 15.178678 |
| std | 15.114964 | 997.147281 | 38.230901 | 38.698682 |
| min | 7.000000 | 31.000000 | 0.000000 | 0.000000 |
| 25% | 27.000000 | 414.000000 | 0.000000 | 0.000000 |
| 50% | 40.000000 | 843.000000 | 0.000000 | 0.000000 |
| 75% | 51.000000 | 1743.000000 | 12.000000 | 13.000000 |
| max | 85.000000 | 4983.000000 | 1592.000000 | 1584.000000 |
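The table has the shape of Pandas' `describe()` output. A minimal plain-Python sketch of the same statistics for one column, skipping missing values (which is why the Arrival Delay count is lower). It uses the sample standard deviation and `method="inclusive"` quantiles, which to our understanding match Pandas' defaults (ddof=1, linear interpolation).

```python
from statistics import mean, quantiles, stdev


def describe(values):
    """describe()-style summary of one numerical column, ignoring None values."""
    xs = [v for v in values if v is not None]
    q1, q2, q3 = quantiles(xs, n=4, method="inclusive")
    return {
        "count": len(xs),
        "mean": mean(xs),
        "std": stdev(xs),  # sample standard deviation (ddof=1)
        "min": min(xs),
        "25%": q1,
        "50%": q2,
        "75%": q3,
        "max": max(xs),
    }


print(describe([0, 0, 0, 12, 13, None]))
```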

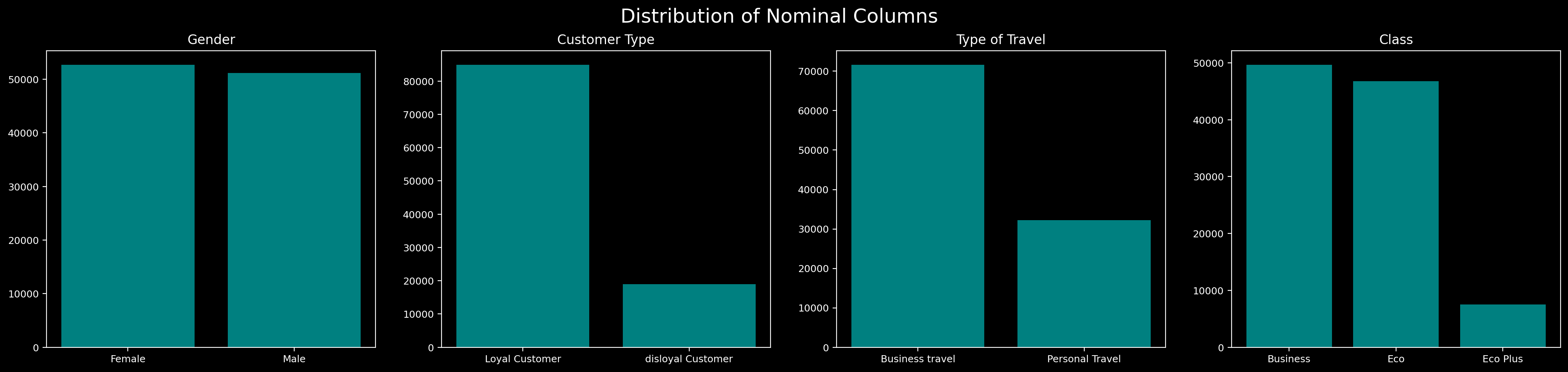

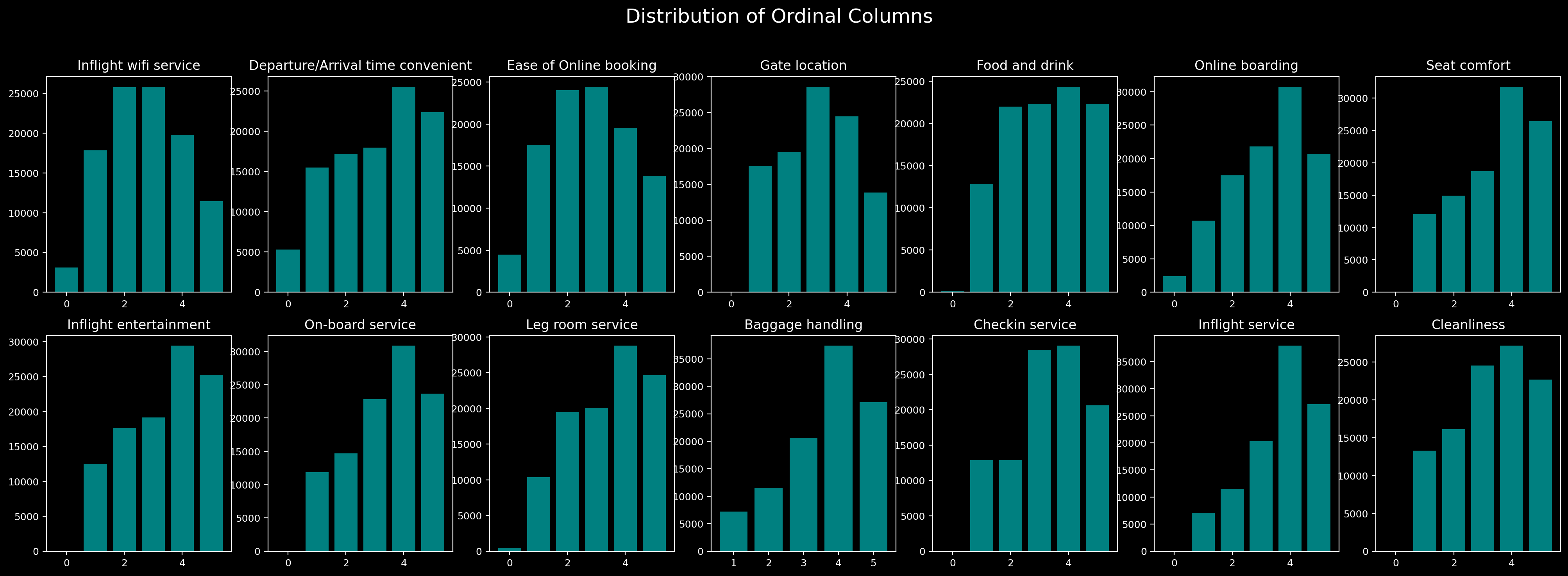

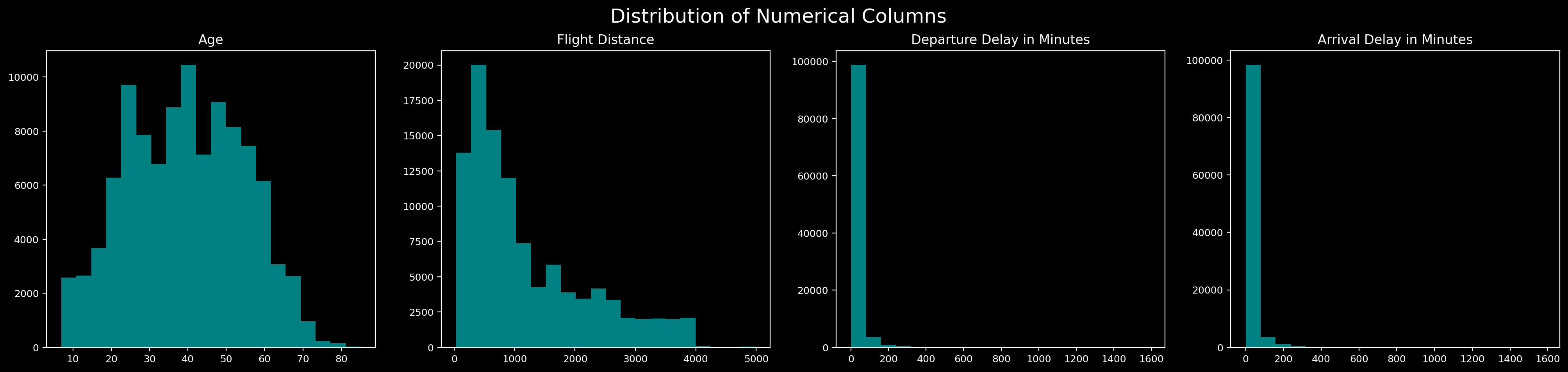

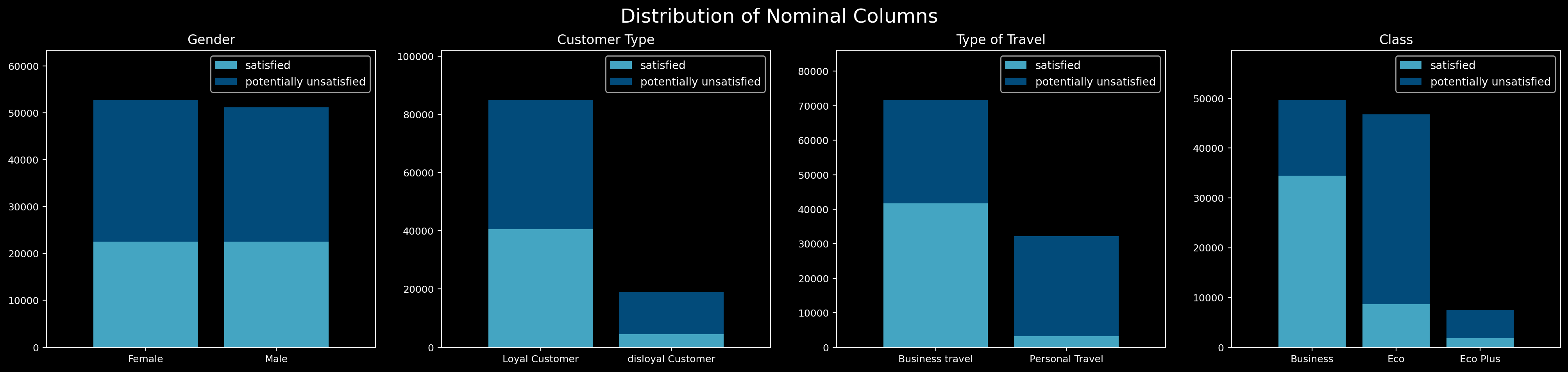

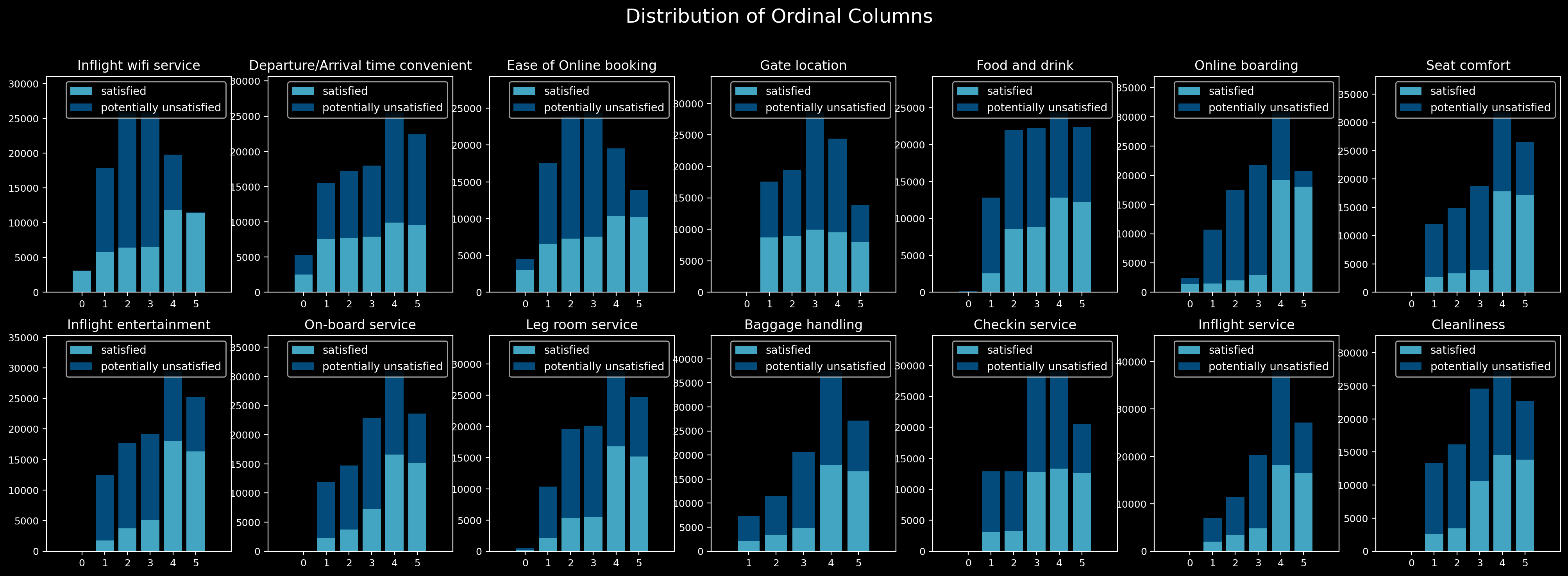

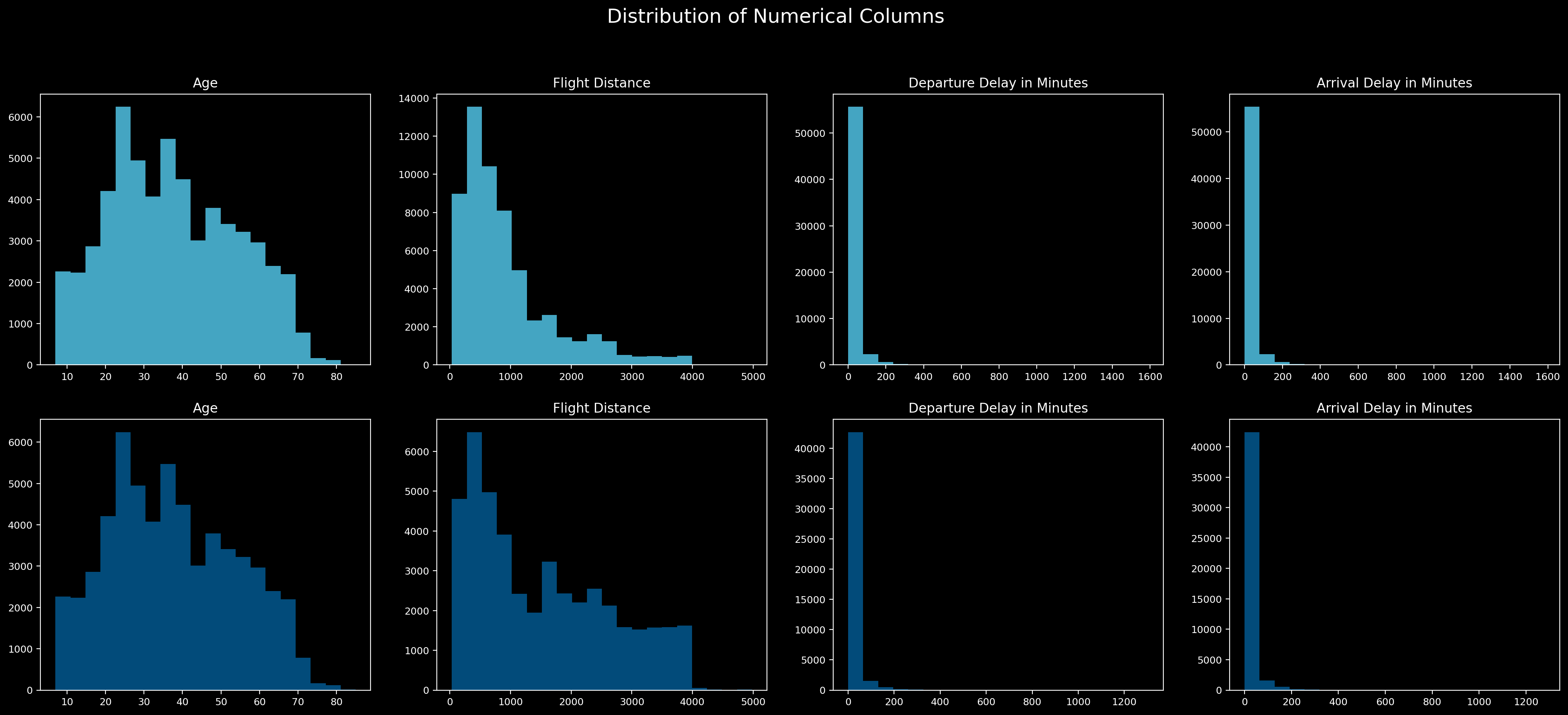

For the feature distributions, we examine the distribution of each feature to look for notable patterns.

For the feature distributions per class, the same analysis is repeated separately for each satisfaction class.
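Per-class distributions amount to grouping a feature by the target and normalizing the counts within each group. A minimal sketch for a categorical or ordinal feature (the function name is hypothetical):

```python
from collections import Counter, defaultdict


def distribution_per_class(feature_values, labels):
    """Relative frequency of each feature value within each class."""
    by_class = defaultdict(list)
    for value, label in zip(feature_values, labels):
        by_class[label].append(value)
    result = {}
    for label, values in by_class.items():
        counts = Counter(values)
        result[label] = {v: c / len(values) for v, c in sorted(counts.items())}
    return result


wifi = [5, 5, 1, 2]  # toy "Inflight wifi service" ratings
labels = ["satisfied", "satisfied", "neutral or dissatisfied", "neutral or dissatisfied"]
print(distribution_per_class(wifi, labels))
```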

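We used the chi-square test of independence, which tests whether there is a relationship between two categorical variables. In particular, the null hypothesis $H_0$ is that the two variables are independent, and the alternative $H_1$ is that they are dependent. The table below gives the resulting p-values for each pair of categorical features; a small p-value is evidence against independence.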

| | Gender | Customer Type | Type of Travel | Class |
|---|---|---|---|---|
| Gender | 0.0 | 0.0 | 0.026398 | 0.000119 |
| Customer Type | 0.0 | 0.0 | 0.0 | 0.0 |
| Type of Travel | 0.026398 | 0.0 | 0.0 | 0.0 |
| Class | 0.000119 | 0.0 | 0.0 | 0.0 |
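A minimal sketch of the test: the statistic is $\sum (O - E)^2 / E$ over the contingency table, with $E = \text{row total} \times \text{column total} / n$ and $(r-1)(c-1)$ degrees of freedom. Since Class has three levels and the other categorical features two, every pair here has 1 or 2 degrees of freedom, where the chi-square survival function has a closed form; this sketch only handles those two cases. Note that `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2×2 tables by default, so its values can differ slightly from this uncorrected version.

```python
from collections import Counter
from math import erfc, exp, sqrt


def chi_square_independence(xs, ys):
    """Chi-square test of independence; returns (statistic, dof, p_value).

    The p-value uses closed forms valid only for 1 or 2 degrees of freedom."""
    n = len(xs)
    observed = Counter(zip(xs, ys))
    x_tot, y_tot = Counter(xs), Counter(ys)
    stat = 0.0
    for a in x_tot:
        for b in y_tot:
            expected = x_tot[a] * y_tot[b] / n
            stat += (observed[(a, b)] - expected) ** 2 / expected
    dof = (len(x_tot) - 1) * (len(y_tot) - 1)
    if dof == 1:
        p = erfc(sqrt(stat / 2))  # P(chi2_1 > stat)
    elif dof == 2:
        p = exp(-stat / 2)  # P(chi2_2 > stat)
    else:
        raise NotImplementedError("closed-form p-value only for dof 1 or 2")
    return stat, dof, p


gender = ["Male"] * 50 + ["Female"] * 50
travel = ["Business travel"] * 50 + ["Personal Travel"] * 50
print(chi_square_independence(gender, travel))  # perfectly dependent toy data
```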

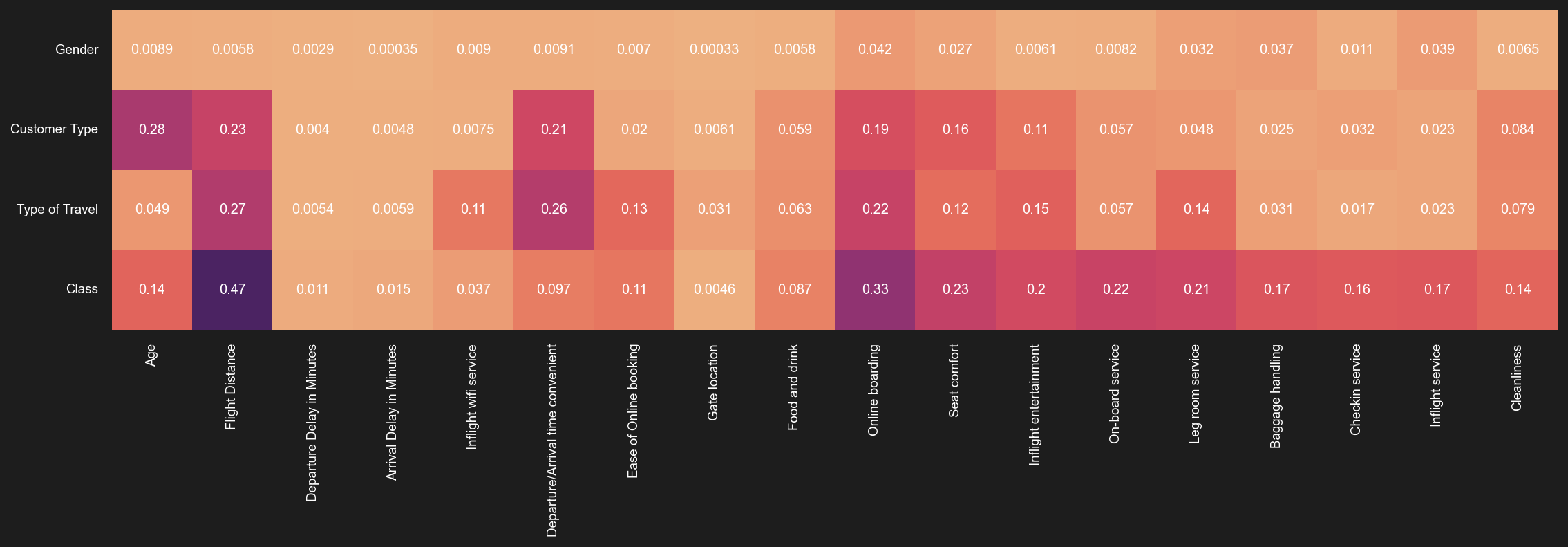

We used Pearson's correlation ratio to measure the association between every numerical feature and every categorical feature.
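The correlation ratio $\eta$ compares how far the per-category means sit from the overall mean with the total spread: $\eta^2 = \sum_c n_c(\bar{y}_c - \bar{y})^2 / \sum_i (y_i - \bar{y})^2$, so $\eta$ lies in $[0, 1]$. A minimal sketch:

```python
from collections import defaultdict
from math import sqrt


def correlation_ratio(categories, values):
    """Pearson's correlation ratio (eta) between a categorical and a numerical variable."""
    groups = defaultdict(list)
    for c, v in zip(categories, values):
        groups[c].append(v)
    overall_mean = sum(values) / len(values)
    # Between-group sum of squares: category sizes times squared mean offsets.
    ss_between = sum(len(g) * (sum(g) / len(g) - overall_mean) ** 2 for g in groups.values())
    ss_total = sum((v - overall_mean) ** 2 for v in values)
    return sqrt(ss_between / ss_total) if ss_total > 0 else 0.0


print(correlation_ratio(["Eco", "Eco", "Business", "Business"], [1, 1, 5, 5]))
```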

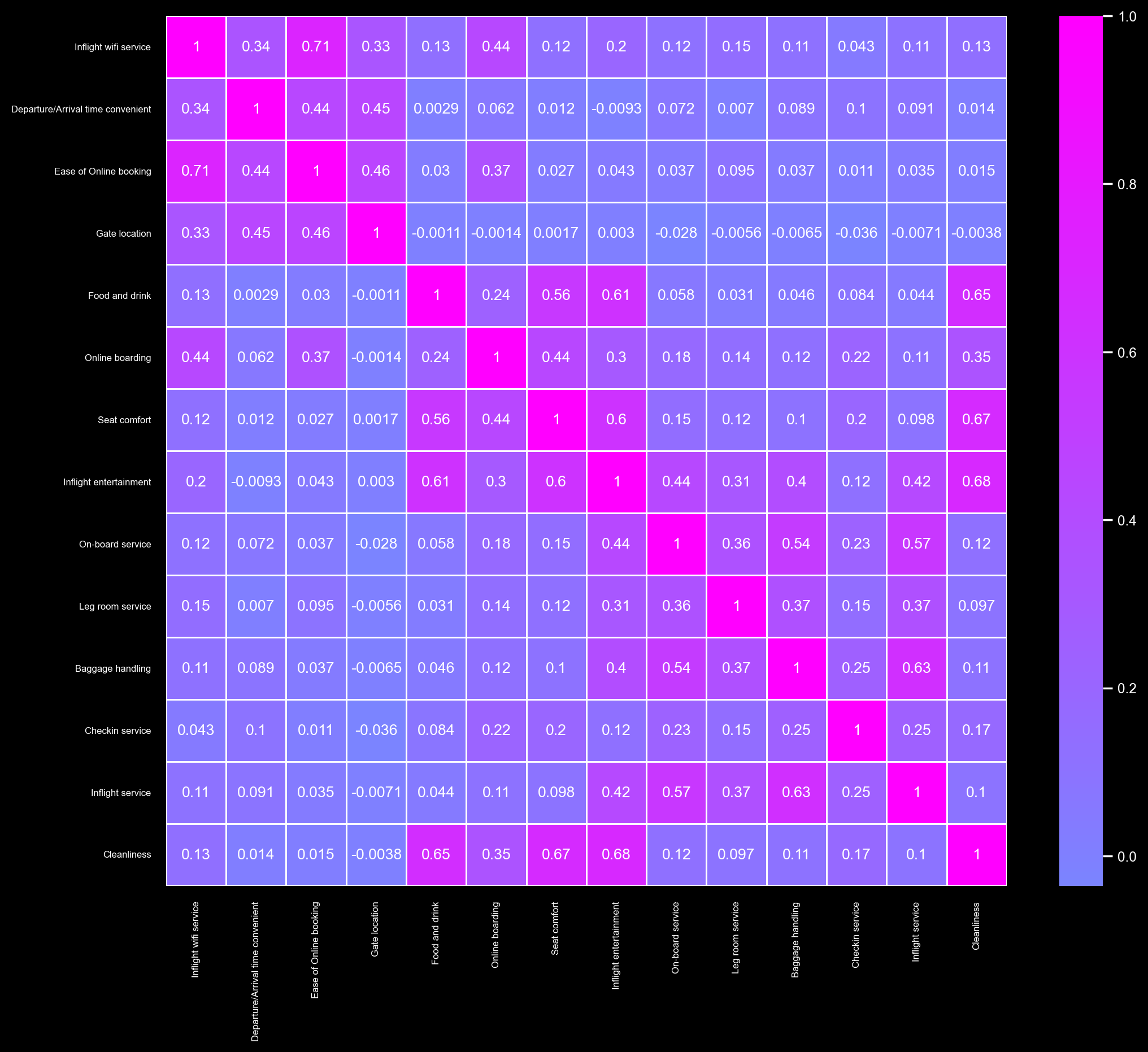

We used Spearman's rank correlation to find monotonic associations between ordinal variables.

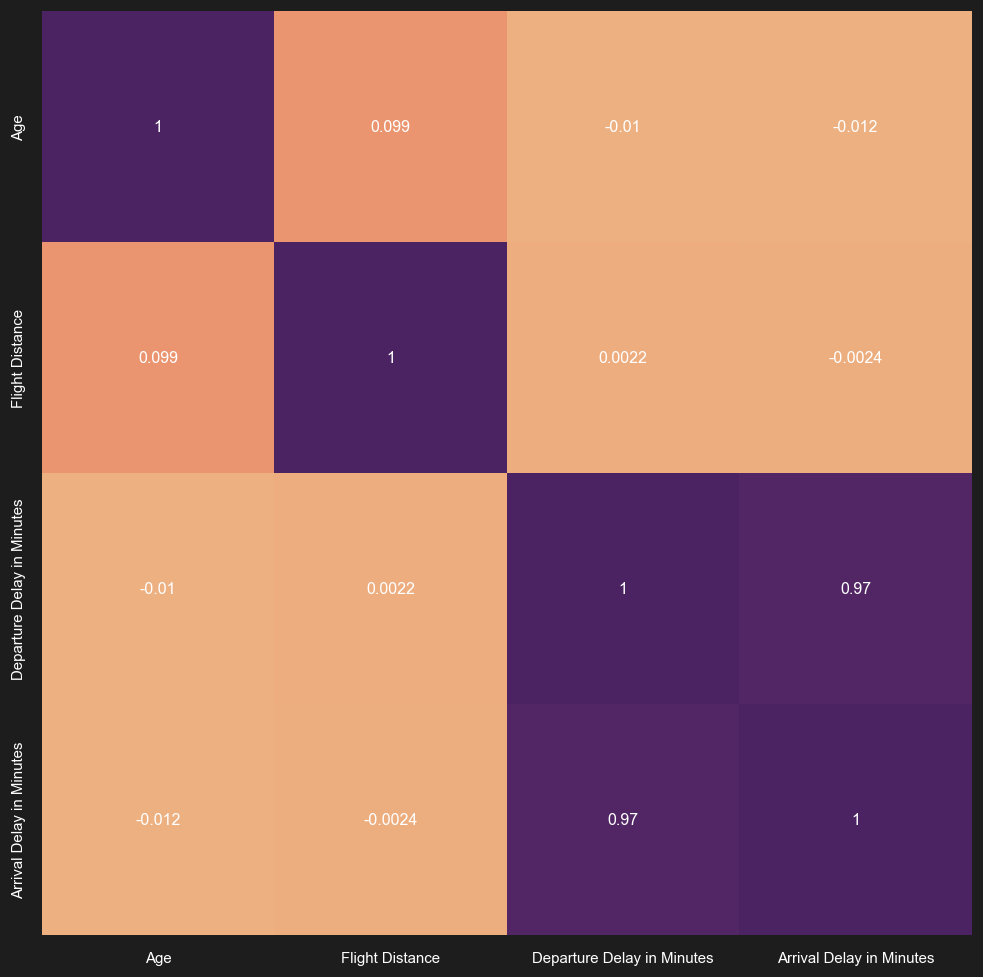

We used Pearson's correlation coefficient to study linear correlation between numerical variables.
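The two coefficients are closely related: Spearman's correlation is Pearson's correlation computed on ranks. Because the 1 to 5 ordinal ratings contain many ties, tied values should share the average of their ranks. A minimal sketch of both:

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson's linear correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def average_ranks(values):
    """Ranks starting at 1; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rank correlation: Pearson's correlation of tie-averaged ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


print(average_ranks([3, 1, 3, 5]))
print(spearman([1, 2, 3, 4], [1, 4, 9, 16]))  # monotonic but not linear
```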

Closely related to dependence is testing the Naive Bayes assumption. We have provided an automated check for it, with the following sample output:

As expected, the Naive Bayes assumption does not hold. In particular, we have that

Likewise, for the class

This does not stop us from using the model, as research has shown that it can be robust to violations of this assumption.
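The Naive Bayes assumption states that features are conditionally independent given the class, i.e. $P(x_i, x_j \mid y) = P(x_i \mid y)\,P(x_j \mid y)$. One simple empirical check, sketched below, measures the largest gap between the two sides within each class. This is an illustration only, not necessarily how the project's automated check works; running a chi-square test within each class would be a more formal alternative.

```python
from collections import Counter


def conditional_dependence_gap(xi, xj, labels):
    """Largest |P(xi, xj | y) - P(xi | y) * P(xj | y)| over classes and value pairs.

    A gap of 0 means the pair is conditionally independent given the class in this sample."""
    gap = 0.0
    for y in set(labels):
        idx = [k for k, lab in enumerate(labels) if lab == y]
        n = len(idx)
        joint = Counter((xi[k], xj[k]) for k in idx)
        pi = Counter(xi[k] for k in idx)
        pj = Counter(xj[k] for k in idx)
        for a in pi:
            for b in pj:
                gap = max(gap, abs(joint[(a, b)] / n - (pi[a] / n) * (pj[b] / n)))
    return gap


same_class = ["satisfied"] * 4
print(conditional_dependence_gap([1, 2, 1, 2], [1, 2, 1, 2], same_class))  # fully dependent pair
```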

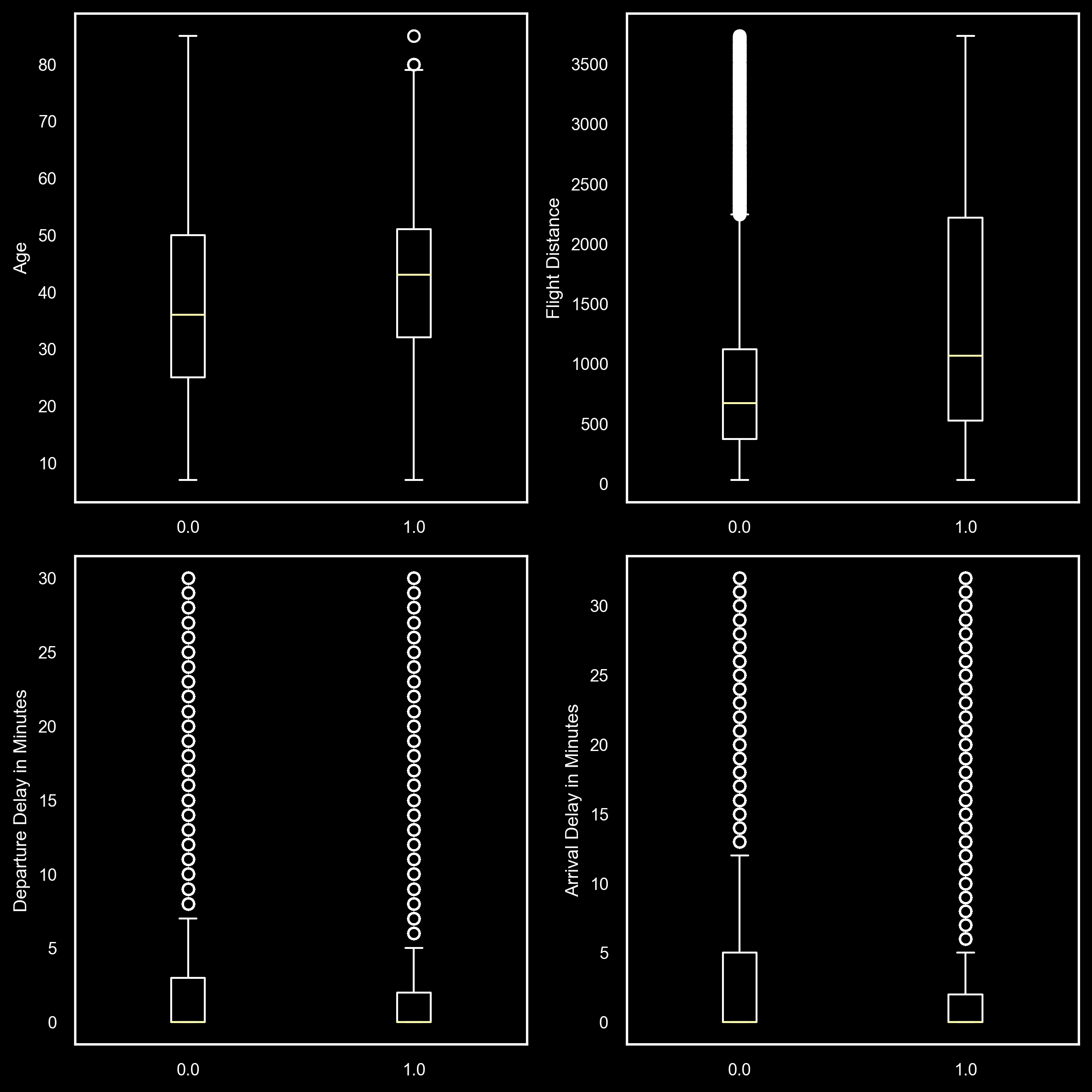

We prepared box plots to study the distribution of each numerical feature for each class of the target.
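A box plot per class is built from the quartiles of the feature within each class. A minimal sketch computing the five-number summary per class; plotting libraries often stop the whiskers at 1.5×IQR rather than at the minimum and maximum, so the drawn whiskers may differ.

```python
from collections import defaultdict
from statistics import quantiles


def box_stats_per_class(values, labels):
    """Five-number summary (min, Q1, median, Q3, max) of a numerical feature per class."""
    by_class = defaultdict(list)
    for v, lab in zip(values, labels):
        by_class[lab].append(v)
    stats = {}
    for lab, vs in by_class.items():
        q1, q2, q3 = quantiles(vs, n=4, method="inclusive")
        stats[lab] = (min(vs), q1, q2, q3, max(vs))
    return stats


ages = [1, 2, 3, 4, 5, 10, 20, 30, 40, 50]
labels = ["satisfied"] * 5 + ["neutral or dissatisfied"] * 5
print(box_stats_per_class(ages, labels))
```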

We then analyzed all pairs of numerical features to see whether separability improves.

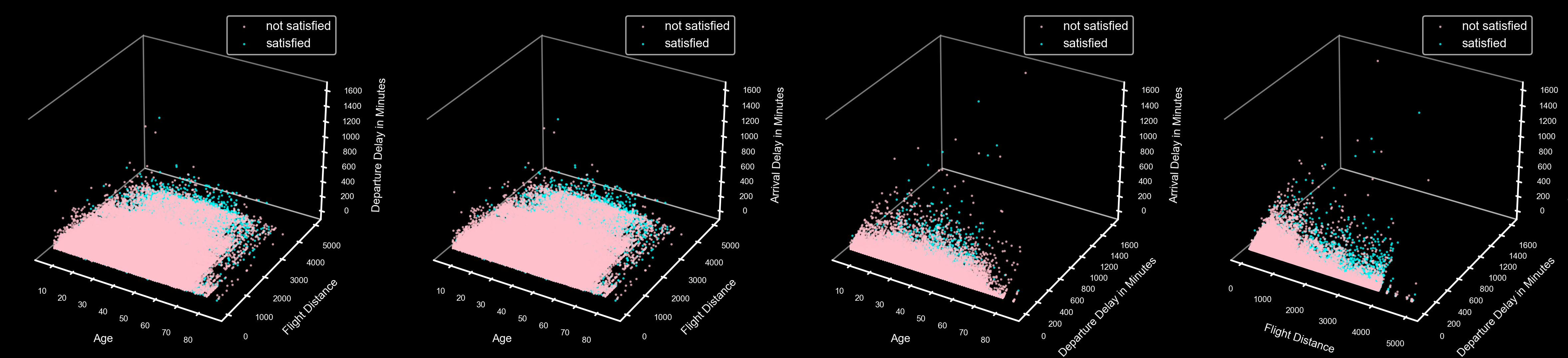

Next, we considered all trios of numerical features.

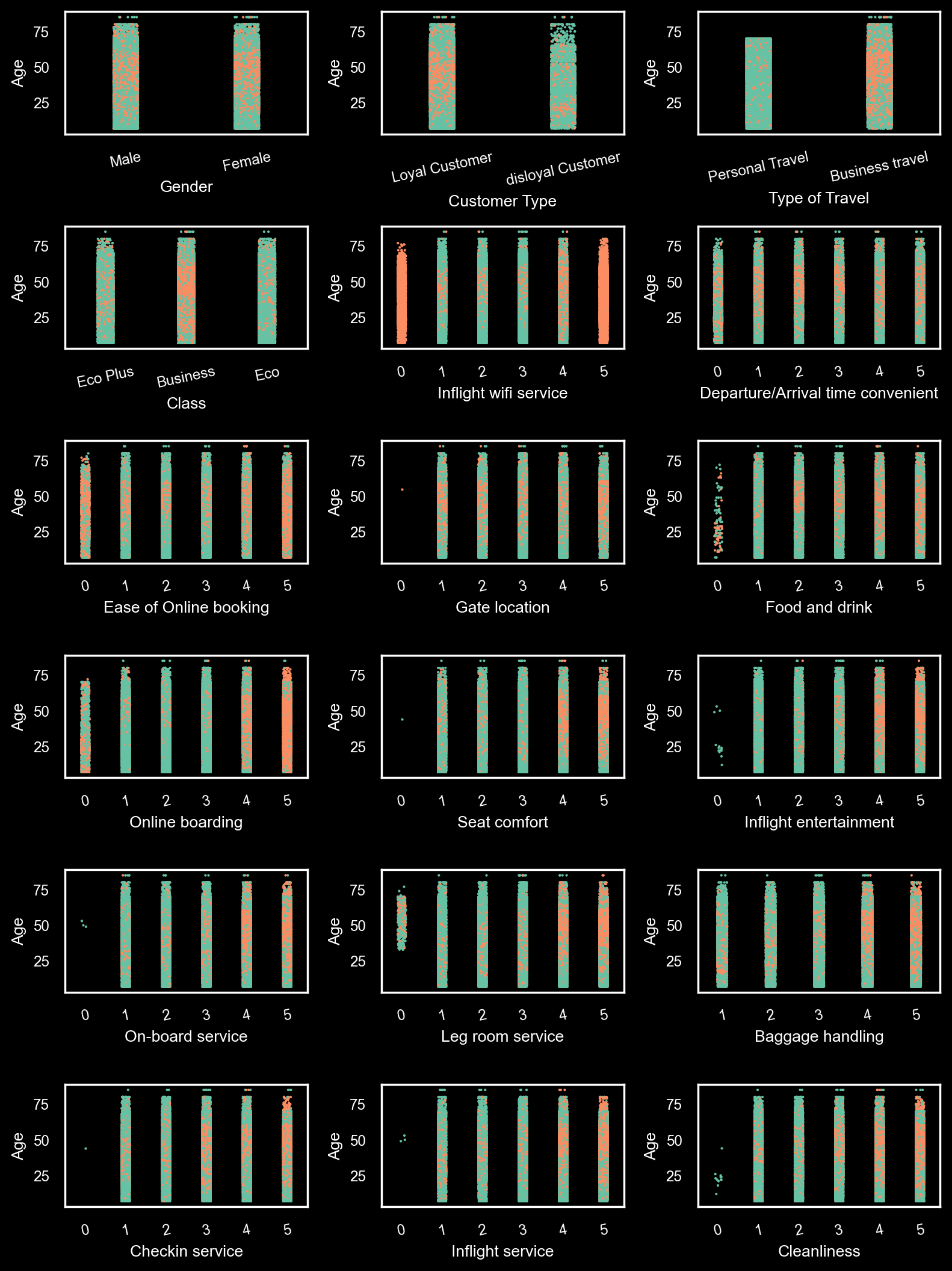
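With the four numerical features, there are $\binom{4}{2} = 6$ pairs and $\binom{4}{3} = 4$ trios to plot. A minimal sketch of enumerating them:

```python
from itertools import combinations

NUMERICAL = ["Age", "Flight Distance", "Departure Delay in Minutes", "Arrival Delay in Minutes"]


def feature_subsets(features, size):
    """All unordered groups of `size` features, e.g. pairs (2) or trios (3)."""
    return list(combinations(features, size))


for pair in feature_subsets(NUMERICAL, 2):
    print(pair)
```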

We then examined interactions between numerical and categorical features over all possible pairs. The full plot in the notebook is much longer.

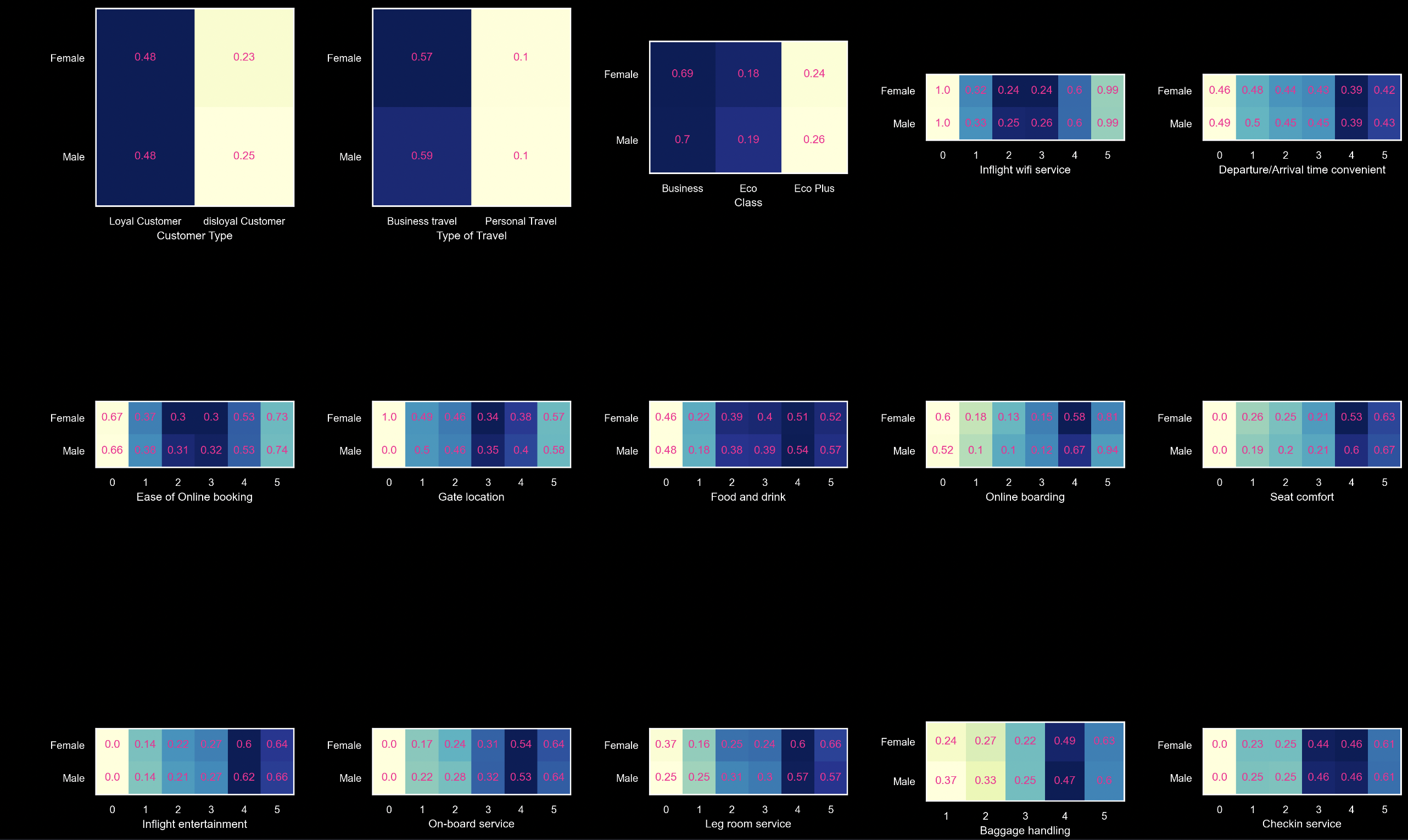

Finally, we examined interactions among all pairs of categorical features. Viewing the full version in the notebook requires a large amount of RAM.

The abundance of categorical features, and their significance as shown above, motivated us to consider Naive Bayes and Random Forest as predictive models. We later followed up with an SVM model, since ordinal features can also be treated as numerical. Before employing these models, however, we topped off our exploratory data analysis with association rule learning.

For this, numerical features (excluding extremely skewed ones) were converted to categorical ones by binning and then all the categorical features were one-hot encoded.
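A minimal plain-Python sketch of this step followed by Apriori. Each row becomes the set of `feature = value` items that are "on", which is equivalent to its one-hot encoding. The age cut points in `bin_age` are hypothetical (the project's bins are not stated here), and the sketch does not claim to mirror the project's Apriori notebook.

```python
from itertools import combinations


def bin_age(age):
    """Hypothetical age bins, for illustration only."""
    if age < 18:
        return "child"
    if age < 60:
        return "adult"
    return "senior"


def to_transaction(row):
    """One-hot view of a row: the set of 'feature = value' items that are on."""
    return frozenset(f"{feature} = {value}" for feature, value in row.items())


def apriori(transactions, min_support):
    """Frequent itemsets (frozenset -> support), found level by level."""
    n = len(transactions)

    def frequent_only(candidates):
        # Keep candidates contained in at least min_support of the transactions.
        result = {}
        for c in candidates:
            sup = sum(1 for t in transactions if c <= t) / n
            if sup >= min_support:
                result[c] = sup
        return result

    level = frequent_only({frozenset([item]) for t in transactions for item in t})
    frequent = dict(level)
    k = 2
    while level:
        prev = list(level)
        # Join step: unions of two frequent (k-1)-itemsets with exactly k items.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in level for s in combinations(c, k - 1))}
        level = frequent_only(candidates)
        frequent.update(level)
        k += 1
    return frequent


rows = [
    {"Class": "Eco", "Age": bin_age(30), "satisfaction": "neutral or dissatisfied"},
    {"Class": "Eco", "Age": bin_age(45), "satisfaction": "neutral or dissatisfied"},
    {"Class": "Business", "Age": bin_age(40), "satisfaction": "satisfied"},
    {"Class": "Eco", "Age": bin_age(10), "satisfaction": "satisfied"},
]
for itemset, support in apriori([to_transaction(r) for r in rows], 0.5).items():
    print(sorted(itemset), support)
```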

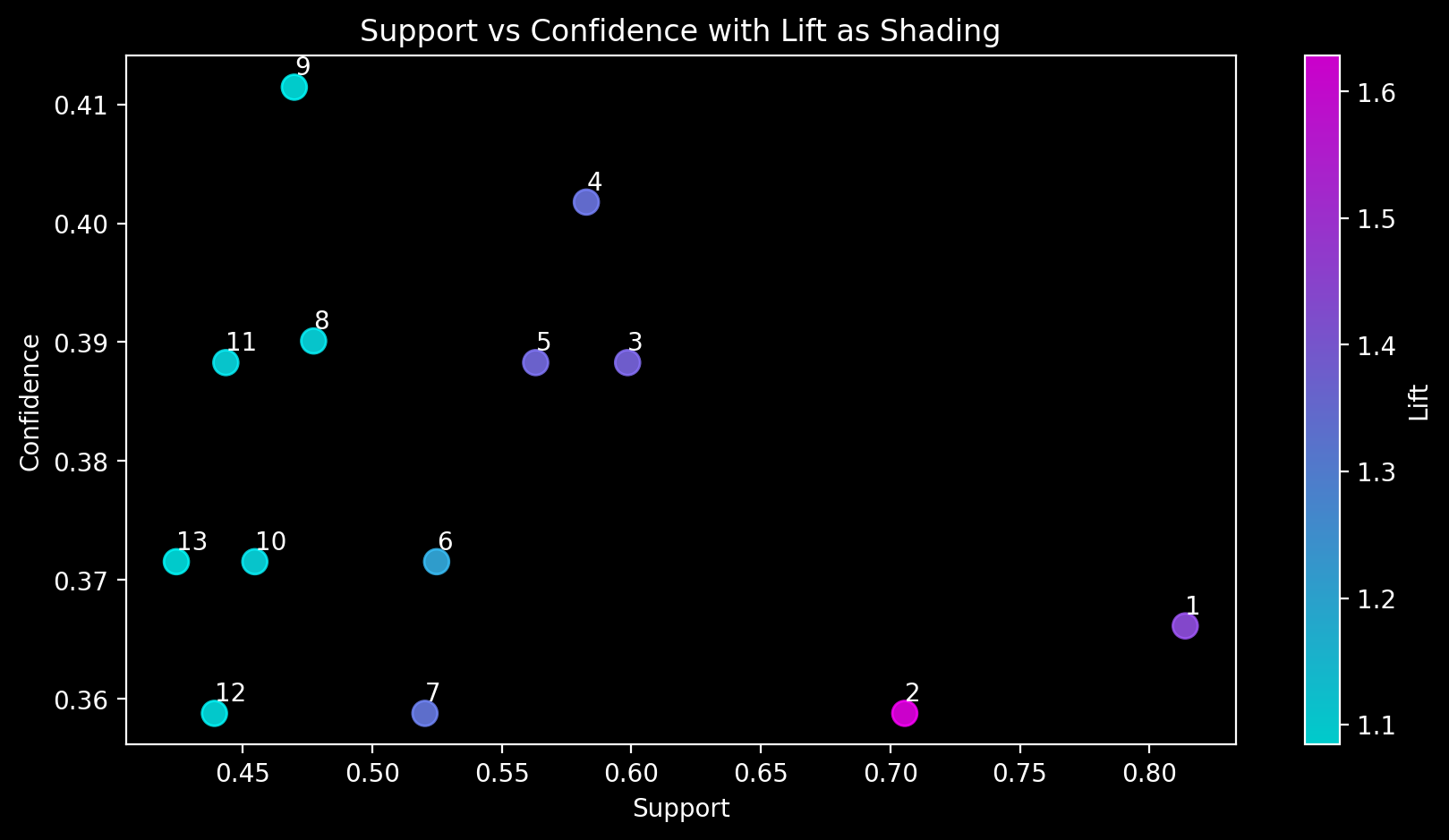

The following shows a sample of the strongest rules found:

| | antecedents | consequents | support | confidence | lift |
|---|---|---|---|---|---|
| 1 | Class = Eco | satisfaction = neutral or dissatisfied | 0.366146 | 0.813862 | 1.436226 |
| 2 | Type of Travel = Business travel, Customer Type = Loyal Customer | satisfaction = satisfied | 0.358793 | 0.705553 | 1.628201 |
| 3 | Age = adult, Type of Travel = Business travel | satisfaction = satisfied | 0.388281 | 0.598531 | 1.381228 |

The corresponding graphic over all strong rules is a color plot of support against confidence, where the color represents lift and each number refers to a rule in the table above.
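For reference, the three metrics are support $= P(A \cup C)$, confidence $= P(A \cup C)/P(A)$ and lift $=$ confidence$/P(C)$, where $P(\cdot)$ is the fraction of transactions containing the itemset. As a consistency check on the rules table, confidence/lift recovers the consequent's base rate: $0.813862 / 1.436226 \approx 0.567$ of passengers are neutral or dissatisfied, while rules 2 and 3 both give $\approx 0.433$ satisfied, and the two shares sum to 1. A minimal sketch of the computation:

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence and lift of the rule antecedent -> consequent (frozensets)."""
    n = len(transactions)

    def support(items):
        return sum(1 for t in transactions if items <= t) / n

    both = support(antecedent | consequent)
    confidence = both / support(antecedent)
    lift = confidence / support(consequent)
    return both, confidence, lift


tx = [
    frozenset({"Class = Eco", "satisfaction = neutral or dissatisfied"}),
    frozenset({"Class = Eco", "satisfaction = neutral or dissatisfied"}),
    frozenset({"Class = Eco", "satisfaction = satisfied"}),
    frozenset({"Class = Business", "satisfaction = satisfied"}),
]
print(rule_metrics(tx, frozenset({"Class = Eco"}), frozenset({"satisfaction = neutral or dissatisfied"})))
```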

For Naive Bayes, we started by preprocessing the data: converting the string categories to integers and bucketizing the four numerical variables by their deciles (10%, 20%, 30%, ...) so that they can be treated like categorical variables. We then implemented Naive Bayes in PySpark from scratch:
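PySpark provides `StringIndexer` and `QuantileDiscretizer` for steps like these. The plain-Python sketch below only illustrates the idea: nine decile cut points split a numerical column into ten buckets, and each string category gets an integer code. Ordering the codes alphabetically is our choice here (`StringIndexer` orders by frequency by default).

```python
from bisect import bisect_right
from statistics import quantiles


def decile_edges(values):
    """The nine cut points at 10%, 20%, ..., 90% of the training values."""
    return quantiles(values, n=10, method="inclusive")


def bucketize(value, edges):
    """Index (0..9) of the decile bucket that `value` falls into."""
    return bisect_right(edges, value)


def index_categories(values):
    """Map string categories to integer codes, in sorted order of the labels."""
    codes = {label: i for i, label in enumerate(sorted(set(values)))}
    return [codes[v] for v in values], codes


edges = decile_edges(list(range(1, 101)))
print(bucketize(1, edges), bucketize(100, edges))
print(index_categories(["Eco", "Business", "Eco"]))
```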

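By Bayes' rule,

$$P(y \mid x_1, \dots, x_n) = \frac{P(y)\, P(x_1, \dots, x_n \mid y)}{P(x_1, \dots, x_n)}$$

By the naive (conditional independence) assumption,

$$P(x_1, \dots, x_n \mid y) = \prod_{i=1}^{n} P(x_i \mid y)$$

Since the denominator in Bayes' rule is constant with respect to $y$,

$$P(y \mid x_1, \dots, x_n) \propto P(y) \prod_{i=1}^{n} P(x_i \mid y)$$

Hence, the most likely class is given by

$$\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)$$

where $P(y)$ and $P(x_i \mid y)$ are estimated from the class and feature-value frequencies in the training data.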
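A minimal plain-Python sketch of this classifier (not the project's PySpark implementation). It works in log space, which avoids underflow from multiplying many probabilities without changing the argmax, and it adds Laplace smoothing (`alpha`), which is our assumption and not stated in the derivation.

```python
from collections import Counter, defaultdict
from math import log


class CategoricalNaiveBayes:
    """Naive Bayes over categorical features with frequency estimates.

    Laplace smoothing via `alpha` is an assumption of this sketch."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, X, y):
        self.n = len(y)
        self.class_counts = Counter(y)
        # counts[c][i][v]: number of class-c samples whose feature i equals v
        self.counts = defaultdict(lambda: defaultdict(Counter))
        self.levels = defaultdict(set)
        for row, c in zip(X, y):
            for i, v in enumerate(row):
                self.counts[c][i][v] += 1
                self.levels[i].add(v)
        return self

    def log_score(self, row, c):
        """log P(c) + sum_i log P(x_i | c)."""
        score = log(self.class_counts[c] / self.n)
        for i, v in enumerate(row):
            num = self.counts[c][i][v] + self.alpha
            den = self.class_counts[c] + self.alpha * len(self.levels[i])
            score += log(num / den)
        return score

    def predict(self, row):
        """argmax over classes of the numerator of Bayes' rule."""
        return max(self.class_counts, key=lambda c: self.log_score(row, c))


X = [["Eco", "Personal Travel"], ["Eco", "Personal Travel"],
     ["Business", "Business travel"], ["Business", "Business travel"]]
y = ["neutral or dissatisfied"] * 2 + ["satisfied"] * 2
model = CategoricalNaiveBayes().fit(X, y)
print(model.predict(["Business", "Business travel"]))
```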

This yields the following accuracy:

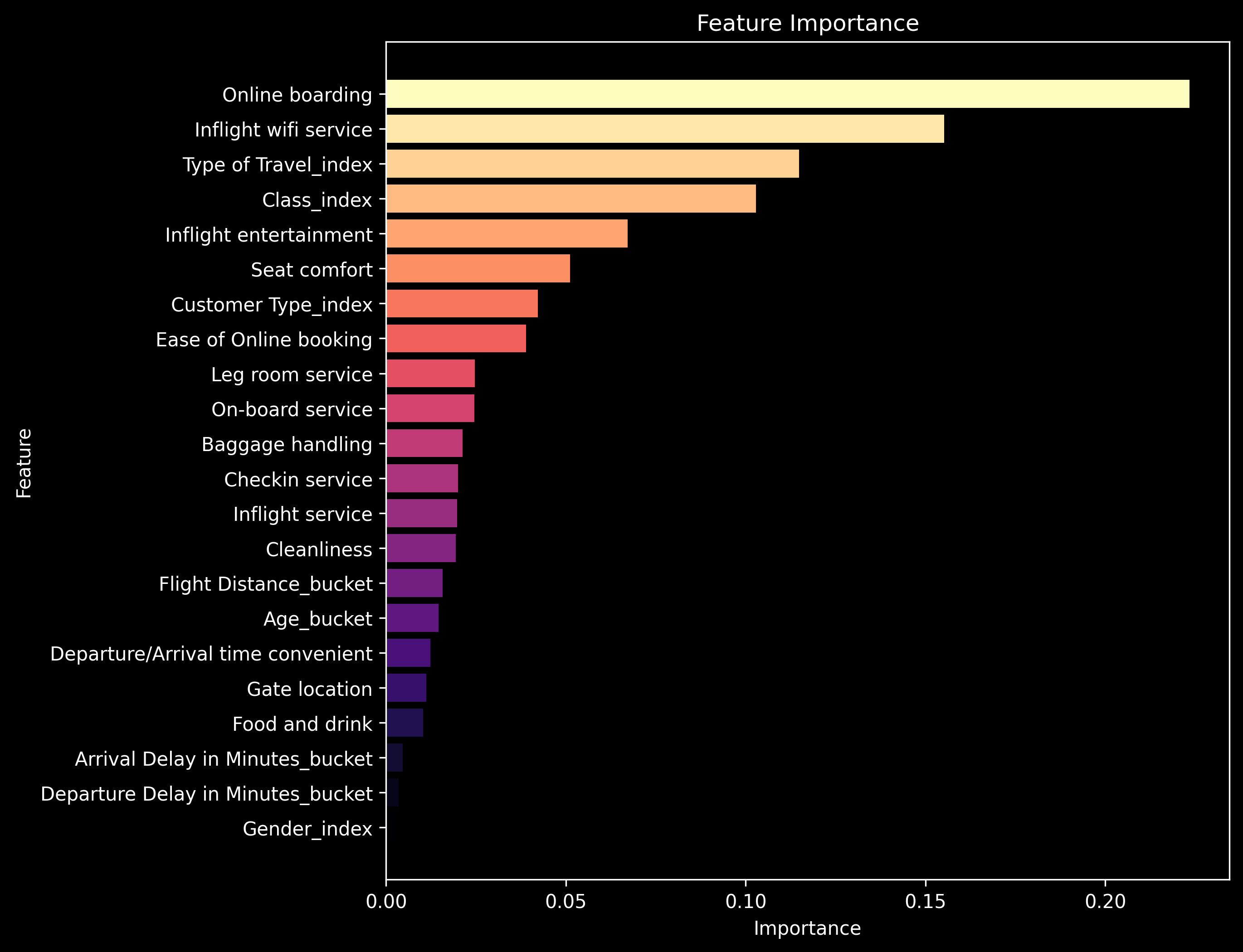

We also trained a Random Forest model, which required no special processing beyond that already done for Naive Bayes; conveniently, PySpark's RandomForest natively supports both categorical and numerical features. After applying the model, the accuracy on the validation set was:

Averaging the feature importances assigned by the trees in the forest yields:
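Spark's Random Forest model already reports importances averaged over its trees and normalized to sum to 1. The sketch below only illustrates that averaging on hypothetical per-tree values: a feature missing from a tree counts as zero importance for that tree.

```python
from collections import defaultdict


def average_importances(per_tree):
    """Average per-tree feature importances and rescale them to sum to 1."""
    totals = defaultdict(float)
    for tree in per_tree:
        for feature, importance in tree.items():
            totals[feature] += importance
    # Dividing by the number of trees treats absent features as 0 for that tree.
    mean = {f: total / len(per_tree) for f, total in totals.items()}
    norm = sum(mean.values())
    return {f: v / norm for f, v in mean.items()}


trees = [{"Online boarding": 0.6, "Class": 0.4}, {"Online boarding": 0.2, "Class": 0.8}]
print(average_importances(trees))
```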

Finally, we trained a linear SVM model with a hyperparameter search, but its results did not match those of the Random Forest.
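To illustrate what a linear SVM with a hyperparameter search involves, here is a minimal sketch that swaps in a plain-Python Pegasos solver (stochastic sub-gradient descent on the regularized hinge loss) and picks the regularization strength `lam` by validation accuracy. This is not the project's implementation; the candidate `lam` values are hypothetical, and labels must be encoded as +1/-1.

```python
import random


def train_linear_svm(X, y, lam, epochs=50, seed=0):
    """Pegasos-style training; a constant 1.0 feature is appended as a bias."""
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1 - eta * lam) * wi for wi in w]  # shrink (regularization)
            if margin < 1:  # hinge loss is active: step toward this example
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def accuracy(w, X, y):
    correct = 0
    for x, label in zip(X, y):
        score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
        correct += (1 if score >= 0 else -1) == label
    return correct / len(y)


def grid_search(X_train, y_train, X_val, y_val, lams=(1e-3, 1e-2, 1e-1)):
    """Return the regularization strength with the best validation accuracy."""
    return max(lams, key=lambda lam: accuracy(train_linear_svm(X_train, y_train, lam), X_val, y_val))


X = [[2, 2], [3, 3], [2, 3], [-2, -2], [-3, -3], [-2, -3]]
y = [1, 1, 1, -1, -1, -1]
best = grid_search(X, y, X, y)
print(best, accuracy(train_linear_svm(X, y, best), X, y))
```

In practice the search would be scored on the validation split (`airline-val.csv`) rather than on the training data as in this toy usage.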

Taken together, the analyses above lead us to conclude the following:

- Satisfaction with the airline travel experience is lacking: the classes are imbalanced toward neutral or dissatisfied passengers

- This dissatisfaction is concentrated among economy travelers

- Wi-Fi service, inflight entertainment and online boarding are key determinants of satisfaction

- Comfort and ease of online booking also matter

- Flight distance and delays appear to have a comparatively small adverse effect on satisfaction

| Essam | Halahamdy22 | MUHAMMAD SAAD | Noran Hany |
|---|---|---|---|

We have utilized Notion for progress tracking and task assignment among the team.