We are going to dockerize an Express application.

The following code sets up a basic Express server that listens for incoming GET requests on the root path (`/`) and responds with a simple "Hi There" message in HTML. The server listens on the port specified in the `process.env.PORT` environment variable, or on port `3000` if that variable is not set.

```javascript
const express = require("express");

const app = express();

// Respond to GET / with a small HTML snippet
app.get("/", (req, res) => {
  res.send("<h2> Hi There </h2>");
});

// Use the PORT environment variable if set, otherwise fall back to 3000
const port = process.env.PORT || 3000;

app.listen(port, () => console.log(`listening on port ${port}`));
```

In Docker, everything is based on images. An image is a combination of a file system and parameters.

- Docker images are built using a `Dockerfile`.
- Docker images are built in layers.
- Check the example below:

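```dockerfile
FROM node:15

WORKDIR /app

COPY package.json .

RUN npm install

COPY . ./

EXPOSE 3000

CMD ["node", "index.js"]
```

- The above `Dockerfile` creates an image from the base image `node:15` (`IMAGE_NAME:TAG`). Here we are using the `node` base image, version `15`.
- Docker images are built layer by layer.
- Here `FROM node:15` is the base layer, and every following instruction adds a new layer on top of it. Docker caches each layer; when an instruction, or a file it copies, changes, that layer and all the layers after it are rebuilt.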

- `WORKDIR /app` sets the working directory inside the container.
- `COPY package.json .` copies the local `package.json` file into the container so that the dependencies can be installed.
- `RUN` is an image build step: the state of the container after a `RUN` command is committed to the image. A Dockerfile can have many `RUN` steps that layer on top of one another to build the image.
- `RUN npm install` installs all the dependencies listed in `package.json`.
- `EXPOSE 3000` documents that the container listens on port 3000. It does not publish the port to your machine; that is done with `-p` when running the container.
- `CMD` is the command the container executes by default when you launch the built image. A Dockerfile only uses the final `CMD` defined, and the `CMD` can be overridden when starting a container with `docker run <image> <command>`.
- `CMD ["node", "index.js"]` runs the `express` app inside the container.

So, basically, the instructions above are the steps that are executed in order while building an image from the `Dockerfile`.
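Layer caching is why `package.json` is copied and installed before the rest of the source. The sketch below is a simplified model of the build cache, an assumption for illustration rather than Docker's real implementation: each step's key chains the previous step's key, the instruction text and, for `COPY`, the contents of the copied files. The names `layerKeys` and `rebuiltSteps` and the file contents are hypothetical.

```javascript
const crypto = require("crypto");

// Hash several strings into one cache key.
function hash(...parts) {
  return crypto.createHash("sha256").update(parts.join("\u0000")).digest("hex");
}

// Simplified model (assumption): a step's key combines the previous step's
// key, the instruction text and the contents of the files it copies.
function layerKeys(steps, files) {
  let parentKey = "";
  return steps.map(({ instruction, inputs = [] }) => {
    const contents = inputs.map((name) => `${name}=${files[name] ?? ""}`);
    parentKey = hash(parentKey, instruction, ...contents);
    return parentKey;
  });
}

// Steps whose key differs between two versions of the project are rebuilt.
function rebuiltSteps(steps, oldFiles, newFiles) {
  const before = layerKeys(steps, oldFiles);
  const after = layerKeys(steps, newFiles);
  return steps.filter((_, i) => before[i] !== after[i]).map((s) => s.instruction);
}

// The Dockerfile above; `inputs` lists the files each COPY depends on.
const steps = [
  { instruction: "FROM node:15" },
  { instruction: "WORKDIR /app" },
  { instruction: "COPY package.json .", inputs: ["package.json"] },
  { instruction: "RUN npm install" },
  { instruction: "COPY . ./", inputs: ["package.json", "index.js"] },
  { instruction: "EXPOSE 3000" },
  { instruction: 'CMD ["node", "index.js"]' },
];

// Hypothetical project contents before and after editing only index.js.
const v1 = { "package.json": '{"dependencies":{"express":"^4"}}', "index.js": "// v1" };
const v2 = { ...v1, "index.js": "// v2" };

console.log(rebuiltSteps(steps, v1, v2));
```

In this model, editing only `index.js` keeps the expensive `RUN npm install` layer cached, while editing `package.json` rebuilds everything from `COPY package.json .` onward.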

To build an image from this `Dockerfile`, run the `docker build` command, which builds Docker images from a `Dockerfile`.

General Docker Build Image Command

```shell
docker build [OPTIONS] PATH | URL | -
```

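Building our Docker Image:

```shell
docker build -t node-app-image .
```

`-t node-app-image` names (tags) the image, and `.` uses the current folder as the build context.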

Containers are instances of Docker images and are started with the `docker run` command. The basic purpose of Docker is to run containers.

- Running containers is managed by the `docker run` command. To run a container we use the following command.
- We will run our `express-app` from the image we created earlier, `node-app-image`.

The `docker run` command runs a command in a new container, pulling the image if needed and starting the container. You can restart a stopped container with all its previous changes intact using `docker start`. Use `docker ps -a` to view a list of all containers, including those that are stopped.

```shell
docker run -p 4000:3000 -d --name node-app node-app-image
```

- `docker run` runs the command in a container, pulling the image if needed.
- `-p 4000:3000`: Docker containers are isolated from other systems, so by default we can't reach our `express-app` from outside the container. Instead, we specify that traffic arriving at port `4000` on our local machine should be forwarded to port `3000` of the container.
- `-d` detaches the container from our terminal and runs it in the background, printing only the container ID to the terminal.
- `--name node-app node-app-image` names the container `node-app` and runs it from the image `node-app-image`.
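The order in `-p HOST:CONTAINER` is easy to mix up. A small sketch of the mapping, where `parsePortMapping` and `containerPortFor` are hypothetical helpers that handle only the plain `4000:3000` form (no host IP, port ranges or `/udp` suffix):

```javascript
// Parse a `-p HOST:CONTAINER` value such as "4000:3000".
function parsePortMapping(spec) {
  const match = /^(\d+):(\d+)$/.exec(spec);
  if (!match) throw new Error(`unsupported port mapping: ${spec}`);
  return { hostPort: Number(match[1]), containerPort: Number(match[2]) };
}

// Which container port receives a connection to localhost:hostPort?
// null means this container publishes nothing on that host port.
function containerPortFor(hostPort, specs) {
  const mapping = specs.map(parsePortMapping).find((m) => m.hostPort === hostPort);
  return mapping ? mapping.containerPort : null;
}

const published = ["4000:3000"]; // from `docker run -p 4000:3000 ...`
console.log(containerPortFor(4000, published)); // prints 3000
console.log(containerPortFor(3000, published)); // prints null
```

So the app is reached at `http://localhost:4000`, while `localhost:3000` on the host does not lead into this container.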

- If you need to start an interactive shell inside a Docker container, perhaps to explore the file system or debug running processes, use the `docker exec` command with the `-i` and `-t` flags.
- The `-i` flag keeps input open to the container, and the `-t` flag creates a pseudo-terminal that the shell can attach to. These flags can be combined like this:

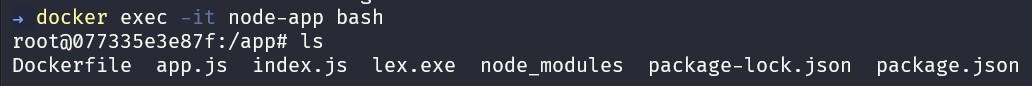

```shell
$ docker exec -it node-app bash
```

- In the above screenshot we can see all the files inside our container.

- We can see some files that aren't needed in the container, such as `Dockerfile` and `node_modules` (the dependencies are already installed inside the image by `RUN npm install`).
- These files end up inside the container because of the `COPY . ./` command in our `Dockerfile`.
- To keep these files and folders out of the container, we will use a `.dockerignore` file.

- Create a `.dockerignore` file inside your project folder.
- List in it every file and folder name that you don't want copied into the container:

```
node_modules
.vscode
Dockerfile
.gitignore
files
.obsidian
```
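A simplified model of how these entries filter the build context, assuming every entry is a literal path relative to the project root and that excluding a folder excludes everything inside it. Real `.dockerignore` files also support wildcards (`*`, `?`, `**`) and `!` exception lines, which this sketch ignores; `parseIgnore` and `isIgnored` are hypothetical names.

```javascript
// Turn the file's text into a list of root-relative patterns.
function parseIgnore(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim().replace(/^\/+|\/+$/g, "")) // strip surrounding slashes
    .filter((line) => line !== "" && !line.startsWith("#")); // drop blanks and comments
}

// A path is ignored if it equals a pattern or lives inside an ignored folder.
function isIgnored(path, patterns) {
  return patterns.some((p) => path === p || path.startsWith(p + "/"));
}

const patterns = parseIgnore(`node_modules
.vscode
Dockerfile
.gitignore
files
.obsidian`);

// Hypothetical project files, relative to the build context.
const context = [
  "index.js",
  "package.json",
  "Dockerfile",
  "node_modules/express/index.js",
  ".vscode/settings.json",
];

console.log(context.filter((f) => !isIgnored(f, patterns))); // [ 'index.js', 'package.json' ]
```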

- Rebuild the Docker image:

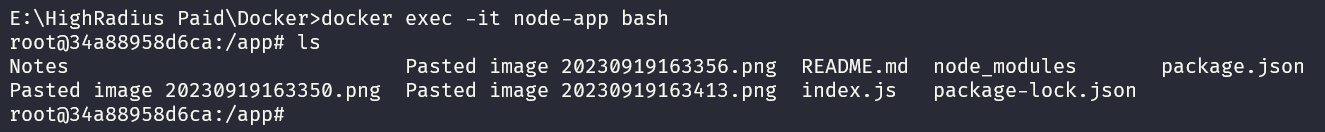

```shell
$ docker build -t node-app-image .
```

In the above screenshot, after rebuilding the image (and starting a new container from it), `.dockerignore` has kept the unnecessary files out of the container.

- When we edit a file on our local machine, the file doesn't get updated inside the Docker container.
- To update that file inside the container, we would need to rebuild the image with `docker build` and start a new container.
- To overcome this, we use volumes in Docker to synchronize files between the local machine and the container:

```shell
$ docker run -v pathToFolderOnLocal:pathToFolderOnContainer -p 4000:3000 -d --name node-app node-app-image
```

- `pathToFolderOnLocal`: the absolute path of the project folder, e.g. `E:\HighRadius Paid\Docker\Dockerfile`
- `pathToFolderOnContainer`: `/app`

Instead of typing the absolute path, you can refer to the current folder:

- Windows Command Shell: `%cd%`
- Windows PowerShell: `${pwd}`
- Mac or Linux shell: `$(pwd)`
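If you start containers from a Node script instead of a shell, `process.cwd()` plays the role of `%cd%`, `${pwd}` or `$(pwd)`. A minimal sketch, assuming the `docker` CLI is on the `PATH`; `buildRunArgs` and `runContainer` are hypothetical names. Because `child_process.spawn` receives an argument array and no shell is involved, a folder path containing spaces stays a single argument.

```javascript
const { spawn } = require("child_process");

// Arguments for the bind-mount `docker run` command in this section.
function buildRunArgs(projectDir) {
  return [
    "run",
    "-v", `${projectDir}:/app`, // bind mount: project folder -> /app
    "-p", "4000:3000",
    "-d",
    "--name", "node-app",
    "node-app-image",
  ];
}

// Run `docker` directly (no shell), using the current folder as the source.
function runContainer() {
  const child = spawn("docker", buildRunArgs(process.cwd()), { stdio: "inherit" });
  child.on("error", (err) => console.error(`could not start docker: ${err.message}`));
  child.on("exit", (code) => console.log(`docker exited with code ${code}`));
}
```

Calling `runContainer()` from the project folder behaves like the shell command with the current directory filled in.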

```shell
docker run -v %cd%:/app -p 4000:3000 -d --name node-app node-app-image
```

Suppose we delete the `node_modules` folder on our local machine and run the container. Inside the image, `node_modules` was created by the `npm install` step, but the bind mount replaces the container's `/app` with our local folder, so `node_modules` disappears inside the container as well.

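To avoid this problem we will use an anonymous volume:

```shell
$ docker run -v ${pwd}:/app -v /app/node_modules -p 4000:3000 -d --name node-app node-app-image
```

`-v /app/node_modules` creates an anonymous volume for this folder. Its path is more specific than the `/app` bind mount, so it overrides the bind mount there and preserves the container's `node_modules`.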
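When a new anonymous volume is created, Docker copies into it whatever the image already has at that path (here, the packages installed by `RUN npm install`). After that, the volume rather than the bind mount is what the container sees at `/app/node_modules`. The simplified model below (an assumption, with hypothetical names) captures the precedence rule: for a path inside the container, the mount with the longest matching target wins.

```javascript
// Return the mount whose target is the most specific prefix of containerPath.
function resolveMount(containerPath, mounts) {
  const matches = mounts.filter(
    (m) => containerPath === m.target || containerPath.startsWith(m.target + "/")
  );
  matches.sort((a, b) => b.target.length - a.target.length);
  return matches.length > 0 ? matches[0] : null;
}

// The two -v flags from the command above.
const mounts = [
  { target: "/app", type: "bind" }, // -v ${pwd}:/app
  { target: "/app/node_modules", type: "volume" }, // -v /app/node_modules
];

console.log(resolveMount("/app/index.js", mounts).type); // prints bind
console.log(resolveMount("/app/node_modules/express/index.js", mounts).type); // prints volume
```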

To make the bind mount read-only inside the container, so the container can't change the files on your machine, append `:ro` to the volume (used with `-v`):

```shell
$ docker run -v ${pwd}:/app:ro -v /app/node_modules -p 4000:3000 -d --name node-app node-app-image
```

- Displaying the volumes

```shell
$ docker volume ls
```

- Deleting a volume

```shell
$ docker volume rm <Volume Name>
```

- Deleting a container together with its anonymous volumes (`-f` forces removal of a running container, `-v` removes its volumes)

```shell
$ docker rm node-app -fv
```

- Deleting all unused volumes

```shell
$ docker volume prune
```

When using Docker extensively, managing several different containers quickly becomes cumbersome. Docker Compose is a tool that helps us overcome this problem and easily handle multiple containers at once.

Docker Compose works by applying the rules declared in a single `docker-compose.yml` configuration file.

These YAML rules, both human-readable and machine-optimized, provide an effective way to snapshot the whole project from ten-thousand feet in a few lines.

Almost every rule replaces a specific Docker command, so that in the end, we just need to run:

```shell
$ docker-compose up -d
```

A basic `docker-compose.yml` file looks like this:

```yaml
version: "3"
services:
  node-app:
    build: .
    ports:
      - "4000:3000"
    volumes:
      - ./:/app
      - /app/node_modules
    environment:
      - PORT=3000
    # env_file:
    #   - ./.env
```

Shutting down a container:

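```shell
docker-compose down -v
```

The `-v` flag also deletes the volumes used by the containers (named volumes declared in the file and anonymous volumes attached to the containers).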

Suppose you want to run your project in different environments, such as a development environment or a production environment.

For this you need to configure multiple docker-compose files.
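When several files are passed with `-f`, Compose merges them in order, and later files override earlier ones. The sketch below models only two of the documented merge rules for a single service: single values such as `command` are replaced, and `environment` entries are merged by variable name. It assumes list-style `KEY=VALUE` entries; `envToMap` and `mergeService` are hypothetical names, and the sample values come from the base file above and the development override shown later in this section.

```javascript
// Convert ["KEY=VALUE", ...] into { KEY: "VALUE", ... }.
function envToMap(list = []) {
  return Object.fromEntries(
    list.map((entry) => {
      const [key, ...rest] = entry.split("=");
      return [key, rest.join("=")]; // keep any "=" inside the value
    })
  );
}

// Merge one service: command is replaced, environment is merged by key.
function mergeService(base, override) {
  return {
    command: override.command ?? base.command,
    environment: { ...envToMap(base.environment), ...envToMap(override.environment) },
  };
}

const base = { environment: ["PORT=3000"] }; // docker-compose.yml
const dev = { environment: ["NODE_ENV=development"], command: "npm run dev" }; // docker-compose.dev.yml

console.log(mergeService(base, dev));
```

The merged service keeps `PORT=3000` from the base file and adds `NODE_ENV` and the `command` from the override.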

For our express-app we will set up two different environments, i.e. production and development. First, the `Dockerfile` installs dependencies according to the environment:

```dockerfile
FROM node:15

WORKDIR /app

COPY package.json .

ARG NODE_ENV

# Shell check: install devDependencies only for development builds.
RUN if [ "$NODE_ENV" = "development" ]; \
      then npm install; \
      else npm install --only=production; \
    fi

COPY . ./

# PORT must be defined for EXPOSE $PORT to work; 3000 matches the app's default
ENV PORT=3000
EXPOSE $PORT

CMD ["node", "index.js"]
```

`--only=production` skips the `devDependencies` listed in the `package.json` file.
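The `if` in the `Dockerfile` can be mirrored in a few lines to show which top-level packages each build installs. This is an illustration only: `npmInstallCommand`, `installedPackages` and the `package.json` contents (including the versions) are hypothetical, and transitive dependencies are ignored.

```javascript
// Same decision as the Dockerfile: only NODE_ENV=development installs
// devDependencies; any other value, or no value at all, skips them.
function npmInstallCommand(nodeEnv) {
  return nodeEnv === "development" ? "npm install" : "npm install --only=production";
}

// Top-level packages the chosen command would install.
function installedPackages(pkg, nodeEnv) {
  const deps = Object.keys(pkg.dependencies ?? {});
  const devDeps = nodeEnv === "development" ? Object.keys(pkg.devDependencies ?? {}) : [];
  return [...deps, ...devDeps];
}

// Hypothetical package.json for the express-app.
const pkg = {
  dependencies: { express: "^4.17.1" },
  devDependencies: { nodemon: "^2.0.7" },
};

console.log(npmInstallCommand("production"), installedPackages(pkg, "production"));
console.log(npmInstallCommand("development"), installedPackages(pkg, "development"));
```

Newer npm releases document `--omit=dev` for the same purpose.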

For the dev environment we need to synchronize the local and container files; for that we will use a bind mount and an anonymous volume.

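Every time the code gets updated, the Node process needs to restart automatically; for that we will use nodemon. The `npm run dev` command below assumes a `dev` script in `package.json` that starts the app with nodemon, for example `"dev": "nodemon index.js"`.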

`docker-compose.dev.yml`:

```yaml
version: '3'
services:
  node-app:
    build: # Overriding the base docker-compose.yml
      context: . # build context: the folder containing the Dockerfile
      args:
        - NODE_ENV=development # build argument read by ARG NODE_ENV
    volumes:
      # Bind mount for dev environment.
      - ./:/app
      - /app/node_modules
    environment:
      - NODE_ENV=development
    command: npm run dev
```

For the prod environment we don't need to synchronize the local and container files, so we don't mount any volumes. And since the files aren't synchronized, we also don't need to install nodemon.

`docker-compose.prod.yml`:

```yaml
version: '3'
services:
  node-app:
    build:
      context: .
      args:
        - NODE_ENV=production
    environment:
      - NODE_ENV=production
    command: node index.js
```

Start the app by passing the base file first and the environment file second:

```shell
# development
$ docker-compose -f docker-compose.yml -f docker-compose.dev.yml up
# production
$ docker-compose -f docker-compose.yml -f docker-compose.prod.yml up
```

We will now set up a new MongoDB container. The benefit of docker-compose is that we can set up multiple containers using a single YAML configuration file.

Setting up the mongo service in `docker-compose.yml`:

```yaml
version: "3"
services:
  node-app:
    build: .
    ports:
      - "4000:3000"
    environment:
      - PORT=3000
    env_file:
      - ./.env
  mongo:
    image: mongo # Using the official mongo image from Docker Hub
    # environment:
    env_file:
      - ./.env
```

`.env` file:

```
PORT=3000
MONGO_INITDB_ROOT_USERNAME=nikhil
MONGO_INITDB_ROOT_PASSWORD=pwd
```

To run the mongo container, we run the following command:

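```shell
$ docker-compose -f docker-compose.yml -f docker-compose.dev.yml up -d --build
```

This creates 2 containers, `docker-node-app-1` and `docker-mongo-1`. The `--build` flag rebuilds the images before starting the containers, and the `docker-` prefix is the Compose project name, which defaults to the name of the project folder.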

Executing the mongo shell inside the `docker-mongo-1` container:

```shell
$ docker exec -it docker-mongo-1 mongosh -u nikhil -p pwd
```
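Both services load `./.env`, so `node-app` receives the same `MONGO_INITDB_ROOT_*` variables and could build a connection URL from them. A minimal sketch, assuming that Compose's default network resolves the service name `mongo`, that MongoDB listens on its default port 27017, and that the root user authenticates against the `admin` database; `mongoUrl` is a hypothetical helper.

```javascript
// Build a MongoDB connection URL from the .env variables.
function mongoUrl(env, host = "mongo", port = 27017) {
  const { MONGO_INITDB_ROOT_USERNAME: u, MONGO_INITDB_ROOT_PASSWORD: p } = env;
  if (!u || !p) throw new Error("MONGO_INITDB_ROOT_USERNAME/PASSWORD are not set");
  // Percent-encode credentials so characters like "@" or ":" stay safe.
  const user = encodeURIComponent(u);
  const pass = encodeURIComponent(p);
  return `mongodb://${user}:${pass}@${host}:${port}/?authSource=admin`;
}

// With the values from the .env file above:
console.log(mongoUrl({ MONGO_INITDB_ROOT_USERNAME: "nikhil", MONGO_INITDB_ROOT_PASSWORD: "pwd" }));
// prints mongodb://nikhil:pwd@mongo:27017/?authSource=admin
```

Inside the running `node-app` container, `mongoUrl(process.env)` would read the same variables.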