This is the official repo for the CVPR 2021 L2ID paper "Distill on the Go: Online knowledge distillation in self-supervised learning" by Prashant Bhat, Elahe Arani, and Bahram Zonooz.

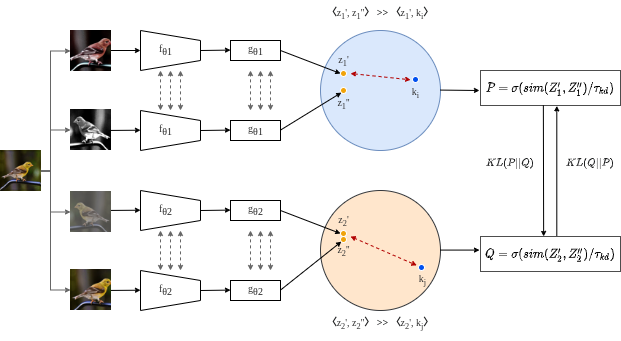

Self-supervised learning solves pretext prediction tasks that do not require annotations to learn feature representations. For vision tasks, pretext tasks such as predicting rotation or solving jigsaw puzzles are created solely from the input data. Yet, predicting this known information helps in learning representations useful for downstream tasks. However, recent works have shown that wider and deeper models benefit more from self-supervised learning than smaller models. To address the issue of self-supervised pre-training of smaller models, we propose Distill-on-the-Go (DoGo), a self-supervised learning paradigm using single-stage online knowledge distillation to improve the representation quality of the smaller models. We employ a deep mutual learning strategy in which two models collaboratively learn from each other to improve one another. Specifically, each model is trained using self-supervised learning along with distillation that aligns each model's softmax probabilities of similarity scores with those of the peer model. We conduct extensive experiments on multiple benchmark datasets, learning objectives, and architectures to demonstrate the potential of our proposed method. Our results show significant performance gains in the presence of noisy and limited labels, and in generalization to out-of-distribution data.
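The distillation term described above can be illustrated with a small, framework-free sketch. It is not the code in this repo: the function names, the temperature value, the use of cosine similarity, and the choice of KL divergence (with the peer's distribution treated as a fixed target) are all assumptions made for illustration. In practice the same computation would be done on batched PyTorch tensors and added to each model's self-supervised loss.

```python
import math


def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of similarity scores."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cosine_similarity(u, v):
    """Cosine similarity between two embedding vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similarity_scores(anchor, candidates):
    """Similarity of one anchor embedding to every candidate embedding."""
    return [cosine_similarity(anchor, c) for c in candidates]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(own_scores, peer_scores, temperature=0.5):
    """Hypothetical distillation term: KL(peer || own) over softmaxed scores.

    The peer distribution acts as the target, so minimising this value pulls
    the model's similarity distribution towards that of its peer.
    """
    own = softmax(own_scores, temperature)
    peer = softmax(peer_scores, temperature)
    return kl_divergence(peer, own)


# Toy example: two models embed the same anchor and the same three candidates.
anchor_a, cands_a = [1.0, 0.0], [[0.9, 0.1], [0.0, 1.0], [-1.0, 0.2]]
anchor_b, cands_b = [0.5, 0.5], [[0.6, 0.4], [0.1, 0.9], [-0.8, 0.1]]

scores_a = similarity_scores(anchor_a, cands_a)
scores_b = similarity_scores(anchor_b, cands_b)

# Each model gets its own term; the peer's scores serve as the target.
loss_a = distillation_loss(scores_a, scores_b)
loss_b = distillation_loss(scores_b, scores_a)
print(loss_a, loss_b)
```

Under these assumptions, each model's total objective would be its self-supervised loss plus a weighted copy of its own distillation term, with both models updated in the same training stage.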

For details, please see the Paper and Presentation.

- Python 3.7

- PyTorch 1.4.0

- Torchvision 0.5.0

This branch runs the baseline version of SSL.

    python train.py --config-file config/simclr.yaml

To run our method, switch to the "dogo" branch, which contains more information.

    @inproceedings{bhat2021distill,
      title={Distill on the Go: Online knowledge distillation in self-supervised learning},
      author={Bhat, Prashant and Arani, Elahe and Zonooz, Bahram},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={2678-2687},
      year={2021}
    }

This project is licensed under the terms of the MIT license.