Releases: MegEngine/MegCC

v0.1.6

MegCC

Highlight

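- Support Int8 quantized convolution implemented with I8MM, a new feature of the ARM v8.6 platform, with performance about 1.7x that of the DOT version.

- Support inference of the new clip model, with performance on par with megdnn.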
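The I8MM highlight rests on a different instruction shape than DOT. To our understanding of the Arm architecture:

- SMMLA multiplies a 2x8 block of signed 8-bit values by an 8x2 block. It accumulates the 2x2 result into four 32-bit lanes, which is 32 multiply-accumulates per instruction.
- SDOT computes four independent 4-element dot products, which is 16 multiply-accumulates per instruction.

The scalar sketch below emulates one step of each to show the arithmetic. It is not MegCC's kernel. The helper names `smmla_emulate` and `sdot_emulate` are hypothetical.

```c
#include <stdint.h>

/* Hypothetical scalar emulation of one SMMLA step.
 * a:   2x8 int8 block, row-major (the two rows of the left operand).
 * b:   the two 8-element *columns* of the 8x2 right operand, back to back.
 * acc: 2x2 int32 accumulator, row-major; acc[i][j] += dot(row i, column j).
 * Performs 2 * 2 * 8 = 32 multiply-accumulates. */
static void smmla_emulate(int32_t acc[4], const int8_t a[16], const int8_t b[16]) {
    for (int i = 0; i < 2; ++i) {
        for (int j = 0; j < 2; ++j) {
            int32_t sum = 0;
            for (int k = 0; k < 8; ++k) {
                sum += (int32_t)a[i * 8 + k] * (int32_t)b[j * 8 + k];
            }
            acc[i * 2 + j] += sum;
        }
    }
}

/* Hypothetical scalar emulation of one SDOT step for comparison:
 * four lanes, each accumulating a 4-element dot product
 * (4 * 4 = 16 multiply-accumulates). */
static void sdot_emulate(int32_t acc[4], const int8_t a[16], const int8_t b[16]) {
    for (int lane = 0; lane < 4; ++lane) {
        int32_t sum = 0;
        for (int k = 0; k < 4; ++k) {
            sum += (int32_t)a[lane * 4 + k] * (int32_t)b[lane * 4 + k];
        }
        acc[lane] += sum;
    }
}
```

On hardware these steps correspond to the ACLE intrinsics `vmmlaq_s32` and `vdotq_s32`. The doubled work per instruction is one plausible reason an I8MM kernel can outrun a DOT kernel, though the measured 1.7x also depends on data layout and memory traffic.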

Bug Fixes

compiler-kernel

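- Add NEAREST mode to the resize operator.

- Correct the applicability conditions of int8 Winograd F23 so that hybrid conv no longer selects this kernel by mistake.

- Correct the applicability conditions of all ConvBias kernels, restricting them to biases broadcast along the channel dimension.

- Add support for the elemwise sqrt, sin and cos operators.

- Add a float32 computation path for int8 resize, fixing the discrepancy between the results of the Arm and naive implementations.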
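The int8 resize fix computes the interpolation in float32 before converting back to int8. The sketch below shows that idea. It blends int8 samples in float32, rounds once at the end, and saturates to [-128, 127]. Rounding a single time is one way for an optimized backend and a naive reference to agree.

The assumptions here are:

- The rounding rule (half away from zero) is chosen for illustration. MegCC's exact rule is not stated in these notes.
- The helper names are hypothetical.

```c
#include <stdint.h>

/* Round half away from zero and saturate to int8 (hypothetical helper). */
static int8_t saturate_round_i8(float v) {
    if (v >= 127.0f) return 127;
    if (v <= -128.0f) return -128;
    int r = (int)v;                 /* truncates toward zero */
    float frac = v - (float)r;      /* exact for |v| < 128 */
    if (frac >= 0.5f) r += 1;
    else if (frac <= -0.5f) r -= 1;
    return (int8_t)r;
}

/* Linear interpolation between two int8 samples, computed in float32.
 * alpha is the fractional distance from s0 toward s1, in [0, 1]. */
static int8_t lerp_i8_f32(int8_t s0, int8_t s1, float alpha) {
    return saturate_round_i8((1.0f - alpha) * (float)s0 + alpha * (float)s1);
}

/* Bilinear interpolation of a 2x2 int8 neighbourhood: blend horizontally,
 * then vertically, all in float32, rounding only once at the end. */
static int8_t bilinear_i8_f32(int8_t tl, int8_t tr, int8_t bl, int8_t br,
                              float fx, float fy) {
    float top = (1.0f - fx) * (float)tl + fx * (float)tr;
    float bottom = (1.0f - fx) * (float)bl + fx * (float)br;
    return saturate_round_i8((1.0f - fy) * top + fy * bottom);
}
```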

compiler-common

- Fix a bug where MegCC could not handle a ConvBias operator without bias.
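The ConvBias fixes in this release concern the bias operand in two ways:

- Kernels now accept only a bias broadcast along the channel axis. For an NCHW output this is logical shape 1 x C x 1 x 1.
- A ConvBias may have no bias at all.

The naive bias-add below illustrates both cases, with a NULL pointer standing in for a missing bias. It is an illustration rather than MegCC's kernel, and `add_channel_bias_nchw` is a hypothetical name.

```c
#include <stddef.h>

/* Add a channel-broadcast bias (logical shape 1 x C x 1 x 1) in place to an
 * NCHW convolution output of shape N x C x (H*W). bias may be NULL, which
 * models a ConvBias without bias (hypothetical helper). */
static void add_channel_bias_nchw(float *out, const float *bias,
                                  size_t n, size_t c, size_t hw) {
    if (bias == NULL) {
        return;  /* nothing to add */
    }
    for (size_t in = 0; in < n; ++in) {
        for (size_t ic = 0; ic < c; ++ic) {
            float b = bias[ic];                 /* one value per output channel */
            float *plane = out + (in * c + ic) * hw;
            for (size_t i = 0; i < hw; ++i) {
                plane[i] += b;
            }
        }
    }
}
```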

runtime

- Fix a crash caused by the runtime possibly freeing a dynamic tensor whose pointer is NULL.

New Features

basic components

- Upgrade megbrain to the version containing the padding channel pass bug fix, resolving compilation errors for some models in megcc.

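compiler-kernel

- Add the Arm64 BatchedMatmul operator.

- Add multi-dimensional indexing support to the IndexingMultiAxisVec operator.

- Add support for the nchw int8 conv1x1x kernel.

- Add the aarch32 int8 dot nchw conv5x5 kernel.

- Add a batched matmul operator for the Float16 data type.

- Add a naive mod op.

- Support Int8 quantized convolution implemented with I8MM, a new feature of the ARM v8.6 platform, with performance about 1.7x that of the DOT version.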
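As a reference point for the new BatchedMatmul operator, here is a naive version: for every batch index b it computes C[b] = A[b] x B[b]. The layout is an assumption made for the sketch: all matrices are row-major and stored contiguously batch after batch. The function name is hypothetical. An optimized Arm64 kernel would tile and vectorize the inner loops but must produce the same result.

```c
#include <stddef.h>

/* Naive batched matrix multiply (hypothetical helper):
 * for each batch bi, C[bi] (m x n) = A[bi] (m x k) * B[bi] (k x n),
 * all row-major and stored contiguously batch after batch. */
static void batched_matmul_f32(const float *a, const float *b, float *c,
                               size_t batch, size_t m, size_t k, size_t n) {
    for (size_t bi = 0; bi < batch; ++bi) {
        const float *ab = a + bi * m * k;
        const float *bb = b + bi * k * n;
        float *cb = c + bi * m * n;
        for (size_t i = 0; i < m; ++i) {
            for (size_t j = 0; j < n; ++j) {
                float acc = 0.0f;
                for (size_t p = 0; p < k; ++p) {
                    acc += ab[i * k + p] * bb[p * n + j];
                }
                cb[i * n + j] = acc;
            }
        }
    }
}
```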

MegCC

Highlight

- Support Int8 quantized convolution implemented with I8MM, a new feature of the ARM v8.6 platform, with performance approximately 1.7 times that of the DOT version.

- Support inference of the new clip model, with performance equivalent to megdnn.

Bug Fixes

compiler-kernel

- Add NEAREST Mode for resize operator.

- Correct the applicability conditions of int8 Winograd F23 so that hybrid conv no longer selects this kernel by mistake.

- Fix the applicability conditions of all ConvBias kernels, restricting them to biases broadcast along the channel dimension.

- Support the sqrt, sin and cos elemwise kernels.

- Add a float32 computation path for int8 resize to fix the discrepancy between the results of the Arm and naive implementations.
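NEAREST mode maps each output coordinate back to a single source pixel. The sketch below uses the convention `src = min(floor(dst * in / out), in - 1)`, evaluated in exact integer arithmetic. That convention is an assumption: libraries differ, for example in whether they apply a half-pixel offset. The function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Map an output index to a source index for nearest-neighbour resize,
 * using src = min(floor(dst * in / out), in - 1) (assumed convention). */
static size_t nearest_src_index(size_t dst, size_t in, size_t out) {
    size_t src = dst * in / out;
    return src < in ? src : in - 1;
}

/* Nearest-neighbour resize of a single-channel, row-major uint8 image
 * from ih x iw to oh x ow (hypothetical helper). */
static void resize_nearest_u8(const uint8_t *src, size_t ih, size_t iw,
                              uint8_t *dst, size_t oh, size_t ow) {
    for (size_t y = 0; y < oh; ++y) {
        size_t sy = nearest_src_index(y, ih, oh);
        for (size_t x = 0; x < ow; ++x) {
            size_t sx = nearest_src_index(x, iw, ow);
            dst[y * ow + x] = src[sy * iw + sx];
        }
    }
}
```

Upscaling a 2x2 image to 4x4 with this rule duplicates each source pixel into a 2x2 block.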

compiler-common

- Fix a bug where MegCC did not support ConvBias operators without bias.

runtime

- Fix a bug where the runtime might try to free a dynamic tensor with a NULL pointer.

New Features

basic components

- Update megbrain to the version with the padding channel pass bug fix, resolving compilation failures for some models in megcc.

compiler-kernel

- Add the BatchedMatmul operator for the Arm64 platform.

- Add multi-dimensional indexing support to the IndexingMultiAxisVec operator.

- Add support for the nchw int8 conv1x1x kernel.

- Add the aarch32 int8 dot nchw conv5x5 kernel.

- Add a batched matmul operator for the Float16 data type.

- Add a naive float32 mod elemwise op.

- Support Int8 quantized convolution implemented with I8MM, a new feature of the ARM v8.6 platform, with approximately 1.7x the performance of the DOT version.
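Multi-axis vector indexing gathers elements using one index vector per indexed axis, in the spirit of NumPy advanced indexing. The sketch below covers one multi-dimensional case:

- Axes 0 and 1 of a 3-D tensor are indexed by equal-length vectors.
- The last axis is kept whole, so `out[i, :] = src[idx0[i], idx1[i], :]`.

Which index patterns MegCC's operator actually accepts is not described in these notes. The function name is hypothetical.

```c
#include <stddef.h>

/* out[i, :] = src[idx0[i], idx1[i], :] for a row-major src of shape
 * (A, b_dim, c_dim). Axes 0 and 1 are indexed by vectors of length `count`;
 * axis 2 is copied whole, so out has shape (count, c_dim).
 * Indices are assumed to be non-negative and in range (hypothetical helper). */
static void indexing_multi_axis_vec(const float *src, size_t b_dim, size_t c_dim,
                                    const size_t *idx0, const size_t *idx1,
                                    size_t count, float *out) {
    for (size_t i = 0; i < count; ++i) {
        const float *row = src + (idx0[i] * b_dim + idx1[i]) * c_dim;
        for (size_t k = 0; k < c_dim; ++k) {
            out[i * c_dim + k] = row[k];
        }
    }
}
```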

v0.1.5

MegCC

Highlight

Added AArch32 Int8 NCHW44 support, improving the speed of quantized models in AArch32 mode.
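NCHW44 packs channels in groups of four. A tensor of logical shape (N, C, H, W) is stored as (N, C/4, H, W, 4), so the four channel values of one pixel are adjacent and fit one SIMD load. The sketch below converts NCHW int8 data into that layout. It assumes C is a multiple of 4 (real pipelines pad otherwise), and the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Relayout int8 data from NCHW to NCHW44, i.e. (N, C/4, H, W, 4).
 * Requires c % 4 == 0 (hypothetical helper). */
static void nchw_to_nchw44_i8(const int8_t *src, int8_t *dst,
                              size_t n, size_t c, size_t h, size_t w) {
    assert(c % 4 == 0);
    size_t hw = h * w;
    for (size_t in = 0; in < n; ++in) {
        for (size_t ic = 0; ic < c; ++ic) {
            size_t block = ic / 4, lane = ic % 4;  /* channel group and slot */
            for (size_t p = 0; p < hw; ++p) {
                size_t s = (in * c + ic) * hw + p;
                size_t d = ((in * (c / 4) + block) * hw + p) * 4 + lane;
                dst[d] = src[s];
            }
        }
    }
}
```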

Bug Fixes

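basic components

- Fix a crash when DimShuffle is neither preceded nor followed by a reshape, and a computation error when SubTensor slices in reverse order.

- Fix a bug that prevented users from compiling the runtime code on the riscv platform.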
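Reverse-order slicing means a negative step. The sketch below computes how many elements `start:stop:step` selects, then copies them. It uses the Python slice-length rule, which is an assumption here: the notes only say the reverse-slice computation in SubTensor was fixed.

It also assumes the indices are already normalized:

- 0 <= start < len.
- With a negative step, stop may be -1, meaning "past the front".

The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Number of elements selected by start:stop:step on normalized indices
 * (Python-style rule; hypothetical helper). */
static ptrdiff_t slice_length(ptrdiff_t start, ptrdiff_t stop, ptrdiff_t step) {
    assert(step != 0);
    if (step > 0) {
        return start < stop ? (stop - start - 1) / step + 1 : 0;
    }
    return stop < start ? (start - stop - 1) / (-step) + 1 : 0;
}

/* Copy src[start:stop:step] into dst and return the element count. */
static ptrdiff_t slice_1d(const float *src, float *dst,
                          ptrdiff_t start, ptrdiff_t stop, ptrdiff_t step) {
    ptrdiff_t len = slice_length(start, stop, step);
    for (ptrdiff_t i = 0; i < len; ++i) {
        dst[i] = src[start + i * step];
    }
    return len;
}
```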

compiler-kernel

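- Add missing function declarations for some cv operators.

- Fix incorrect binaries produced by compilation when the header file math.h was missing.

- Fix arm64 qint operators whose computation precision did not match x86.

- Support elemwise erf.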

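compiler-common

- Fix the inconsistency between the required configuration items of the model compilation json and the required command-line option (model_path).

- Fix a benchmark error caused by incorrect use of ENABLE_MGE_FP16_NCHW88.

- Fix a bug where mgb-to-tinynn could not handle hako-encrypted models with multiple groups of input shapes.

- Validate the output shapes parameter of the user-supplied loader; if it is wrong, the correct parameter values are printed and the program crashes.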

runtime

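- Add error messages to tinynn_fopen, making errors easier to locate when the runtime is built with the build_with_dump_tensor option.

- Remove the restriction that, when LITE_get_io_tensor was called several times in a row, each call had to release the ComboIOTensor obtained by the previous call. After this fix, you can call LITE_get_io_tensor repeatedly to obtain different tensors without calling LITE_destroy_tensor.

- Fix a possible crash caused by the LITE_destroy_network interface freeing the user-supplied memory that holds the model file. You now need to free the buffer holding the model file yourself; otherwise memory may leak.

- Because input tensor memory is not planned, copying data into an input tensor is prohibited: the memory of an input tensor can only be set with LITE_reset_tensor_memory.

- Fix a segmentation fault in the runtime example caused by a NULL input tensor when no input is specified.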

New Features

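basic components

- Allow users to specify a file as input to the bisection script, avoiding bisection failures caused by randomly generated data.

- Add a runtime docker image to fix the problem that the runtime could not be compiled with NON_STAND_OS.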

compiler-kernel

- Add an int8 channelwise 5x5 convolution kernel.

- Add gi channelwise k5s2 f32/f16 kernels and an arm64 m8n8 f16 kernel.

- Add a float cv resize kernel.

- Add an int8 nchw44 direct convolution operator.

- Add an int8 nchw44 hybrid convolution operator.

- Add the Int8 NCHW44 Winograd F23 operator.

- Support the adaptive pooling operator.

- Support the Int8 NCHW44 pooling operator.

- Extend the dtypes supported by the IndexingMultiAxis operator.

- Add fp16 Argsort/Elemwise/IndexingMultiAxisVec/Pooling/PowC/Topk/TypeCvt/WarpPerspective kernels.

- Add the gray2rgb operator.

- Add an aarch32 conv1x1 kernel.
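Winograd F(2,3), the "F23" in the kernel names, computes two outputs of a 3-tap filter from four inputs using four multiplications instead of six. The 2-D F(2x2, 3x3) form used for 3x3 convolutions nests this transform along both axes.

The float sketch below shows the 1-D transform and a direct correlation to compare against. An int8 kernel must also deal with the 1/2 factors in the filter transform (for example by scaling), which is not shown. The function names are hypothetical.

```c
/* 1-D Winograd F(2,3): y[i] = d[i]*g[0] + d[i+1]*g[1] + d[i+2]*g[2]
 * for i = 0, 1, using 4 multiplications (hypothetical helper). */
static void winograd_f23_1d(const float d[4], const float g[3], float y[2]) {
    /* Filter transform; can be precomputed once per filter. */
    float g0 = g[0];
    float g1 = 0.5f * (g[0] + g[1] + g[2]);
    float g2 = 0.5f * (g[0] - g[1] + g[2]);
    float g3 = g[2];
    /* Input transform combined with the element-wise products. */
    float m1 = (d[0] - d[2]) * g0;
    float m2 = (d[1] + d[2]) * g1;
    float m3 = (d[2] - d[1]) * g2;
    float m4 = (d[1] - d[3]) * g3;
    /* Output transform. */
    y[0] = m1 + m2 + m3;
    y[1] = m2 - m3 - m4;
}

/* Direct correlation with 6 multiplications, for comparison. */
static void direct_f23_1d(const float d[4], const float g[3], float y[2]) {
    for (int i = 0; i < 2; ++i) {
        y[i] = d[i] * g[0] + d[i + 1] * g[1] + d[i + 2] * g[2];
    }
}
```

Because the transforms rearrange the arithmetic, applicability conditions matter: each kernel has to check that the layout, dtype and stride of a given convolution suit it before being selected.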

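compiler-common

- Because megcli only supports passing a json configuration file to mgb-to-tinynn, all necessary mgb-to-tinynn command-line options have been added to the json file, and mgb-to-tinynn can now generate a json template.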

runtime

- The runtime build now outputs both a static library and a dynamic library.

MegCC

Highlight

Added AArch32 Int8 NCHW44 support, improving the speed of quantized models in AArch32 mode.

Bug Fixes

basic components

- Fix a crash when DimShuffle is neither preceded nor followed by a reshape, and a computation error when SubTensor slices in reverse order.

- Fix a bug where users could not compile the runtime code on the riscv platform.

compiler-kernel

- Add missing declarations for some cv operators.

- Fix errors in the compiled binary caused by the missing header file (math.h).

- Fix the arm64 qint computation so that its results match x86 as closely as possible.

- Support the elemwise erf mode.

compiler-common

- Fix the inconsistency between the required configuration items of the model compilation json and the required command-line option (model_path).

- Fix a benchmark option error when ENABLE_MGE_FP16_NCHW88 is defined as 0.

- Fix the bug that mgb-to-tinynn cannot handle hako encrypted models with multi-group input shape.

- Verify the correctness of the output shapes parameter of the user-provided loader; if it is wrong, print the correct parameter values and crash.

runtime

- Add an error message to tinynn_fopen, making errors easier to locate when the runtime is built with the build_with_dump_tensor compilation option.

- Fix undefined behavior when LITE_get_io_tensor is called multiple times to get different tensors. After the fix, you can call LITE_get_io_tensor multiple times to get different tensors without calling LITE_destroy_tensor.

- Fix a possible crash caused by the LITE_destroy_network interface freeing the user-supplied memory that stores the model file. You therefore need to free the buffer storing the model file yourself; otherwise memory will leak.

- Since the memory of input tensors is not planned, copying data into an input tensor is prohibited: only LITE_reset_tensor_memory can be used to set the memory of an input tensor.

- Fix a bug where the input tensor pointer was NULL when no input data was given.

New Features

basic components

- Support user-specified files as input to the bisection script that compares results.

- Add a runtime docker image to fix runtime compilation failures in some environments.

compiler-kernel

- Add an int8 channel-wise conv 5x5 kernel.

- Support gi channelwise k5s2 f32/f16 kernels and an arm64 m8n8 f16 kernel.

- Support float cv resize.

- Add an int8 nchw44 hybrid conv kernel.

- Add an int8 nchw44 direct conv kernel.

- Add the Int8 NCHW44 Winograd F23 operator.

- Support the adaptive pooling operator.

- Support the Int8 NCHW44 pooling operator.

- Extend the dtypes supported by the IndexingMultiAxis operator.

- Add fp16 Argsort/Elemwise/IndexingMultiAxisVec/Pooling/PowC/Topk/TypeCvt/WarpPerspective kernels.

- Add the gray2rgb operator.

- Add an aarch32 conv1x1 kernel.
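Adaptive pooling fixes the output size and derives the pooling window from it. The sketch below assumes a MegDNN-style deduction, which lets an ordinary fixed-window pooling kernel do the work:

- stride = IH / OH, using integer division.
- window = IH - (OH - 1) * stride.

This is our reading rather than something stated in these notes. Other frameworks, such as PyTorch, compute per-output window bounds instead. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Derive a fixed stride and window so that ordinary pooling maps `in`
 * inputs to `out` outputs (assumed MegDNN-style rule; hypothetical helper). */
static void adaptive_pool_params(size_t in, size_t out,
                                 size_t *stride, size_t *window) {
    assert(out > 0 && out <= in);
    *stride = in / out;
    *window = in - (out - 1) * (*stride);
}

/* 1-D adaptive average pooling built on the derived parameters.
 * The last window ends exactly at in - 1, so all reads are in range. */
static void adaptive_avg_pool_1d(const float *src, size_t in,
                                 float *dst, size_t out) {
    size_t stride, window;
    adaptive_pool_params(in, out, &stride, &window);
    for (size_t o = 0; o < out; ++o) {
        float sum = 0.0f;
        for (size_t k = 0; k < window; ++k) {
            sum += src[o * stride + k];
        }
        dst[o] = sum / (float)window;
    }
}
```

For 7 inputs and 3 outputs this gives stride 2 and window 3, so the windows are [0, 3), [2, 5) and [4, 7).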

compiler-common

- To accommodate megcli, which only supports passing json configuration files to mgb-to-tinynn, all necessary mgb-to-tinynn command-line options have been added to the json; mgb-to-tinynn can also generate json templates.

runtime

- Support runtime compilation to export both static and dynamic libraries.

v0.1.4

MegCC

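Highlight

breaking change

runtime

- Fix runtime-exported symbols that could easily conflict with symbols in the user's pipeline (for example, the DEBUG enumerator and the DEBUG macro that the compiler may define). This change breaks compatibility of the log API (LITE_set_log_level): if you use LITE_set_log_level, the compiler will report an error like "undefine WARN, do you means LITE_WARN"; update your code following the diff in this PR.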
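The rename exists because of a plain preprocessor clash. If a header declares an enumerator named `DEBUG` and the user's build defines a `DEBUG` macro (as with `-DDEBUG`), the macro rewrites the enumerator and the header stops compiling. Prefixed names avoid this.

The enum below is hypothetical. It only mirrors the `LITE_` prefix suggested by the error message. Check the MegCC runtime header for the real declaration.

```c
/* Simulate a user build that defines DEBUG, as many debug configurations do. */
#define DEBUG 1

/* An unprefixed declaration such as
 *     enum { DEBUG, INFO, WARN, ERROR };
 * would expand to "enum { 1, INFO, ... }" and fail to compile.
 * Prefixed enumerators are unaffected. Hypothetical type and names. */
typedef enum {
    DEMO_LITE_DEBUG = 0,
    DEMO_LITE_INFO,
    DEMO_LITE_WARN,
    DEMO_LITE_ERROR
} DemoLogLevel;

/* String literals are not macro-expanded, so "DEBUG" stays as written. */
static const char *demo_level_name(DemoLogLevel level) {
    switch (level) {
        case DEMO_LITE_DEBUG: return "DEBUG";
        case DEMO_LITE_INFO:  return "INFO";
        case DEMO_LITE_WARN:  return "WARN";
        case DEMO_LITE_ERROR: return "ERROR";
    }
    return "UNKNOWN";
}
```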

Bug Fixes

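basic components

- Support building the compiler tools on MacOS and building the runtime on a MacOS host.

- Fix incorrect bisection between MegCC and MegBrain when the output is Uint8, and improve the bisection script to make it easier to use.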

compiler-kernel

- Fix megcc not creating the kernel dump directory automatically, and the missing output information in the model info file.

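compiler-common

- Fix the importer failing to handle ops with duplicate names.

- Fix the case where the output of the exporter return-op could not be a weight.

- Fix the compilation failure when compiling a model with mgb-to-tinynn with float16 nchw88 optimization enabled.

- Fix MegCC build errors on the Apple M1 platform; MegCC can now be built on Apple M1.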

runtime

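- Bind the CombineModel module to the vm module to fix a crash with multiple models when LITE_destroy_network and LITE_make_network are called in a different order.

- Fix incorrect model results when an output tensor is a weight.

- Fix an illegal memory access caused by incomplete parameter reset when the loader is used multiple times.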

Others

- Fix runtime compilation failures with the release package on arm-linux caused by missing dependencies.

New Features

basic components

- Fix compilation problems caused by llvm not recognizing the fp16 data type.

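compiler-kernel

- Add an arm int8-dot hybrid convolution operator with stride 1.

- Add elemwise for the VEC_BCAST111C f32 type.

- Add support for float16 kernels (mk8 matmul, relayout and unary elemwise have been added).

- Add fp16 f23, f43 and f63 winograd convolution operators.

- Add an fp16 im2col convolution kernel.

- Add support for the Gaussian blur operator.

- Add float16 reduce and pooling operators, and operators that convert between float16 and float32.

- Change the model decryption logic and remove the helper tool hako-to-mgb: users no longer need to specify the hako version, and all decryption algorithms supported by megcc are tried automatically (the hako option is kept and marked as deprecated).

- Add binary and ternary elemwise operators for the float16 data type.

- Add a Conv1x1 operator for the float16 data type.

- Add benchmark support for fp16 models; add naive implementations of conv and matmul.

- Add the VEC_BCAST110 elemwise mode on the arm platform.

- Add the gaussian blur cv operator.

- Add fp16 gi gemv and gevm operators.

- Add fp16 nchw88 channelwise operators.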
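Several items above add float16 support, including float16/float32 conversion. As background, the sketch below decodes an IEEE 754 binary16 bit pattern into a float32 in portable C, without relying on compiler-specific `_Float16` or `__fp16` types. The format has 1 sign bit, 5 exponent bits (bias 15) and 10 mantissa bits. This is not MegCC's conversion kernel, and `half_bits_to_float` is a hypothetical name.

```c
#include <math.h>
#include <stdint.h>
#include <string.h>

/* Decode an IEEE 754 binary16 bit pattern into a float32 (hypothetical
 * helper). Every binary16 value is exactly representable in float32. */
static float half_bits_to_float(uint16_t h) {
    uint32_t sign = (uint32_t)(h >> 15) & 0x1u;
    uint32_t expo = (uint32_t)(h >> 10) & 0x1Fu;
    uint32_t mant = (uint32_t)h & 0x3FFu;
    float value;
    if (expo == 0) {
        /* Zero or subnormal: mant * 2^-24, exact in float32. */
        value = (float)mant * 0x1p-24f;
    } else if (expo == 31) {
        /* Infinity (mant == 0) or NaN. */
        value = mant ? NAN : INFINITY;
    } else {
        /* Normal: rebias the exponent from 15 to 127 and widen the
         * mantissa from 10 to 23 bits. */
        uint32_t bits = ((expo + 112u) << 23) | (mant << 13);
        memcpy(&value, &bits, sizeof value);
    }
    return sign ? -value : value;
}
```

For example, 0x3C00 decodes to 1.0 and 0x7BFF to 65504, the largest finite float16.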

compiler-common

- Support unit tests for the riscv kernel backend.

Improvements

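Documentation

- Replace the link to the mgeconvert onnx conversion doc with a link to the specific usage section.

- Update the bisection script and its documentation to make them more accurate and complete.

- Update the usage documentation to make it more reasonable and complete.

Others

- Format the code to keep the cpp and cmake code style in MegCC consistent and standardized.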

MegCC

Highlight

breaking change

runtime

- Fix the problem that runtime-exported symbols could easily conflict with user pipeline symbols (for example, the DEBUG enumeration would conflict with the DEBUG macro that comes with the compiler). This change breaks compatibility of the log API: if you use LITE_set_log_level, the compiler will report an error similar to "undefine WARN, do you means LITE_WARN" when compiling.

Bug Fixes

basic components

- Support building the compiler on MacOS and building the runtime on a MacOS host.

- Fix incorrect bisection between MegCC and MegBrain when the output is Uint8, and improve the bisection script to make it easier to use.

compiler-kernel

- Fix the exporter and mgb-to-tinynn not creating the dump directory automatically, and the empty output information in the model info file.

compiler-common

- Fix the case where the exporter output op contains a weight.

- Fix a redefinition error in the importer when the model contains two ops with the same name.

- Support for compiling MegCC on Apple M1 platform.

- Fix the compilation failure when using mgb-to-tinynn compile model with float16 nchw88 optimization enabled.

runtime

- Bind vm with CombineModel to solve the crash that occurs when the order of LITE_destroy_network and LITE_make_network is different under multiple models.

- Fix invalid output tensor values during inference when an output tensor is a weight.

- Fix the illegal memory access bug caused by incomplete parameter reset when the loader is used multiple times.

Others

- Fix the bug that arm-linux environment fails to compile runtime with release package due to missing dependency.

New Features

basic components

- Fix llvm compilation problems with the fp16 data type.

compiler-kernel

- Add an int8 dot hybrid conv algorithm with stride 1.

- Add elemwise for the VEC_BCAST111C f32 type.

- Start supporting float16 kernels (mk8 matmul, relayout and unary elemwise have been added).

- Add fp16 f23, f43 and f63 winograd kernels.

- Add an fp16 im2col kernel.

- Support the Gaussian blur operator.

- Add float16 reduce and pooling operators, and float16/float32 conversion operators.

- Modify the model decryption logic and remove the auxiliary tool hako-to-mgb: users no longer need to specify the hako version, and all decryption algorithms supported by megcc are tried automatically (the hako option is kept but marked as deprecated).

- Add binary and ternary elemwise operators for the float16 data type.

- Add a Conv1x1 operator for the float16 data type.

- Add benchmark support for fp16 models; add fp16 naive conv and matmul kernels.

- Add the VEC_BCAST110 elemwise mode on the arm platform.

- Add the gaussian blur cv op.

- Add gi fp16 gemv and gevm kernels.

- Add fp16 nchw88 channelwise kernels.
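Gaussian blur is separable: a 2-D Gaussian kernel is the outer product of two 1-D kernels, so the blur can run as a horizontal pass followed by a vertical pass. That costs about 2k instead of k^2 multiplications per pixel.

The sketch below applies any odd-length 1-D kernel this way. The choices made here are assumptions, and MegCC's cv operator may differ in weights, border mode and data type:

- Edge pixels are replicated at the border.
- The test uses the binomial weights {0.25, 0.5, 0.25}, a small Gaussian approximation picked so the arithmetic is exact.

The function names are hypothetical.

```c
#include <stddef.h>

/* Clamp an index into [0, n - 1] (replicate-border handling). */
static size_t clamp_index(ptrdiff_t i, size_t n) {
    if (i < 0) return 0;
    if ((size_t)i >= n) return n - 1;
    return (size_t)i;
}

/* Separable blur of a single-channel h x w float image with an odd-length
 * 1-D kernel k: horizontal pass into tmp, then vertical pass into dst
 * (hypothetical helper). */
static void separable_blur(const float *src, float *tmp, float *dst,
                           size_t h, size_t w, const float *k, size_t ksize) {
    ptrdiff_t r = (ptrdiff_t)(ksize / 2);
    for (size_t y = 0; y < h; ++y) {
        for (size_t x = 0; x < w; ++x) {
            float acc = 0.0f;
            for (ptrdiff_t t = -r; t <= r; ++t) {
                acc += k[t + r] * src[y * w + clamp_index((ptrdiff_t)x + t, w)];
            }
            tmp[y * w + x] = acc;
        }
    }
    for (size_t y = 0; y < h; ++y) {
        for (size_t x = 0; x < w; ++x) {
            float acc = 0.0f;
            for (ptrdiff_t t = -r; t <= r; ++t) {
                acc += k[t + r] * tmp[clamp_index((ptrdiff_t)y + t, h) * w + x];
            }
            dst[y * w + x] = acc;
        }
    }
}
```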

compiler-common

- Add riscv kernel unit test.

Improvements

compiler-kernel

- Optimize the performance of the MegCC naive argsort operator to match MegBrain.

- Optimize the performance of the MegCC naive topK operator to match MegBrain.

- Optimize the Conv 1x1 algorithm for the Group NCHW44 layout on the arm64 platform; after optimization, performance matches MegBrain.

- Optimize WarpPerspective for the case where the transform is a resize transform; after optimization, performance matches MegBrain.
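A perspective transform maps each output pixel (x, y) to a source position through a 3x3 matrix M: u = (m0 x + m1 y + m2) / (m6 x + m7 y + m8), and v likewise with m3, m4, m5. When m1 = m3 = m6 = m7 = 0, the mapping reduces to an axis-aligned scale plus translation. The per-pixel division then disappears, and a resize-style path can be used.

The check below is a hypothetical sketch of detecting that case. It is not MegCC's actual test, and the tolerance parameter is an assumption.

```c
#include <stdbool.h>

/* Absolute value without pulling in libm. */
static float abs_f32(float v) { return v < 0.0f ? -v : v; }

/* General perspective mapping of output pixel (x, y) to source (u, v)
 * with a row-major 3x3 matrix m. */
static void warp_map(const float m[9], float x, float y, float *u, float *v) {
    float w = m[6] * x + m[7] * y + m[8];
    *u = (m[0] * x + m[1] * y + m[2]) / w;
    *v = (m[3] * x + m[4] * y + m[5]) / w;
}

/* Hypothetical check: true when m reduces to u = sx * x + tx and
 * v = sy * y + ty (axis-aligned scale plus translation), in which case
 * a resize-style path could replace the general per-pixel division. */
static bool warp_is_scale_translate(const float m[9], float eps,
                                    float *sx, float *tx, float *sy, float *ty) {
    if (abs_f32(m[1]) > eps || abs_f32(m[3]) > eps ||
        abs_f32(m[6]) > eps || abs_f32(m[7]) > eps || abs_f32(m[8]) <= eps) {
        return false;
    }
    *sx = m[0] / m[8];
    *tx = m[2] / m[8];
    *sy = m[4] / m[8];
    *ty = m[5] / m[8];
    return true;
}
```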

Documentation

- Change the mgeconvert onnx-to-mge usage link.

- Update the bisection script and documentation.

- Update the usage documentation to make it more reasonable and complete.

Others

- Format documentation and code to make them more reasonable and standardized.

What's Changed

- fix(doc): update doc/first-use.md by @Asthestarsfalll in #36

- improvement(readme): unify readme format by @Asthestarsfalll in #35

- CI(project): and CI to fix spell by @tpoisonooo in #39

- fix(doc): add kernel exporter doc and fix some misc doc problem by @yeasoon in #34

- fix some misc by @yeasoon in #37

- Update internal by @yeasoon in #41

- fix(lib): add the Tiny CC library for Apple M1; by @Qsingle in #42

- feat(third_party): add fp16 llvm supported patch by @yeasoon in #45

New Contributors

- @Asthestarsfalll made their first contribution in #36

- @Qsingle made their first contribution in #42

Full Changelog: v0.1.3...v0.1.4

v0.1.3

MegCC

MegCC is a deep-learning model compiler with the following features:

- Extremely Lightweight Runtime: Only keep the required computation kernel in your binary. e.g., 81KB runtime for MobileNet v1

- High Performance: Every operation is carefully optimized by experts

- Portable: generate nothing but computation code, easy to compile and use on Linux, Android, TEE, BareMetal

- Low Memory Usage and Instant Boot: model optimization and memory planning are done at compile time, giving state-of-the-art memory usage with no extra CPU spent on them during inference

MegCC compiler is developed based on MLIR infrastructure. Most of the code generated by the compiler is optimized by hand. MegCC supports neural networks that contain tensors in static shape or dynamic shape. To help achieve the minimum binary size, it also supports generating the necessary CV operators so that you don't need to link another giant CV lib.

When compiling a model:

- MegCC generates both the kernels used by the model and user-required CV kernels

- MegCC does several optimizations, such as static memory planning and model optimization

- MegCC dumps the data above into the final model

- The MegCC runtime loads the model and uses the generated kernels to perform inference. Only an 81KB binary is required to run MobileNetV1 inference (in fp32).
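Static memory planning means every tensor's offset inside one arena is fixed at compile time, so the runtime performs no allocation during inference. Tensors whose lifetimes never overlap can share the same bytes.

The sketch below is a generic greedy heuristic, assuming each tensor's lifetime is known as a range of op indices. It processes tensors largest first and places each at the lowest offset that does not collide with an already-placed tensor that is alive at the same time. It is not MegCC's planner, and the struct and function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    size_t size;    /* bytes */
    int first_use;  /* op index that produces the tensor */
    int last_use;   /* op index of its last reader */
    size_t offset;  /* assigned offset in the arena (output) */
} PlanTensor;       /* hypothetical type */

static bool lifetimes_overlap(const PlanTensor *a, const PlanTensor *b) {
    return a->first_use <= b->last_use && b->first_use <= a->last_use;
}

static bool ranges_overlap(size_t a_off, size_t a_size, size_t b_off, size_t b_size) {
    return a_off < b_off + b_size && b_off < a_off + a_size;
}

static int by_size_desc(const void *pa, const void *pb) {
    const PlanTensor *a = *(const PlanTensor *const *)pa;
    const PlanTensor *b = *(const PlanTensor *const *)pb;
    return (a->size < b->size) - (a->size > b->size);
}

/* Assign offsets and return the arena size (0 if n == 0 or allocation fails). */
static size_t plan_memory(PlanTensor *t, size_t n) {
    PlanTensor **order = malloc(n * sizeof *order);
    if (!order) return 0;
    for (size_t i = 0; i < n; ++i) order[i] = &t[i];
    qsort(order, n, sizeof *order, by_size_desc);

    size_t arena = 0;
    for (size_t i = 0; i < n; ++i) {
        PlanTensor *cur = order[i];
        size_t off = 0;
        bool moved = true;
        /* Bump the candidate past each conflicting live tensor until it fits;
         * every skipped position overlaps some conflict, so this is first-fit. */
        while (moved) {
            moved = false;
            for (size_t j = 0; j < i; ++j) {
                const PlanTensor *p = order[j];
                if (lifetimes_overlap(cur, p) &&
                    ranges_overlap(off, cur->size, p->offset, p->size)) {
                    off = p->offset + p->size;
                    moved = true;
                }
            }
        }
        cur->offset = off;
        if (off + cur->size > arena) arena = off + cur->size;
    }
    free(order);
    return arena;
}
```

With three tensors of 100, 50 and 100 bytes alive over ops [0,1], [1,2] and [2,3], the two 100-byte tensors share offset 0. The arena is then 150 bytes instead of 250.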

MegCC supports Arm64, ArmV7, X86 and BareMetal backends. You may want to check the supported operator list.

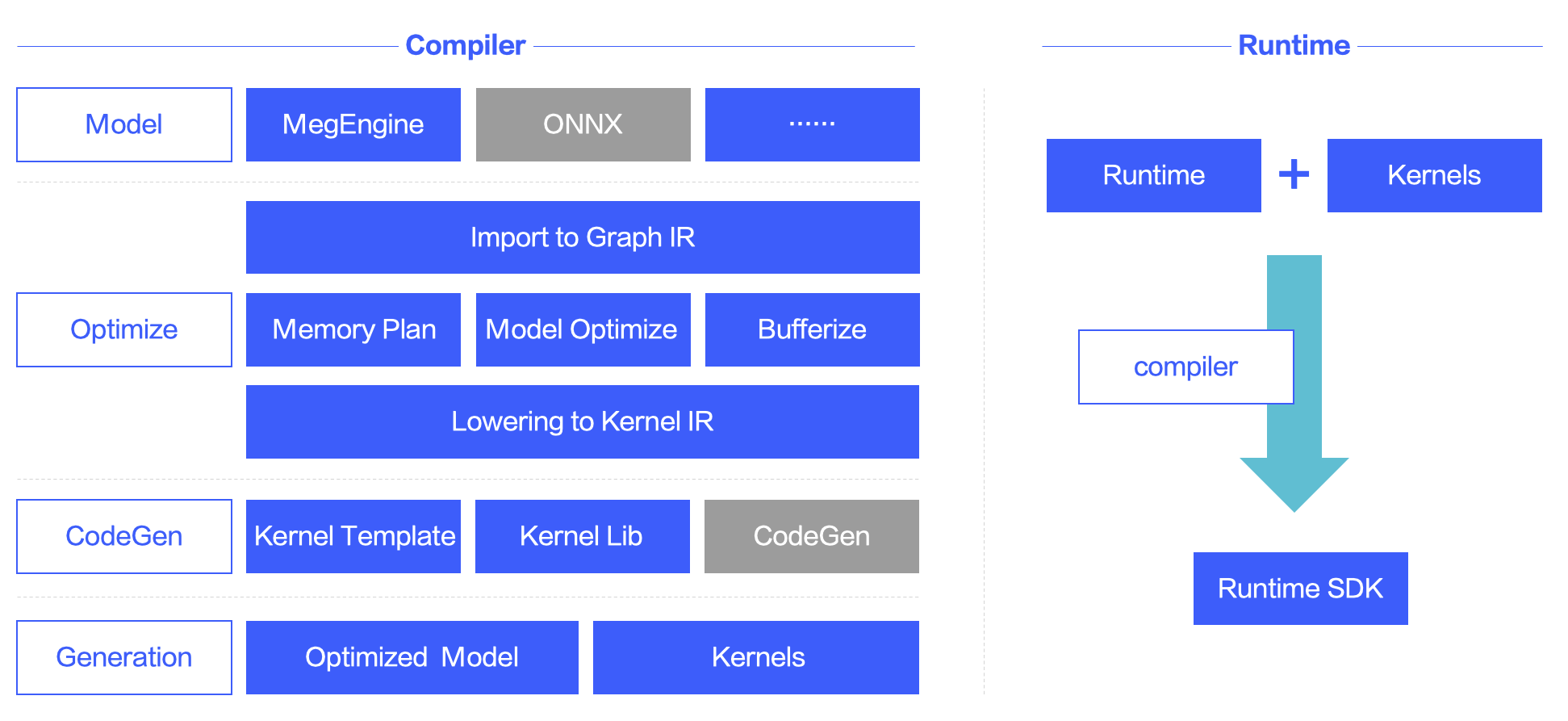

MegCC Structure

Documentation

Get MegCC

Download the release compiler suite from the release page.

To compile from source, please follow the compiler doc.

To build the release tar, please follow the release doc.

For benchmarks of different models, please refer to the benchmark doc.

How to use MegCC

Read how-to-use to see how to compile your models and deploy them; there is also a Chinese version, 如何使用 ("How to Use").

The MegCC runtime is easy to run on a standard OS, or even with no OS (example).

New Feature

- Support benchmarks for some typical classification models, including resnet18, resnet50, efficientnet, etc.

- Add kernel exporter tools to export the C code of a given kernel type.

- Support an elemwise kernel fuse pass to get faster models for inference.

- Add qadd, qrelu and other new kernels to support qint8 models.

- Optimize the arm64 sigmoid, conv3x3 and conv1x1 kernels.

- Update the MegEngine version.
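An elemwise fuse pass merges a chain of element-wise ops into one kernel. This avoids writing an intermediate tensor and reading it back. The sketch below contrasts an unfused ADD followed by RELU with the fused form. Which op patterns MegCC's pass actually fuses is not specified here, and the function names are hypothetical.

```c
#include <stddef.h>

/* Unfused: two elemwise kernels and an intermediate buffer that is
 * written by the first and re-read by the second (hypothetical helper). */
static void add_then_relu_unfused(const float *a, const float *b, float *tmp,
                                  float *out, size_t n) {
    for (size_t i = 0; i < n; ++i) tmp[i] = a[i] + b[i];                   /* ADD */
    for (size_t i = 0; i < n; ++i) out[i] = tmp[i] > 0.0f ? tmp[i] : 0.0f; /* RELU */
}

/* Fused: one kernel, one pass over memory, no intermediate buffer. */
static void fused_add_relu(const float *a, const float *b, float *out, size_t n) {
    for (size_t i = 0; i < n; ++i) {
        float v = a[i] + b[i];
        out[i] = v > 0.0f ? v : 0.0f;
    }
}
```

Both functions compute the same values. The fused one saves a buffer of n floats and one full read and write of it, which is where the inference speed-up comes from for memory-bound elemwise chains.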

Bug Fix

- Fix a bug that made gemv/gevm unusable.

- Fix a copy error in the ppl_gen.sh script.

v0.1.2

MegCC

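To address the oversized binary of the MegEngine inference library, the MegEngine team developed MegCC, a deep-learning model compiler based on MLIR. MegCC takes a MegEngine model as input and, after compilation and optimization, generates efficient pure-C kernels for running that model together with an optimized model in a custom format. MegCC has the following features:

Ultra-lightweight binary size: when compiling only mobilenetV1, the stripped runtime is just 81KB.

High performance: every operator has been carefully optimized, and Arm inference performance exceeds that of MegEngine.

Very easy to port: because the runtime and the kernels are pure C code, they are easy to deploy, including on Android, TEE, and microcontrollers without an operating system.

Low memory usage, fast startup: global graph optimization and static binding at model compile time reduce both memory usage and startup time.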

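Architecture Diagram

Support Status

Supported Platforms

MegCC currently supports only CPU platforms:

- Arm64, ArmV7: highly optimized

- X86: partially optimized

- risc-v: partially optimized

- Microcontrollers: not yet optimized

Supported data types:

- float32: highly optimized

- int8-dot: highly optimized

Supported Operator List

The supported operators are recorded in Operator List.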

Using MegCC

All MegCC documentation is collected under the doc directory of the MegCC git project: documentation, documentation entry point.