MedCLIP is a deep learning model for medical image captioning, based on the OpenAI CLIP architecture.

Run main.ipynb on a Colab instance.

Weights for the model are provided, so you don’t need to train again.

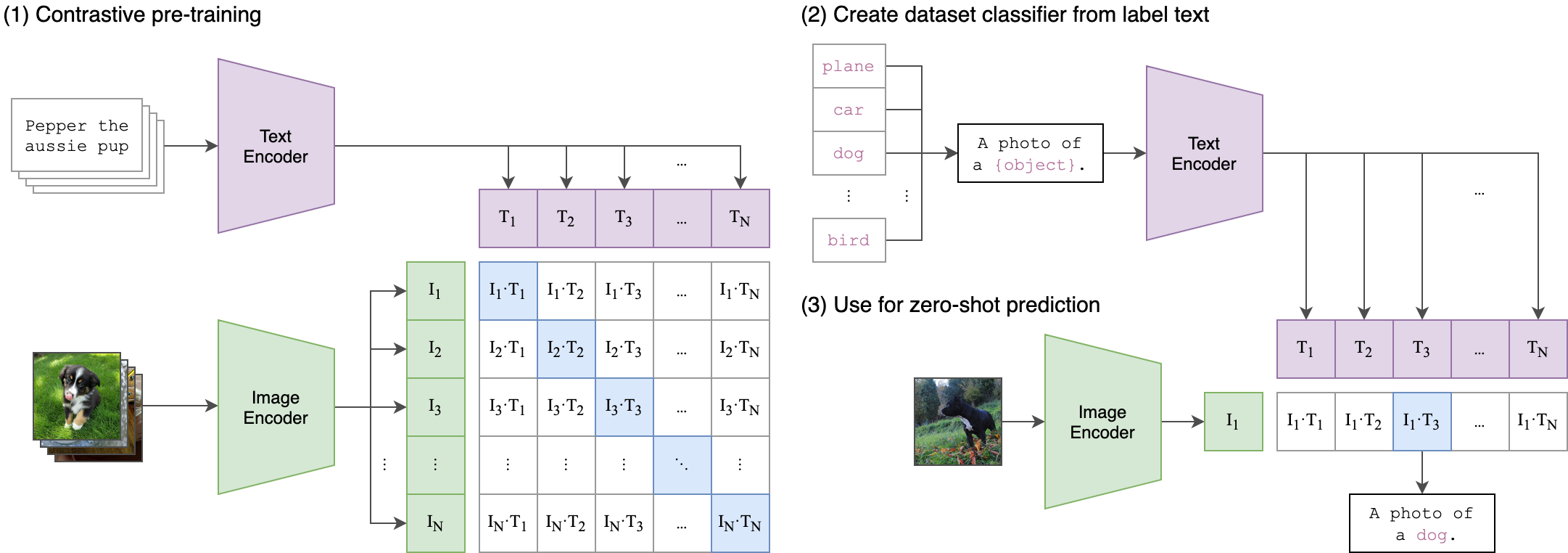

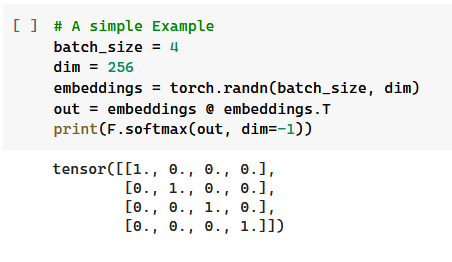

CLIP is a beautiful hashing process.

Through encodings and transformations, CLIP learns relationships between natural language and images. The underlying model supports either captioning an image from a set of known captions, or searching for an image that matches a given caption. With appropriate encoders, the CLIP model can be optimised for domain-specific applications. Our hope with MedCLIP is to help radiologists formulate diagnoses.

CLIP works by encoding an image and its related caption into tensors. The model then optimises the last layer of the (transfer-learnable) encoders so that the image and text encodings of a matching pair are as similar as possible. (1. Contrastive Pretraining)
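To make the contrastive step concrete, here is a minimal sketch of the symmetric cross-entropy objective described for the original CLIP. It is written in plain Python so it runs without dependencies; a real training loop would use a tensor library. The function names, the `temperature` value, and the toy embeddings are assumptions for illustration, and the loss used in `main.ipynb` may differ.

```python
import math

def l2_normalize(v):
    # Scale a non-zero vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_entropy(logits, target):
    # Numerically stable -log(softmax(logits)[target]).
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]

def clip_loss(image_embs, text_embs, temperature=0.07):
    # image_embs[k] and text_embs[k] are assumed to belong to the same pair.
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j]: scaled similarity between image i and caption j.
    logits = [[dot(img, txt) / temperature for txt in texts] for img in images]
    # Image-to-text direction: each image should pick its own caption.
    loss_images = sum(cross_entropy(logits[k], k) for k in range(n)) / n
    # Text-to-image direction: each caption should pick its own image.
    loss_texts = sum(
        cross_entropy([logits[r][k] for r in range(n)], k) for k in range(n)
    ) / n
    return (loss_images + loss_texts) / 2

# Toy batch: matching pairs point in the same direction.
image_embs = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = clip_loss(image_embs, [[1.0, 0.0], [0.0, 1.0]])
shuffled_loss = clip_loss(image_embs, [[0.0, 1.0], [1.0, 0.0]])
```

In this toy batch, `aligned_loss` is close to zero while `shuffled_loss` is large, which is the signal that pushes matching image and text encodings together.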

After the model is successfully trained, we can query it with new information. (2. Zero shot)

- Take an input
- Encode it with the custom-trained encoders
- Find a match (image or text) in the known data set:
  - Go through each entry of the data set
  - Check its similarity with the current input
  - Output the pairs with the highest similarity
- [Optionally] Measure the similarity between the real caption and the guessed one.
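The lookup steps above can be sketched as a nearest-neighbour search over precomputed embeddings. This is a dependency-free illustration, not the notebook's code: `find_best_matches`, the cosine similarity measure, and the three-dimensional toy embeddings are all assumptions. In practice the embeddings would come from the trained image and text encoders.

```python
import math

def cosine_similarity(a, b):
    # Cosine of the angle between two non-zero vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def find_best_matches(query_emb, dataset, top_k=1):
    # dataset: list of (label, embedding) pairs from the known data set.
    scored = [
        (cosine_similarity(query_emb, emb), label) for label, emb in dataset
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(label, score) for score, label in scored[:top_k]]

# Hypothetical caption embeddings; real ones are much higher-dimensional.
known_captions = [
    ("Axial CT of the chest", [0.9, 0.1, 0.0]),
    ("Sagittal MRI of the brain", [0.1, 0.9, 0.2]),
    ("Frontal chest X-ray", [0.2, 0.1, 0.9]),
]

# Hypothetical embedding of a new image.
query_image_emb = [0.15, 0.85, 0.1]
matches = find_best_matches(query_image_emb, known_captions, top_k=2)
best_caption = matches[0][0]
```

Searching for an image from a caption works the same way, with the roles swapped: the query is a text embedding and the data set holds image embeddings.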

The model was trained using a curated MedPix dataset that focuses on Magnetic Resonance, Computed Tomography and X-Ray scans. ClinicalBERT was used to encode the text and ResNet50 was used to encode the images.

Similarity between captions was measured using ROUGE, BLEU, METEOR and CIDEr.
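As a rough illustration of what such a caption metric computes, the sketch below implements a simplified ROUGE-1 F1 score (unigram overlap). It assumes whitespace tokenisation and lowercasing only, with no stemming, so its scores will not exactly match those of a full evaluation library. The function name and example captions are hypothetical.

```python
from collections import Counter

def rouge1_f1(reference, candidate):
    # Count unigrams in each caption (lowercased, split on whitespace).
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Clipped overlap: each word counts at most as often as it appears in both.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)

real_caption = "Axial CT of the chest"
guessed_caption = "CT of the abdomen"
score = rouge1_f1(real_caption, guessed_caption)
```

Here three of the guessed caption's four words appear in the reference, so precision is 0.75, recall is 0.6, and the F1 score is about 0.67.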

Possible improvements:

- Add new datasets; the more data the model has, the better the captioning performance, because there is a larger pool of captions and images to choose from. Some relevant datasets:
  - IU Chest X-Ray
  - ChestX-Ray 14
  - PEIR gross
  - BCIDR
  - CheXpert
  - MIMIC-CXR
  - PadChest
  - ICLEF caption
- Generate new captions instead of just looking them up. This should substantially improve accuracy.

- Repo Owner
- Jorge Allan Gomez Mercado
- Luis Soenksen