Note: the same paper may appear more than once under different categories.

- TVM: An Automated End-to-End Optimizing Compiler for Deep Learning

- Tensor Comprehensions: Framework-Agnostic High-Performance Machine Learning Abstractions

- Intel® nGraph™: An Intermediate Representation, Compiler, and Executor for Deep Learning

- MLIR: A Compiler Infrastructure for the End of Moore’s Law

A DL compiler framework with a modular design that can integrate different compilers.

- DLVM: A Modern Compiler Infrastructure for Deep Learning Systems

- Glow: Graph Lowering Compiler Techniques for Neural Networks

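- Halide is used for operator generation in image processing. It was the first to propose separating computation from scheduling, an idea TVM later extended to deep learning.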
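The compute/schedule separation can be illustrated with a toy model in plain Python. This is not Halide's API, and the function names (`blur_x`, `blur_y`, `schedule_*`) are hypothetical. A two-stage 3x3 box sum is written once as the "algorithm." Three "schedules" then evaluate it in different orders with different intermediate storage:

```python
# Toy model of separating the algorithm (what to compute) from the schedule
# (in what order, and where intermediates live). Hypothetical names; not Halide.

def blur_x(img, x, y):
    # Algorithm, stage 1: horizontal 3-tap sum.
    return img[y][x - 1] + img[y][x] + img[y][x + 1]


def blur_y(bx, x, y):
    # Algorithm, stage 2: vertical 3-tap sum over stage-1 values (looked up via bx).
    return bx(x, y - 1) + bx(x, y) + bx(x, y + 1)


def schedule_inline(img):
    # Schedule A: recompute stage 1 on demand, no intermediate buffer.
    h, w = len(img), len(img[0])
    bx = lambda x, y: blur_x(img, x, y)
    return [[blur_y(bx, x, y) for x in range(1, w - 1)] for y in range(1, h - 1)]


def schedule_root(img):
    # Schedule B: materialize all of stage 1 first, then run stage 2.
    h, w = len(img), len(img[0])
    buf = {(x, y): blur_x(img, x, y) for y in range(h) for x in range(1, w - 1)}
    bx = lambda x, y: buf[(x, y)]
    return [[blur_y(bx, x, y) for x in range(1, w - 1)] for y in range(1, h - 1)]


def schedule_tiled(img, tile=2):
    # Schedule C: walk output tiles and compute only the stage-1 values each
    # tile needs (its own rows plus a one-row halo above and below).
    h, w = len(img), len(img[0])
    out = [[0] * (w - 2) for _ in range(h - 2)]
    for ty in range(1, h - 1, tile):
        for tx in range(1, w - 1, tile):
            ys = range(ty, min(ty + tile, h - 1))
            xs = range(tx, min(tx + tile, w - 1))
            buf = {(x, y): blur_x(img, x, y)
                   for y in range(ys.start - 1, ys.stop + 1) for x in xs}
            bx = lambda x, y: buf[(x, y)]
            for y in ys:
                for x in xs:
                    out[y - 1][x - 1] = blur_y(bx, x, y)
    return out
```

All three schedules should return identical results. They differ only in how much of stage 1 is stored versus recomputed, and in what order, which is the kind of trade-off a schedule is meant to control.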

- Stripe: Tensor Compilation via the Nested Polyhedral Model

Uses the polyhedral model for operator generation.

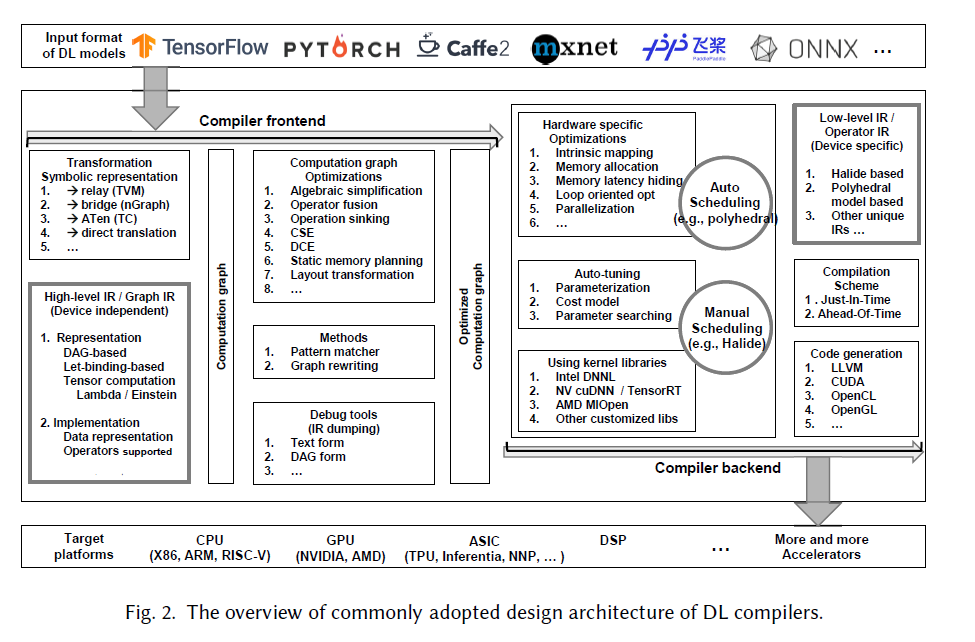

A good survey of DL compilers that summarizes their common design architecture.

Compares the performance of several compilers, including Halide, XLA, TVM, and TC.

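- UC Berkeley AI-Sys course: AI compiler slides

Presents Halide, TVM, and TC in turn, tracing how DL compilers evolved. Halide abstracts the schedule away from hardware complexity. TVM automatically optimizes operator schedules for different hardware. TC generates operator code fully automatically, with no need to consider the hardware at all.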

- TVM: An Automated End-to-End Optimizing Compiler for Deep Learning

- Relay: A New IR for Machine Learning Frameworks

- Relay: A High-Level Compiler for Deep Learning

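TVM's second-generation high-level IR. It resembles a programming language, with its own grammar rules, and introduces let-binding. Developers with a DL background can define computation graphs as dataflow graphs. Researchers with a PL (programming language) background can define them with let-bindings. Let-binding resolves the AST's ambiguity by making the computation scope explicit.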
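A minimal sketch of the sharing problem that let-binding addresses. The toy expression language below (`Var`, `Add`, `Square`, `Let`, `evaluate`) is hypothetical and is not Relay's API. In dataflow-graph form, one node with two uses looks like two separate copies to a naive tree walk. A `Let` pins down where the shared value is computed and that it is computed once:

```python
from dataclasses import dataclass
from typing import Union

# Hypothetical toy IR, only to illustrate let-binding; not Relay's classes.

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Add:
    lhs: "Expr"
    rhs: "Expr"

@dataclass(frozen=True)
class Square:
    # Stands in for an expensive operator; evaluate() counts how often it runs.
    arg: "Expr"

@dataclass(frozen=True)
class Let:
    var: Var
    value: "Expr"
    body: "Expr"

Expr = Union[Var, Add, Square, Let]

def evaluate(e, env, calls):
    if isinstance(e, Var):
        return env[e.name]
    if isinstance(e, Add):
        return evaluate(e.lhs, env, calls) + evaluate(e.rhs, env, calls)
    if isinstance(e, Square):
        calls["square"] += 1
        v = evaluate(e.arg, env, calls)
        return v * v
    if isinstance(e, Let):
        # The bound value is computed exactly once, in this scope, before the body.
        v = evaluate(e.value, env, calls)
        return evaluate(e.body, {**env, e.var.name: v}, calls)
    raise TypeError(f"unknown node: {e!r}")

x = Var("x")
shared = Square(x)
# Dataflow-graph style: one node with two uses; a tree walk visits it twice.
graph_form = Add(shared, shared)
# Let style: the computation scope of the shared value is explicit.
let_form = Let(Var("t"), Square(x), Add(Var("t"), Var("t")))
```

Evaluating both forms with `x = 3` gives the same value, but `graph_form` runs `Square` twice while `let_form` runs it once.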

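A generic backend design from the TVM team that defines its own instruction set and can be implemented on FPGAs. VTA, together with Relay and TVM, forms a complete end-to-end DL compilation stack. TVM used VTA to explore hardware-software co-design.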

- Automatically Tuned Linear Algebra Software (ATLAS, 1998)

- OpenTuner: An Extensible Framework for Program Autotuning (2014)

- Automatically Scheduling Halide Image Processing Pipelines (2016)

- Tensor Comprehensions: Framework-Agnostic High-Performance Machine Learning Abstractions (2018.2)

- Learning to Optimize Tensor Programs (AutoTVM, 2018.5)

- Learning to Optimize Halide with Tree Search and Random Programs (2019)

The following two papers are both template-free approaches built on TVM.

- Ansor: Generating High-Performance Tensor Programs for Deep Learning (2020.6)

Splits a schedule into two levels, sketch and annotation. A sketch plays roughly the role of a TVM schedule template. Ansor first searches for sketches, then searches for their annotations.
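A toy sketch of the two-level idea: first pick a loop structure (sketch), then fill in its concrete parameters (annotation). Everything here is an assumption made for illustration: the 64x64 loop nest, the two hand-written sketches, plain random sampling (Ansor itself uses evolutionary search), and the made-up cost function (Ansor uses a learned cost model).

```python
import random

# Hypothetical two-level search in the spirit of the sketch/annotation split.
N = 64  # assumed extent of both loops i and j

SKETCHES = [
    {"name": "untiled", "tiled": False},
    {"name": "tile_ij", "tiled": True},
]

def divisors(n):
    return [d for d in range(1, n + 1) if n % d == 0]

def sample_annotation(sketch, rng):
    # Annotation level: fill the sketch's open parameters with concrete tile sizes.
    if not sketch["tiled"]:
        return {"i_tile": N, "j_tile": N}
    return {"i_tile": rng.choice(divisors(N)), "j_tile": rng.choice(divisors(N))}

def toy_cost(ann):
    # Made-up cost: prefer tiles covering about 256 elements.
    return abs(ann["i_tile"] * ann["j_tile"] - 256)

def search(trials_per_sketch=20, seed=0):
    rng = random.Random(seed)
    best = None
    for sketch in SKETCHES:                  # level 1: choose a loop structure
        for _ in range(trials_per_sketch):   # level 2: sample its parameters
            ann = sample_annotation(sketch, rng)
            cost = toy_cost(ann)
            if best is None or cost < best[0]:
                best = (cost, sketch["name"], ann)
    return best
```

`search()` returns `(cost, sketch_name, annotation)`. Keeping the structure fixed while sampling only parameters keeps each inner search space small. That is the point of separating the two levels.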

Uses reinforcement learning for schedule search.

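The three WeChat public-account articles above introduce the basic principles of the polyhedral model (Poly) and its applications in DL. The author, 要术甲杰, holds a PhD in polyhedral compilation research.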

Another article by 要术甲杰, introducing the Pluto algorithm.

Uses shared memory to implement a more aggressive operator fusion strategy.
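A toy contrast between unfused kernels and one fused kernel that keeps intermediates on chip. It is written in Python rather than CUDA so it runs anywhere. Assumptions: a row-wise softmax stands in for the fused subgraph, each row plays the role of one thread block, and a Python list stands in for shared memory. The "global intermediates" count is illustrative only.

```python
import math

def softmax_unfused(x):
    row_max = [max(r) for r in x]                                   # kernel 1 (reduce)
    e = [[math.exp(v - m) for v in r] for r, m in zip(x, row_max)]  # kernel 2 (elementwise)
    s = [sum(r) for r in e]                                         # kernel 3 (reduce)
    out = [[v / t for v in r] for r, t in zip(e, s)]                # kernel 4 (elementwise)
    # Every intermediate above round-trips through global memory.
    global_intermediates = len(row_max) + sum(len(r) for r in e) + len(s)
    return out, global_intermediates

def softmax_fused(x):
    out = []
    for r in x:                      # one "thread block" per row
        smem = list(r)               # row loaded once into "shared memory"
        m = max(smem)
        smem = [math.exp(v - m) for v in smem]
        t = sum(smem)
        out.append([v / t for v in smem])  # only the final result is written out
    return out, 0
```

Both versions produce the same values. The fused one never writes the row max, the exponentials, or the row sum back to global memory. Keeping a reduction's inputs in an on-chip buffer is what lets element-wise and reduction ops be fused together.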

- Automatic differentiation in ML: Where we are and where we should be going

- Automatic Differentiation in Machine Learning: a Survey

Two surveys on automatic differentiation.

Jointly optimizes the scheduling and execution stages.

A series of articles by Yang Jun (杨军) of Alibaba.

Uses TVM to generate operators on the Sunway (神威) supercomputer.

Graph optimizations in TensorFlow