Assessor, as an adaptive colloquium, generates challenging training samples for symbolic regression systems like Boolformer. It fills the crucial role that self-play fills in AlphaZero, yet is tailored to the asymmetry of symbolic regression: 👉 Crafting puzzles is simpler than solving them (post on X)

The setup runs like a GAN. Assessor, a transformer based on nanoGPT, generates Boolean formulas. The formulas are used to train a system, and each formula is labeled easy if the trained system solves it and hard otherwise. Assessor is in turn trained on the labeled formulas to generate more challenging samples.

The project is young and moving quickly. Currently, the trained system is simulated by a script that labels a formula as easy or hard depending on whether its depth, after simplification, is below or above a given threshold. As Assessor learns to generate deeper formulas, the threshold is increased.
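The labeling rule and the rising threshold can be sketched as follows. This is a minimal illustration, not the script used in the repository: the function names, the `target_hard` share that triggers an increase, the step of one, and the choice to count a depth equal to the threshold as hard are all assumptions.

```python
from typing import List


def label(depth: int, threshold: int) -> str:
    """Label a formula by its depth after simplification.

    Assumption: a depth equal to the threshold counts as hard.
    """
    return "hard" if depth >= threshold else "easy"


def next_threshold(depths: List[int], threshold: int, target_hard: float = 0.5) -> int:
    """Raise the threshold by one once enough generated formulas are hard.

    `target_hard` is a hypothetical knob, not a value from the project.
    """
    if not depths:
        return threshold
    hard_share = sum(label(d, threshold) == "hard" for d in depths) / len(depths)
    return threshold + 1 if hard_share >= target_hard else threshold


# Simplified depths of one hypothetical batch of generated formulas.
depths = [3, 5, 6, 7, 8, 6]
threshold = 6
labels = [label(d, threshold) for d in depths]
new_threshold = next_threshold(depths, threshold)
print(labels, new_threshold)
```

The labels would feed back into Assessor's training, while the updated threshold keeps the task from saturating once most samples already count as hard.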

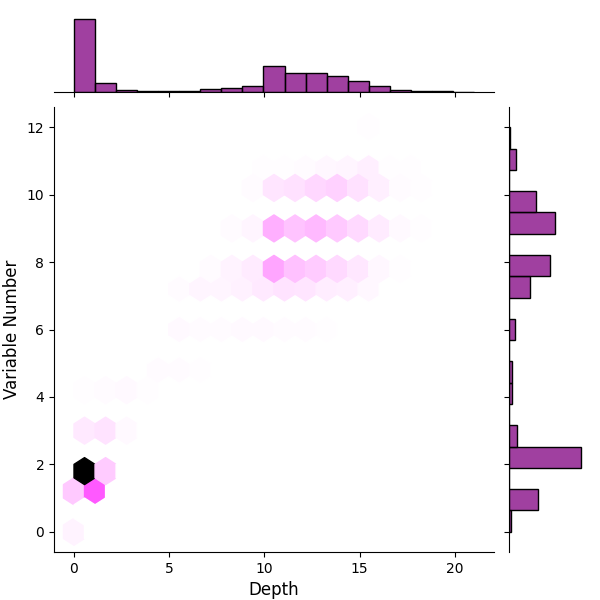

After just 330K iterations, 52.7% of the formulas Assessor generates have, after simplification, both depth >= 6 and >= 6 variables:

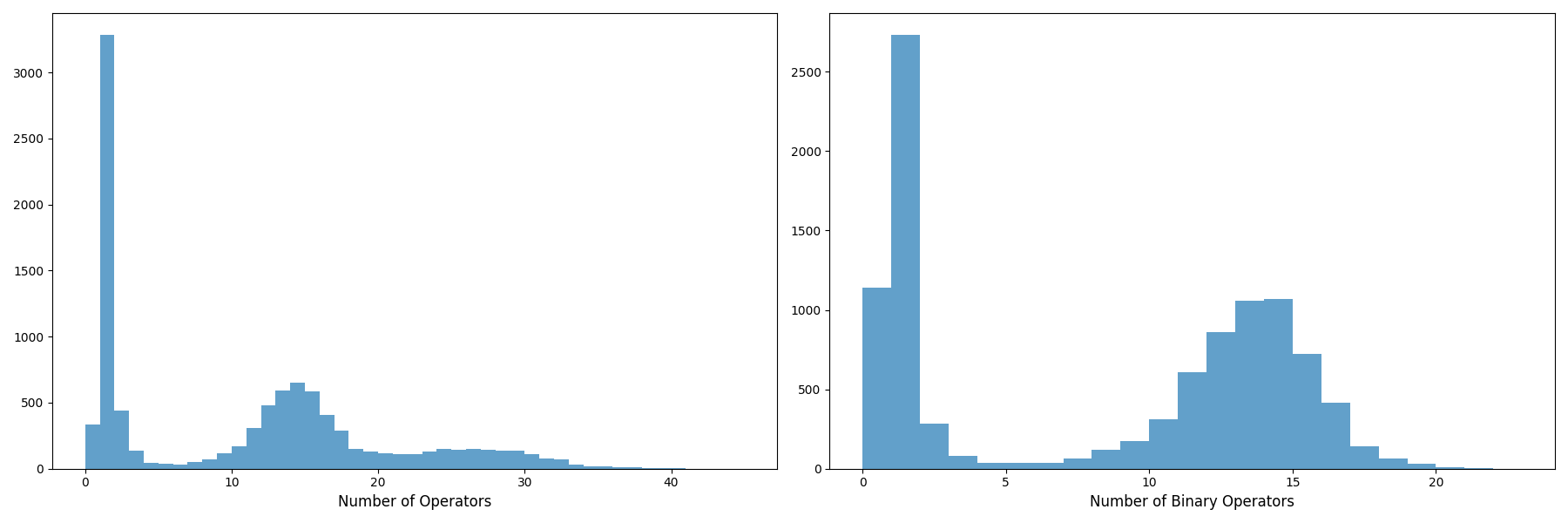

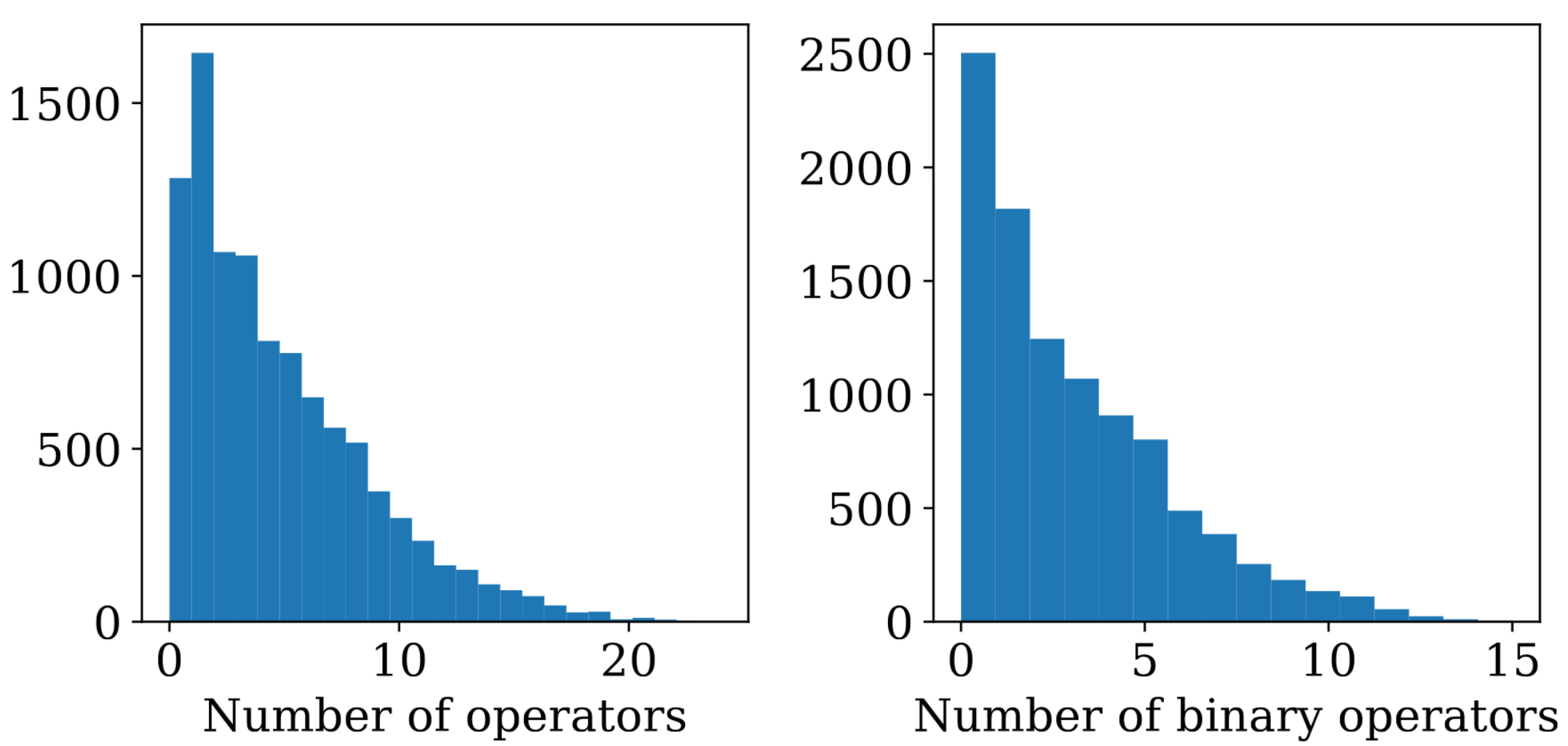

After simplification, the number of operators in Assessor's formulas follows a Gaussian-like distribution, which compares favorably with the decaying distribution of the crafted method currently employed by Boolformer. This holds despite Assessor's severe restrictions: Assessor is limited to 200 tokens and 12 variables, while Boolformer allows up to 1000 binary operators.

Assessor

Boolformer Paper - Fig. 8

First, navigate to the folder where you keep your projects and clone nanoGPT and this repository into it:

git clone https://github.com/karpathy/nanoGPT.git

git clone https://github.com/Majdoddin/assessor.git

Install the dependencies:

pip install torch matplotlib seaborn boolean.py

Then, open the repository folder:

cd assessor

Now, let's just run the trained Assessor. You need a model checkpoint. Download this 300M parameter model, which I trained within just 300K iterations to generate formulas with depth >= 6:

wget -P cwd https://huggingface.co/majdoddin/assessor/resolve/main/state-depth-6-2.pt

And run it:

export PYTHONPATH="${PYTHONPATH}:path/to/nanoGPT" && cd cwd && python ../assessor.py

You'll see each generated formula in Polish notation, followed by its simplified form, with the number of variables and the depth:

['or', 'and', 'and', 'or', 'and', 'or', 'and', 'or', 'and', 'or', 'and', 'or', 'and', 'x12', 'x4', 'x6', 'x12', 'x3', 'x4', 'x11', 'x5', 'x11', 'x7', 'x10', 'x4', 'x7', 'x10']

depth:12 var_num:8 simpified: x10|(x4&x7&(x10|(x7&(x11|(x5&(x11|(x4&(x3|(x12&(x6|(x12&x4)))))))))))
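To make the output format concrete, here is a small sketch that reads the token list above as prefix (Polish) notation and recomputes the two statistics. It is not the code in assessor.py: the helper names are hypothetical, the `not` operator is an assumption (it does not appear in the sample), and instead of a full simplifier it only merges directly nested `and`/`or` nodes of the same kind, which happens to be enough to reproduce the numbers for this sample.

```python
from typing import List, Set, Tuple, Union

Node = Union[str, Tuple]  # a variable name, or (operator, child, ...)

BINARY = {"and", "or"}
UNARY = {"not"}  # assumption: not present in the sample above


def parse_prefix(tokens: List[str]) -> Node:
    """Turn a prefix-notation token list into a nested tuple tree."""
    pos = 0

    def read() -> Node:
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("formula ended before all operands were read")
        tok = tokens[pos]
        pos += 1
        if tok in BINARY:
            left = read()
            right = read()
            return (tok, left, right)
        if tok in UNARY:
            return (tok, read())
        return tok  # anything else is treated as a variable

    tree = read()
    if pos != len(tokens):
        raise ValueError("trailing tokens after a complete formula")
    return tree


def depth(node: Node, parent_op: str = "") -> int:
    """Depth where a chain like and(and(a, b), c) counts as one level."""
    if isinstance(node, str):
        return 0
    op = node[0]
    below = max(depth(child, op) for child in node[1:])
    merged = op in BINARY and op == parent_op
    return below if merged else below + 1


def variables(node: Node) -> Set[str]:
    """Collect the distinct variable names in the tree."""
    if isinstance(node, str):
        return {node}
    return set().union(*(variables(child) for child in node[1:]))


tokens = ['or', 'and', 'and', 'or', 'and', 'or', 'and', 'or', 'and', 'or',
          'and', 'or', 'and', 'x12', 'x4', 'x6', 'x12', 'x3', 'x4', 'x11',
          'x5', 'x11', 'x7', 'x10', 'x4', 'x7', 'x10']
tree = parse_prefix(tokens)
print(depth(tree), len(variables(tree)))  # 12 and 8 for this sample
```

The raw binary tree is 13 operators deep; the two consecutive `and` nodes near the root collapse into one level, which gives the reported depth of 12.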

## Analysis

analysis.py cwd/output.txt

This generates graphical statistics of the output in cwd.
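For a quick look at the numbers without plotting, the statistics lines can also be read directly. The sketch below is not analysis.py: it assumes the log contains lines in the `depth:… var_num:… simpified: …` shape shown earlier, and the function names and the second sample line are made up for illustration.

```python
import re
from typing import Iterable, List, Tuple

# Matches the statistics line; accepts both "simpified" (as printed in the
# sample output) and "simplified".
STAT_LINE = re.compile(r"depth:(\d+)\s+var_num:(\d+)\s+simpl?ified:\s*(.+)")


def read_stats(lines: Iterable[str]) -> List[Tuple[int, int, str]]:
    """Return (depth, var_num, simplified formula) for every matching line."""
    stats = []
    for line in lines:
        match = STAT_LINE.search(line)
        if match:
            stats.append((int(match.group(1)), int(match.group(2)),
                          match.group(3).strip()))
    return stats


def share_hard(stats: List[Tuple[int, int, str]],
               min_depth: int = 6, min_vars: int = 6) -> float:
    """Fraction of formulas with depth >= min_depth and var_num >= min_vars."""
    if not stats:
        return 0.0
    hits = sum(1 for d, v, _ in stats if d >= min_depth and v >= min_vars)
    return hits / len(stats)


def operator_count(formula: str) -> int:
    """Count the & and | operators in a simplified formula string."""
    return formula.count("&") + formula.count("|")


sample = [
    "['or', 'and', 'x1', 'x2', 'x3']",  # token lines are skipped
    "depth:12 var_num:8 simpified: x10|(x4&x7&(x10|(x7&(x11|(x5&(x11|(x4&(x3|(x12&(x6|(x12&x4)))))))))))",
    "depth:2 var_num:3 simpified: x3|(x1&x2)",  # hypothetical second entry
]
stats = read_stats(sample)
print(share_hard(stats), [operator_count(f) for _, _, f in stats])
```

To run it on a real log, pass an open file instead of `sample`, for example `read_stats(open("cwd/output.txt"))`.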

You can train from scratch or from a checkpoint.

Download a checkpoint

Set the variables checkpoint, sec_round, min_depth, eval, start, end, and logf, and comment out the to_test_a_checkpoint lines in assessor.py.