Note these are my own personal notes and are a work in progress as I study towards passing this exam. If this helps someone great, but I make no guarantees/promises.

- Introduction

- Data Engineering

- Exploratory Data Analysis

- Modeling

- Machine Learning Implementation and Operations

- Question Answer Shortcuts

- Acronyms

AWS Certified Machine Learning – Specialty (MLS-C01) Exam Guide

Don't forget to use a benefit code if you've previously passed another AWS exam to save on the exam fee

| Domain | % of Exam |
|---|---|
| Domain 1: Data Engineering | 20% |
| Domain 2: Exploratory Data Analysis | 24% |
| Domain 3: Modeling | 36% |
| Domain 4: Machine Learning Implementation and Operations | 20% |
| Total | 100% |

- 180 minutes

- 65 questions

- Scaled score of 750 out of 1000 (roughly 75%) to pass

- Good to use the bathroom before the exam

- Average question time should be between 2 and 2 1/2 minutes

- Try to understand each question thoroughly

Identify data sources (e.g., content and location, primary sources such as user data) and determine storage mediums (e.g., DB, Data Lake, S3, EFS, EBS)

- fully managed, scalable cloud data warehouse, columnar instead of row based (based on Postgres, No OLTP [row based], but OLAP [column based]); no Multi-AZ

- As it is a data warehouse, can only store structured data

- Offers parallel SQL queries

- Can be serverless or use cluster(s)

- Uses SQL to analyze structured and semi-structured data across data warehouses, operational DBs, and data lakes

- Integrates with Quicksight or Tableau

- Leader node for query planning, results aggregation

- Compute node(s) for performing queries to be sent back to the leader

- Provision node sizes in advance

- Enhanced VPC Routing forces all COPY and UNLOAD traffic between your cluster and data repositories through your VPC; without it, that traffic is routed over the internet, including traffic to other AWS services

- Can configure to automatically copy snapshots to other Regions

- Large inserts are better (S3 copy, firehose)

- Resides on dedicated Amazon Redshift servers independent of your cluster

- Can efficiently query and retrieve structured and semistructured data from files in S3 into Redshift Cluster tables (points at S3) without loading data into Redshift tables

- Pushes many compute intensive tasks such as predicate filtering (ability to skip reading unnecessary data at storage level from a data set into RAM) and aggregation, down to the Redshift Spectrum layer

- Redshift Spectrum queries use much less of your cluster's processing capacity than other queries

- Autoscaling when running out of storage

- OLTP based

- Must be provisioned

- Max read replicas: 5

- Read replicas are not equal to a DB

- Read replicas cross region/AZ incur $

- IAM Auth

- Integrates with Secrets Manager

- Supports MySQL, MariaDB, PostgreSQL, Oracle, Aurora

- Fully customized => MS SQL Server or RDS Custom for Oracle => can use ssh or SSM session manager; full admin access to OS/DB

- At rest encryption via KMS

- Use SSL for data in transit to ensure secure access

- Use permission boundary to control the maximum permissions employees can grant to the IAM principals (eg: to avoid dropped/deleted tables)

- Multi-AZ:

- Can be set at creation or live

- Synchronous replication, at least 2 AZs in region, while Read replicas => asynchronous replication can be in an AZ, cross-AZ or cross Region

- MySQL or Postgres

- OLTP based

- Better performance than RDS version

- Lower price

- At rest encryption via KMS

- 2 copies in each AZ, with a minimum of 3 AZ => 6 copies

- max read replica: 15 (autoscales)

- Shareable snapshots with other accounts

- Replicas: MySQL, Postgres, or Aurora

- Replicas can autoscale

- Cross region replication (< 1 second) support available

- Aurora Global: multi region (up to 5)

- Aurora Cloning: copy of production (faster than a snapshot)

- Aurora multimaster (for write failover/high write availability)

- Aurora serverless for cost effective option (pay per second) for infrequent, intermittent or unpredictable workloads

- Non-serverless option must be provisioned

- Automated backups

- Automated failover with Aurora replicas

- Fail over tiers: lowest ranking number first, then greatest size

- Aurora ML: ML using SageMaker and Comprehend on Aurora
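The failover tier rule above (lowest ranking number first, then greatest size) can be illustrated with a small sketch. The `Replica` class, the replica names, and the numeric `size_rank` stand-in for instance size are all hypothetical; this only mimics the ordering rule and is not how Aurora is actually driven.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    name: str       # hypothetical replica identifier
    tier: int       # promotion tier: a lower number means higher priority
    size_rank: int  # hypothetical stand-in for instance size (bigger = larger)


def pick_failover_target(replicas):
    """Return the replica promoted first: lowest tier, ties broken by largest size."""
    if not replicas:
        raise ValueError("no replicas available for failover")
    return min(replicas, key=lambda r: (r.tier, -r.size_rank))


replicas = [
    Replica("reader-a", tier=1, size_rank=2),
    Replica("reader-b", tier=0, size_rank=1),
    Replica("reader-c", tier=0, size_rank=4),
]
print(pick_failover_target(replicas).name)  # reader-c
```

Here `reader-b` and `reader-c` share the best tier, so the larger of the two wins.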

- Scalable, highly available, serverless, and managed Apache Cassandra compatible (NoSQL) DB service offering consistent single-digit millisecond server-side read/write performance, while also providing HA and data durability

- Uses the Cassandra Query Language (CQL)

- All writes are replicated 3x across multiple AWS AZ for durability and availability

- Tables can scale up and down with virtually unlimited throughput and storage. There is no limit on the size of a table or the number of rows you can store in a table.

- Effectively the AWS "Aurora" version of MongoDB

- Used to store, query, and index JSON data

- Similar "deployment concepts" as Aurora

- Fully managed, HA with replication across 3 AZs

- Storage automatically grows in increments of 10 GB, up to 64 TB

- Automatically scales to workloads with millions of requests per second

- Anything related to MongoDB => DocumentDB

- Doesn't have an in-memory caching layer => Consider DynamoDB (DAX) for a NoSQL approach

- (Serverless) NoSQL Key-value / document DB that delivers single-digit millisecond performance at any scale. It's a fully managed, multi-region, multi-master, durable DB with built-in security, backup and restore, and in-memory caching for internet scale applications

- Stored on SSD

- Good candidate to store ML model served by application(s)

- Stored across 3 geographically distinct data centers

- Eventually consistent reads (default) or strongly consistent reads (1 sec or less)

- Session storage alternative (TTL)

- IAM for security, authorization, and administration

- Primary key possibilities could involve creation time

- On-Demand (pay per request pricing) => $$$

- Provisioned Mode (default) is less expensive where you pay for provisioned RCU/WCU

- Backup: optionally lasts 35 days and can be used to recreate the table

- Standard and IA Table Classes are available

- Max size of an item in DynamoDB Table: 400KB

- Can be exported to S3 as DynamoDB JSON or ion format

- Can be imported from S3 as CSV, DynamoDB JSON or ion format

- Service to search any field, even partial matches at petabyte scale

- Common to use as a complement to another DB (conduct search in the service, but retrieve data based on indices from an actual DB)

- Requires a cluster of instances (can also be Multi-AZ)

- Doesn't support SQL (own query language)

- Comes with Opensearch dashboards (visualization)

- Built in integrations: Amazon Data Firehose, AWS IOT, λ, Cloudwatch logs for data ingest

- Security through Cognito and IAM, KMS encryption, SSL and VPC

- Can help efficiently store and analyze logs (amongst cluster) for uses such as Clickstream Analytics

- Patterns:

sequenceDiagram

participant Kinesis data streams

participant Amazon Data Firehose (near real time)

participant OpenSearch

Kinesis data streams->>Amazon Data Firehose (near real time):

Amazon Data Firehose (near real time)->>OpenSearch:

Amazon Data Firehose (near real time)->>Amazon Data Firehose (near real time): data transformation via λ

sequenceDiagram

participant Kinesis data streams

participant λ (real time)

participant OpenSearch

Kinesis data streams->>λ (real time):

λ (real time)->>OpenSearch:

- Good to improve latency and throughput for read heavy applications or compute intensive workloads

- Good for storing sessions of instances

- Good for performance improvement of DB(s), though using it involves heavy application code changes

- Must provision EC2 instance type(s)

- IAM auth not supported

- Redis versus Memcached:

- Redis:

- backup and restore features

- read replicas to scale reads/HA

- data durability using AOF persistence

- multi AZ with failover

- Redis sorted sets are good for leaderboards

- Redis Auth tokens enable Redis to require a token (password) before allowing clients to execute commands, thus improving security (SSL/Inflight encryption)

- Fast in-memory data store providing sub-millisecond latency, HIPAA compliant, replication, HA, and cluster sharding

- Memcached:

- Multinode partitioning of data (sharding)

- No replication (HA)

- Non persistence

- No backup/restore

- Multithreaded

- Supports SASL auth


- Service to migrate (no transformations) data from supported sources to relational DBs, data warehouses, streaming platforms, and other data stores in AWS without new code (or any?)

- Sources:

- On-premises and EC2 DBs: Oracle, MS SQL Server, MySQL, MariaDB, PostgreSQL, MongoDB, SAP, DB2

- Azure: Azure SQL DB

- Amazon RDS: all including Aurora

- S3

- Targets

- On-premises and EC2 DBs: Oracle, MS SQL Server, MySQL, MariaDB, PostgreSQL, SAP

- Amazon RDS: all including Aurora

- Amazon Redshift

- DynamoDB

- S3

- Amazon OpenSearch Service (formerly Amazon Elasticsearch Service)

- Kinesis Data Streams

- DocumentDB

- Homogeneous migration: Oracle => Oracle

- Heterogeneous migration: Oracle => Aurora

- An EC2 server runs the replication software (DMS), which also performs continuous data replication using Change Data Capture (CDC) [for new deltas]

- Can pre-create target tables manually or use AWS Schema Conversion Tool (SCT) [runs on the same server] to create some/all of the target tables, indices, views, etc. (only necessary for heterogeneous case)

- Offers centralized architecture within S3

- Can store structured, semi-structured and unstructured data

- Decouples storage (S3) from compute resources

- Analogous to S3, any format is permitted, but typically they are: CSV, JSON, Parquet, ORC, Avro, and Protobuf

| Data Warehouse | Capability | Data Lake |
|---|---|---|
| structured, processed | data | structured, semi-structured, unstructured, raw |
| schema-on-write | processing | schema-on-read |
| hierarchically archived | storage | object-based, no hierarchy |
| less agile, fixed configuration | agility | highly agile, configure and reconfigure as needed |
| mature | security | maturing |
| business professionals | users | data scientists et al. |

- Service to allow objects/files within a virtual "directory"

- Bucket names must be globally unique

- Buckets exist within AWS regions

- Not a file system, though if a file system is needed, EBS/EFS/FSx should be considered

- Not mountable the way an NFS share is

- Supports any file format

- Name formalities:

- Must not start with the prefix 'xn--'

- Must not end with the suffix '-s3alias'

- Not an IP address

- Must start with a lowercase letter or number

- Between 3-63 characters long

- No uppercase

- No underscores
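The naming rules listed above can be turned into a quick checker. This is a minimal sketch that covers only the rules in these notes (AWS documents a few more), and the function name `is_valid_bucket_name` is made up for illustration.

```python
import ipaddress
import re


def is_valid_bucket_name(name: str) -> bool:
    """Check a candidate bucket name against the rules listed in these notes."""
    if not 3 <= len(name) <= 63:
        return False
    if not re.match(r"[a-z0-9]", name):  # must start with a lowercase letter or number
        return False
    if any(c.isupper() for c in name) or "_" in name:
        return False
    if name.startswith("xn--") or name.endswith("-s3alias"):
        return False
    try:
        ipaddress.IPv4Address(name)  # names formatted like an IP address are rejected
        return False
    except ValueError:
        return True


for candidate in ["my-bucket-1", "My-Bucket", "my_bucket", "192.168.5.4", "xn--bucket"]:
    print(candidate, is_valid_bucket_name(candidate))
```

Only the first candidate passes; each of the others breaks exactly one of the listed rules.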

- Each has a key, its full path within the S3 bucket including the object/file name, separated by forward slashes ("/")

- Each has a value, its content

- Note there is no such thing as a true directory within S3, but the convention effectively serves as a namespace

- Compression is good for cost savings concerning persistence

- Max size is 5 TB

- Use Multi-Part upload when uploading > 100 MB (recommended) and always for > 5 GB (required)

- S3 Transfer Acceleration also can be utilized to increase transfer rates (upload and download) by going through an AWS edge location that passes the object to the target S3 bucket (can work with Multi-Part upload)

- Strong consistency model to reflect latest version/value upon write/delete to read actions

- Version ID if versioning enabled at the bucket level

- Metadata (list of key/val pairs)

- Tags (up to 10 Unicode key/val pairs) handy for lifecycle/security

- Endpoint offers HTTP (non encrypted) and HTTPS (encryption in flight via SSL/TLS)
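The object size thresholds above (5 TB maximum, multi-part recommended above 100 MB and required above 5 GB) can be captured in a small planning helper. This is a sketch under a few assumptions: decimal units are used for simplicity, the 10,000-part ceiling comes from S3's published multi-part limits rather than from these notes, and the function names are hypothetical.

```python
MB = 1000 ** 2
GB = 1000 ** 3
TB = 1000 ** 4

MULTIPART_RECOMMENDED = 100 * MB  # above this, multi-part upload is recommended
SINGLE_PUT_LIMIT = 5 * GB         # above this, multi-part upload is required
MAX_OBJECT_SIZE = 5 * TB          # largest object S3 will store
MAX_PARTS = 10_000                # assumption: S3's documented part-count limit


def upload_strategy(size_bytes: int) -> str:
    """Classify an upload by the thresholds in the notes."""
    if size_bytes > MAX_OBJECT_SIZE:
        raise ValueError("object exceeds the 5 TB maximum")
    if size_bytes > SINGLE_PUT_LIMIT:
        return "multipart (required)"
    if size_bytes > MULTIPART_RECOMMENDED:
        return "multipart (recommended)"
    return "single PUT"


def part_count(size_bytes: int, part_size_bytes: int) -> int:
    """Number of parts needed for a given part size (integer ceiling division)."""
    parts = -(-size_bytes // part_size_bytes)
    if parts > MAX_PARTS:
        raise ValueError("part size too small: more than 10,000 parts needed")
    return parts


print(upload_strategy(1 * GB))       # multipart (recommended)
print(part_count(1 * GB, 100 * MB))  # 10
```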

Security (an IAM principal can access if either of the policy types below allows it and there is no explicit Deny present):

- Types

- User Based: governed by IAM policies (eg: which user, within a given AWS account, via IAM should be allowed to access resources)

- Resource Based:

- Bucket Policies (JSON based statements) governing such things as:

- (Blocking) public access [setting was created to prevent company data leaks, and can be set at the account level to ensure inheritance]

- Forced encryption at upload (necessitates encryption headers). Can alternatively be done by "default encryption" via S3, though Bucket Policies are evaluated first

- Cross account access

- Bucket policy statement attributes

- SID: statement id

- Resources: per S3, either buckets or objects

- Effect: Allow or Deny

- Actions: The set of api action(s) to apply the effect to

- Principal: User/Account the policy applies to


- Object Access Control List (ACL) - finer control of individual objects (eg: block public access)

- Bucket Access Control List (ACL) - control at the bucket level (eg: block public access)

- S3 Object(s) are owned by the AWS account that uploaded it, not the bucket owner

- Settings to block public access to bucket(s)/object(s) can be set at the account level

- S3 is accessible to other AWS resources via:

- VPC endpoint (private connection)

- bucket policy tied to aws:SourceVpce (for one endpoint)

- bucket policy tied to aws:SourceVpc (for all possible endpoint(s))

- Public internet via an IGW => public IP, with a bucket policy tied to aws:SourceIp


- S3 Access Logs can be stored to another S3 bucket (not the same to prevent infinite looping)

- Api calls can be sent to AWS CloudTrail

- MFA Delete of object(s) within only versioned buckets to prevent accidental permanent deletions [only enabled/disabled by bucket owner via CLI]

| | Std | Intelligent Tiering | Std-IA | One Zone-IA | Glacier Instant Retrieval | Glacier Flexible Retrieval | Glacier Deep Archive |
|---|---|---|---|---|---|---|---|
| *Durability | 99.999999999% | 99.999999999% | 99.999999999% | 99.999999999% | 99.999999999% | 99.999999999% | 99.999999999% |
| *Availability | 99.99% | 99.9% | 99.9% | 99.5% | 99.9% | 99.99% | 99.99% |
| *Availability SLA | 99.9% | 99% | 99% | 99% | 99% | 99.9% | 99.9% |
| *AZs | >= 3 | >= 3 | >= 3 | 1 | >= 3 | >= 3 | >= 3 |
| *Min Storage Duration Charge | None | None | 30 days | 30 days | 90 days | 90 days | 180 days |
| *Min Billable Obj Size | None | None | 128 KB | 128 KB | 128 KB | 40 KB | 40 KB |
| *Retrieval Fee | None | None | Per GB | Per GB | Per GB | Per GB | Per GB |
| *Storage Cost (GB per month) | .023 | .0025 - .023 | .0125 | .01 | .004 | .0036 | .00099 |
| *Retrieval Cost (per 1000 requests) | GET: .0004 POST: .005 | GET: .0004 POST: .005 | GET: .001 POST: .01 | GET: .001 POST: .01 | GET: .01 POST: .02 | GET: .0004 POST: .03 Expedited: $10 Std: .05 Bulk: free | GET: .0004 POST: .05 Std: .10 Bulk: .025 |
| *Retrieval Time | immediate | immediate | immediate | immediate | immediate (milliseconds) | Expedited (1-5 mins) Std (3-5 hrs) Bulk (5-12 hrs) | Std (12 hrs) Bulk (48 hrs) |
| *Monitoring Cost (per 1000 requests) | 0 | .0025 | 0 | 0 | 0 | 0 | 0 |

*Note: US-East-1 for the sake of example, entire table subject to change by AWS

- Durability: How unlikely it is for a stored file to be lost

- Availability: How readily S3 bucket/files are available

- Used for frequently accessed data

- Provides high throughput and low latency

- Good for mobile and gaming applications, pseudo cdn, big data/analytics

- Good for data less frequently accessed that needs immediate access

- Cheaper than Standard

- Good for Disaster Recovery and/or backups

- Modest fee for monthly monitoring and auto-tiering

- Moves objects between tiers based on usage

- Access tiers include:

- Frequent (automatic) default

- Infrequent (automatic) not accessed for 30 days

- Archive Instant (automatic) not accessed for 90 days

- Archive (optional) configurable from 90 days to 700+ days

- Deep Archive (optional) configurable from 180 days to 700+ days

- Data lost when AZ is lost/destroyed

- Good for re-creatable data or secondary backup copies of on-prem data

- Never setup a transition to glacier classes if usage might need to be rapid

- Good for archiving/backup

- Glacier Instant Retrieval is a good option for accessing data once a quarter

- Harness Glacier Vault Lock (WORM) to no longer allow future edits, which is great for compliance and data retention

- Glacier or Deep Archive are good for infrequently accessed objects that don't need immediate access

graph LR

A[Std] --> B[Std-IA]

A --> C[Intelligent-Tiering]

A --> D[One Zone-IA]

A --> E[Glacier]

A --> F[Glacier Deep Archive]

B --> C

B --> D

B --> E

B --> F

C --> D

C --> E

C --> F

D --> E

D --> F

E --> F

- Transition Actions: rules for when to transition objects between S3 classes (see S3 storage classes above)

- Expiration Actions: rules for when to delete an object after some period of time

- Good for deleting log files, deleting old versions of files (if versioning enabled), or incomplete multi-part uploads

- Rules can be created for object prefixes (addresses) or associated object tags
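The transition diagram above is a one-way "waterfall": objects can only move toward colder classes. A minimal sketch of checking a planned set of transition actions against that diagram follows; the `ALLOWED` map is copied from the arrows in the diagram, while the function name and the `(days, target_class)` tuple format are assumptions made for illustration (this is not the S3 lifecycle API format).

```python
# Allowed transitions, taken from the arrows in the diagram above.
ALLOWED = {
    "Std": {"Std-IA", "Intelligent-Tiering", "One Zone-IA", "Glacier", "Glacier Deep Archive"},
    "Std-IA": {"Intelligent-Tiering", "One Zone-IA", "Glacier", "Glacier Deep Archive"},
    "Intelligent-Tiering": {"One Zone-IA", "Glacier", "Glacier Deep Archive"},
    "One Zone-IA": {"Glacier", "Glacier Deep Archive"},
    "Glacier": {"Glacier Deep Archive"},
    "Glacier Deep Archive": set(),
}


def invalid_transitions(start_class, transitions):
    """Walk (days, target_class) pairs in day order and list moves the diagram forbids."""
    problems = []
    current = start_class
    for days, target in sorted(transitions):
        if target not in ALLOWED.get(current, set()):
            problems.append(f"day {days}: {current} -> {target} is not allowed")
        current = target
    return problems


print(invalid_transitions("Std", [(30, "Std-IA"), (90, "Glacier")]))  # []
print(invalid_transitions("Std", [(30, "Glacier"), (90, "Std-IA")]))
```

The second plan is rejected because it tries to move an object from Glacier back to Std-IA.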

- Harnesses disparate key [path] to speed up queries (eg: Athena)

- Typical scenarios are:

- time/date (eg: s3://bucket/datasetname/year/month/day/....)

- product (eg: s3://bucket/datasetname/productid/...)

- Partitioning handled by tools such as Kinesis, Glue, etc.
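As a rough illustration of the time/date layout above, the sketch below builds a partitioned object key. The function name, dataset name, and file name are hypothetical; in practice tools such as Kinesis or Glue would generate these paths.

```python
from datetime import date


def partitioned_key(dataset: str, day: date, filename: str) -> str:
    """Build a year/month/day partitioned key so queries can prune by prefix."""
    return f"{dataset}/{day.year:04d}/{day.month:02d}/{day.day:02d}/{filename}"


key = partitioned_key("datasetname", date(2024, 3, 7), "events.parquet")
print(f"s3://bucket/{key}")  # s3://bucket/datasetname/2024/03/07/events.parquet
```

A query restricted to March 2024 then only needs to read objects under the `datasetname/2024/03/` prefix.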

- Encryption (keys) managed by AWS (S3)

- Encryption type of AES-256

- Encrypted server side via HTTP/S and Header containing "x-amz-server-side-encryption":"AES256"

- Enabled by default for new buckets and objects

- Encryption (KMS Customer Master Key [CMK]) managed by AWS KMS

- Encrypted server side via HTTP/S and Header containing "x-amz-server-side-encryption":"aws:kms"

- Offers further user control and audit trail via cloudtrail

- May be impacted by KMS limits, though you can increase them via Service Quotas Console

- Upload calls the GenerateDataKey KMS API (counts towards KMS quota 5500, 10000, or 30000 req/s based upon region)

- Download calls the Decrypt KMS API (also counts towards KMS quota)

- Server side encryption via HTTPS only, using a customer-provided key managed entirely outside AWS that must be provided in the HTTP headers for every HTTP request (key isn't saved by AWS)

- Objects encrypted with SSE-C are never replicated between S3 Buckets

- Utilizes a client library such as Amazon S3 Encryption Client

- Encrypted prior to sending to S3 and must be decrypted by clients when retrieving from S3 conducted over HTTP/S

- Utilizes a customer key managed entirely outside AWS

- S3 objects using SSE-C unable to be replicated between buckets

- HTTP Endpoint - non-encrypted

- HTTPS Endpoint - encrypted

- To force encryption in transit, a bucket policy is in order; the following is an HTTP GET version

-

{ "Version": "2012-10-17", "Statement": [ { "Effect":"Deny", "Principal":"*", "Action":"s3:GetObject", "Resource":"arn:aws:s3:::random-bucket-o-stuff/*", "Condition":{ "Bool":{"aws:SecureTransport":"false"} }

} ] }

-

- To force SSE-KMS encryption

-

{ "Version": "2012-10-17", "Statement": [ { "Effect":"Deny", "Principal":"*", "Action":"s3:PutObject", "Resource":"arn:aws:s3:::random-bucket-o-stuff/*", "Condition":{ "StringNotEquals":{"s3:x-amz-server-side-encryption":"aws:kms"} }

} ] }

-

- To force SSE-C encryption

-

{ "Version": "2012-10-17", "Statement": [ { "Effect":"Deny", "Principal":"*", "Action":"s3:PutObject", "Resource":"arn:aws:s3:::random-bucket-o-stuff/*", "Condition":{ "Null":{"s3:x-amz-server-side-encryption-customer-algorithm":"true"} }

} ] }

-
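The three Deny policies above share one shape and differ only in the action and condition, so they can be generated and serialized with the standard `json` module, which also guarantees the result is well-formed JSON. This is a sketch: the helper names are made up, and the SSE-KMS condition value uses `aws:kms`, matching the `x-amz-server-side-encryption` header value quoted earlier in these notes.

```python
import json


def deny_statement(bucket: str, action: str, condition: dict) -> dict:
    """One Deny statement applying to every object in the bucket."""
    return {
        "Effect": "Deny",
        "Principal": "*",
        "Action": action,
        "Resource": f"arn:aws:s3:::{bucket}/*",
        "Condition": condition,
    }


def policy(*statements: dict) -> dict:
    return {"Version": "2012-10-17", "Statement": list(statements)}


bucket = "random-bucket-o-stuff"
require_tls = deny_statement(
    bucket, "s3:GetObject", {"Bool": {"aws:SecureTransport": "false"}})
require_kms = deny_statement(
    bucket, "s3:PutObject",
    {"StringNotEquals": {"s3:x-amz-server-side-encryption": "aws:kms"}})
require_sse_c = deny_statement(
    bucket, "s3:PutObject",
    {"Null": {"s3:x-amz-server-side-encryption-customer-algorithm": "true"}})

document = json.dumps(policy(require_tls, require_kms), indent=2)
print(document)
```

Combining several statements into one policy document, as done here for TLS and SSE-KMS, is a design choice for the example; each statement could equally live in its own policy as in the notes.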

- Linux based only

- Can mount on many EC2(s)

- Use SG to control access

- Connected via ENI

- 10 GB/s+ throughput

- Performance mode (set at creation time):

- General purpose (default); latency-sensitive; use cases (web server, CMS)

- Max I/O: higher latency, higher throughput, highly parallel (big data, media processing)

- Throughput mode:

- Bursting (1 TB = 50 MiB/s and burst of up to 100 MiB/s)

- Provisioned: set your throughput regardless of storage size (eg: 1 GiB/s for 1 TB storage)

- Storage Classes, Storage Tiers (lifecycle management=>move file after N days):

- Standard: for frequently accessed files

- Infrequent access (EFS-IA): cost to retrieve files, lower price to store

- Availability and durability:

- Standard: multi-AZ, great for production

- One Zone: great for development, backup enabled by default, compatible with IA (EFS One Zone-IA)

- Volumes exist on EBS => virtual hard disk

- Snapshots exist on S3 (point in time copy of disk)

- Snapshots are incremental: only the blocks that have changed since the last snapshot are moved to S3

- First snapshot might take more time

- Best to stop root EBS device to take snapshots, though you don't have to

- Provisioned IOPS (PIOPS [io1/io2])=> DB workloads/multi-attach

- Multi-attach (EC2 =>rd/wr)=>attach the same EBS to multiple EC2 in the same AZ; up to 16 (all in the same AZ)

- Can change volume size and storage type on the fly

- Always in the same region as EC2

- To move EC2 volume=>snapshot=>AMI=>copy to destination Region/AZ=>launch AMI

- EBS snapshot archive (up to 75% cheaper to store, though 24-72 hours to restore)

- Launch 3rd party high performance file system(s) on AWS

- Can be accessed via FSx File Gateway for on-premises needs via VPN and/or Direct Connect

- Fully managed

- Accessible via ENI within Multi-AZ

- Types include:

- FSx for Windows FileServer

- FSx for Lustre

- FSx for NetApp ONTAP (NFS, SMB, iSCSI protocols); offering:

- Works with most OSs

- ONTAP or NAS

- Storage shrinks or grows

- Compression, dedupe, snapshot replication

- Point in time cloning

- FSx for OpenZFS; offering:

- Works with most OSs

- Snapshots, compression

- Point in time cloning

- Fully managed Windows file system share drive

- Supports SMB and Windows NTFS

- Microsoft Active Directory integration, ACLs, user quotas

- Can be mounted on Linux EC2 instances

- Scale up to 10s of GBps, millions of IOPs, 100s of PB of data

- Storage Options:

- SSD - latency sensitive workloads (DB, data analytics)

- HDD - broad spectrum of workloads (home directories, CMS)

- On-premises accessible (VPN and/or Direct Connect)

- Can be configured to be Multi-AZ

- Data is backed up daily to S3

- Amazon FSx File Gateway allows native access to FSx for Windows from on-premises, local cache for frequently accessed data via Gateway

- High performance, parallel, distributed file system designed for applications that require fast storage to keep up with your compute, such as ML, high performance computing, video processing, Electronic Design Automation, or financial modeling

- Integrates with linked S3 bucket(s), making it easy to process S3 objects as files and allows you to write changed data back to S3

- Provides ability to both process 'hot data' in parallel/distributed fashion as well as easily store 'cold data' to S3

- Storage options include SSD or HDD

- Can be used from on-premises servers (VPN and/or Direct Connect)

- Scratch File System can be used for temporary or burst storage use

- Persistent File System can be used for long-term storage; data is replicated within the same AZ

- A schedulable online data movement and discovery service that simplifies and accelerates data migration to AWS or moving data between on-premises storage, edge locations, other clouds and AWS storage (AWS to AWS, too)

- Deployed VM AWS DataSync Agent used to convey data from internal storage (via NFS, SMB, or HDFS protocols) to the DataSync service over the internet or AWS Direct Connect to within AWS. Agent is unnecessary for AWS to AWS

- Directly within AWS => S3/EFS/FSx for Windows File Server/FSx for Lustre/FSx for OpenZFS/FSx for NetApp ONTAP

- File permissions and metadata are preserved

- Transfers are encrypted and data validation is conducted

- Good scenarios include where timing is important such as Fraud Detection or IoT Streaming Sensors gathering readings (eg: weather)

- A lot more technical to develop/maintain

- If there is an acceptable latency, run the batch load job(s) every n seconds/minutes/hours/days/weeks/etc.

graph LR

A[S3]-->B[AWS Glue Data Catalog]

B---|Schema|C[Athena]

A-->E["Redshift /(Redshift Spectrum)"]

A-->D["EMR (Hadoop/Spark/Hive)"]

E-->C

E-->F[QuickSight]

C-->F

- Data sources can be on-prem or AWS

- Destinations: S3, RDS, DynamoDB, Redshift, EMR

- Conducted with EC2 or EMR instances managed by DP

- Manages task dependencies

- Retries and notifies upon failure(s)

- HA

- Glue:

- focused on ETL

- resources all managed by AWS

- Data Catalog is there to make the data available to Athena or Redshift Spectrum

- Serverless (jobs run on Apache Spark; Scala or Python based)

- DP:

- Move data from one location to another

- More control over environment, compute resources that run code and the code itself

- EC2 or EMR instance based

- Serverless backend capable of supporting container image (must implement λ Runtime API)

- Free tier => 1,000,000 requests and 400,000 GB-seconds of compute time

- Pay $0.20 per 1,000,000 requests after the free threshold

- Pay per duration of memory (in increments of 1 ms) after the free threshold ($1.00 for 600,000 GB-seconds)

- Up to 10 GB of RAM, minimum 128 MB

- More RAM improves CPU and network capabilities

- Environment variables (< 4KB)

- Does not have out-of-the box caching

- Regionally based

- Disk capacity in function container (/tmp) 512 MB to 10 GB

- Can use /tmp to load other files at startup

- Concurrent executions: 1000, but can be increased

- Deployment size: uncompressed 250 MB, compressed 50 MB

- Can run via CloudFront as CloudFront functions and λ@edge

- Can create λ layers (up to 5) to reuse code, making deployment smaller

- Timeout is the maximum amount of time in seconds a λ can run (default 3). This can be adjusted from 1 to 900 seconds (15 minutes). A low timeout value has increased risk of unexpected timeout due to external service lag, downloads or long(er) computational executions.
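The pricing bullets above can be combined into a rough monthly estimate. This is only a sketch using the figures quoted in these notes (free tier of 1,000,000 requests and 400,000 GB-seconds, $0.20 per million requests, $1.00 per 600,000 GB-seconds); real pricing varies by Region and architecture, and the function name is hypothetical.

```python
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 1.00 / 600_000


def monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate the monthly bill in dollars, after subtracting the free tier."""
    # Compute usage is duration (seconds) times configured memory (GB).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, invocations - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = billable_gb_seconds * PRICE_PER_GB_SECOND
    return round(request_cost + compute_cost, 2)


# 5M invocations at 200 ms and 512 MB: 500,000 GB-seconds, 100,000 of them billable.
print(monthly_cost(5_000_000, 200, 512))  # 0.97
```

Because memory is part of the GB-second product, doubling the memory setting doubles the compute charge unless the extra CPU shortens the run time accordingly.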

- Platform to send stream data (eg: IoT, metrics and logs) making it easy to load and analyze as well as provide the ability to build your own custom applications for your business needs

- Whenever a question mentions "streaming (system[s])" and/or "real time" (big) data, Kinesis is likely the best fit, as it makes it easy to collect, process, and analyze real-time streaming data so you can react quickly to incoming information.

- Output can be classic or enhanced fan-out consumers

- Accessed via VPC

- IAM access => Identity-based (used by users and/or groups)

- Types:

- Kinesis Data Streams

- Amazon Data Firehose (formerly Kinesis Data Firehose)

- Kinesis Analytics

- Kinesis Video Streams

- Service to provide low latency, real-time streaming ingestion

- On-demand capacity mode

- Default capacity of 4 MB/s input, 8 MB/s output

- Scales automatically to accommodate up to double its previous peak write throughput observed in the last 30 days

- Pay per stream per hour and per GB of data in/out

- Provisioned mode (if throughput exceeded exception => add shard[s] manually or programmatically)

- Streams are divided into ordered shards

- 1 MB/s or 1,000 records/s input per shard, else 'ProvisionedThroughputExceededException'

- 2 MB/s output per shard

- Pay per shard per hour

- Can have up to 5 parallel consumers (5 consuming api calls per second [per shard])

- Synchronously replicate streaming data across 3 AZ in a single Region and store between 24 hours and 365 days in shard(s) to be consumed/processed/replayed by another service and stored elsewhere

- Use fan-out if lag is encountered by stream consumers (~200ms vs ~70ms latency)

- Requires code (producer/consumer)

- Shards can be split or merged

- Shard count = ceil(max(incoming_write_bandwidth_in_KB/1000, outgoing_read_bandwidth_in_KB/2000))

- 1 MB message size limit

- TLS in flight or KMS at-rest encryption

- Can't subscribe to SNS

- Can't write directly to S3

- Can output to:

- Amazon Data Firehose

- Amazon Managed Service for Apache Flink

- Containers

- λ

- AWS Glue
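The shard count formula from the provisioned-mode notes above translates directly into code. A minimal sketch, with a hypothetical function name and made-up example bandwidths:

```python
import math


def shard_count(incoming_write_kb_per_s: float, outgoing_read_kb_per_s: float) -> int:
    """ceil(max(write_KB/1000, read_KB/2000)): 1 MB/s in and 2 MB/s out per shard."""
    return math.ceil(max(incoming_write_kb_per_s / 1000,
                         outgoing_read_kb_per_s / 2000))


# Writes need 3.5 shards and reads need 2.5, so writes are the bottleneck.
print(shard_count(3500, 5000))  # 4
# Here reads dominate: 6000 / 2000 = 3 shards.
print(shard_count(1000, 6000))  # 3
```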

- Fully Managed (serverless; scales automatically)

- AWS Lambda can be a destination as well

- Allows lots of flexibility for post-processing

- Aggregating rows

- Translating to different formats

- Transforming and enriching data

- Encryption

- Opens up access to other services & destinations

- S3, DynamoDB, Aurora, Redshift, SNS, SQS, CloudWatch

- Perform real-time analytics on stream via SQL

- Can utilize λ for preprocessing (near real-time)

- Input stream can be joined with a ref table in S3

- Output results include streams/errors

- Can use either Kinesis Data Streams or Amazon Data Firehose to analyze data in kinesis

- Pay only for resources used, though that can end up not being cheap

- Schema discovery

- IAM permissions to access input(s)/output(s)

- For SQL Applications: Input/Output: Kinesis Data Streams or Amazon Data Firehose to analyze data

- Managed Service for Apache Flink (MSAF) [formerly Kinesis Data Analytics for Apache Flink or for Java (on a cluster)]:

- Replaced Kinesis Data Analytics

- Input: Kinesis Data Stream or Amazon MSK

- Output: Sink (S3/Amazon Data Firehose/Kinesis Data Stream), data analysis via data analytics app, or query data stream via queries (studio notebook)

- If output is S3, S3 Select available to query the output object(s) data

- Managed Apache Flink provides an Apache Flink Dashboard

- Managed Apache Flink resultant deployment by Cloud Formation contains a Flink Application (*.jar file based) and the source (Kinesis Data Stream)

- Flink under the hood

- Now supports Python and Scala

- Flink is a framework for processing data streams

- MSAF integrates Flink with AWS

- Instead of using SQL, you can develop your own Flink application from scratch and load it into MSAF via S3

- In addition to the DataStream API, there is a Table API for SQL access

- Serverless

- Use cases:

- Streaming ETL (simple selections/translations)

- Continuous metric generation (eg: leaderboard)

- Responsive analytics to generate alerts when certain metrics encountered

- ML use cases:

- Random Cut Forest:

- SQL function for anomaly detection on numeric columns in a stream

- uses only recent history to generate model

- HOTSPOTS:

- locate and return info about relatively dense regions of data

- uses more than only recent history


Example MSAF Flow

graph LR

A[Amazon Kinesis Data Streams] -->|Flink Sources to Flink Application DataStream API| C(Managed Service For Apache Flink)

B[Amazon Managed Streaming for Apache Kafka] -->|Flink Sources to Flink Application DataStream API| C

C -->|Flink Application DataStream API to Flink Sinks| D[S3]

C -->|Flink Application DataStream API to Flink Sinks| E[Amazon Kinesis Data Streams]

C -->|Flink Application DataStream API to Flink Sinks| F[Amazon Data Firehose]

- Fully Managed (serverless) service, no administration, automatic scaling

- Allows for custom code to be written for producer/consumer

- Can use λ to filter/transform data prior to output (better to use Firehose than Kinesis Data Streams when filtering/transforming with a λ on the way to S3)

- Near real time: 60 seconds latency minimum for non-full batches

- Minimum 1 MB of data at a time

- Pay only for the data going through

- Can subscribe to SNS

- No data persistence and must be immediately consumed/processed

- Sent to (S3 as a backup [of source records] or failed [transformations or delivery] case[s]):

- S3

- Amazon Redshift (copy through S3)

- Amazon OpenSearch Service (formerly Amazon Elasticsearch Service)

- 3rd party partners (datadog/splunk/etc.)

- Custom destination (http[s] endpoint)

- S3 Destination(s) (Error and/or output) allow for bucket prefixes:

- output/year=!{timestamp:yyyy}/month=!{timestamp:MM}/

- error/!{firehose:random-string}/!{firehose:error-output-type}/!{timestamp:yyyy/MM/dd}/

- Data Conversion from csv/json to Parquet/ORC using AWS Glue (only for S3)

- Data Transformation through λ (eg: csv=>json)

- Supports compression if target is S3 (GZIP/ZIP/SNAPPY)

- Producers

- used to capture, process and store video streams in real-time such as smartphone/security/body camera(s), AWS DeepLens, audio feeds, images, RADAR data; RTSP camera.

- One producer per video stream

- Video playback capability

- Consumers

- custom build server (MXNet, Tensorflow, etc.)

- This may pass on the data to db (checkpoint stream per processing status), decode the input frames and pass onto SageMaker, or even inference results to Kinesis Streams=>λ for downstream notifications

- EC2 instances

- AWS SageMaker

- Amazon Rekognition Video

- retention between 1 hr to 10 years

- An IoT Standard messaging protocol

- Sensor data transferred to your model

- The AWS IoT Device SDK can connect via MQTT

- Handle ML-specific data using map reduce (Hadoop, Spark, Hive)

- Transforming data transit (ETL: Glue, EMR, AWS Batch)

- A visual workflow service that helps developers use AWS services with λ to build distributed applications, automate processes, orchestrate micro services, or create data (ML) pipelines

- JSON used to declare state machines under the hood

- Advanced Error Handling and retry mechanism outside the code

- Audit history of workflows is available

- Able to "wait" for any length of time, though the max execution time of a state machine is 1 year

- Great for orchestrating and tracking an ordered flow of resources
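
A rough sketch of what "JSON under the hood" with retries declared outside the code can look like. The state names and λ ARNs are made up for illustration; the field names (`StartAt`, `States`, `Retry`, `Wait`, etc.) come from the Amazon States Language. It only builds and prints the definition and does not call AWS.

```python
import json

# Hypothetical three-step pipeline; the ARNs are placeholders, not real resources.
state_machine = {
    "Comment": "Illustrative ML pipeline (all names assumed)",
    "StartAt": "PrepareData",
    "States": {
        "PrepareData": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:us-east-1:123456789012:function:prepare-data",
            # Retry policy lives in the definition, not in the function's code.
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 5,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }
            ],
            "Next": "WaitForData",
        },
        # A Wait state pauses the workflow without any compute running.
        "WaitForData": {"Type": "Wait", "Seconds": 60, "Next": "TrainModel"},
        "TrainModel": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:us-east-1:123456789012:function:train-model",
            "End": True,
        },
    },
}

definition = json.dumps(state_machine, indent=2)
print(definition)
```

The printed JSON is the kind of document that would be supplied as the state machine definition.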

- Service that creates managed Hadoop framework clusters (Big Data) to analyze/process large amounts of data using (many) instances

- Supports Apache Spark, HBase, Presto, Flink, Hive, etc.

- Takes care of provisioning and configuration

- Autoscaling and integrated with Spot Instances for cost savings

- Use cases: Data processing, ML, Web Indexing, BigData

- Can have long-running cluster or transient (temporary) cluster

- At installation of the cluster you need to select frameworks and applications to install

- Connect to the master node through an EC2 instance and run jobs from the terminal or via ordered steps submitted via the console

- Master Node:

- single EC2 instance to manage the cluster

- coordinates distribution of data and tasks

- manages cluster health (long-running process)

- Core Node:

- Hosts HDFS data and runs tasks (long-running process)

- can spin up/down as needed

- Task Node (optional):

- only runs tasks; Spot Instances are usually the best option

- no hosted data, so no risk of data loss upon removal

- can spin up/down as needed

- On-demand: reliable, predictable, won't be terminated, good for long running cluster(s) [though you need to manually delete]

- Reserved: cost savings (EMR will use if available), good for long running cluster(s) [though you need to manually delete]

- Spot instances:

- cheaper, can be terminated, less reliable

- Good choice for task nodes (temporary capacity)

- Only use on core & master if you're testing or very cost-sensitive; you're risking partial data loss

- Master node:

- m4.large if < 50 nodes, m4.xlarge if > 50 nodes

- Core & task nodes:

- m4.large is usually good

- If cluster waits a lot on external dependencies (i.e. a web crawler), t2.medium

- Improved performance: m4.xlarge

- Computation-intensive applications: high CPU instances

- Database, memory-caching applications: high memory instances

- Network / CPU-intensive (NLP, ML) - cluster compute instances

- Accelerated Computing / AI - GPU instances (g3, g4, p2, p3)

- EMR charges by the hour

- Plus EC2 charges

- Provisions new nodes if a core node fails

- Can add and remove task nodes on the fly

- Can resize a running cluster's core nodes

- Amazon EC2 for the instances that comprise the nodes in the cluster

- Amazon VPC to configure the virtual network in which you launch your instances

- Amazon S3 to store input and output data or HDFS (default)

- Amazon CloudWatch to monitor cluster performance and configure alarms

- AWS IAM to configure permissions

- AWS CloudTrail to audit requests made to the service

- AWS Data Pipeline to schedule and start your clusters

- HDFS (distributed scalable file system for Hadoop)

- distributes data that it stores across every instance in a cluster, as well as multiple copies of data on different instances to ensure no data is lost if instance(s) fail

- each file stored as blocks

- default block size is 128 MB

- storage is ephemeral and is lost upon termination

- performance benefit of processing data where stored to avoid latency

- EBS serves as a backup for HDFS

- EMRFS: access S3 as if it were HDFS

- EMRFS Consistent View - Optional for S3 consistency

- Uses DynamoDB to track consistency

- Local file system

- Similar concept to Zeppelin, with more AWS integration

- Notebooks backed up to S3 only (not within the cluster)

- Provision clusters from the notebook!

- Able to use multiple Notebooks to share the multi-tenant EMR clusters

- Hosted inside a VPC

- Accessed only via AWS console

- build Apache Spark Apps and run queries against the cluster (python, pyspark, spark sql, spark r, scala, and/or anaconda based open source graphical libs)

- EMR supports Apache MXNet and GPU instance types

- Appropriate instance types for deep learning:

- P3: 8 Tesla V100 GPUs

- P2: 16 K80 GPUs

- G3: 4 M60 GPUs (all Nvidia chips)

- G5g : AWS Graviton 2 processors / Nvidia T4G Tensor Core GPUs

- Not (yet) available in EMR

- Also used for Android game streaming

- P4d - A100 "UltraClusters" for supercomputing

- Deep Learning AMIs

- Sagemaker can deploy a cluster using whatever architecture you want

- Trn1 instances

- "Powered by Trainium"

- Optimized for training NN/LLM (50% savings)

- 800 Gbps of Elastic Fabric Adapter (EFA) networking for fast clusters

- Trn1n instances

- Even more bandwidth (1600 Gbps)

- Inf2 instances

- "Powered by AWS Inferentia2"

- Optimized for inference

- IAM policies: can be combined with tagging to control access on a cluster-by-cluster basis

- Kerberos

- SSH can use kerberos or EC2 key pairs for client authentication

- IAM roles:

- Every cluster in EMR must have a service role and a role for EC2 instance profile(s). These roles, attached via policies, will provide permission(s) to interact with other AWS Services

- If a cluster uses automatic scaling, an autoscaling role is necessary

- Service linked roles can be used if service for EMR has lost ability to clean up EC2 resources

- IAM roles can also be used for EMRFS requests to S3 to control user access to files within EMR based on users, groups, or location(s) within S3

- Security configurations may be specified for Lake Formation (JSON)

- Native integration with Apache Ranger to provide security for Hive data metastore and Hive instance(s) on EMR

- For data security on Hadoop/Hive

- Within EMR, select Create studio instance, which is your environment for running workspaces/notebooks

- Requires:

- VPC access

- 1-5 subnets

- SG(s)

- Service role (IAM/IAM Identity Center)

- S3 bucket

- Within the studio instance, create workspaces. The workspace will need to create/attach an EMR cluster

- A notebook must select a kernel at initialization (relative to the tech stack used)

- Good practice: delete your cluster when not in use so it isn't billed; it is also good to have a safeguard that shuts the cluster down automatically to avoid paying for it

- Managed ETL service (fully serverless) used to prepare/transform data for analysis

- Unlike λ, not bound by a 15 minute execution limit despite being serverless; the job timeout is configurable (default 48 hours for batch jobs)

- Utilizes Python (PySpark) or Scala (Spark) scripts, but runs on serverless Spark platform

- Targets: S3, JDBC (RDS, Redshift), or Glue Data Catalog

- Jobs scheduled via Glue Scheduler

- Jobs triggered by events=>Glue Triggers

- Transformations:

- Bundled Transformations

- DropFields/DropNullFields

- Filter records

- Join data to make more interesting data

- Map/Reduce

- ML Transformations

- FindMatches ML: identify duplicate or matching data, even when the records lack a common unique identifier, and no fields exactly match

- K-Means

- Format conversions: CSV, JSON, Avro, Parquet, ORC, XML

- Need an IAM role / credentials to access the TO/FROM data stores

- Bundled Transformations

- Can be event driven (eg: λ triggered by S3 put object) to call Glue ETL

- Glue Data Catalog:

- Uses an AWS Glue Data Crawler scanning DBs/S3/data to write associated metadata utilized by Glue ETL, or data discovery on Athena, Redshift Spectrum or EMR

- Can issue crawlers throughout a DP to be able to know what data is where in the flow

- Metadata repo for all tables with versioned schemas and automated schema inference

- Glue Crawlers go through your data to infer schemas and partitions (s3 based on organization [see S3 Data Partitioning])

- formats supported: JSON, Parquet, CSV, relational store

- Crawlers work for: S3, Amazon Redshift, Amazon RDS

- Can be scheduled or On-Demand

- Need an IAM role / credentials to access the data store(s)

- Glue Job bookmarks prevent reprocessing old data

- Glue Studio: new GUI to create, run, and monitor ETL jobs in Glue

- Glue Streaming ETL (built on Apache Spark Structured Streaming): compatible with Kinesis Data Streams, Kafka, MSK

- Glue Elastic Views:

- Combine and replicate data across multiple data stores using SQL (View)

- No custom code, Glue monitors for changes in the source data, serverless

- Leverages a "virtual table" (materialized view)

- Postgres, Redshift, SqlServer, Oracle, MySql (JDBC datastores)

- dynamodb

- mongodb/documentdb

- Kinesis Data Streams

- Kafka/Amazon Managed Streaming for Apache Kafka

- Athena

- Spark

- S3

- Files (Orc, Parquet)

- Allows you to clean, normalize, and translate data without writing any code (ETL)

- Reduces ML and analytics data preparation time by up to 80%

- 250+ ready-made transformations to automate tasks

- Filtering anomalies, data conversion, correct invalid values...

- Needs access to input via IAM role

- Can conduct statistics on data for the sake of analysis

- Can find (and remove) missing, invalid, duplicate, and outlier data

- All conducted transformations are recorded into a recipe, from which a glue job might be created

- Inputs:

- File upload

- Data lake/data store

- Redshift

- Aurora

- JDBC

- AWS Glue Data Catalog

- Amazon Appflow

- AWS Data Exchange

- Snowflake

- Fully managed (serverless) batch processing at any scale using dynamically launched EC2 instances (spot or on-demand) managed by AWS for which you pay

- Job with a start and an end (not continuous)

- Can run 100,000s of computing batch jobs

- You submit/schedule batch jobs and AWS Batch handles it

- Can be scheduled using CloudWatch Events

- Can also be orchestrated using step functions

- Provisions optimal amount/type of compute/memory based on volume and requirements

- Batch jobs are defined as docker images and run on ECS

- Helpful for cost optimization and focusing less on infrastructure

- No time limit

- Any run time packaged in docker image

- Rely on EBS/instance store for disk space

- Advantages over λ: no time limit, any runtime (via docker), and more disk space, whereas λ has a time limit, limited runtimes, and limited disk space

- Good for any compute based job (must harness docker) and for any non-ETL based work, batch is likely best (eg: periodically cleaning up s3 buckets)

- Using spot instances to train deep learning models

- Normal distribution: bell curve centered around the mean (around 0 for the standard normal distribution)

- Probability density function: gives the probability of a data point falling within a given range of values (on a curve) with infinite possibilities (non-discrete)

- Poisson distribution: number of discrete events (successes) occurring in a fixed period of time, where the average number of successes over that period (λ) is known (count distribution). As the λ value approaches 0, the distribution looks exponential

- Probability mass function: gives the probability of each possible value for discrete data (eg: the bars of a histogram, rather than a continuous curve)

- Binomial Distribution: number of successes dealing with a binary result; discrete trials (n)

- Bernoulli Distribution:

- special binomial distribution

- single trial (n=1)

- Sum of Bernoulli trials=>binomial
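
A small check of the "sum of Bernoulli trials gives a binomial" note, using only the standard library. The function names and the values n=5, p=0.3 are my own picks for illustration; one function builds the distribution of the sum trial by trial, the other uses the closed-form binomial PMF, and the two columns should agree.

```python
from math import comb

def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials, each succeeding with probability p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def sum_of_bernoullis_pmf(n, p):
    """PMF of the sum of n independent Bernoulli(p) trials, built one trial at a time."""
    pmf = [1.0]  # with zero trials the sum is 0 with probability 1
    for _ in range(n):
        nxt = [0.0] * (len(pmf) + 1)
        for successes, prob in enumerate(pmf):
            nxt[successes] += prob * (1 - p)  # this trial fails
            nxt[successes + 1] += prob * p    # this trial succeeds
        pmf = nxt
    return pmf

n, p = 5, 0.3
from_trials = sum_of_bernoullis_pmf(n, p)
from_formula = [binomial_pmf(k, n, p) for k in range(n + 1)]
for k in range(n + 1):
    print(k, round(from_trials[k], 6), round(from_formula[k], 6))
```

With n=1 the binomial PMF collapses to the Bernoulli case: `binomial_pmf(1, 1, p)` is just p.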

- Numerical - quantitative measurement(s)

- Categorical

- yes/no, categories

- can assign numbers to categories in order to represent them, though the numbers don't possess any real meaning

- Ordinal: mix of numerical and categorical types (eg scale of 1 to 5), where 1 is worse than 2 and so forth with 5 being best

- Pandas:

- used for slicing and mapping data (DataFrames, Series) and interoperates with numpy

- Dataframe/Series are interchangeable with numpy arrays, though the former is often converted to the latter to feed ML algorithms

- Matplotlib (graphics might be good?)

- boxplot (with whiskers)

- histograms (binning: bins of results of similar measure)

- Seaborn (graphics might be good?)

- essentially Matplotlib extended

- heatmap: demonstrates another dimension within the given plot axes

- pairplot: good for attribute correlations

- jointplot: scatterplot with histograms adjoining each axis

- scikit-learn

- toolkit for/to make ML models

- X=>attributes

- y=>labels

- X and y are utilized in conjunction with the fit function to train the model(s)

- predict function harnesses the model to output inferences based on input

- good for preprocessing data (eg: StandardScaler rescales each column to zero mean and unit variance)

- to avoid unequal weightings, center and scale each column around its mean; if the columns' scales are vastly different, use MinMaxScaler to map values to between 0 and 1

- Metrics:

- mutual_info_regression: metric used for ranking features when the target is continuous (display in ranked order)

- mutual_info_classif: metric used for ranking features when the target is discrete (display in ranked order)
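
To make the scaling notes above concrete, here is a hand-rolled sketch (standard library only) of the per-column arithmetic that scikit-learn's StandardScaler and MinMaxScaler perform. The function names and the sample columns are my own; in practice you would use the scikit-learn classes directly.

```python
from statistics import mean, pstdev

def standardize(column):
    """Rescale to zero mean and unit variance (population standard deviation)."""
    mu, sigma = mean(column), pstdev(column)
    return [(x - mu) / sigma for x in column]

def min_max_scale(column):
    """Rescale linearly so the smallest value maps to 0 and the largest to 1."""
    lo, hi = min(column), max(column)
    return [(x - lo) / (hi - lo) for x in column]

ages = [20, 30, 40, 50, 60]                              # hypothetical column
incomes = [20_000, 40_000, 60_000, 80_000, 1_000_000]    # very different scale

print([round(v, 3) for v in standardize(ages)])
print(min_max_scale(ages))
print([round(v, 3) for v in min_max_scale(incomes)])
```

After either transform, `ages` and `incomes` live on comparable scales, so neither column dominates a distance or gradient calculation purely because of its units.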

- Horovod: distributed deep learning framework for TensorFlow, Keras, PyTorch, and Apache MXNet

- Spark MLLib (see Apache Spark on EMR)

- Hadoop consists largely of HDFS, YARN and Map Reduce

- Hadoop Core or Hadoop - Common java archive (JAR) files/scripts used to boot Hadoop

- YARN used to centrally manage cluster resources for different frameworks

- Spark (faster alternative to Map Reduce)

- Can be included within SageMaker

- In memory cache

- Optimized query execution on data of any size using directed acyclic graph adding efficiencies concerning dependencies/processing/scheduling

- Java, Scala, Python, R apis available

- Not used for OLTP or batch processing

- Upon connecting acquires executors on nodes in the cluster that run computations and store data for your applications. Code is sent to the executors and Spark context sends tasks to the executors to run

- Foundation of the platform (memory management, fault recovery, scheduling, distributing, monitoring jobs and interactions with persistence stores)

- Uses Resilient Distributed Dataset (RDD) at lowest level, representing a logical collection of data partitioned across nodes

- Up to 100x faster than Map Reduce including cost based optimizer, columnar storage and code generation for fast queries, JDBC/ODBC, JSON, HDFS, Hive, Orc, Parquet, or Hive Tables via HIVEQL

- Exposes dataframe (python)/dataset (Scala) taking the place of RDD where input (SQL) to the spark cluster and transforms the initial query to a distributed query across the cluster

- Data is ingested in mini batches, and the analytics code is the same as for batch analytics, so one application codebase serves both batch and streaming

- Inputs include twitter, Kafka, Flume, HDFS, ZeroMQ, AWS Kinesis

- Able to query unbounded table within windows of time, much like a database

- Spark is able to query from Kinesis Data Streams via the Kinesis Client Library (KCL)

- Distributed graph processing framework providing ETL, exploratory analysis, iterative graph computation to enable the building/transformation of graph data structures at scale

- Distributed and scalable via cluster nodes offering the following:

- Classification: logistic regression, naive Bayes

- Regression

- Decision trees

- Recommendation engine (ALS)

- Clustering (K-Means)

- LDA (topic modeling), extract topics from text input(s)

- ML workflow utilities (pipelines, feature transformation, persistence)

- SVD, PCA, statistics

- ALS (Alternating Least Squares)

graph LR

A[Driver Program - Spark Context] --> B[Cluster Manager]

A --> E[Executor - Cache - Tasks2]

B --> D[Executor - Cache - Tasks1]

A --> D

B --> E

- Can run Spark code interactively (like you can in the Spark shell) via browser (Notebook)

- Speeds up your development cycle

- Allows easy experimentation and exploration of your big data

- Can execute SQL queries directly against SparkSQL

- Query results may be visualized in charts and graphs

- Makes Spark feel more like a data science tool!

- runs in browser to communicate with the python environment (eg: anaconda) server

from sklearn import preprocessing

scaler = preprocessing.StandardScaler()

new_data = scaler.fit_transform(input)

- import data

- head()

- Does the data have column names?

- Identify and handle missing data, corrupt data, stop words, etc.

- Labeled data (recognizing when you have enough labeled data and identifying mitigation strategies [Data labeling tools (Mechanical Turk, manual labor)])

- Do certain rows have attributes of the wrong data type or NA values?

- Can potentially drop rows (*.dropna(inplace=True)), though this might introduce bias if the missing values aren't evenly distributed

- describe()=> are counts of all the columns equal?

- Formatting, normalizing, augmenting, and scaling data

- If remapping the data, it is a good idea to check the mean/std of attributes from/to via describe()

- To convert to a numpy array=>*.values (an attribute, not a method) or *.to_numpy()

- Replace missing values with the mean value from the rest of the column (single feature)

- Fast & easy, won't affect mean or sample size of overall data set

- Median may be a better choice than mean when outliers are present

- But it's generally pretty terrible.

- Only works on column level, misses correlations between features

- Can't use on categorical features (imputing with the most frequent value can work in this case, though)

- Not very accurate
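
A minimal sketch of mean vs. median imputation on a single column, standard library only. The `impute` helper and the income figures are made up for illustration; the 400 is a deliberate outlier to show why the median can be the safer fill value.

```python
from statistics import mean, median

def impute(column, strategy=mean):
    """Replace None entries with a statistic computed from the observed values."""
    observed = [x for x in column if x is not None]
    fill = strategy(observed)
    return [fill if x is None else x for x in column]

incomes = [40, 42, None, 45, 48, None, 400]  # hypothetical data; 400 is an outlier

mean_filled = impute(incomes, mean)      # gaps filled with 115
median_filled = impute(incomes, median)  # gaps filled with 45

print(mean_filled)
print(median_filled)
print(mean(mean_filled))  # the column mean is unchanged by mean imputation
```

Note that the fill value only looks at this one column, which is exactly the "misses correlations between features" weakness listed above.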

- If not many rows contain missing data

- dropping those rows doesn't bias your data

- you don't have a lot of time

- Will never be the right answer for the "best" approach.

- Almost anything is better. Can you substitute another similar field perhaps? (i.e., review summary vs. full text)

- Assumes numerical data, not categorical

- There are ways to handle categorical data (Hamming distance)

- KNN is a good method to produce decent imputation results for missing data
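
A bare-bones sketch of KNN imputation for numerical data, standard library only. The `knn_impute` helper, k=2, and the toy rows are assumptions for illustration; it fills a missing value with the mean of that column over the k most similar complete rows, measuring similarity on the other columns with squared Euclidean distance.

```python
def knn_impute(rows, target_col, k=2):
    """Fill None in target_col using the mean over the k nearest complete rows."""
    complete = [r for r in rows if r[target_col] is not None]
    filled = []
    for row in rows:
        if row[target_col] is not None:
            filled.append(list(row))
            continue

        def distance(other, row=row):
            # Squared Euclidean distance over every column except the missing one.
            return sum(
                (a - b) ** 2
                for i, (a, b) in enumerate(zip(row, other))
                if i != target_col
            )

        nearest = sorted(complete, key=distance)[:k]
        new_row = list(row)
        new_row[target_col] = sum(r[target_col] for r in nearest) / len(nearest)
        filled.append(new_row)
    return filled

rows = [
    [1.0, 1.0, 10.0],
    [1.1, 0.9, 12.0],
    [5.0, 5.0, 50.0],
    [5.2, 4.8, 54.0],
    [1.05, 1.0, None],  # missing value to impute
]
result = knn_impute(rows, target_col=2)
print(result[4])
```

Unlike the column mean, the filled value here depends on which rows the incomplete row resembles, so it picks up relationships between features.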

- Build a machine learning model to impute data for your other machine learning model(s)!

- Works well for categorical data, though complicated.

- One of the better methods for missing data to produce decent results

- Find linear or non-linear relationships between the missing feature and other features

- Most advanced technique: MICE (Multiple Imputation by Chained Equations) - finds relationships between features and one of the better imputation methods for missing data

- What's better than imputing data? Getting more real data!

- Large discrepancy between "positive" and "negative" cases

- i.e., fraud detection. Fraud is rare, and most rows will be not-fraud

- "positive" doesn't mean "good" it means the thing you're testing for happened.

- If your machine learning model is made to detect fraud, then fraud is the positive case.

- Mainly a problem with neural networks

- Duplicate samples from the minority class

- Can be done at random

- Instead of creating more positive samples, remove negative ones

- Throwing data away is usually not the right answer

- Unless you are specifically trying to avoid "big data" scaling issues

- Artificially generate new samples of the minority class using nearest neighbors

- Run K-nearest-neighbors of each sample of the minority class

- Create a new sample from the KNN result (mean of the neighbors)

- Both generates new samples and undersamples majority class

- Generally better than just oversampling
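
A rough sketch of the sample-generation half of SMOTE, standard library only. Assumptions to flag: the helper name, k=2, and the toy points are mine, and instead of taking the mean of the neighbors it picks a random point on the line between a minority sample and one of its k nearest minority neighbors, which is how SMOTE is usually described. It does not touch the majority class.

```python
import random

def smote_sketch(minority, k=2, n_new=4, seed=0):
    """Create synthetic minority samples by interpolating toward near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the chosen sample (excluding itself)
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        # random point on the segment between the sample and that neighbour
        t = rng.random()
        synthetic.append(tuple(a + t * (b - a) for a, b in zip(base, neighbour)))
    return synthetic

fraud = [(1.0, 2.0), (1.5, 1.8), (1.2, 2.4), (0.9, 2.1)]  # hypothetical minority class
new_points = smote_sketch(fraud)
for point in new_points:
    print(point)
```

Because every synthetic point lies between two real minority samples, the new data stays inside the region the minority class already occupies rather than duplicating rows exactly.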

- When making predictions about a classification (fraud / not fraud), you have some sort of threshold of probability at which point you'll flag something as the positive case (fraud)

- If you have too many false positives, one way to fix that is to simply increase that threshold.

- Reduces false positives, but could result in more false negatives
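
A tiny worked example of the threshold trade-off, standard library only. The scores and labels are invented (1 = fraud, the positive case); the helper counts false positives and false negatives at a given threshold.

```python
def confusion_counts(scores, labels, threshold):
    """Count false positives and false negatives when flagging scores >= threshold."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return fp, fn

# Hypothetical model outputs (probability of fraud) and true labels.
scores = [0.10, 0.35, 0.40, 0.55, 0.60, 0.65, 0.80, 0.95]
labels = [0, 0, 1, 0, 1, 0, 1, 1]

for threshold in (0.3, 0.5, 0.7):
    fp, fn = confusion_counts(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

Raising the threshold from 0.3 to 0.7 takes false positives from 3 down to 0 while false negatives climb from 0 to 2, which is the trade-off described above.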

- Applying your knowledge of the data - and the model you're using - to identify and extract useful features to train your model with.

- Analyze/evaluate feature engineering concepts (binning, tokenization, outliers, synthetic features, 1 hot encoding, reducing dimensionality of data) to understand what features one should use

- Do I need to transform these features in some way?

- How do I handle missing data?

- Should I create new features from the existing ones?

- You can't just throw in raw data and expect good results

- Expertise in the applied field helps

- Trim down feature data or create and combine new ones

- Normalize or encode data

- Handle missing data

- Too many features can be a problem - leads to sparse data

- Every feature is a new dimension

- Much of feature engineering is selecting the features most relevant to the problem at hand

- This often is where domain knowledge comes into play

- Unsupervised dimensionality reduction techniques can also be employed to distill many features into fewer features

- PCA

- K-Means

- Discrete samples taken over a period of time

- trends

- slope slant

- can be seasonal, can superimpose curves vs the trends to decipher if a pattern is exhibited in variations (eg: month to month)

- can both be seasonal and have an overall trend, too. To get the overall trend, subtract out noise and seasonality

- Noise: random variations

- Additive model

- Seasonal variation is constant => seasonality + trends + noise = TS model

- Multiplicative model

- seasonal variation increases as the trend increases => seasonality * trends * noise = TS model
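
A small sketch contrasting the two models on synthetic monthly data, standard library only. The trend, the sine-wave seasonality, and the 10 unit / 10% swing sizes are all made up, and noise is left out so the numbers are reproducible.

```python
import math

months = range(36)
trend = [100 + 5 * t for t in months]                      # steadily rising level
season = [math.sin(2 * math.pi * t / 12) for t in months]  # repeats every 12 months

# Additive: the seasonal swing has a fixed size (here +/- 10 units).
additive = [tr + 10 * s for tr, s in zip(trend, season)]
# Multiplicative: the seasonal swing is a fixed fraction (here +/- 10%) of the trend.
multiplicative = [tr * (1 + 0.1 * s) for tr, s in zip(trend, season)]

def seasonal_swing(series, year):
    """Peak-to-trough size of (series - trend) within one 12-month window."""
    window = range(12 * year, 12 * (year + 1))
    detrended = [series[t] - trend[t] for t in window]
    return max(detrended) - min(detrended)

for year in range(3):
    print(
        year,
        round(seasonal_swing(additive, year), 2),
        round(seasonal_swing(multiplicative, year), 2),
    )
```

The additive column stays flat year over year while the multiplicative column grows with the trend, which is the visual cue for choosing between the two models.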

- Important data for search - figures out what terms are most relevant for a document

- Compute TF-IDF for every word/n-gram in a corpus

- For a given search word/n-gram, sort the documents by their TF-IDF score accordingly

- Display the results

- Term Frequency just measures how often a word occurs in a document (its relevancy to that document)

- Document Frequency is how often a word occurs in an entire set of documents, i.e., all of Wikipedia or every web page (predicts common words)

- Equal to: Term Frequency/Document Frequency or Term Frequency * Inverse Document Frequency

- We actually use the log of the TF-IDF, since word frequencies are distributed exponentially. That gives us a better weighting of a word's overall popularity

- TF-IDF assumes a document is a "bag of words"

- Parsing documents into a bag of words can be most of the work

- Words can be represented as a hash value (number) for efficiency

- What about synonyms? Various tenses? Abbreviations? Capitalizations? Misspellings?

- Doing this at scale is the hard part (Spark can help here)

- An extension of TF-IDF is to not only compute relevancy for individual words (terms) but also for bi-grams or, more generally, n-grams.

- "I love certification exams"

- Unigrams: "I", "love", "certification", "exams"

- Bi-grams: "I love", "love certification", "certification exams"

- Tri-grams: "I love certification", "love certification exams"

- The TF-IDF matrix will consist of the documents as rows and the selection of n-grams as columns
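
A compact sketch of n-gram extraction and TF-IDF scoring, standard library only. The helper names and the three toy documents are mine, and the weighting used (term frequency times log of N over document frequency) is just one common variant; real libraries add smoothing and normalization.

```python
import math
from collections import Counter

def ngrams(text, n):
    """Split on whitespace and return the list of n-grams as strings."""
    words = text.split()
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]

def tf_idf(docs, n=1):
    """Return one {term: score} dict per document, using tf * log(N / df)."""
    term_lists = [ngrams(d.lower(), n) for d in docs]
    # Document frequency: in how many documents does each term appear?
    df = Counter(term for terms in term_lists for term in set(terms))
    scores = []
    for terms in term_lists:
        tf = Counter(terms)
        scores.append(
            {t: (c / len(terms)) * math.log(len(docs) / df[t]) for t, c in tf.items()}
        )
    return scores

docs = [
    "I love certification exams",
    "I love machine learning",
    "I love hard exams",
]

print(ngrams(docs[0], 2))
unigram_scores = tf_idf(docs, n=1)
for term, score in sorted(unigram_scores[0].items(), key=lambda kv: -kv[1]):
    print(term, round(score, 4))
```

Each dict is one row of the TF-IDF matrix; terms that appear in every document ("i", "love") score 0, while the rarest term in the first document ("certification") scores highest.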

- Transformer deep learning architectures are currently state of the art utilizing a mechanism of "self-attention"

- Weighs significance of each part of the input data

- Processes sequential data (like words, like an RNN), but processes entire input all at once.

- The attention mechanism provides context, so no need to process one word at a time.

- BERT, RoBERTa, T5, GPT-2, DistilBERT

- DistilBERT: uses knowledge distillation to reduce model size by 40%

- fine-tuning and transfer learning are the same thing

- NLP models (and others) are too big and complex to build from scratch and re-train every time

- The latest may have hundreds of billions of parameters!

- use a pre-trained model to further train for specific task(s)

- Model zoos such as Hugging Face offer pre-trained models to start from

- Integrated with Sagemaker via Hugging Face Deep Learning Containers

- Hugging face is essentially a giant repo of pre-trained models (eg: GPT2, GPTJ, llama, stable-diffusion)

- You can fine-tune these models for your own use cases

- If fine-tuning a model, need to install the source version of the model

- BERT transfer learning example:

- Hugging Face offers a Deep Learning Container (DLC) for BERT

- It's pre-trained on BookCorpus and Wikipedia

- You can fine-tune BERT (or DistilBERT etc) with your own additional training data through transfer learning

- Tokenize your own training data to be of the same format

- Just start training it further with your data, with a low learning rate.

- Continue training a pre-trained model (fine-tuning)

- Use for fine-tuning a model that was trained on far more data than you will ever have

- Use a low learning rate to ensure you are just incrementally improving the model

- Add new trainable layers to the top of a frozen model

- Learns to turn old features into predictions on new data

- Can freeze certain layers, re-train others (NN based)

- Train a new tokenizer to learn a new language from the additional training data

- Can do both: add new layers, then fine tune as well

- Retrain from scratch

- If you have a large amount of training data and it's fundamentally different from what the model was pre-trained with

- And you have the computing capacity for it!

- Use it as-is

- When the model's training data is what you want already

- Adapt it to other tasks (eg: classification, etc.)

- Graphing (scatter plot, time series, histogram, box plot)

- Interpreting descriptive statistics (correlation, summary statistics, p value)

- Clustering (hierarchical, diagnosing, elbow plot, cluster size)

- Serverless ad-hoc query service enabling analysis and querying of data in S3 using standard SQL, while allowing more advanced queries (joins permitted)

- Compress data for smaller retrieval

- Use target files (> 128 MB) to minimize overhead and as a cost savings measure

- $5.00 per TB scanned

- Commonly used/integrated with Amazon Quicksight

- Federated query allows SQL queries across relational, object, non-relational, and custom data sources (AWS or on-premises) using Data Source Connectors that run on λ, with results being returned and stored in S3

- presto under the hood

- supports: csv, json, orc, parquet, Avro

- able to query unstructured, semi-structured or structured data within the data lake

- use cases

- query web logs (CloudTrail, CloudFront, VPC, ELB)

- query data prior to loading in DB

- can integrate with Jupyter, Zeppelin, or RStudio notebooks

- able to integrate with other visualization tools via ODBC/JDBC protocols

- can harness Glue Data Catalog metadata for queries

- anti-patterns:

- Highly formatted reports / visualization=>That's what QuickSight is for

- ETL=>Use Glue instead

graph LR

A[S3] --> B[Glue]

B --> C[Athena]

C --> D[QuickSight]

- Access control

- IAM, ACLs, S3 bucket policies

- AmazonAthenaFullAccess/AWSQuicksightAthenaAccess

- Encrypt results at rest in S3 staging directory

- Server-side encryption with S3-managed key (SSE-S3)

- Server-side encryption with KMS key (SSE-KMS)

- Client-side encryption with KMS key (CSE-KMS)

- Cross-account access in S3 bucket policy possible

- TLS encrypts in-transit (between Athena and S3)

- BI/analytics serverless ML service used to build interactive visualizations (dashboards, graphs, charts and reports), perform ad-hoc analysis without paying for integrations of data and leaving the data uncanned for exploration

- Integrates with sources both in and out of AWS (RDS)

- In-memory computation using the SPICE engine

- Data sets are imported into SPICE

- Super-fast, Parallel, In-memory Calculation Engine

- Uses columnar storage, in-memory, machine code generation

- Accelerates interactive queries on large datasets

- Each user gets 10GB/month of SPICE

- Highly available / durable

- Scales to hundreds of thousands of users

- Can share analysis (if published) or the dashboard (read only) with users or groups

- Available as an application anytime on any device (browsers [mobile])

- Data Sources

- Redshift

- Aurora / RDS

- Athena

- EC2-hosted databases

- Files (S3 or on-premises)

- Excel

- CSV, TSV

- Common or extended log format

- AWS IoT Analytics

- Data preparation allows limited ETL

- Quicksight Paginated Reports

- Reports designed to be printed

- May span many pages

- Can be based on existing Quicksight dashboards

- Q

- Machine learning-powered

- Answers business questions with NLP eg: "What are the top-selling items in Florida?"

- Offered as an add-on for given regions

- Personal training on how to use it is required

- Must set up topics associated with datasets

- Datasets and their fields must be NLP-friendly

- How to handle dates must be defined

- Security:

- Column-Level security (CLS)

- Multi-factor authentication on your account

- VPC connectivity

- Add QuickSight's IP address range to your database security groups

- Row-level security

- Column-level security too (CLS) - Enterprise edition only

- Private VPC access (for on-prem access)

- Elastic Network Interface, AWS DirectConnect

- User Management

- Users defined via IAM, or email signup

- SAML-based single sign-on

- Active Directory integration (Enterprise Edition)

- MFA

- Pricing

- Annual subscription

- Standard: $9 / user /month

- Enterprise: $18 / user / month

- Enterprise with Q: $28 / user / month

- Extra SPICE capacity (beyond 10GB), otherwise more $

- $0.25 (standard) $0.38 (enterprise) / GB / month

- Month to month

- Standard: $12 / user / month

- Enterprise: $24 / user / month

- Enterprise with Q: $34 / user / month

- Additional charges for paginated reports, alerts & anomaly detection, Q capacity, readers, and reader session capacity.

- Enterprise edition

- Encryption at rest

- Microsoft Active Directory integration

- CLS

- Annual subscription

- Use Cases:

- Interactive ad-hoc exploration / visualization of data

- Dashboards and KPIs

- Analyze / visualize data from:

- Logs in S3

- On-premise databases

- AWS (RDS, Redshift, Athena, S3)

- SaaS applications, such as Salesforce

- Any JDBC/ODBC data source

- ML insights feature (only ML capabilities of Quicksight)

- Anomaly detection (uses Random Cut Forest)

- Forecasting: removes anomalies in order to make the forecast (uses Random Cut Forest)

- Autonarratives to build rich dashboards with embedded narratives

- Anti-Patterns

- Highly formatted canned reports

- QuickSight is for ad hoc queries, analysis, and visualization

- No longer true with paginated reports!

- ETL

- Use Glue instead, although QuickSight can do some transformations

- AutoGraph - automatically selects chart based on input features to best display the data and relationships. Not 100% effective and might require intervention

- Bar Charts

- For comparison and distribution (histograms)

- Line graphs

- For changes/trends over time

- [stacked] area line charts - allows visualization of different components added up to a change/trend

- Scatter plots, heat maps

- For correlation

- Residual plot [a type of scatter plot, containing a regression line, measuring how much data point(s) overestimate/underestimate target value(s)]

- Pie graphs, tree maps - Hierarchical Aggregation chart (eg: npm package map)

- For aggregation

- Pivot tables

- For tabular data to aggregate in certain ways into other tables

- applying statistical functions applied to (multi-dimensional) data

- KPIs - chart detailing measurement(s) between current value(s) vs target(s)

- Geospatial Charts (maps) - map with sized circles annotating certain amounts in certain areas

- Donut Charts - when precision isn't important and there are few items in the dimension; show the percentage/proportion of the total amount

- Gauge Charts - compare values in a measure (eg: fuel left in a tank)

- Word Clouds - word or phrase frequency within a corpus

-

When to use/when not to use ML

- The goal is for the model's predictions (aka inference) to generalize well to new inputs

- Good use for problems if:

- the problem is either too complex for a traditional programming approach or has no known algorithm

- the environment fluctuates, and ML can adapt to the new data

- getting insights/patterns about complex problems and large amounts of data (data mining)

- Otherwise, don't use ML; a traditional programming approach is likely the better fit

-

Know the difference between supervised and unsupervised learning

-

Selecting from among classification, regression, forecasting, clustering, recommendation, etc.

- XGBoost (boosting)

- Observations are weighted

- Some will take part in new training sets more often

- Training is sequential; each classifier takes into account the previous one's success.

- Boosting generally yields better accuracy than Bagging

- logistic regression - supervised model dealing with classification and probability outcomes, though the former is more prevalent

- K-means - unsupervised cluster analysis on unlabeled data where the aim is to partition a set of objects into K clusters in such a way to understand what types of groups exist or to identify unknown groups in complex data sets

- linear regression - a supervised model useful for predicting a variable based on the value of another variable (or several) by fitting a line, or a hyperplane in n-dimensional space, to the data

- decision trees - a supervised model based on a set of decision rules for prediction analysis, data classification, and regression. May possibly overfit training data.

- RNN

- CNN

- Ensemble - supervised; multiple models are combined to improve overall performance and accuracy. An excellent example is Random Forest: since a single decision tree is prone to overfitting, many decision trees are trained and they all vote on the result.

- Transfer learning

- Bagging - generate N new training sets by random sampling with replacement. Each resampled model can be trained in parallel

- Bagging is better than boosting at avoiding overfitting

- Bagging is easier to parallelize than boosting
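The bagging idea described in the notes above (resample with replacement, train independently, then vote) can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `bootstrap_sample`, `make_bagging_sets`, and `majority_vote` are hypothetical, the "training data" is a placeholder list of integers, and no actual model is trained.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data at random, with replacement."""
    return [rng.choice(data) for _ in data]

def make_bagging_sets(data, n_sets, seed=0):
    """Generate n_sets resampled training sets.

    Each set is independent of the others, so one model per set
    could be trained in parallel.
    """
    rng = random.Random(seed)
    return [bootstrap_sample(data, rng) for _ in range(n_sets)]

def majority_vote(predictions):
    """Combine one predicted label per model by picking the most common one."""
    return max(set(predictions), key=predictions.count)

# Placeholder training data (hypothetical): ten row ids.
training_data = list(range(10))
bags = make_bagging_sets(training_data, n_sets=3)

# Pretend three models, one per bag, each predicted a label for the same input.
final_label = majority_vote(["spam", "ham", "spam"])
```

Because sampling is with replacement, a given bag usually contains some rows more than once and misses others entirely, which is what makes the resulting models differ from one another.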

- Support Vector Machine (SVM)

- a supervised ML algorithm that can be used for either classification or regression

- can solve linear and nonlinear problems

- SVM classification creates a line or hyperplane to separate data into classes

- can be used for text classification such as categories, detecting spam and sentiment analysis

- commonly used for image recognition (performs particularly well in aspect-based recognition and color-based classification)

- outlier detection

- Reinforcement learning: you have some sort of agent that "explores" some space

- As it goes, it learns the value of different state changes in different conditions

- Those values inform subsequent behavior of the agent as from learnings a model is created

- Examples: Pac-Man, Cat & Mouse game (game AI)

- Supply chain management

- HVAC systems

- Industrial robotics

- Dialog systems

- Autonomous vehicles

- Yields fast on-line performance once the space has been explored

- Q-Learning: a specific implementation of reinforcement learning

- You have:

- A set of environmental states s

- A set of possible actions in those states a

- A value of each state/action Q

- Start off with Q values of 0

- Explore the space

- As bad things happen after a given state/action, reduce its Q

- As rewards happen after a given state/action, increase its Q

- What are some state/actions here?

- Pac-man has a wall to the West

- Pac-man dies if he moves one step South

- Pac-man just continues to live if going North or East

- You can "look ahead" more than one step by using a discount factor when computing Q (here s is previous state, s' is current state)

- Q(s,a) += discount * (reward(s,a) + max(Q(s')) - Q(s,a))

- How do we efficiently explore all of the possible states?

- Simple approach: always choose the action for a given state with the highest Q. If there's a tie, choose at random

- But that's really inefficient, and you might miss a lot of paths that way

- Better way: introduce an epsilon term

- If a random number is less than epsilon, don't follow the highest Q, but choose at random

- That way, exploration never totally stops

- Choosing epsilon can be tricky

- You can make an intelligent Pac-Man:

- Have it semi-randomly explore different choices of movement (actions) given different conditions (states)

- Keep track of the reward/penalty associated with each choice for a given state/action (Q) and can propagate rewards and penalties backwards multiple steps for better performance

- Use those stored Q values to inform its future choices

- Markov Decision Process (MDP): a mathematical framework/notation for modeling decision making (Q-learning) in situations where outcomes are partly random and partly under the control of a decision maker.

- States are described as s and s'

- State transition functions are described as P_a(s, s')

- Our "Q" values are described as a reward function R_a(s, s')

- MDP is a discrete time stochastic control process.
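The Q-learning steps in the notes above (start with Q values of 0, explore, lower Q after penalties, raise it after rewards, and use an epsilon term so exploration never stops) can be sketched on a toy problem. Everything here is an assumption for illustration: the five-state corridor, the reward values, and the parameter defaults are made up. The update uses the common textbook form with a separate learning rate `alpha` alongside the discount, whereas the formula in the notes uses a single discount term.

```python
import random

N_STATES = 5          # hypothetical corridor: states 0..4
ACTIONS = (-1, +1)    # move left or move right
GOAL, TRAP = 4, 0     # reaching 4 is rewarded, reaching 0 is penalized

def step(state, action):
    """Return (next_state, reward, done) for the toy corridor."""
    nxt = state + action
    if nxt == GOAL:
        return nxt, 1.0, True
    if nxt == TRAP:
        return nxt, -1.0, True
    return nxt, 0.0, False

def train(episodes=500, alpha=0.5, discount=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Start off with Q values of 0 for every state/action pair.
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 2, False  # every episode starts in the middle
        while not done:
            if rng.random() < epsilon:
                # Explore: ignore the Q values and pick at random.
                action = rng.choice(ACTIONS)
            else:
                # Exploit: pick the highest Q, breaking ties at random.
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Look ahead one step: best Q reachable from the new state.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + discount * future - q[(state, action)])
            state = nxt
    return q

q = train()
```

After training, the Q value for moving right from state 3 (toward the goal) should be higher than for moving left, and the Q value for stepping from state 1 into the trap can never rise above zero, since the only reward ever observed for that move is negative.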

Loss Functions (aka Cost Function): seek to calculate/minimize the error (difference between actual and predicted value)

-
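As a small illustration of "difference between actual and predicted value", here are two common loss functions written out by hand. The choice of mean squared error and mean absolute error is an assumption (the notes do not name specific losses), and the sample numbers are made up.

```python
def mean_squared_error(actual, predicted):
    """Average of squared differences; large errors are penalized more heavily."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def mean_absolute_error(actual, predicted):
    """Average of absolute differences; every unit of error counts the same."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Made-up values for illustration.
actual = [3.0, 5.0, 2.0]
predicted = [2.0, 5.0, 4.0]

mse = mean_squared_error(actual, predicted)   # (1 + 0 + 4) / 3
mae = mean_absolute_error(actual, predicted)  # (1 + 0 + 2) / 3
```

Training then amounts to adjusting the model's weights so that a value like `mse` gets as small as possible.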

Preventing overfitting

- Overfitting: models that are good at making predictions on the data they were trained on, but not on new data they haven't seen before

- Overfitted models have learned patterns in the training data that don't generalize to the real world

- Often seen as high accuracy on training data set, but lower accuracy on test or evaluation data set.

- Larger batch sizes can increase the chance of getting stuck in a local minimum

- Higher learning rates run the risk of overshooting the optimal solution or oscillating around it

- Generally good to pair a small batch size and a small learning rate

- A neural network that is too wide/deep tends to overfit => a simpler model might be better

- Specific to NN:

- Dropout: remove some neurons at random at each epoch during training, which forces the model to spread learning out among the other neurons, preventing individual neurons from overfitting specific data point(s)

- Early stopping: end training early, before all epochs have run, once (validation) accuracy levels out, preventing overfitting

- L1 (LASSO) / L2 (Ridge) Regularization

- Preventing overfitting in ML in general

- A regularization term is added as weights are learned

- L1 term is the sum of the absolute values of the weights

- Performs feature selection - entire features go to 0

- Computationally inefficient

- Sparse output

- Good to avoid curse of dimensionality

- When to use L1

- Feature selection can reduce dimensionality

- Out of 100 features, maybe only 10 end up with non-zero coefficients!

- The resulting sparsity can make up for its computational inefficiency

- But, if you think all of your features are important, L2 is probably a better choice.

- L2 term is the sum of the square of the weights

- All features remain considered, just weighted

- Computationally efficient

- Dense output

- Same idea can be applied to loss functions and/or weights as learned

-
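The difference between the L1 and L2 terms described above can be made concrete with a short sketch. The penalty functions follow the definitions in the notes; the two `*_shrink` helpers are an added illustration (the standard proximal steps for each penalty) of why L1 produces sparse output and L2 produces dense output. The weights and the regularization strength `lam` are made-up values.

```python
def l1_penalty(weights, lam):
    """L1 (LASSO) term: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 (Ridge) term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)

def l1_shrink(weights, lam):
    """Soft-thresholding: any weight within lam of zero becomes exactly 0."""
    return [(1 if w > 0 else -1) * max(abs(w) - lam, 0.0) for w in weights]

def l2_shrink(weights, lam):
    """Scale every weight toward zero; none of them becomes exactly zero."""
    return [w / (1.0 + 2.0 * lam) for w in weights]

# Made-up weights: two small ones, two large ones.
weights = [0.05, -0.2, 1.5, -3.0]
lam = 0.25

sparse = l1_shrink(weights, lam)   # the two small weights are zeroed out
dense = l2_shrink(weights, lam)    # all four weights survive, just smaller
```

This mirrors the guidance in the notes: L1 effectively drops features it considers unimportant, while L2 keeps every feature but down-weights them.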

Training data vs Validation data vs Test data

- 80/20 (Training/Validation) rule if data is largely available

- Cross validation - usually done if data is in short supply

- Validation set used to get the error rate (aka generalization or out of sample error)

- If your training error is low and the generalization error is high => overfitting

- If you'd like to determine which algorithm is best, train each of them on the training data and then evaluate them against the test set

- If also tuning multiple hyperparameters, hold out a separate validation set: train on the training set, select the model and hyperparameters that perform best on the validation set, and only then test against the test set. This avoids selecting a model simply because it performs best on the test set data

- Alternatively there is cross validation, which avoids wasting too much training data on validation sets. The training set is split into complementary subsets, and the model is trained on a different combination of the subsets each time and validated against the remaining subset(s). Once the model/hyperparameters are finalized, the model is trained on the complete training data and the generalization error is measured against the test set.

-
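The cross validation procedure described above (split the training set into complementary subsets, train on all but one, validate on the one left out, then rotate) can be sketched as an index generator. The function name `k_fold_splits` is hypothetical, and shuffling the data first, which is usually advisable, is left out to keep the sketch short.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

# Ten samples, five folds: each fold validates on 2 rows and trains on 8.
splits = list(k_fold_splits(n_samples=10, k=5))
```

Each row ends up in a validation fold exactly once, so every row contributes to both training and validation without a fixed slice of data being set aside.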

Model initialization

-

Neural network architecture (layers/nodes)

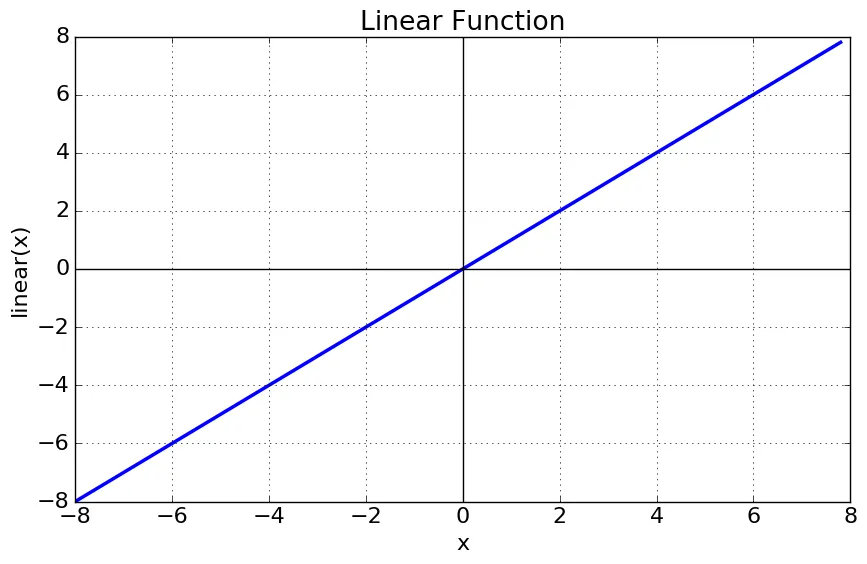

- NN Learning Rate

- Neural networks are trained by gradient descent (or some similar flavor)

- We start at some random point, and sample different solutions (weights) seeking to minimize some cost function, over many epochs

- Epochs – iterations at which we train, and attempt a different set of weights, looking to minimize the cost/loss function

- How far apart these samples are is the learning rate

- Too high a learning rate means you might overshoot the optimal solution!

- Too small a learning rate will take too long to train/find the optimal solution

- Learning rate is an example of a hyperparameter
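The effect of the learning rate can be seen on a one-dimensional toy problem. This is a minimal sketch, assuming a made-up cost function `(w - 3)^2` with its minimum at `w = 3`, and a fixed starting point instead of a random one so the result is reproducible.

```python
def gradient_descent(learning_rate, epochs=50, start=10.0):
    """Minimize cost(w) = (w - 3)^2, whose gradient is 2 * (w - 3)."""
    w = start
    for _ in range(epochs):
        gradient = 2.0 * (w - 3.0)
        # Step against the gradient; the learning rate sets the step size.
        w -= learning_rate * gradient
    return w

good = gradient_descent(learning_rate=0.1)         # settles very close to 3
too_small = gradient_descent(learning_rate=0.0001) # has barely moved from 10
too_large = gradient_descent(learning_rate=1.1)    # overshoots further each step
```

With the same number of epochs, the moderate rate reaches the minimum, the tiny rate would need far more epochs to get there, and the large rate jumps past the minimum by a growing margin on every step.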