Artistic Fusion: Revolutionizing Mural Style Transfer with Combined GAN and Diffusion Model Techniques

The official code repository for the paper: Artistic Fusion: Revolutionizing Mural Style Transfer with Combined GAN and Diffusion Model Techniques.

Artistic Fusion is an image style transfer model based on CycleGAN and Stable Diffusion. At its core, CycleGAN establishes a reliable base for style accuracy, which is then enhanced through our diffusion model adaptations. Classifier-guided and classifier-free guidance methods play a crucial role, enriched further by the integration of text-driven large diffusion models such as Stable Diffusion. Additionally, an exploration into the efficacy of super-resolution models elevates the final output to high resolution, achieving remarkable clarity and detail. Our methodology and experiments have led to promising results, achieving competitive performance on the FID and LPIPS evaluation metrics while preserving aesthetic and artistic purity.

In essence, Artistic Fusion is more than an addition to the compendium of image style transfer methods; it is an approach that aligns humankind's aesthetic and artistic cravings with modern, intricate style transfer technologies.

🤗 Please cite Artistic Fusion in your publications if it helps with your work. Please star 🌟 this repo to help others notice Artistic Fusion if you think it is useful. Thank you! By the way, you may also like CycleGAN and stable-diffusion-webui, the two great open-source repositories upon which we built our architecture.

📣 Attention please:

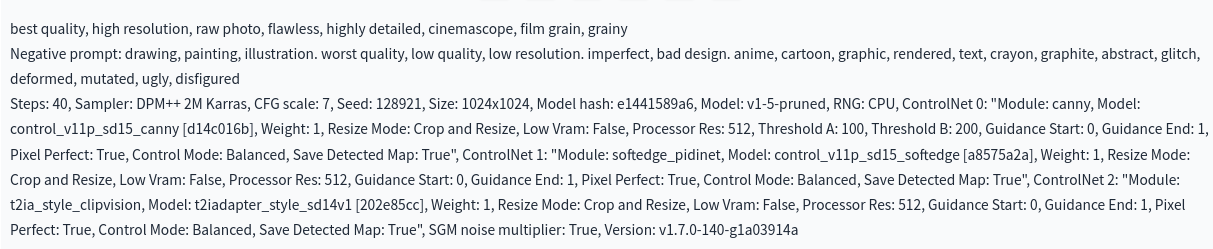

> Artistic Fusion is developed heavily under the framework of stable-diffusion-webui, an ultra-awesome project that gathers a wide range of diffusion-based generative models. Its collection of image generative models provided us with a plentiful playground full of possibilities. An example of using stable-diffusion-webui to generate image variations (an indispensable component of our final model) is shown below. With stable-diffusion-webui, easy peasy! 😉

⦿ Contributions:

- Our approach leverages the latent diffusion model (LDM) as a baseline, on which we explore several techniques such as variance learning and optimized noise scheduling. These modifications allow for a more nuanced and faithful representation of mural styles.

- We explore the use of DDIM sampling to improve the efficiency of the generative process. We also delve into the world of large text-conditional image diffusion models like Stable Diffusion, utilizing textual prompts to guide the style transfer process more effectively. This integration of conditional guidance is particularly groundbreaking, enabling our model to interpret and apply complex mural styles with unprecedented accuracy and diversity.

- We integrate super-resolution techniques, scaling the generated images to higher resolutions without losing the essence of the mural style. This step ensures that our outputs are not just stylistically accurate but also of high fidelity and detail, suitable for large-scale artistic displays.

- Our model not only achieves competitive results on evaluation metrics such as FID and LPIPS but also exhibits more aesthetic and artistic details.

⦿ Performance: Artistic Fusion: Currently, we report an FID score of 116 and an LPIPS score of 0.63.
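For readers unfamiliar with the two metrics, their standard definitions are reproduced here as a reference. This is the textbook form, not necessarily the exact evaluation protocol behind the numbers above; the choice of feature extractor (typically Inception-v3 for FID and a pretrained network such as AlexNet or VGG for LPIPS) is an assumption based on common practice, not something stated in this README.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

$$
\mathrm{LPIPS}(x, x_0) = \sum_{l} \frac{1}{H_l W_l} \sum_{h,w} \left\lVert w_l \odot \left( \hat{y}^{l}_{hw} - \hat{y}^{l}_{0,hw} \right) \right\rVert_2^2
$$

Here $(\mu_r, \Sigma_r)$ and $(\mu_g, \Sigma_g)$ are the mean and covariance of deep features extracted from the reference and generated image sets. In the second formula, $\hat{y}^{l}$ and $\hat{y}^{l}_{0}$ are the channel-normalized activations of layer $l$ for the two images being compared, with spatial size $H_l \times W_l$, and $w_l$ is a learned per-channel weight vector.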

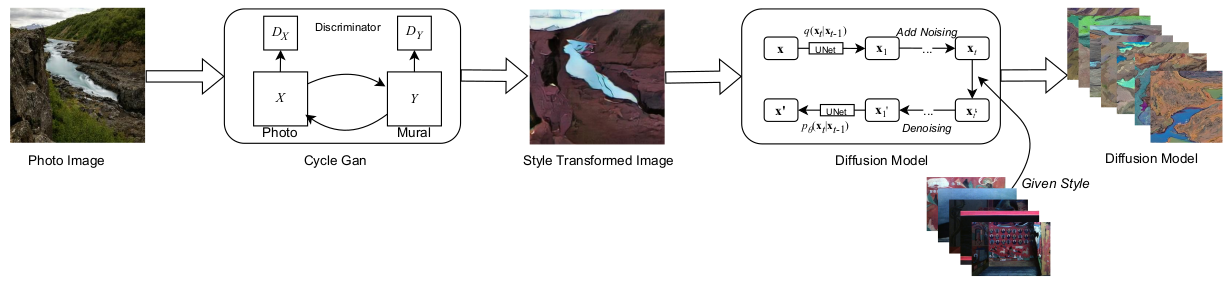

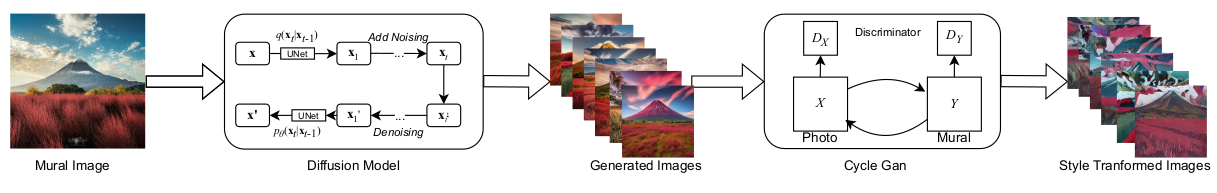

Here we only show two main variations of our method: the GAN+Diffusion pipeline and the Diffusion+GAN pipeline. For a detailed description and explanation, please read our full paper if you are interested.

GAN+Diffusion pipeline, with GAN-based solid style transfer capabilities, coupled with diffusion-enhanced variety and vitality (but it deviates from the style transfer intent too easily):

Our final model: Diffusion+GAN framework, with the Stable Diffusion model's generative capabilities injected, followed by CycleGAN's style transfer pipeline.
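The difference between the two variants above is only the order in which the stages run. A minimal sketch of that idea follows; `image_variation` and `cyclegan_transfer` are hypothetical stand-ins that tag a string so the ordering is visible, not the actual models or any function from this repository.

```python
from typing import Callable, Sequence

# A stage maps an image to an image. Plain strings stand in for images here.
Stage = Callable[[str], str]


def compose_pipeline(stages: Sequence[Stage]) -> Stage:
    """Return a function that applies the given stages from left to right."""
    def run(image: str) -> str:
        for stage in stages:
            image = stage(image)
        return image
    return run


def image_variation(image: str) -> str:
    # Hypothetical placeholder for the diffusion-based image-variation step.
    return f"variation({image})"


def cyclegan_transfer(image: str) -> str:
    # Hypothetical placeholder for the CycleGAN style transfer step.
    return f"cyclegan({image})"


# GAN+Diffusion: style transfer first, then diffusion-based variation.
gan_then_diffusion = compose_pipeline([cyclegan_transfer, image_variation])

# Diffusion+GAN (the final model): variation first, then style transfer.
diffusion_then_gan = compose_pipeline([image_variation, cyclegan_transfer])

if __name__ == "__main__":
    print(gan_then_diffusion("photo"))
    print(diffusion_then_gan("photo"))
```

In the Diffusion+GAN ordering the style transfer step runs last, so whatever the diffusion step changes still passes through CycleGAN before the output is produced.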

We run on Ubuntu 22.04 LTS with a system configured with

- Use conda to create an env for Artistic Fusion from our exported environment.yml and activate it.

conda env create -f environment.yml

conda activate ldm

- Run ./webui.sh; the activated environment will then be picked up automatically by the installation process. If anything goes wrong, please make sure that you start VS Code from the command line with the desired environment activated.

conda create -n ldm python=3.10

conda activate ldm

./webui.sh

If you are looking to reproduce CycleGAN results in the paper, please install the conda env first.

cd cyclegan/

conda env create -f environment.yml

conda activate cycleGan

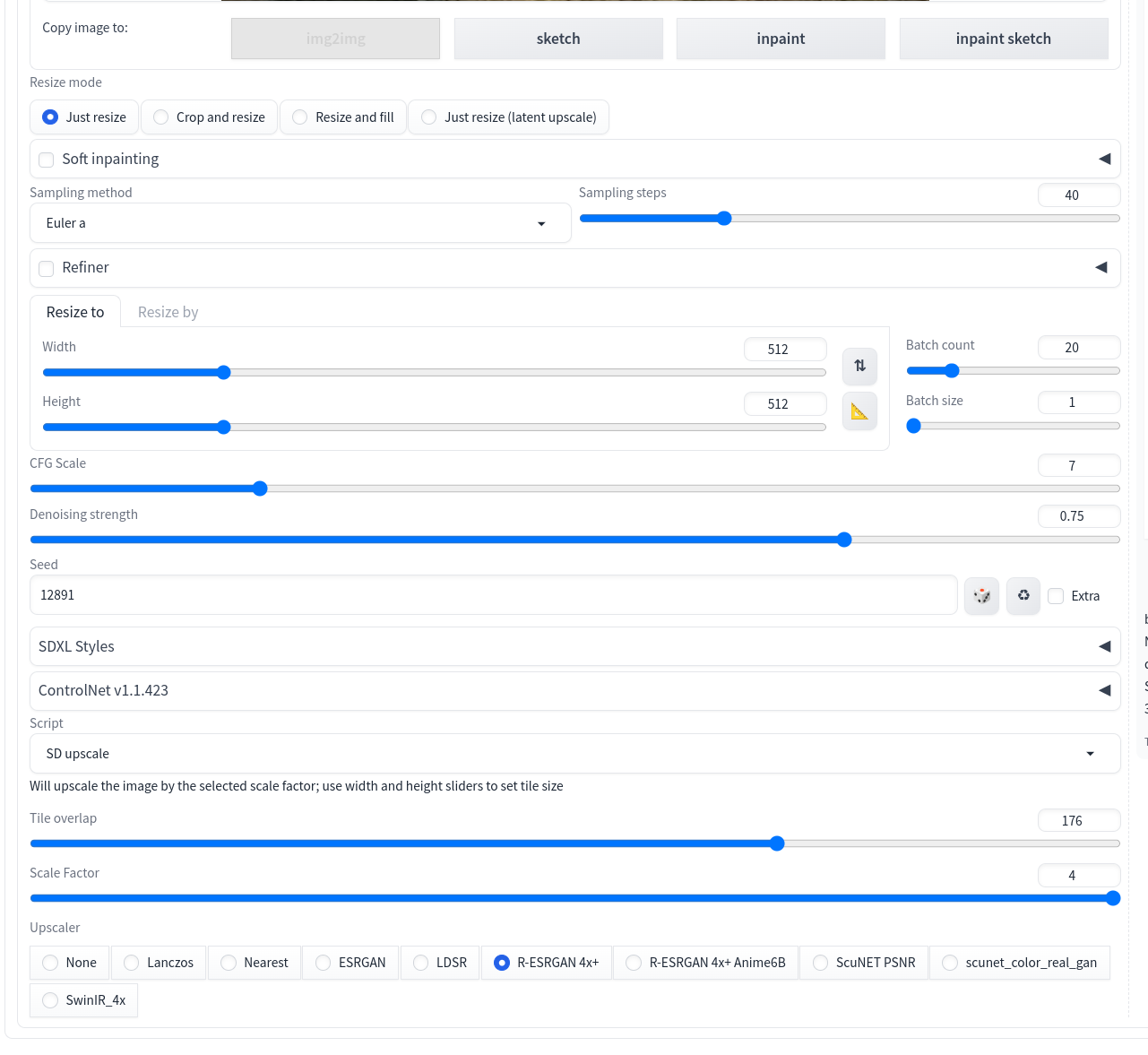

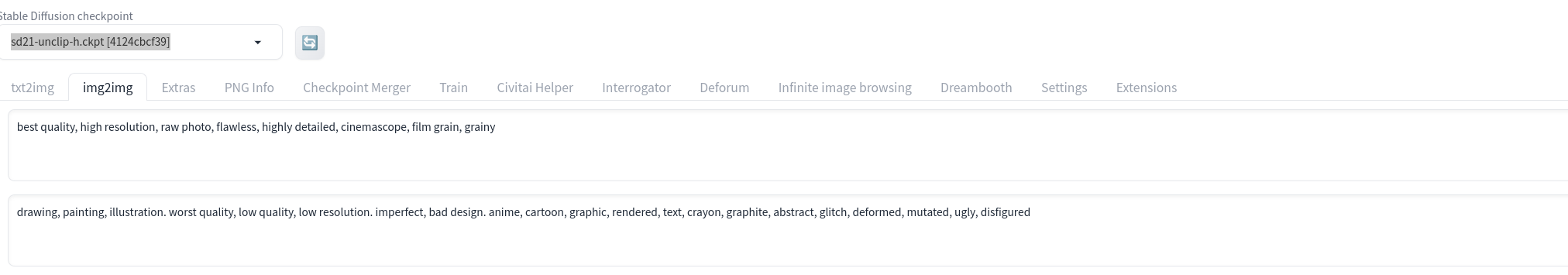

cd ..

Additionally, if you want to reproduce the image-variation results in the paper (based on sd21-unclip-h.ckpt), please follow the procedures below! 🤗

-

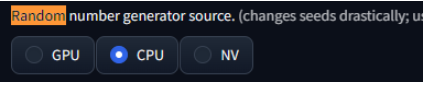

We recommend users to change these options back if users mainly use Stable Diffusion 1.5.

-

Make sure that your webui version is at least 1.6.0. We will use "sd21-unclip-h.ckpt" for the test so you should have it. Go to your webui setting, "Show all pages", then Ctrl+F open search, search for "random", then make sure to use "CPU" seed (in Setting->Stable Diffusion)

-

then search for "sgm", check "SGM noise multiplier" (in Setting->Sampler parameters)

-

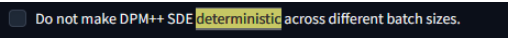

then search "deterministic", make sure to UNCHECK "Do not make DPM++ SDE deterministic across different batch sizes." (Do NOT select it, in Setting->Compatibility)

-

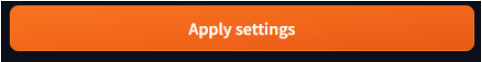

then apply the settings:

-

then set the settings below:

-

Now select a image from our test datase(if you are using batch mode, please specify the home directory of the test dataset ). Then hit Ctrl+Enter and enjoy your results !!! 😃😃😃
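The option changes in the steps above can also be double-checked from the webui's saved settings file. The sketch below is only an illustration: the key names `randn_source`, `sgm_noise_multiplier` and `no_dpmpp_sde_batch_determinism`, and the file name `config.json`, are assumptions about how stable-diffusion-webui stores these options, so please verify them against your own installation before relying on the result.

```python
import json
from pathlib import Path
from typing import Dict, List

# Assumed option keys and the values the steps above ask for.
# These names are NOT taken from this README; check your own config.json.
EXPECTED_OPTIONS: Dict[str, object] = {
    "randn_source": "CPU",                    # "CPU" seed
    "sgm_noise_multiplier": True,             # "SGM noise multiplier" checked
    "no_dpmpp_sde_batch_determinism": False,  # compatibility option unchecked
}


def find_mismatches(config: Dict[str, object]) -> List[str]:
    """List every expected option whose saved value differs or is missing."""
    problems = []
    for key, wanted in EXPECTED_OPTIONS.items():
        # A missing key is reported too, so it can be checked by hand in the UI.
        actual = config.get(key, "<missing>")
        if actual != wanted:
            problems.append(f"{key}: expected {wanted!r}, found {actual!r}")
    return problems


def check_config_file(path: str = "config.json") -> List[str]:
    """Load a saved settings file (assumed to be JSON) and report mismatches."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    return find_mismatches(config)


if __name__ == "__main__":
    example = dict(EXPECTED_OPTIONS, randn_source="GPU")
    for line in find_mismatches(example):
        print(line)
```

An empty list means the saved values match the steps above. The settings page in the UI remains the authoritative place to confirm them.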

We run on one image style transfer dataset.

Here are some samples from our style image dataset:

We use the CycleGAN model as our backbone and train it on our mural-painting dataset. Please also take a look at the CycleGAN repo for further details. The Stable Diffusion model is used to produce rich and varied image variation results. We have also extended our exploration of diffusion-based models to ControlNet, T2I-Adapter, DreamBooth, LoRA fine-tuning on SDXL, and InST.

To replicate the CycleGAN result of our paper, please check README.md.

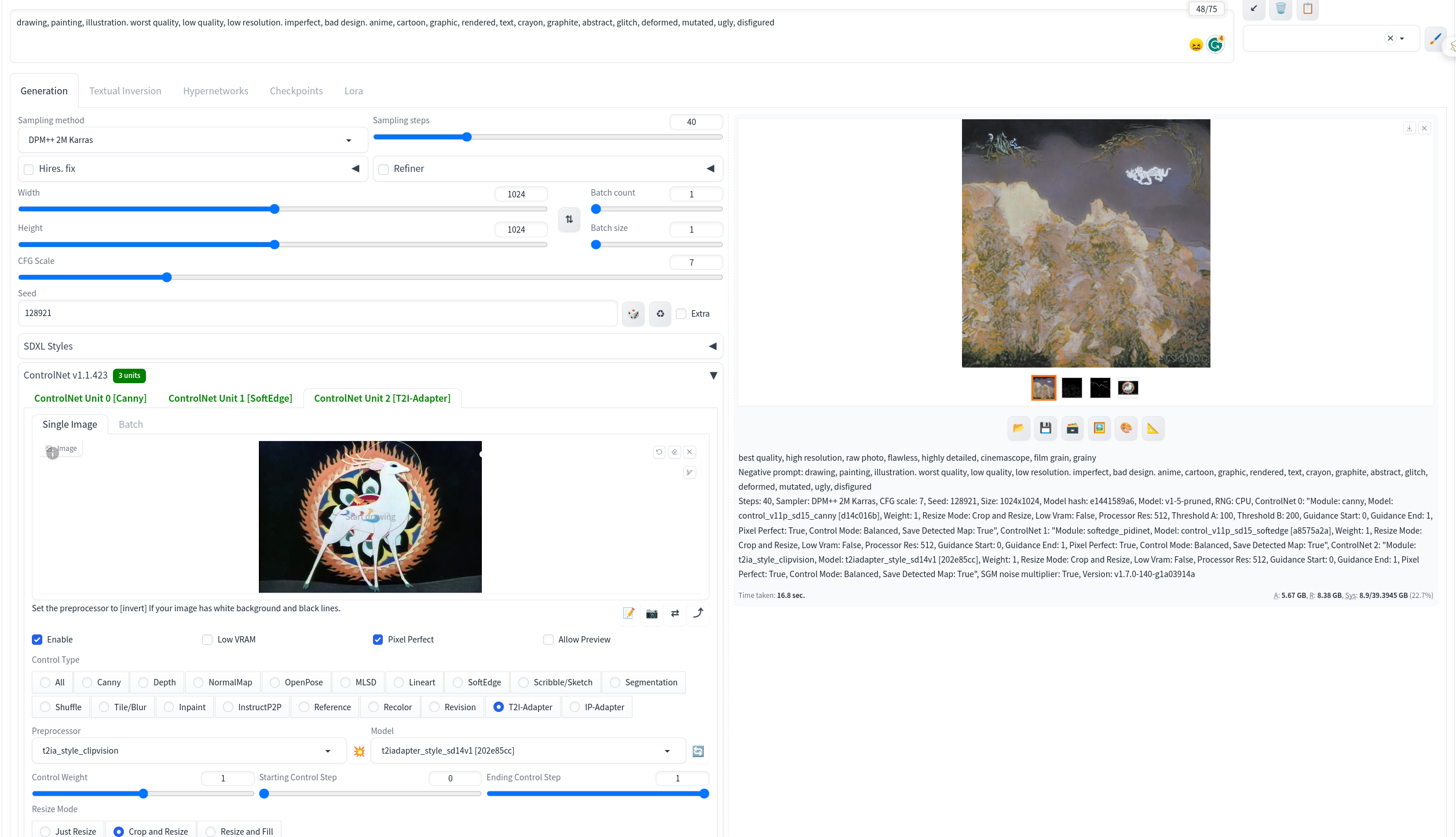

To replicate the ControlNet results of our paper, please further follow the procedures described below:

- Open the ControlNet tab under the txt2img section:

- Then set the settings below:

- Hit Ctrl+Enter and enjoy your results! 😃😃😃


Please see the experiment.ipynb notebooks under the cyclegan directory for a reference on the training procedure and inference pass of our best model.

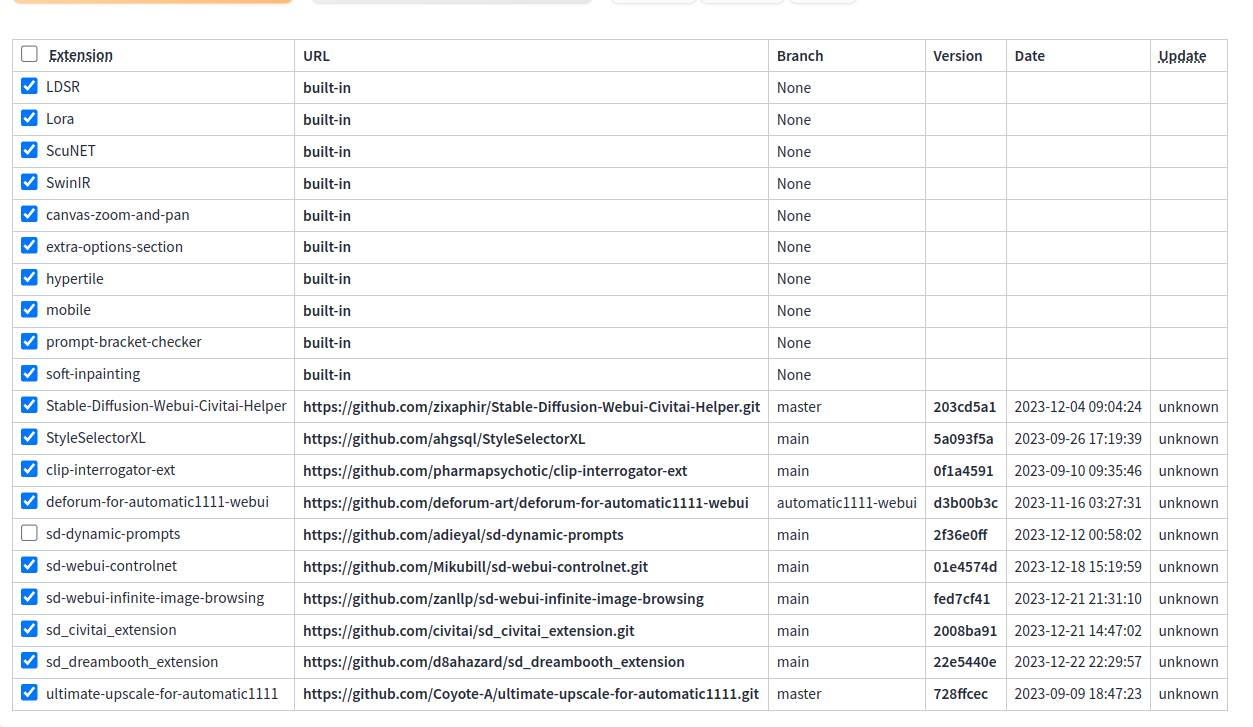

You can use the Infinite Image Browsing extension to go over your collections quickly and efficiently.

If you encounter any problems while reproducing our results, consider aligning your installed extensions with ours!

❗️Note that the paths of datasets and saving directories may be different on your PC; please check them in the configuration files.

Samples of our CycleGAN result:

Samples of our image variation result:

Samples from our GAN+Diffusion pipeline:

More results drawn from our final model's style transfer results:

I extend my heartfelt gratitude to the esteemed faculty and dedicated teaching assistants of AI3603 for their invaluable guidance and support throughout my journey in image processing. Their profound knowledge, coupled with an unwavering commitment to nurturing curiosity and innovation, has been instrumental in my academic and personal growth. I am deeply appreciative of their efforts in creating a stimulating and enriching learning environment, which has significantly contributed to the development of this paper and my understanding of the field. My sincere thanks to each one of them for inspiring and challenging me to reach new heights in my studies.

If you have any additional questions or are interested in collaboration, please feel free to contact me at songshixiang, qisiyuan, liuminghao 😃.