This repository contains the PyTorch implementation of LUK: Empowering Log Understanding with Expert Knowledge from Large Language Models.

Logs play a critical role in providing essential information for system monitoring and troubleshooting. Recently, with the success of pre-trained language models (PLMs) and large language models (LLMs) in natural language processing (NLP), smaller PLMs (such as BERT) and LLMs (such as ChatGPT) have become the mainstream approaches for log analysis. While LLMs possess rich knowledge, their high computational costs and unstable performance make them impractical for direct log analysis. In contrast, smaller PLMs can be fine-tuned for specific tasks even with limited computational resources, making them valuable alternatives. However, these smaller PLMs struggle to fully comprehend logs because of their limited expert knowledge. To better utilize the knowledge embedded within LLMs for log understanding, this paper introduces a novel knowledge enhancement framework, called LUK, which leverages expert knowledge from LLMs to empower log understanding on a smaller PLM. Specifically, we design a multi-expert collaboration (MEC) framework to acquire expert knowledge from LLMs automatically. In addition, we propose two novel pre-training tasks to enhance log pre-training with expert knowledge. LUK achieves state-of-the-art results on different log analysis tasks, and extensive experiments demonstrate that expert knowledge from LLMs can be utilized more effectively to understand logs.

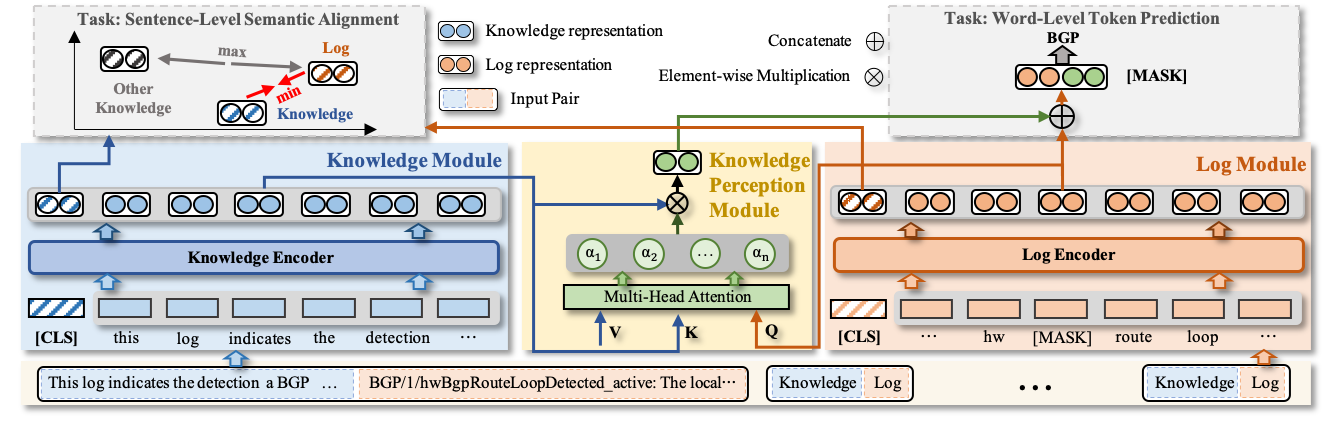

The conceptual overview of LUK:

Based on the classical waterfall model in software engineering, we design a similar waterfall model for log analysis, which consists of three stages: analysis, execution, and evaluation.
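The three waterfall stages can be illustrated with a small Python sketch. It is not the code in `Llama2_MEC.py`: the `LLM` callable type, the `ExpertKnowledge` record, the `waterfall_analyze` function, the prompts and the bounded revision loop are all hypothetical, chosen only to show how the output of each stage feeds the next.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function mapping a prompt to a completion,
# e.g. a wrapper around ChatGPT or a local Llama-2 model.
LLM = Callable[[str], str]


@dataclass
class ExpertKnowledge:
    log: str
    analysis: str     # output of the analysis stage
    explanation: str  # output of the execution stage
    verdict: str      # final output of the evaluation stage


def _evaluate(log: str, explanation: str, llm: LLM) -> str:
    # Evaluation stage: ask the LLM to accept the explanation or describe what is wrong.
    return llm(
        f"Log:\n{log}\nExplanation:\n{explanation}\n"
        "Reply PASS if the explanation is correct and complete; otherwise describe the problem."
    )


def waterfall_analyze(log: str, llm: LLM, max_revisions: int = 1) -> ExpertKnowledge:
    """Run analysis -> execution -> evaluation on one log (illustrative prompts only)."""
    # Stage 1, analysis: decide what an expert should explain about this log.
    analysis = llm(f"Analyze this log and list the key points an expert should explain:\n{log}")
    # Stage 2, execution: write the explanation guided by the analysis.
    explanation = llm(f"Log:\n{log}\nKey points:\n{analysis}\nWrite an expert explanation of the log.")
    # Stage 3, evaluation: check the result and, if rejected, revise a bounded number of times.
    verdict = _evaluate(log, explanation, llm)
    for _ in range(max_revisions):
        if verdict.strip().upper().startswith("PASS"):
            break
        explanation = llm(
            f"Log:\n{log}\nPrevious explanation:\n{explanation}\n"
            f"Reviewer feedback:\n{verdict}\nRevise the explanation."
        )
        verdict = _evaluate(log, explanation, llm)
    return ExpertKnowledge(log, analysis, explanation, verdict)
```

Passing the model as a plain callable keeps the sketch independent of the backend: the same function can be driven by ChatGPT, by a vLLM-hosted Llama-2, or by a stub in tests.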

- transformers
- numpy
- torch
- huggingface-hub
- vllm
- tokenizers
- scikit-learn
- tqdm

pip install -r requirements.txt

LUK

|-- datasets

| |-- downstream_result # Results of ChatGPT and Llama2-13B on downstream tasks

| |-- pre-train # Pre-training datasets

| |-- tasks # Downstream tasks datasets

|-- sentence_transformers # We modified the code for losses, evaluation and SentenceTransformerEnhance to implement LUK

| |-- cross_encoder

| |-- datasets

| |-- evaluation

| |-- losses

| |-- models

| |-- readers

| |-- __init__.py

| |-- LoggingHandler.py

| |-- model_card_templates.py

| |-- SentenceTransformer.py

| |-- SentenceTransformerEnhance.py # enhance with LUK

| |-- util.py

|-- Llama2_inference_down_task.py # evaluate Llama2 on a specific downstream task

|-- Llama2_MEC.py # acquire knowledge from LLMs based on MEC

|-- LUK_LDSM_task.py # evaluate LUK on LDSM

|-- LUK_LPCR_task.py # evaluate LUK on LPCR

|-- LUK_MC_task.py # evaluate LUK on MC

|-- LUK_pretrain.py # pre-train main

We collected 43,229 logs from software systems and network devices. The data statistics are as follows:

| Datasets | Category | # Num |
|---|---|---|
| Software System | Distributed System | 2,292 |
| | Operating System | 11,947 |
| Network Device | Cisco | 16,591 |
| | Huawei | 12,399 |
| Total | | 43,229 |
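As a quick consistency check, the per-domain subtotals and the overall total can be recomputed from the table. The counts below are copied from the table; the `subtotals` helper is only an illustration.

```python
# Log counts copied from the table: (domain, category) -> number of logs.
counts = {
    ("Software System", "Distributed System"): 2_292,
    ("Software System", "Operating System"): 11_947,
    ("Network Device", "Cisco"): 16_591,
    ("Network Device", "Huawei"): 12_399,
}


def subtotals(table):
    """Sum the log counts per top-level domain."""
    totals = {}
    for (domain, _category), n in table.items():
        totals[domain] = totals.get(domain, 0) + n
    return totals


by_domain = subtotals(counts)
total = sum(counts.values())
print(by_domain)  # {'Software System': 14239, 'Network Device': 28990}
print(total)      # 43229
```

Network-device logs therefore make up roughly two thirds of the pre-training corpus.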

Our code uses `bert-base-uncased` as the base pre-trained model. You can load it directly from the Hugging Face Hub by name, or download it into a local directory first.
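A minimal sketch of the "download into your directory" option is shown below. It uses the standard Transformers `from_pretrained` / `save_pretrained` calls. The directory name `./bert-base-uncased` and the helper names `download_base_model` and `resolve_base_model` are hypothetical. The sketch also assumes that the value passed as `--base_model` reaches `from_pretrained`, which accepts either a Hub id or a local path.

```python
from pathlib import Path

HUB_ID = "bert-base-uncased"


def resolve_base_model(local_dir: str = "./bert-base-uncased", hub_id: str = HUB_ID) -> str:
    """Return the local copy if it looks like a saved Hugging Face model, else the Hub id."""
    # save_pretrained() writes a config.json, so treat it as the marker of a complete download.
    if (Path(local_dir) / "config.json").is_file():
        return local_dir
    return hub_id


def download_base_model(local_dir: str = "./bert-base-uncased", hub_id: str = HUB_ID) -> None:
    """Fetch BERT from the Hugging Face Hub once and store it in local_dir."""
    # Imported lazily so resolve_base_model() works without transformers; needs network access.
    from transformers import AutoModel, AutoTokenizer

    AutoTokenizer.from_pretrained(hub_id).save_pretrained(local_dir)
    AutoModel.from_pretrained(hub_id).save_pretrained(local_dir)


if __name__ == "__main__":
    download_base_model()
    print(resolve_base_model())  # local directory once the download has finished
```

The resolved value can then be passed to the pre-training script, e.g. `--base_model ./bert-base-uncased`.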

To acquire knowledge from LLMs based on MEC, you can run:

python Llama2_MEC.py --data ./datasets/pre-train/expert_chatgpt_output.json --model_path ./Llama-2-13b-chat-hf --save_path ./expert_llama2_output.json

Note: we use vLLM to load meta-llama/Llama-2-13b-chat-hf. The model can be downloaded from meta-llama/Llama-2-13b-chat-hf on the Hugging Face Hub.
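For reference, batched offline generation with vLLM looks roughly like the sketch below. It uses vLLM's `LLM`, `SamplingParams` and `LLM.generate` and formats prompts in the standard Llama-2 chat style (`[INST] ... [/INST]`, with an optional `<<SYS>>` block). The prompts, the greedy `temperature=0.0` setting, `max_tokens`, and the helper names `llama2_chat_prompt` and `generate_with_vllm` are assumptions. They are not taken from `Llama2_MEC.py`.

```python
from typing import List


def llama2_chat_prompt(user_message: str, system_prompt: str = "") -> str:
    """Wrap a message in the Llama-2 chat format; the tokenizer adds the <s> BOS token itself."""
    if system_prompt:
        user_message = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message}"
    return f"[INST] {user_message.strip()} [/INST]"


def generate_with_vllm(prompts: List[str], model_path: str = "./Llama-2-13b-chat-hf",
                       max_tokens: int = 512) -> List[str]:
    """Generate one completion per prompt with vLLM (needs a GPU that fits the 13B model)."""
    from vllm import LLM, SamplingParams  # imported lazily: heavy, GPU-only dependency

    llm = LLM(model=model_path)
    params = SamplingParams(temperature=0.0, max_tokens=max_tokens)  # greedy decoding (assumed)
    outputs = llm.generate(prompts, params)
    # Each RequestOutput holds one or more candidates; keep the first one.
    return [out.outputs[0].text for out in outputs]


if __name__ == "__main__":
    prompt = llama2_chat_prompt(
        "Explain this log: %LINK-3-UPDOWN: Interface GigabitEthernet0/1, changed state to down",
        system_prompt="You are a network operations expert.",
    )
    print(generate_with_vllm([prompt])[0])
```

Batching all prompts into a single `generate` call lets vLLM schedule them together, which is usually much faster than issuing one request per log.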

To pre-train LUK (starting from `bert-base-uncased`), run:

python LUK_pretrain.py --pretrain_data ./datasets/pre-train/expert_chatgpt_output.json --base_model bert-base-uncased

`expert_chatgpt_output.json` is an example of the expert knowledge acquired by MEC with ChatGPT. LUK is pre-trained on all the data together, so you can merge all the pre-training datasets into a single file.
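One way to merge the pre-training files is sketched below. It assumes that each `*.json` file in `./datasets/pre-train` holds a top-level JSON list of records, which is not documented here. The helper `merge_pretrain_files` and the output name `./pretrain_all.json` are hypothetical.

```python
import json
from pathlib import Path


def merge_pretrain_files(src_dir: str, out_file: str) -> int:
    """Concatenate every *.json file in src_dir into one JSON list and return the record count.

    Assumes each file holds a top-level JSON list of records (hypothetical layout).
    """
    merged = []
    out_path = Path(out_file).resolve()
    for path in sorted(Path(src_dir).glob("*.json")):
        if path.resolve() == out_path:
            continue  # skip the output of a previous merge if it lives in src_dir
        with path.open(encoding="utf-8") as f:
            records = json.load(f)
        if not isinstance(records, list):
            raise ValueError(f"{path} does not contain a JSON list")
        merged.extend(records)
    with open(out_file, "w", encoding="utf-8") as f:
        json.dump(merged, f, ensure_ascii=False)  # keep non-ASCII log text readable
    return len(merged)


if __name__ == "__main__":
    n = merge_pretrain_files("./datasets/pre-train", "./pretrain_all.json")
    print(f"merged {n} records")
```

The merged file can then be passed to `LUK_pretrain.py` through `--pretrain_data ./pretrain_all.json`.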

To evaluate LUK on a specific task, for example LDSM, run:

python LUK_LDSM_task.py --train_data ./datasets/tasks/LDSM/hw_switch_train.json --dev_data ./datasets/tasks/LDSM/hw_switch_dev.json --test_data ./datasets/tasks/LDSM/hw_switch_test.json
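The LDSM command takes three split files. A small helper can build it so that all three splits come from the same task folder. The sketch assumes the `<dataset>_train.json` / `_dev.json` / `_test.json` naming seen for `hw_switch`. The helper name `ldsm_command` and any dataset name other than `hw_switch` are hypothetical.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def ldsm_command(dataset: str = "hw_switch", task_dir: str = "./datasets/tasks/LDSM") -> List[str]:
    """Build the LUK_LDSM_task.py command with train/dev/test splits from one task folder."""
    root = Path(task_dir)
    return [
        sys.executable, "LUK_LDSM_task.py",
        "--train_data", str(root / f"{dataset}_train.json"),
        "--dev_data", str(root / f"{dataset}_dev.json"),
        "--test_data", str(root / f"{dataset}_test.json"),
    ]


if __name__ == "__main__":
    # Runs the evaluation script in a child process; check=True raises if it fails.
    subprocess.run(ldsm_command(), check=True)
```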

To evaluate Llama2 on a specific task, for example LDSM, run:

python Llama2_inference_down_task.py --data ./datasets/tasks/LDSM/hw_switch_test.json --model_path ./llama2-chat-hf --save_path ./downstream_result/Llama2_result/LDSM/ldsm_hwswitch_result_llama13b.json

Note: for the downstream tasks on software logs, we collect datasets from LogHub and run our experiments based on LogPAI.

MIT License

Our code is inspired by sentence-transformers, Hugging Face, and vLLM.

Our pre-training datasets and downstream task datasets are collected from LogHub and from the public documentation of Cisco, Huawei, and H3C.