- Project Overview

- Installation

- Instructions

- File Descriptions

- Discussion

- Licensing, Authors, and Acknowledgements

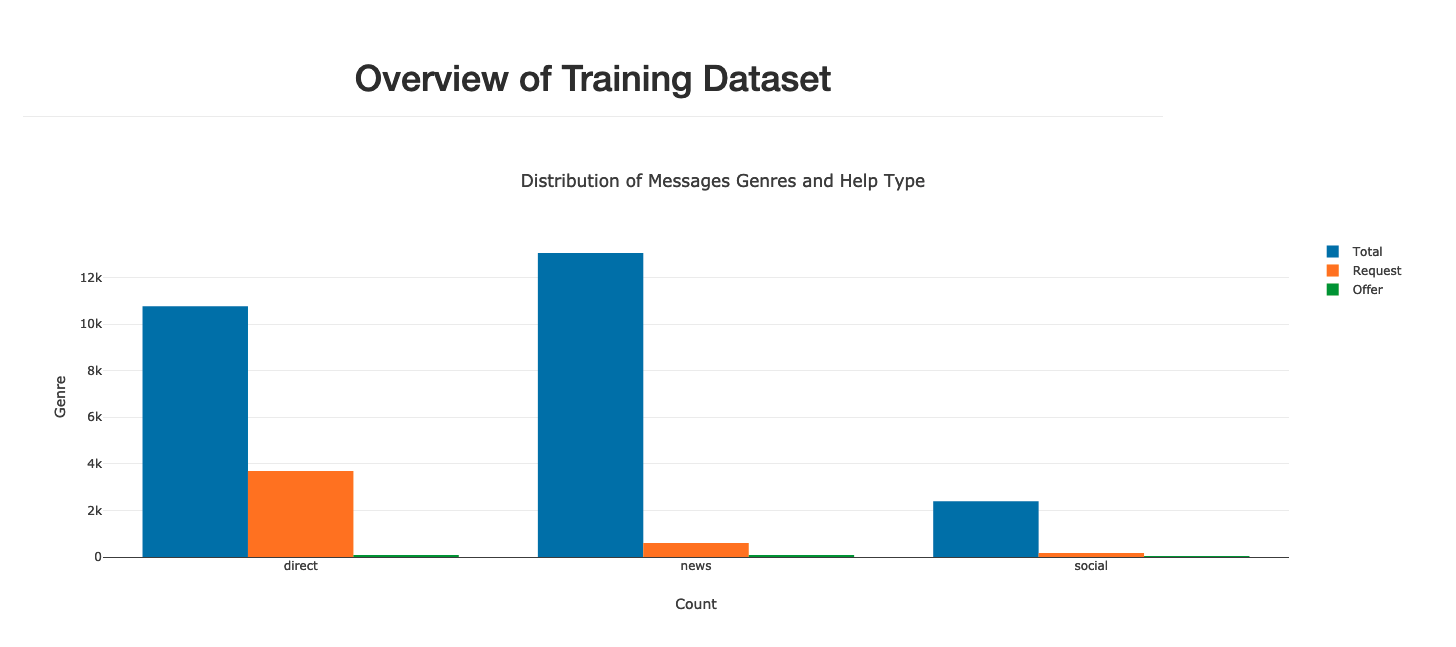

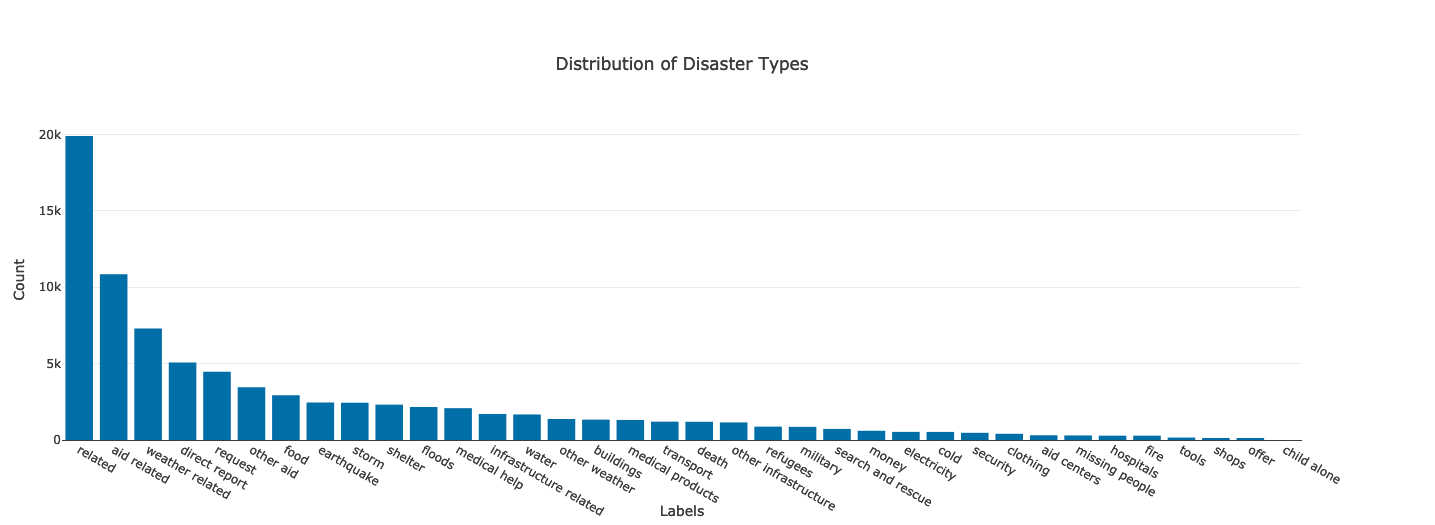

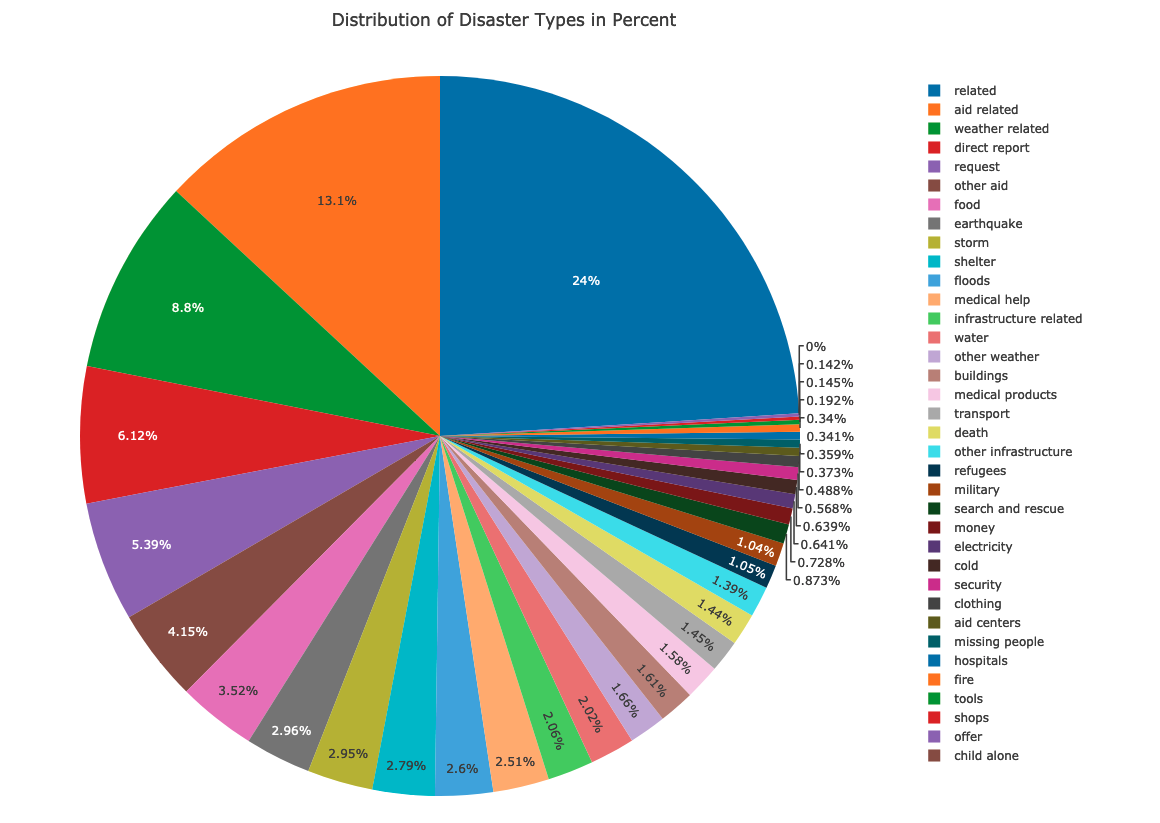

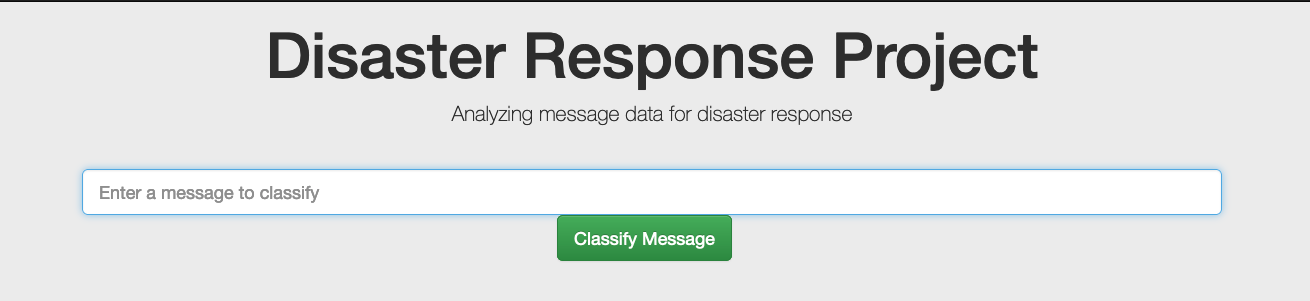

The purpose of this project is to apply natural language processing and machine learning techniques to classify disaster messages (in English). In addition, an API is available through which you can enter new messages and automatically receive a classification for them. The model distinguishes between 36 categories, e.g. “weather”, “water”, and “food”.

The code was tested with Python 3.9. The other required libraries are listed in requirements.txt and can be installed with:

pip install -r requirements.txt

Run the following commands in the project's root directory to set up your database and model.

- To run the ETL pipeline that cleans the data and stores it in the database

python data/process_data.py data/disaster_messages.csv data/disaster_categories.csv data/DisasterResponse.db

- To run the ML pipeline that trains the classifier and saves it

  - In case you wish to tune the parameters (GridSearchCV)

python models/train_classifier.py data/DisasterResponse.db models/classifier.pkl True

  - Otherwise, the model is trained with the already optimized parameters

python models/train_classifier.py data/DisasterResponse.db models/classifier.pkl False

Run the following command in the app's directory to run your web app.

python run.py

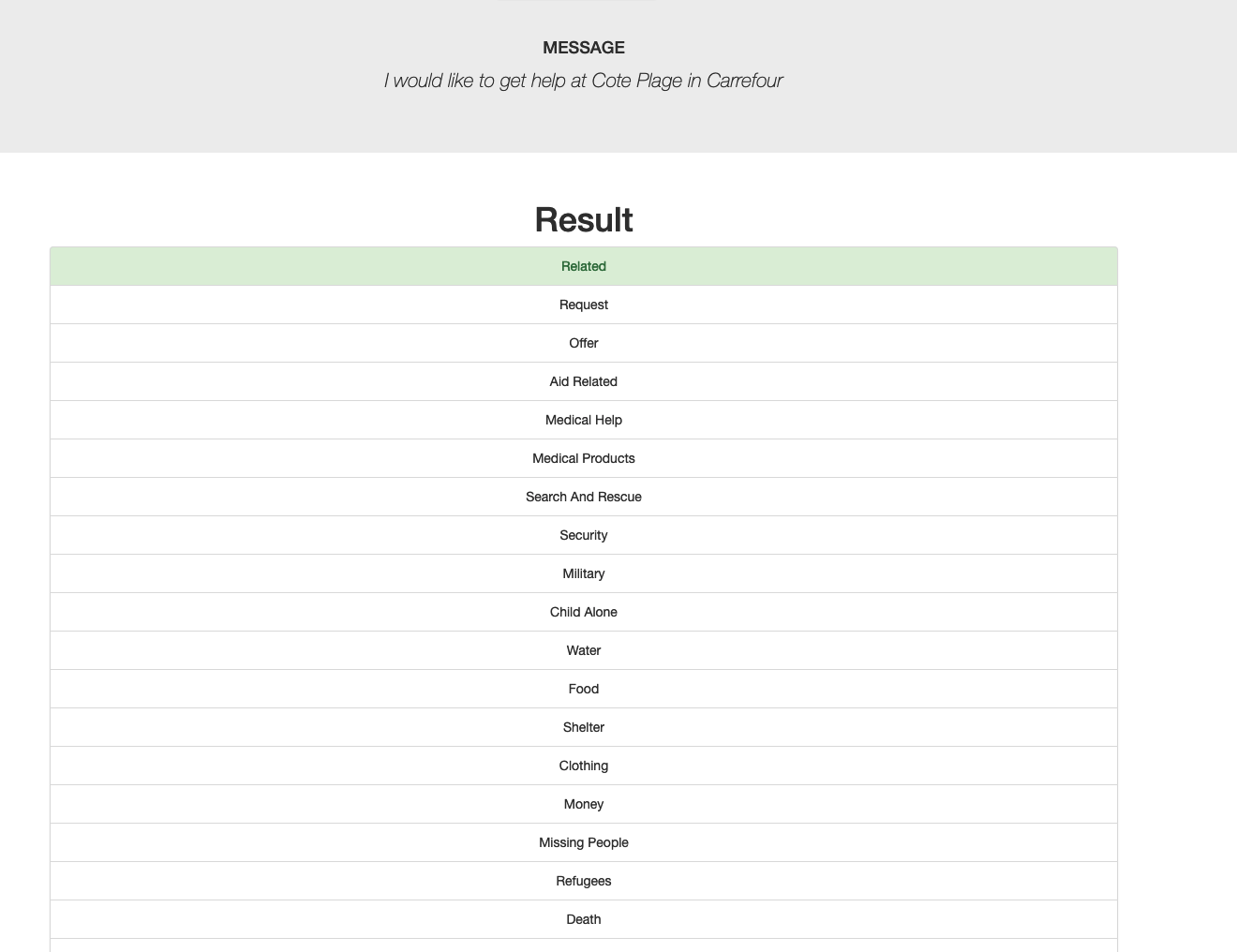

The web app shows several data visualizations that help in understanding the data set. You can also enter your own message (in English) and receive its classification in response.

- data

  a) /process_data.py: ETL pipeline that cleans and preprocesses the data and stores it in a SQLite database

  b) /disaster_messages.csv: real messages that were sent during disaster events

  c) /disaster_categories.csv: the 36 possible categories

- model

  a) /train_classifier.py: loads the data from the database, creates, trains, and tunes the classifier, and finally saves it to a pickle file

  b) /best_params.pkl: contains the tuned parameters

- app

  a) /run.py: runs the REST API for visualizations and message classification

  b) /wrangling_script/wrangle_data.py: data wrangling and data visualization

  c) /templates: all files needed for the frontend

Because the “Disaster Response Project” is a supervised learning problem with 36 pre-defined categories, we are dealing with a classification task. In this project, K-nearest neighbors is used as the classification algorithm.

K-nearest neighbors is a lazy learner: the algorithm does not learn a discriminative function from the training set but instead memorizes the training data. The major advantage of this approach is that new data points can be incorporated immediately. On the other hand, it incurs a high computational cost when classifying new samples (the computational complexity grows linearly with the number of samples in the data set). In addition, K-nearest neighbors requires a lot of storage space, since the training samples cannot be discarded (there is no actual training step in this approach).

The main idea of K-nearest neighbors is:

- Define k and a distance metric

- Find the k nearest neighbors of the new sample

- Classify the sample by majority vote
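The three steps above can be sketched in a few lines of plain Python. This is only a minimal illustration, not the code in train_classifier.py: the function name `knn_predict`, the toy 2-D points, and the labels are invented for the example, and Euclidean distance is assumed as the metric. In the actual project the inputs would be vectorized messages rather than hand-written coordinates.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Step 1: k is passed in; the distance metric is Euclidean (math.dist).
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Step 2: keep the k training samples closest to the query.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Step 3: the most common label among those neighbors wins.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data, invented for illustration only.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["water", "water", "water", "food", "food", "food"]

print(knn_predict(points, labels, query=(1.1, 1.0), k=3))  # water
```

Note that nothing is "trained" here: every prediction scans all stored samples, which is where the linear cost per query comes from.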

For this reason, two parameters are crucial for balancing between overfitting and underfitting: the value of k and the distance metric. Therefore, these two parameters are the ones that are tuned.
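To make the tuning idea concrete, here is a hand-rolled sketch that tries every combination of k and Minkowski order p and scores each with leave-one-out accuracy. It is a simplified stand-in for what a grid search does, not the project's GridSearchCV setup; all function names, the candidate values, and the toy data are assumptions made for this example.

```python
from collections import Counter
from itertools import product


def minkowski(a, b, p):
    """Minkowski distance of order p (p=1 Manhattan, p=2 Euclidean)."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def knn_predict(points, labels, query, k, p):
    """Majority vote among the k training points nearest to `query`."""
    ranked = sorted(zip(points, labels), key=lambda pair: minkowski(pair[0], query, p))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


def leave_one_out_accuracy(points, labels, k, p):
    """Fraction of samples classified correctly when each is held out in turn."""
    hits = 0
    for i, (query, truth) in enumerate(zip(points, labels)):
        rest_points = points[:i] + points[i + 1:]
        rest_labels = labels[:i] + labels[i + 1:]
        hits += knn_predict(rest_points, rest_labels, query, k, p) == truth
    return hits / len(points)


def grid_search(points, labels, k_values, p_values):
    """Return (best_score, best_k, best_p) over all (k, p) combinations."""
    scored = [
        (leave_one_out_accuracy(points, labels, k, p), k, p)
        for k, p in product(k_values, p_values)
    ]
    return max(scored, key=lambda item: item[0])


# Toy data, invented for illustration only.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["water", "water", "water", "food", "food", "food"]

best_score, best_k, best_p = grid_search(points, labels, k_values=[1, 3, 5], p_values=[1, 2])
print(best_score, best_k, best_p)
```

On this toy set, k=5 misclassifies every held-out sample because the vote is dominated by the other cluster, which illustrates how a k that is too large leads to underfitting.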

The most popular distance metrics are:

- Euclidean Distance: the length of the straight line between two data points in Euclidean space

- Manhattan Distance: the distance between two points or vectors A and B, defined as the sum of the absolute differences of their individual coordinates

- Minkowski Distance: a generalization of the Euclidean and Manhattan distances
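The relationship between the three metrics can be shown with a short sketch: the Minkowski distance of order p reduces to the Manhattan distance for p = 1 and to the Euclidean distance for p = 2. The function names and the example vectors below are chosen for illustration and are not taken from the project code.

```python
def minkowski_distance(a, b, p):
    """Minkowski distance of order p between equal-length vectors a and b."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def manhattan_distance(a, b):
    # Special case p = 1: sum of absolute coordinate differences.
    return minkowski_distance(a, b, p=1)


def euclidean_distance(a, b):
    # Special case p = 2: length of the straight line between a and b.
    return minkowski_distance(a, b, p=2)


a, b = (0.0, 0.0), (3.0, 4.0)
print(manhattan_distance(a, b))  # 7.0
print(euclidean_distance(a, b))  # 5.0
```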

Credit goes to Figure Eight, which provided the dataset with real messages and labels. Many thanks to Udacity for their contribution during the process.