Building a hand gesture recognition model and using it to identify hand gestures in real-time to trigger actions on a computer

The COVID-19 pandemic accelerated the adoption of several contactless Human-Computer Interaction (HCI) technologies, one of which is hand gesture control. Hand gesture-controlled applications are used across various industries, including healthcare, food services, entertainment, smartphones and automotive.

In this project, a hand gesture recognition model is trained to recognize static and dynamic hand gestures. The model predicts hand gestures in real time from webcam frames. Depending on the predicted gesture, the corresponding keystroke (keyboard shortcut) is sent to trigger an action on the computer.
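Because dynamic gestures span several frames, real-time prediction usually works on a rolling window of recent frames rather than on single images. The sketch below illustrates that idea with a fixed-length buffer. The names `ClipBuffer` and `predict_stream`, and the clip length, are hypothetical and not taken from this project. In practice the frames would come from the webcam (for example via OpenCV's `cv2.VideoCapture(0).read()`) and `predict` would wrap the trained model; here plain integers and a sum stand in for both.

```python
from collections import deque


class ClipBuffer:
    """Keeps the most recent `clip_length` frames (hypothetical helper)."""

    def __init__(self, clip_length=16):
        self.frames = deque(maxlen=clip_length)

    def push(self, frame):
        """Add a frame; return the current clip once the buffer is full."""
        self.frames.append(frame)
        if len(self.frames) == self.frames.maxlen:
            return list(self.frames)
        return None


def predict_stream(frames, predict, clip_length=16):
    """Run `predict` on every full sliding window of frames."""
    buffer = ClipBuffer(clip_length)
    predictions = []
    for frame in frames:
        clip = buffer.push(frame)
        if clip is not None:
            predictions.append(predict(clip))
    return predictions


# Stand-in data: integers instead of images, sum() instead of a model.
demo_predictions = predict_stream(range(5), predict=sum, clip_length=3)
print(demo_predictions)
```

No prediction is made until the buffer has filled, so the first few frames after start-up produce no output.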

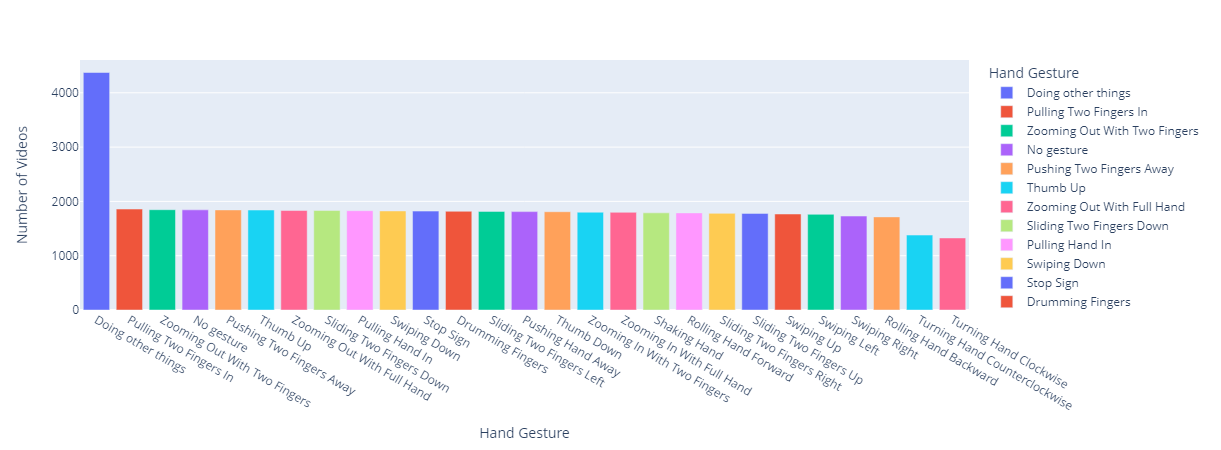

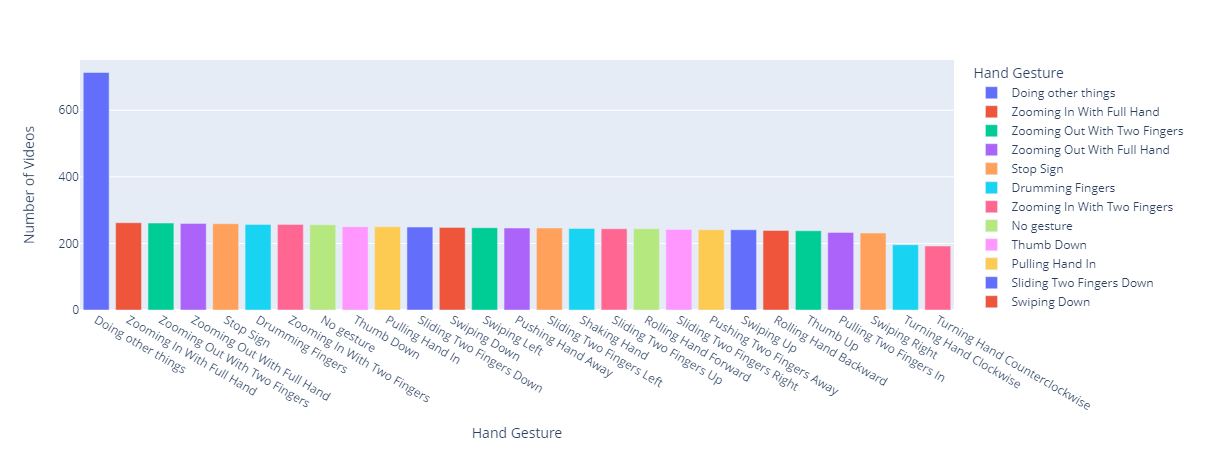

The dataset used is a subset of the 20BN-Jester dataset from Kaggle. 20BN-Jester is a large collection of labelled video clips of people performing hand gestures in front of a camera.

The full dataset consists of 148,092 video clips, each about 3 seconds long, covering 27 classes of hand gestures and totalling more than 5 million frames.

In this project, 10 classes of hand gestures have been selected to train the hand gesture recognition model.
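Selecting a subset of classes amounts to filtering the annotation file by label. The sketch below assumes the annotations are semicolon-separated `video_id;label` rows with no header, which should be checked against the downloaded files. The function name `filter_annotations` is hypothetical, and the class names in the example are illustrative only; they are not necessarily the 10 classes used in this project.

```python
import csv
import io


def filter_annotations(csv_text, selected_classes, delimiter=";"):
    """Return (video_id, label) pairs whose label is in selected_classes."""
    selected = set(selected_classes)
    reader = csv.reader(io.StringIO(csv_text), delimiter=delimiter)
    return [
        (row[0], row[1])
        for row in reader
        if len(row) >= 2 and row[1] in selected
    ]


# Tiny in-memory sample standing in for an annotation file.
sample = "1;Swiping Left\n2;Drumming Fingers\n3;Stop Sign\n4;Swiping Left\n"
subset = filter_annotations(sample, ["Swiping Left", "Stop Sign"])
print(subset)
```

The same function can be applied to the training and validation annotations separately so that both splits contain only the selected classes.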

Any action on a computer can be triggered as long as it is bound to a keyboard shortcut. For simplicity, this project is configured to trigger actions on YouTube, which has its own built-in keyboard shortcuts.
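One simple way to link gestures to shortcuts is a lookup table from predicted label to key. The sketch below is an assumption about how this could be wired up, not the project's actual configuration: the gesture-to-key pairs are illustrative, and `trigger_action` is a hypothetical helper. The keys `k`, `j`, `l` and `f` are YouTube's shortcuts for play/pause, rewind 10 seconds, forward 10 seconds and full screen. The key-sending function is passed in, so a library call such as `pyautogui.press` could be supplied in a real run; the example uses a list to record keys instead.

```python
# Illustrative mapping only; the project's own gesture-to-action pairs may differ.
GESTURE_TO_KEY = {
    "Stop Sign": "k",      # play / pause
    "Swiping Left": "j",   # rewind 10 seconds
    "Swiping Right": "l",  # forward 10 seconds
    "Thumb Up": "f",       # toggle full screen
}


def trigger_action(gesture, send_key, mapping=GESTURE_TO_KEY):
    """Send the key bound to `gesture`; return it, or None if unmapped."""
    key = mapping.get(gesture)
    if key is None:
        return None
    send_key(key)
    return key


# Record keys in a list instead of pressing them.
sent = []
trigger_action("Stop Sign", sent.append)
trigger_action("No gesture", sent.append)  # unmapped label: nothing is sent
print(sent)
```

Keeping the mapping in one dictionary means a different application can be targeted by swapping the table without touching the prediction code.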

The table below shows the hand gestures and the actions they trigger on YouTube.

- Data Exploration

- Data Extraction

  - Extract training and validation data of the selected classes from the dataset.

- Hyperparameter Tuning

  - Perform grid search to determine the optimal values for dropout and learning rate.

- Model Training

  - Build a 3D ResNet-101 model with the optimal hyperparameters.

  - Compile the model.

  - Train the model.

- Classification

  - Read frames from the webcam, predict the hand gestures in the frames using the model and send the corresponding keystrokes to trigger actions on the computer.
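The grid search in the hyperparameter tuning step can be sketched as an exhaustive loop over every dropout and learning rate pair. This is a minimal illustration, not the project's tuning code: `grid_search` and `toy_evaluate` are hypothetical names, the candidate values are made up, and `toy_evaluate` is a placeholder for a function that would build and train the model with the given settings and return a validation score.

```python
from itertools import product


def grid_search(evaluate, dropouts, learning_rates):
    """Try every (dropout, learning_rate) pair and keep the best score."""
    best = None
    for dropout, learning_rate in product(dropouts, learning_rates):
        score = evaluate(dropout, learning_rate)
        if best is None or score > best["score"]:
            best = {
                "score": score,
                "dropout": dropout,
                "learning_rate": learning_rate,
            }
    if best is None:
        raise ValueError("the grid is empty")
    return best


def toy_evaluate(dropout, learning_rate):
    """Placeholder score that peaks at dropout=0.5, learning_rate=1e-3."""
    return -abs(dropout - 0.5) - abs(learning_rate - 1e-3)


best = grid_search(toy_evaluate, dropouts=[0.2, 0.5], learning_rates=[1e-2, 1e-3])
print(best["dropout"], best["learning_rate"])
```

The cost grows with the product of the two candidate lists, since each pair requires a full training run, so short lists are the practical choice for a model of this size.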

- Python 3.7.9 or above

`pip install -r requirements.txt`

- 20bn-jester - Jester Dataset V1 for Hand Gesture Recognition by toxicmender on Kaggle

- The Jester Dataset: A Large-Scale Video Dataset of Human Gestures by Joanna Materzynska, Guillaume Berger, Ingo Bax and Roland Memisevic

- Create Deep Learning Computer Vision Apps using Python 2020 by Coding Cafe on Udemy

- 3D ResNet implementation by JihongJu