A demonstration of how little effort it takes to use Rust instead of Python for serverless projects such as an API Gateway with Lambda functions.


As an example, we are creating a backend API that models a Sheep Shed.

The Sheep Shed is housing... well... Sheep. Each Sheep has a Tattoo, which is unique: it is a functional error for two sheep to share the same tattoo. A Sheep also has a Weight, which is important down the road.
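To make the uniqueness rule concrete, here is a minimal in-memory sketch. It is not the project's code (the demo stores sheep in DynamoDB), and `Sheep`, `SheepShed` and `ShedError` are hypothetical names. Keying a `HashMap` by tattoo lets the shed reject a second sheep with the same tattoo instead of silently overwriting the first one.

```rust
use std::collections::hash_map::Entry;
use std::collections::HashMap;

/// A sheep, identified by its unique tattoo (hypothetical model).
#[derive(Debug, Clone, PartialEq)]
pub struct Sheep {
    pub tattoo: String,
    /// Weight in micrograms.
    pub weight_ug: u64,
}

#[derive(Debug, PartialEq)]
pub enum ShedError {
    /// Another sheep already carries this tattoo.
    DuplicateTattoo(String),
}

/// In-memory shed keyed by tattoo, so a tattoo can never be shared.
#[derive(Debug, Default)]
pub struct SheepShed {
    sheep: HashMap<String, Sheep>,
}

impl SheepShed {
    /// Adds a sheep, refusing (rather than overwriting) a duplicate tattoo.
    pub fn insert(&mut self, sheep: Sheep) -> Result<(), ShedError> {
        match self.sheep.entry(sheep.tattoo.clone()) {
            Entry::Occupied(_) => Err(ShedError::DuplicateTattoo(sheep.tattoo)),
            Entry::Vacant(slot) => {
                slot.insert(sheep);
                Ok(())
            }
        }
    }

    /// Number of sheep currently in the shed.
    pub fn count(&self) -> usize {
        self.sheep.len()
    }
}
```

In DynamoDB terms, the usual way to get the same guarantee is a `PutItem` guarded by a condition expression such as `attribute_not_exists` on the key attribute; whether the demo's lambdas do exactly that is not covered here.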

The Sheep Shed obviously has a Dog that can, when asked, count the sheep in the shed.

The Sheep Shed unfortunately has a hungry Wolf lurking around, who wants to eat the sheep. This wolf is quite strange: he suffers from Obsessive-Compulsive Disorder. Even starving, he can only eat a Sheep if its Weight expressed in micrograms is a prime number. And of course, if multiple sheep comply with his OCD, he wants the heaviest one!
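The wolf's rule can be sketched as follows, assuming weights are `u64` micrograms. With realistic weights (a 50 kg sheep weighs 5×10^10 µg), sieving all the way up to the heaviest weight would be impractical, so this sketch sieves primes only up to the square root of the heaviest weight and uses them for trial division. This is one plausible approach, not necessarily what the demo's lambdas do; `choose_prey` and the `(tattoo, weight)` tuples are hypothetical.

```rust
/// Sieve of Eratosthenes: returns every prime <= `limit`.
fn primes_up_to(limit: u64) -> Vec<u64> {
    let limit = limit as usize;
    if limit < 2 {
        return Vec::new();
    }
    let mut is_prime = vec![true; limit + 1];
    is_prime[0] = false;
    is_prime[1] = false;
    let mut i = 2;
    while i * i <= limit {
        if is_prime[i] {
            // Every multiple of i from i*i upwards is composite.
            let mut j = i * i;
            while j <= limit {
                is_prime[j] = false;
                j += i;
            }
        }
        i += 1;
    }
    (0..=limit).filter(|&k| is_prime[k]).map(|k| k as u64).collect()
}

/// Floor of the square root, corrected for floating-point rounding.
fn isqrt(n: u64) -> u64 {
    let mut r = (n as f64).sqrt() as u64;
    while (r as u128) * (r as u128) > n as u128 {
        r -= 1;
    }
    while ((r + 1) as u128) * ((r + 1) as u128) <= n as u128 {
        r += 1;
    }
    r
}

/// Trial division; `primes` must contain every prime <= isqrt(n).
fn is_prime(n: u64, primes: &[u64]) -> bool {
    if n < 2 {
        return false;
    }
    for &p in primes {
        if p * p > n {
            break; // no divisor up to sqrt(n): n is prime
        }
        if n % p == 0 {
            return false;
        }
    }
    true
}

/// Returns the heaviest sheep whose weight (in micrograms) is prime, if any.
fn choose_prey(sheep: &[(String, u64)]) -> Option<&(String, u64)> {
    let heaviest = sheep.iter().map(|s| s.1).max()?;
    let primes = primes_up_to(isqrt(heaviest));
    sheep
        .iter()
        .filter(|s| is_prime(s.1, &primes))
        .max_by_key(|s| s.1)
}
```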

Finally, the Sheep Shed has a resident Cat. The cat does not care about the sheep, shed, wolf or dog. He is interested only in his own business. This is a savant cat that recently took an interest in a 2-ary variant of the Ackermann function. The only way to currently get his attention is to ask him about it.
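Assuming the cat's "2-ary variant" is the usual Ackermann-Péter function, a direct recursive translation looks like the sketch below (the function name is illustrative, not taken from the project). It involves no I/O at all, which is what makes the cat a pure-compute benchmark.

```rust
/// Ackermann-Péter function:
///   A(0, n) = n + 1
///   A(m, 0) = A(m - 1, 1)
///   A(m, n) = A(m - 1, A(m, n - 1))
fn ackermann(m: u64, n: u64) -> u64 {
    match (m, n) {
        (0, n) => n + 1,
        (m, 0) => ackermann(m - 1, 1),
        (m, n) => ackermann(m - 1, ackermann(m, n - 1)),
    }
}
```

With the `invoke_cat.sh` defaults (m=3, n=8) this returns A(3, 8) = 2^11 - 3 = 2045. The recursion depth grows with the result, so much larger inputs would need an explicit stack instead of native recursion.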

The Sheep Shed is accessible through an Amazon API Gateway exposing 4 paths:

- `POST /sheeps/<Tattoo>` to add a sheep in the shed with the given `Tattoo` and a random weight generated by the API
- `GET /dog` to retrieve the current sheep count
- `GET /cat?m=<m>&n=<n>` to ask the cat to compute the Ackermann function for the given `m` and `n`
- `DELETE /wolf` to trigger a raid on the shed by our OCD wolf
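For client-side tooling, the four routes can be modeled as a Rust enum. This is a hypothetical sketch (`ShedRoute` and its methods are not part of the project): it only builds the HTTP method and URL of each call and, to stay short, does not percent-encode the tattoo.

```rust
/// Hypothetical model of the four routes exposed by the Sheep Shed API.
#[derive(Debug)]
pub enum ShedRoute {
    PostSheep { tattoo: String },
    GetDog,
    GetCat { m: u64, n: u64 },
    DeleteWolf,
}

impl ShedRoute {
    /// HTTP method of the route.
    pub fn method(&self) -> &'static str {
        match self {
            ShedRoute::PostSheep { .. } => "POST",
            ShedRoute::GetDog | ShedRoute::GetCat { .. } => "GET",
            ShedRoute::DeleteWolf => "DELETE",
        }
    }

    /// Full URL, given a stage URL such as
    /// "https://<api-id>.execute-api.<region>.amazonaws.com/v1/".
    pub fn url(&self, stage_url: &str) -> String {
        // Avoid a double slash when the stage URL ends with '/'.
        let base = stage_url.trim_end_matches('/');
        match self {
            ShedRoute::PostSheep { tattoo } => format!("{base}/sheeps/{tattoo}"),
            ShedRoute::GetDog => format!("{base}/dog"),
            ShedRoute::GetCat { m, n } => format!("{base}/cat?m={m}&n={n}"),
            ShedRoute::DeleteWolf => format!("{base}/wolf"),
        }
    }
}
```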

Each of these paths has its own AWS Lambda function.

The backend is an Amazon DynamoDB table.

OK, that's just a demo, but the crux of it is:

- The Dog performs a task that only requires scanning DynamoDB, with virtually no operational overhead other than driving the scan pagination

- The sheep insertion generates a random number, but is otherwise almost entirely tied to a single DynamoDB interaction (PutItem)

- The Wolf requires not only scanning the entire DynamoDB table, but also computing prime numbers to efficiently test whether the weight of each sheep is itself prime; then, if a suitable sheep is found, he eats it (DeleteItem)

- The Cat performs a purely algorithmic task with no I/O required.
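"Driving the scan pagination" boils down to a loop. A DynamoDB `Scan` returns at most 1 MB of data per call, plus a `LastEvaluatedKey` when more items remain; the caller passes that key back as `ExclusiveStartKey` and sums each page's `Count` until no key comes back. The sketch below shows only that loop: `fake_scan` is a hypothetical in-memory stand-in for the real call (the AWS SDK is deliberately left out), and it uses an item-count page size instead of the 1 MB limit.

```rust
/// One page returned by a (simulated) Scan call.
pub struct ScanPage {
    /// Number of items in this page, like the `Count` field of a Scan response.
    pub count: usize,
    /// Where to resume, like `LastEvaluatedKey`; `None` on the last page.
    pub last_evaluated_key: Option<usize>,
}

/// Hypothetical stand-in for Scan: returns at most `page_size` items,
/// starting right after `exclusive_start_key` (an index into `table`).
pub fn fake_scan(table: &[u64], exclusive_start_key: Option<usize>, page_size: usize) -> ScanPage {
    assert!(page_size > 0, "page_size must be positive");
    let from = exclusive_start_key.map_or(0, |key| key + 1);
    let to = (from + page_size).min(table.len());
    ScanPage {
        count: to - from,
        last_evaluated_key: if to < table.len() { Some(to - 1) } else { None },
    }
}

/// Keeps scanning until no LastEvaluatedKey is returned, summing page counts.
pub fn count_sheep<F>(mut scan: F) -> usize
where
    F: FnMut(Option<usize>) -> ScanPage,
{
    let mut total = 0;
    let mut exclusive_start_key = None;
    loop {
        let page = scan(exclusive_start_key);
        total += page.count;
        match page.last_evaluated_key {
            Some(key) => exclusive_start_key = Some(key),
            None => return total,
        }
    }
}
```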

As a result, we can compare how large Rust's advantage over Python is in each of these situations.

NB1: The DynamoDB table layout is intentionally bad: it would be possible to create indexes to drastically accelerate the search for a suitable sheep for the wolf, but that's not the subject of this demonstration.

NB2: Initially, I thought that activities tied to DynamoDB operations (network I/O) would greatly reduce Rust's advantage over Python (because packets don't travel any faster between Lambda and DynamoDB depending on the language used). But it turns out that even for "pure" I/O-bound activities, Rust lambdas are crushing Python lambdas...

You can easily deploy the demo in your own AWS account in less than 15 minutes. The cost of deploying and load testing will be less than $1: CodePipeline/CodeBuild will stay well within their Free Tier; API Gateway, Lambda and DynamoDB are all in pay-per-request mode at an aggregated rate of ~$5/million requests, and you will make a few tens of thousands of requests (it will cost you pennies).
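As a back-of-the-envelope check, take 30,000 requests as an illustrative volume (an assumed figure within the "few tens of thousands" range) at the aggregated rate of ~$5 per million requests:

$$
30\,000 \times \frac{\$5}{1\,000\,000} = \$0.15
$$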

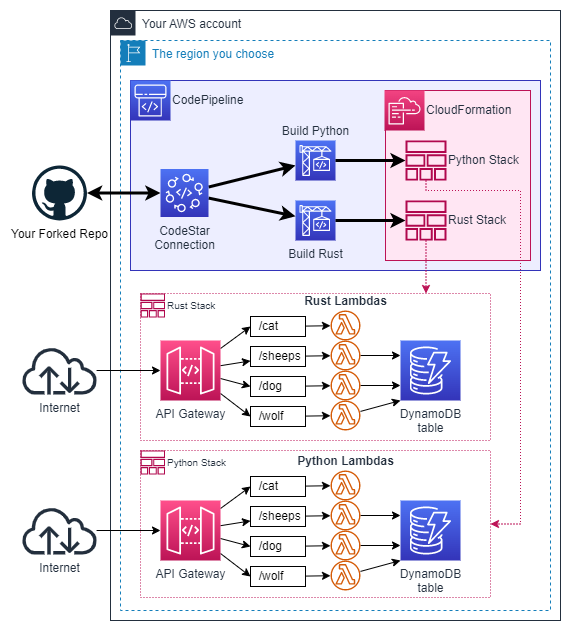

Here is an overview of what will be deployed:

This PNG can be edited using Draw.io.

You need an existing AWS account, with permissions to use the following services:

- AWS CodeStar Connections

- AWS CloudFormation

- AWS CodePipeline

- Amazon Simple Storage Service (S3)

- AWS CodeBuild

- AWS Lambda

- Amazon API Gateway

- Amazon DynamoDB

- AWS IAM (roles will be created for Lambda, CodeBuild, CodePipeline and CloudFormation)

- Amazon CloudWatch Logs

You also need a GitHub account, as the deployment method I propose here relies on you being able to fork this repository (CodePipeline only accepts source GitHub repositories that you own, for obvious security reasons).

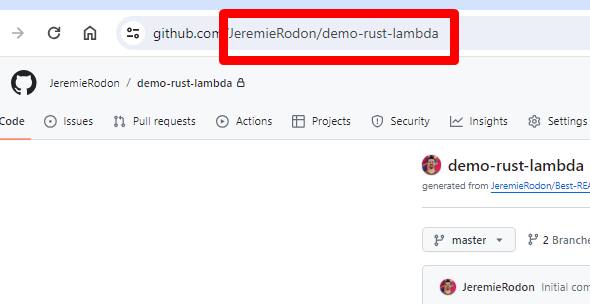

Fork this repository into your own GitHub account. Copy the ID of the new repository (`<UserName>/demo-rust-lambda`); you will need it later. Be mindful of the case.

The simplest technique is to copy it from the browser URL:

Throughout the following instructions, always make sure your AWS Console is set to the AWS Region you intend to use. You can use any region you like; just stick to it.

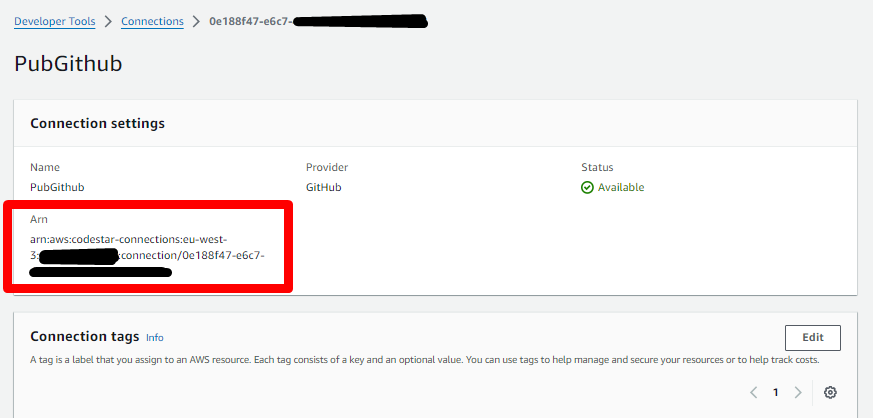

This step is only necessary if you don't already have a CodeStar Connection to your GitHub account. If you do, you can reuse it: just retrieve its ARN and keep it on hand.

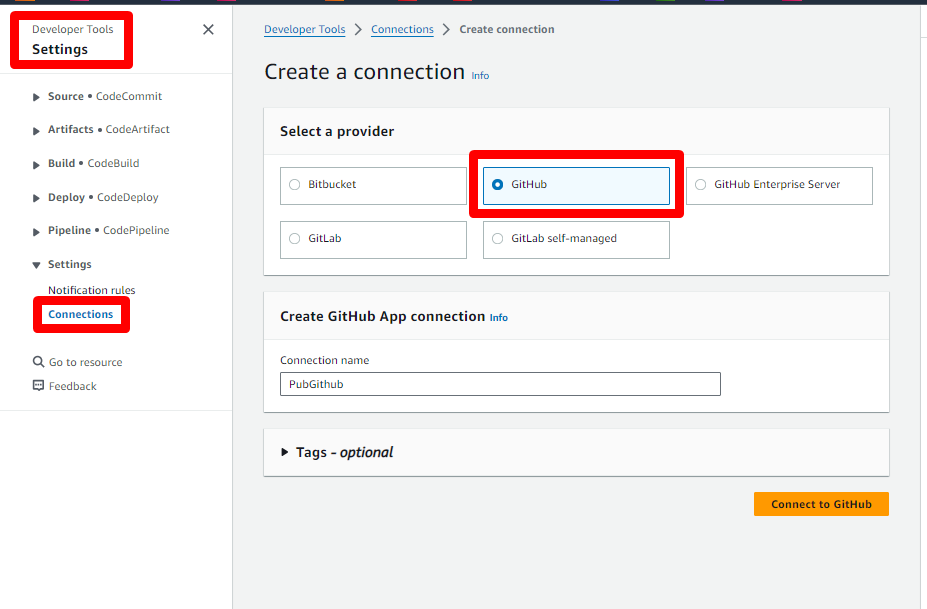

- Go to the CodePipeline console, select Settings > Connections, use the GitHub provider, choose any name you like and click Connect to GitHub.

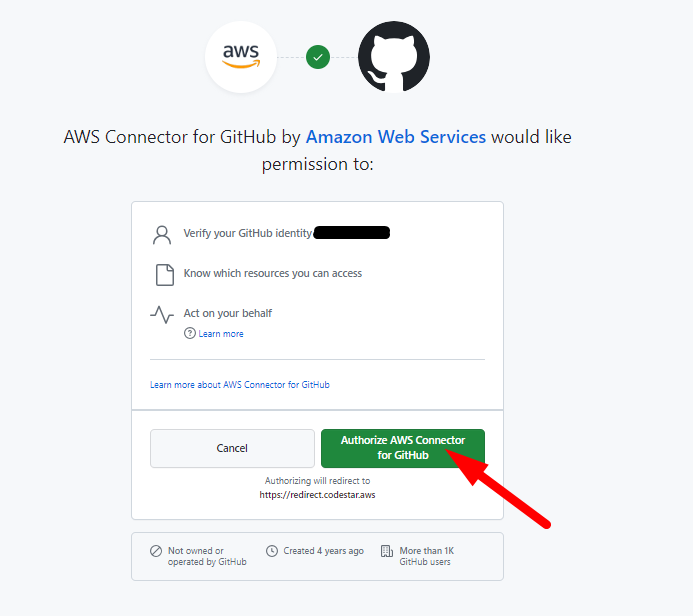

- Assuming you were already logged in to GitHub, it will ask whether you consent to letting AWS do stuff in your GitHub account. Yes, you do.

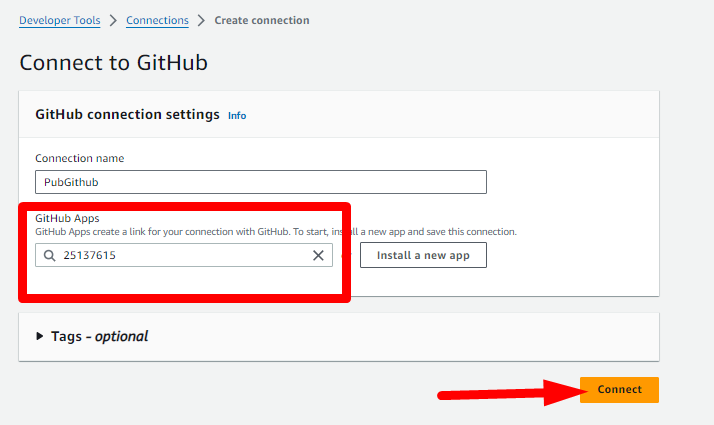

- You will be brought back to the AWS Console. Choose the GitHub App that was created for you in the list (don't mind the number on the screenshot, yours will be different), then click Connect.

- The connection is now created; copy its ARN somewhere, as you will need it later.

Now you are ready to deploy: download the CloudFormation template `ci-template.yml` from the link or from your newly forked repository if you prefer.

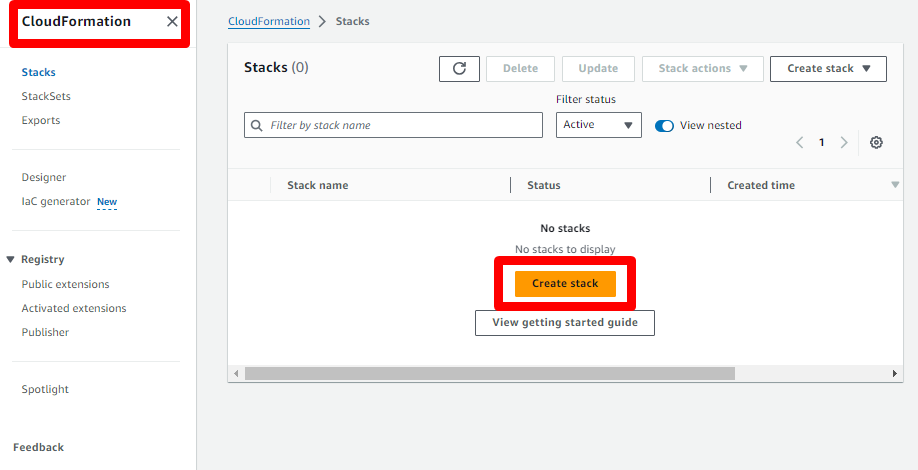

- Go to the CloudFormation console and create a new stack.

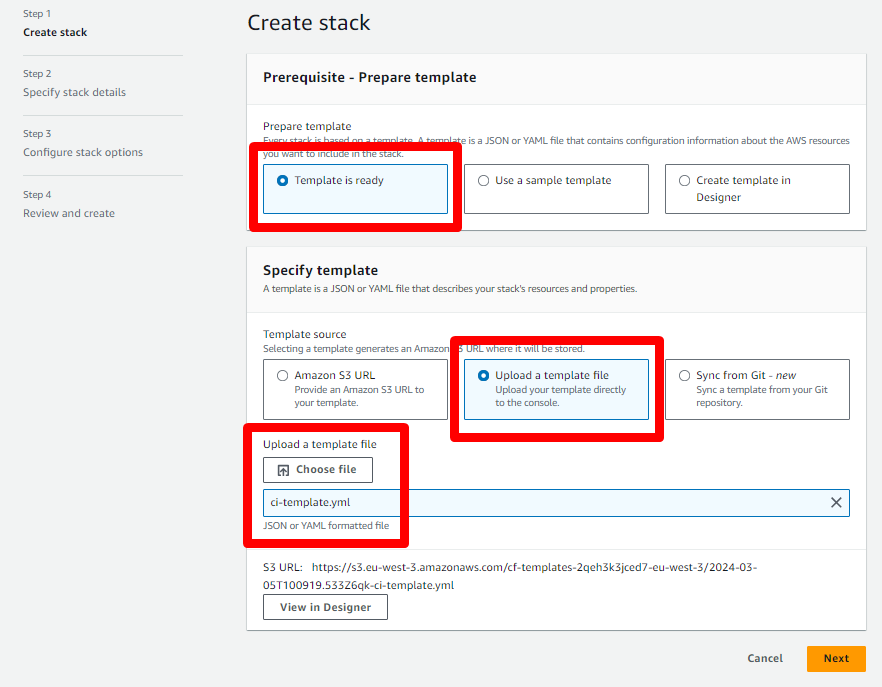

- Ensure Template is ready and Upload a template file are selected, then specify the `ci-template.yml` template that you just downloaded.

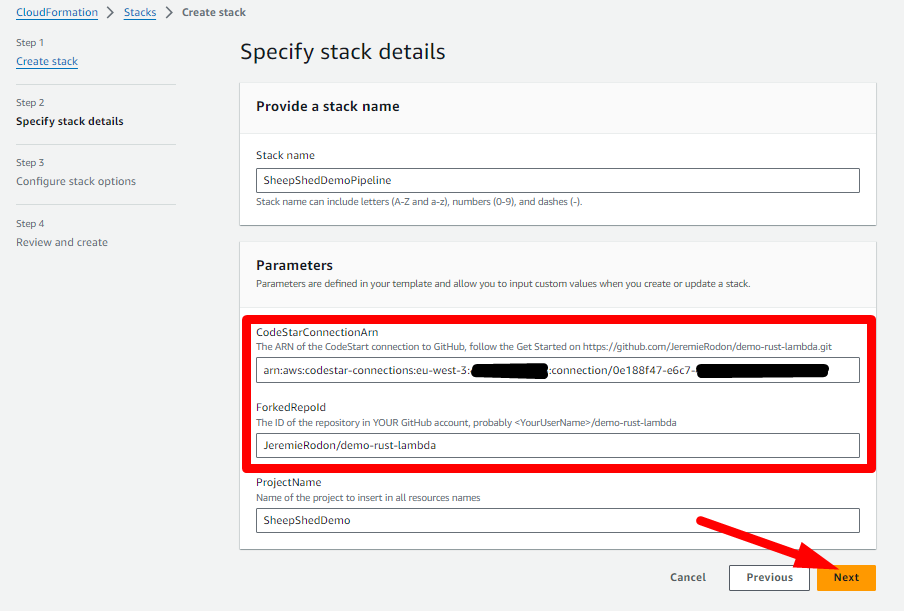

- Choose any stack name you like, then set your CodeStar Connection ARN (previously copied) in `CodeStarConnectionArn` and your forked repository ID in `ForkedRepoId`.

-

Skip the Configure stack options, leaving everything unchanged

-

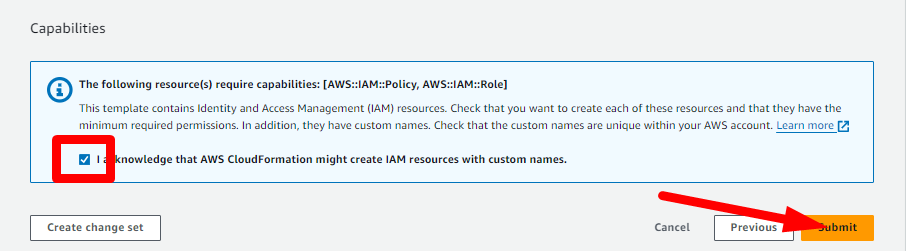

At the Review and create stage, acknowledge that CloudFormation will create roles and Submit.

At this point, everything will roll on its own. The full deployment should take ~8 minutes, largely due to the rather long first compilation of the Rust lambdas.

If you wish to follow what is happening, keep the CloudFormation tab open in your browser and open another one on the CodePipeline console.

To clean up the demo resources, you need to remove the CloudFormation stacks IN ORDER:

- First, remove the two API stacks named `<ProjectName>-rust-api` and `<ProjectName>-python-api`

- /!\ Wait until both are successfully removed /!\

- Then remove the CICD stack (the one you created yourself)

You MUST follow that order of operations because the CICD stack owns the IAM Role used by the other two stacks to perform their operations; destroying the CICD stack first would therefore prevent any further operation on the API stacks, including their deletion.

Removing the CloudFormation stacks correctly will clean up every resource created for this demo; no further cleanup is needed.

The utils folder of the repository contains scripts to generate traffic on each API. The easiest way is to use execute_default_benches.sh:

```sh
cd utils
./execute_default_benches.sh --rust-api <RUST_API_URL> --python-api <PYTHON_API_URL>
```

You can find the URL of each API (`RUST_API_URL` and `PYTHON_API_URL` in the command above) in the Outputs section of the respective CloudFormation stacks (the API stacks, not the CICD one) or directly in the API Gateway console.

It will execute a bunch of API calls (~4k/API) and typically takes ~10 minutes to run, depending on your internet connection and latency to the APIs.

For reference, here is an execution report with my APIs deployed in the Paris region (as I live there...):

```sh
./execute_default_benches.sh \
  --rust-api https://iv32tbdyt0.execute-api.eu-west-3.amazonaws.com/v1/ \
  --python-api https://92jyb0j7c8.execute-api.eu-west-3.amazonaws.com/v1/
```

It outputs:

```
./invoke_cat.sh https://92jyb0j7c8.execute-api.eu-west-3.amazonaws.com/v1/
Calls took 59432ms
./invoke_cat.sh https://iv32tbdyt0.execute-api.eu-west-3.amazonaws.com/v1/
Calls took 7436ms
./insert_sheeps.sh https://92jyb0j7c8.execute-api.eu-west-3.amazonaws.com/v1/
Insertion took 10227ms
./insert_sheeps.sh https://iv32tbdyt0.execute-api.eu-west-3.amazonaws.com/v1/
Insertion took 8363ms
./invoke_dog.sh https://92jyb0j7c8.execute-api.eu-west-3.amazonaws.com/v1/
Calls took 11162ms
./invoke_dog.sh https://iv32tbdyt0.execute-api.eu-west-3.amazonaws.com/v1/
Calls took 8823ms
./invoke_wolf.sh https://92jyb0j7c8.execute-api.eu-west-3.amazonaws.com/v1/
Calls took 216667ms
./invoke_wolf.sh https://iv32tbdyt0.execute-api.eu-west-3.amazonaws.com/v1/
Calls took 15917ms
Done.
```

Of course, you can also play with the individual scripts in the `utils` folder; just invoke them with `--help` to see what you can do with them:

```
./invoke_cat.sh --help
Usage: ./invoke_cat.sh [<OPTIONS>] <API_URL>

Repeatedly call GET <API_URL>/cat?m=<m>&n=<n> with m=3 and n=8 unless overritten

-p|--parallel <task_count> The number of concurrent task to use. (Default: 100)
-c|--call-count <count> The number of call to make. (Default: 1000)
-m <integer> The 'm' number for the Ackermann algorithm. (Default: 3)
-n <integer> The 'n' number for the Ackermann algorithm. (Default: 8)

OPTIONS:
-h|--help Show this help
```

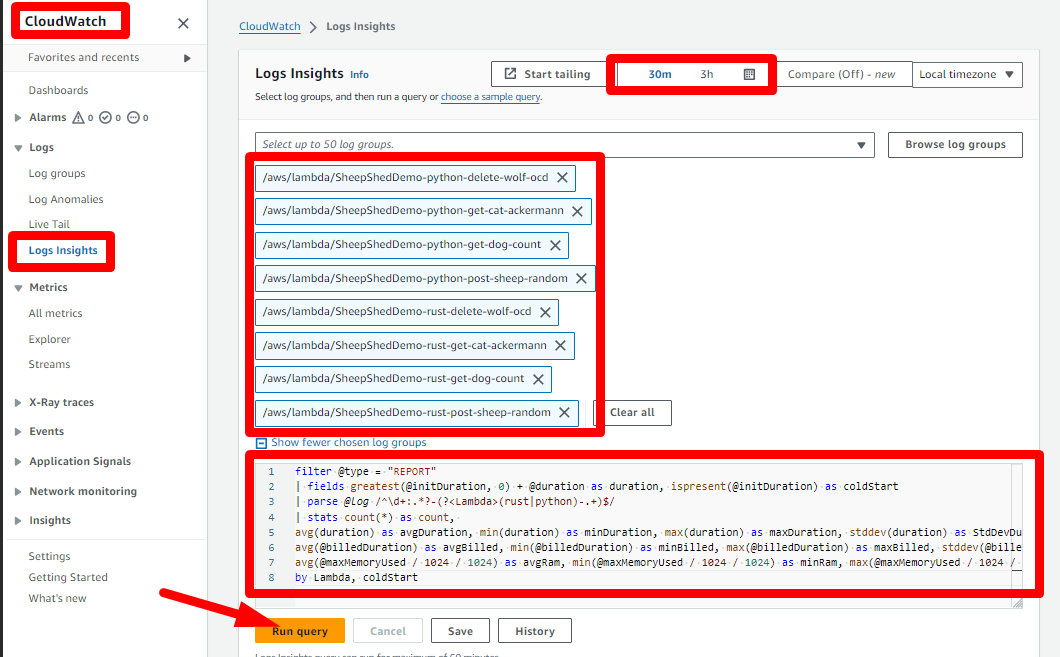

After you have generated load, you can compare the performance of the lambdas using CloudWatch Logs Insights.

Go to the CloudWatch Logs Insights console, set the date/time range appropriately, select the 8 log groups of our Lambdas (4 Rust, 4 Python) and enter the following query:

```
filter @type = "REPORT"
| fields greatest(@initDuration, 0) + @duration as duration, ispresent(@initDuration) as coldStart
| parse @log /^\d+:.*?-(?<Lambda>(rust|python)-.+)$/
| stats count(*) as count,
    avg(duration) as avgDuration, min(duration) as minDuration, max(duration) as maxDuration, stddev(duration) as StdDevDuration,
    avg(@billedDuration) as avgBilled, min(@billedDuration) as minBilled, max(@billedDuration) as maxBilled, stddev(@billedDuration) as StdDevBilled,
    avg(@maxMemoryUsed / 1024 / 1024) as avgRam, min(@maxMemoryUsed / 1024 / 1024) as minRam, max(@maxMemoryUsed / 1024 / 1024) as maxRam, stddev(@maxMemoryUsed / 1024 / 1024) as StdDevRam
  by Lambda, coldStart
```

This query gives you the average, min, max and standard deviation for 3 metrics: duration, billed duration and memory used. Results are grouped by Lambda function and split between cold-start and non-cold-start runs.
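To spell out what the query computes, here is a hypothetical Rust re-implementation of its core over already-parsed `REPORT` records: each invocation's duration includes the init duration when there is one, the presence of an init duration marks a cold start, and results are grouped by (Lambda, cold start). The `Report` struct, the `@log` format used in the test and the `lambda_name` helper (an approximation of the `parse` regex) are assumptions; standard deviation and the billed/RAM metrics are left out for brevity.

```rust
use std::collections::BTreeMap;

/// Hypothetical parsed form of one Lambda `REPORT` log line.
pub struct Report {
    /// Mirrors `@log`, assumed to look like "<account-id>:<log-group-name>".
    pub log: String,
    pub duration_ms: f64,
    /// Only present on cold starts, like `@initDuration`.
    pub init_duration_ms: Option<f64>,
}

#[derive(Debug, PartialEq)]
pub struct Stats {
    pub count: usize,
    pub avg: f64,
    pub min: f64,
    pub max: f64,
}

/// Approximates `parse @log /^\d+:.*?-(?<Lambda>(rust|python)-.+)$/`:
/// keeps what follows the first "-rust-" or "-python-" marker, minus the dash.
fn lambda_name(log: &str) -> Option<&str> {
    let pos = ["-rust-", "-python-"]
        .iter()
        .filter_map(|&marker| log.find(marker))
        .min()?;
    Some(&log[pos + 1..])
}

/// Groups reports by (Lambda name, cold start) and computes count/avg/min/max.
pub fn aggregate(reports: &[Report]) -> BTreeMap<(String, bool), Stats> {
    let mut groups: BTreeMap<(String, bool), Vec<f64>> = BTreeMap::new();
    for r in reports {
        let name = match lambda_name(&r.log) {
            Some(name) => name,
            None => continue, // simplification: skip lines we cannot attribute
        };
        // duration = greatest(@initDuration, 0) + @duration
        let duration = r.init_duration_ms.unwrap_or(0.0).max(0.0) + r.duration_ms;
        // coldStart = ispresent(@initDuration)
        let cold_start = r.init_duration_ms.is_some();
        groups.entry((name.to_string(), cold_start)).or_default().push(duration);
    }
    groups
        .into_iter()
        .map(|(key, durations)| {
            let count = durations.len();
            let sum: f64 = durations.iter().sum();
            let min = durations.iter().copied().fold(f64::INFINITY, f64::min);
            let max = durations.iter().copied().fold(f64::NEG_INFINITY, f64::max);
            (key, Stats { count, avg: sum / count as f64, min, max })
        })
        .collect()
}
```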

And here are the results yielded by my tests (Duration: ms, Billed: ms, Ram: MB; StdDev removed for brevity):

| Lambda | coldStart | count | avgDuration | minDuration | maxDuration | avgBilled | minBilled | maxBilled | avgRam | minRam | maxRam |
|---|---|---|---|---|---|---|---|---|---|---|---|
| rust-delete-wolf-ocd | no | 1238 | 88.6358 | 32.82 | 215.54 | 89.147 | 33 | 216 | 27.35 | 21.9345 | 30.5176 |
| rust-delete-wolf-ocd | yes | 70 | 317.8614 | 294.94 | 342.62 | 318.3429 | 295 | 343 | 23.5285 | 21.9345 | 25.7492 |
| python-delete-wolf-ocd | no | 2374 | 4788.6655 | 2441.47 | 7526.53 | 4789.1592 | 2442 | 7527 | 90.7316 | 82.016 | 100.1358 |
| python-delete-wolf-ocd | yes | 105 | 9181.7377 | 4396.71 | 9578.59 | 8914.0762 | 4135 | 9305 | 79.927 | 78.2013 | 84.877 |
| rust-get-dog-count | no | 964 | 20.0259 | 12.26 | 64.98 | 20.5 | 13 | 65 | 25.0696 | 20.9808 | 28.6102 |
| rust-get-dog-count | yes | 36 | 146.7453 | 122.48 | 182.16 | 147.2778 | 123 | 183 | 22.8352 | 20.9808 | 26.7029 |
| python-get-dog-count | no | 900 | 643.4647 | 583.33 | 733.75 | 643.9767 | 584 | 734 | 77.2656 | 74.3866 | 82.016 |
| python-get-dog-count | yes | 100 | 2363.8496 | 2286.13 | 2627.62 | 2108.63 | 2033 | 2359 | 75.3021 | 74.3866 | 78.2013 |
| rust-post-sheep-random | no | 955 | 9.5254 | 4.25 | 35.8 | 10 | 5 | 36 | 23.4085 | 20.9808 | 27.6566 |
| rust-post-sheep-random | yes | 45 | 137.026 | 121.4 | 185.69 | 137.5111 | 122 | 186 | 21.9133 | 20.0272 | 26.7029 |
| python-post-sheep-random | no | 900 | 590.7037 | 533.85 | 731.13 | 591.2133 | 534 | 732 | 76.8269 | 74.3866 | 82.016 |
| python-post-sheep-random | yes | 100 | 2369.7038 | 2200.8 | 2522.84 | 2099.04 | 1942 | 2261 | 75.1877 | 73.4329 | 80.1086 |
| rust-get-cat-ackermann | no | 954 | 127.1402 | 91.07 | 456.5 | 127.6342 | 92 | 457 | 16.3754 | 14.3051 | 20.9808 |
| rust-get-cat-ackermann | yes | 46 | 159.0285 | 144.02 | 176.91 | 159.5435 | 145 | 177 | 15.0929 | 14.3051 | 20.0272 |
| python-get-cat-ackermann | no | 896 | 5771.6155 | 5708.25 | 6035.09 | 5772.1138 | 5709 | 6036 | 33.6607 | 32.4249 | 39.1006 |
| python-get-cat-ackermann | yes | 104 | 5855.8304 | 5807.61 | 6195.08 | 5776.8269 | 5735 | 6119 | 32.755 | 31.4713 | 35.2859 |

Kind of speaks for itself, right?
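To put a number on it, this small sketch divides the Python average warm duration by the Rust one for each Lambda, using the `coldStart = no` rows of the results table. These are ratios of averages from a single test run, not a general benchmark; the constant and function names are illustrative.

```rust
/// Average warm (`coldStart = no`) durations in ms, copied from the table:
/// (Lambda, Rust avgDuration, Python avgDuration).
const WARM_AVG_MS: [(&str, f64, f64); 4] = [
    ("delete-wolf-ocd", 88.6358, 4788.6655),
    ("get-dog-count", 20.0259, 643.4647),
    ("post-sheep-random", 9.5254, 590.7037),
    ("get-cat-ackermann", 127.1402, 5771.6155),
];

/// Python-to-Rust ratio of average warm durations, per Lambda.
fn warm_ratios() -> Vec<(&'static str, f64)> {
    WARM_AVG_MS
        .iter()
        .map(|&(name, rust_ms, python_ms)| (name, python_ms / rust_ms))
        .collect()
}
```

On these figures, warm Python invocations take roughly 32 (Dog) to 62 (sheep insertion) times as long as their Rust counterparts.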

Distributed under the MIT License. See LICENSE.txt for more information.

Jérémie RODON

Project Link: https://github.com/JeremieRodon/demo-rust-lambda