What is SAHI (Slicing Aided Hyper Inference)? See https://docs.ultralytics.com/guides/sahi-tiled-inference/

This repo contains not only a SAHI inference implementation but also an evaluation of the results with mAP50, ... (standard metrics).

If `imgsz = None` in the `main()` function:

- prediction will be done at the original image size

- validation will be done at the original image size (batch size is forced to 1)

This lets you see whether SAHI inference helps in your specific case.

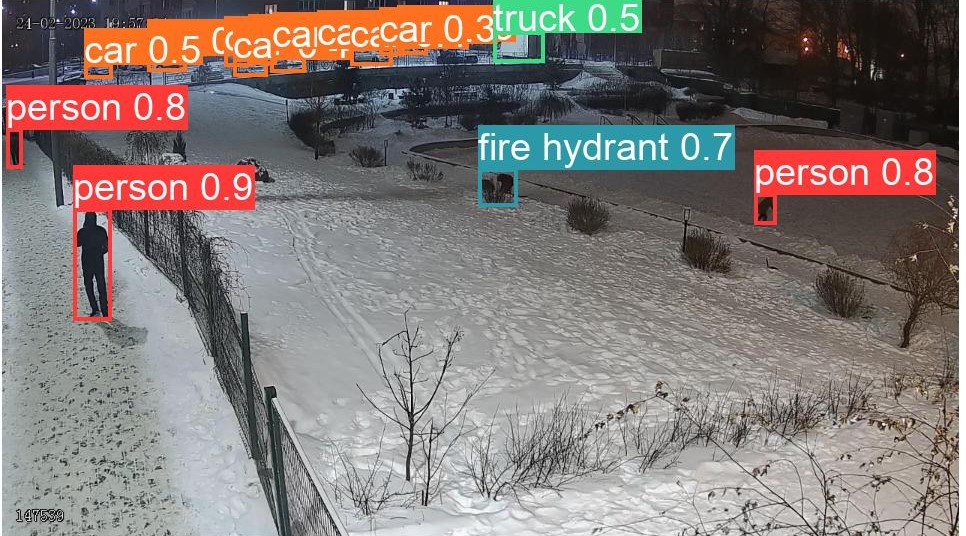

| SAHI | No SAHI |
|---|---|
| more distant cars detected | standard detections |
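SAHI runs the detector on each overlapping slice separately, so its raw detections are in slice-local coordinates, and an object lying in an overlap region can be detected twice. The sketch below shows one simple way to combine them: shift every box by its slice offset, then apply greedy IoU-based non-maximum suppression. It is a minimal illustration under those assumptions, not the code this repo or the `sahi` library uses (`sahi` has its own, configurable postprocessing); `merge_slice_detections` and the tuple layouts are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def merge_slice_detections(
    per_slice: List[Tuple[Tuple[int, int], List[Tuple[Box, float]]]],
    iou_thr: float = 0.5,
) -> List[Tuple[Box, float]]:
    """Hypothetical helper. per_slice: [((offset_x, offset_y), [(box, score), ...]), ...]."""
    # 1) Move every slice-local box into full-image coordinates.
    shifted = []
    for (off_x, off_y), dets in per_slice:
        for (x1, y1, x2, y2), score in dets:
            shifted.append(((x1 + off_x, y1 + off_y, x2 + off_x, y2 + off_y), score))
    # 2) Greedy NMS: keep the best-scoring box, drop later boxes that overlap it.
    shifted.sort(key=lambda d: d[1], reverse=True)
    kept: List[Tuple[Box, float]] = []
    for box, score in shifted:
        if all(iou(box, k) < iou_thr for k, _ in kept):
            kept.append((box, score))
    return kept


# The same car seen by two slices (offsets (0, 0) and (512, 0)) is merged into one box.
per_slice = [
    ((0, 0), [((520, 100, 600, 150), 0.9)]),
    ((512, 0), [((8, 100, 88, 150), 0.7), ((300, 300, 340, 330), 0.8)]),
]
merged = merge_slice_detections(per_slice)
print(merged)  # [((520, 100, 600, 150), 0.9), ((812, 300, 852, 330), 0.8)]
```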

- SAHI inference + EVALUATION of results with basic YOLOv8 metrics

- output example with basic validation on 2 images:

```
val: Scanning C:\Users\irady\GitHub\YOLO8_SAHI\yolo_dataset\labels.cache.
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95):
                   all          2         11      0.987      0.545       0.57      0.455
                person          2         11      0.987      0.545       0.57      0.455
Speed: 1.5ms preprocess, 329.6ms inference, 0.0ms loss, 4.5ms postprocess per image
```


- output example with SAHI validation on 2 images:

```
val: Scanning C:\Users\irady\GitHub\YOLO8_SAHI\yolo_dataset\labels.cache.
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95):
Performing prediction on 9 number of slices.
Performing prediction on 9 number of slices.
                 Class     Images  Instances      Box(P          R      mAP50  mAP50-95):
                   all          2         11          1      0.545      0.773      0.628
                person          2         11          1      0.545      0.773      0.628
Speed: 7.5ms preprocess, 0.0ms inference, 0.0ms loss, 0.0ms postprocess per image
```

- also check the `sahi/` folder: all validation plots are saved there
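In the logs above, `mAP50` is mean average precision where a prediction counts as correct if its IoU with a not-yet-matched ground-truth box is at least 0.5 (`mAP50-95` averages the same quantity over IoU thresholds 0.50 to 0.95); with a single class, mAP50 is just that class's AP50. A minimal single-class sketch, assuming greedy confidence-ordered matching and VOC-style all-point interpolation; Ultralytics' own metric code differs in details such as interpolation, so its numbers will not match this exactly. `average_precision` is a hypothetical name.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    preds: List[Tuple[str, float, Box]],
    gts: Dict[str, List[Box]],
    iou_thr: float = 0.5,
) -> float:
    """Single-class AP. preds: (image_id, confidence, box); gts: image_id -> boxes."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    hits = []
    # Greedy matching, most confident prediction first.
    for img, _, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # True positive only if IoU >= threshold and that ground truth is still free.
        if best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    # Cumulative precision and recall after each prediction.
    precisions, recalls, tp = [], [], 0
    for i, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / i)
        recalls.append(tp / n_gt)
    # All-point interpolation: each recall step times the best precision at or after it.
    ap, prev_recall = 0.0, 0.0
    for i, recall in enumerate(recalls):
        ap += (recall - prev_recall) * max(precisions[i:])
        prev_recall = recall
    return ap


# Toy example: 2 images, 1 ground-truth box each, 3 predictions.
gts = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
preds = [
    ("img1", 0.9, (0, 0, 10, 10)),    # true positive (IoU 1.0)
    ("img2", 0.8, (50, 50, 60, 60)),  # false positive (no overlap)
    ("img2", 0.7, (1, 1, 10, 10)),    # true positive (IoU 0.81)
]
print(round(average_precision(preds, gts), 4))  # 0.8333
```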

- `git clone` this repo

- in `utils.get_category_mapping()`, change the returned dictionary to match your classes

- in `main()`, change the paths to your .pt and .yaml files and set the desired input `imgsz`, the inference source, etc.

- VALIDATION: in `main()`, run `run_sahi_validation()` or `run_basic_validation()`

- INFERENCE: in `main()`, run `run_sahi_prediction()` or `run_basic_prediction()`

- you can also change the size of the sliding window at the head of `utils.sahi_predict()`:

```
VERBOSE_SAHI = 2
SLICE_H = 640
SLICE_W = 640
OVERLAP_HEIGHT_RATIO = 0.2
OVERLAP_WIDTH_RATIO = 0.2
```
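With these defaults each slice is 640×640 pixels and neighboring slices overlap by 20% (128 px). The sketch below enumerates slice windows for a given image size, modelled on the general SAHI tiling idea: step by slice size minus overlap, and shift windows that would cross the border back inside the image. It is an illustration under that assumption; `slice_windows` is a hypothetical name, not a function of this repo or of `sahi`.

```python
from typing import List, Tuple


def slice_windows(
    image_h: int,
    image_w: int,
    slice_h: int = 640,  # SLICE_H
    slice_w: int = 640,  # SLICE_W
    overlap_h_ratio: float = 0.2,  # OVERLAP_HEIGHT_RATIO
    overlap_w_ratio: float = 0.2,  # OVERLAP_WIDTH_RATIO
) -> List[Tuple[int, int, int, int]]:
    """Return (x1, y1, x2, y2) windows that tile the image with overlap."""
    if not (0 <= overlap_h_ratio < 1 and 0 <= overlap_w_ratio < 1):
        raise ValueError("overlap ratios must be in [0, 1) or the loop never advances")
    y_overlap = int(overlap_h_ratio * slice_h)
    x_overlap = int(overlap_w_ratio * slice_w)
    windows = []
    y_min = y_max = 0
    while y_max < image_h:
        y_max = y_min + slice_h
        x_min = x_max = 0
        while x_max < image_w:
            x_max = x_min + slice_w
            # Clamp to the image border, then shift the window back to full size.
            x2, y2 = min(image_w, x_max), min(image_h, y_max)
            windows.append((max(0, x2 - slice_w), max(0, y2 - slice_h), x2, y2))
            x_min = x_max - x_overlap  # next column starts inside the previous one
        y_min = y_max - y_overlap  # next row starts inside the previous one
    return windows


# A 1280x1280 image gives a 3x3 grid of 640x640 windows.
wins = slice_windows(1280, 1280)
print(len(wins))  # 9
print(wins[-1])   # (640, 640, 1280, 1280)
```

Shifting the last row and column back (instead of padding) keeps every window at the full slice size, so the detector always sees inputs of the same resolution.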


You will need:

- your .pt model file (or any other format Ultralytics supports, such as .onnx or .engine)

- a validation dataset in the standard YOLO format

- a .yaml file for the dataset, for example:

```yaml
# file: sahi_data.yaml
path: ../YOLO8_SAHI/yolo_dataset/  # dataset root dir
train: images  # train images (relative to 'path') 128 images
val: images  # val images (relative to 'path') 128 images

# Classes
names:
  0: people
```
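The dictionary returned by `utils.get_category_mapping()` should agree with the `names` block of this .yaml file. As one option, it could be derived from `names` instead of being edited by hand. A minimal sketch, assuming the mapping uses string class IDs as keys mapped to class names (e.g. `{"0": "people"}`); `category_mapping_from_names` is a hypothetical helper, not part of this repo.

```python
from typing import Dict, List, Union


def category_mapping_from_names(names: Union[Dict[int, str], List[str]]) -> Dict[str, str]:
    """Hypothetical helper: turn a dataset .yaml `names` entry into {"<id>": "<name>"}.

    `names` is what loading the .yaml (e.g. with PyYAML's yaml.safe_load) yields
    for the `names` key: a dict like {0: "people"} or a list like ["people"].
    """
    if isinstance(names, list):
        names = dict(enumerate(names))
    # String keys, ordered by class id.
    return {str(class_id): name for class_id, name in sorted(names.items())}


print(category_mapping_from_names({0: "people"}))  # {'0': 'people'}
```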