Temporal and Non-Temporal Contextual Saliency Analysis for Generalized Wide-Area Search within Unmanned Aerial Vehicle (UAV) Video

Created and tested using Python 3.5.3, PyTorch 0.4.0, and OpenCV 3.4.3

Repository for my Master's Project on the topic of "Contextual Saliency for Detecting Anomalies within Unmanned Aerial Vehicle (UAV) Video". The thesis report is available here.

This work was also published in The Visual Computer under the title "Temporal and Non-Temporal Contextual Saliency Analysis for Generalized Wide-Area Search within Unmanned Aerial Vehicle (UAV) Video".

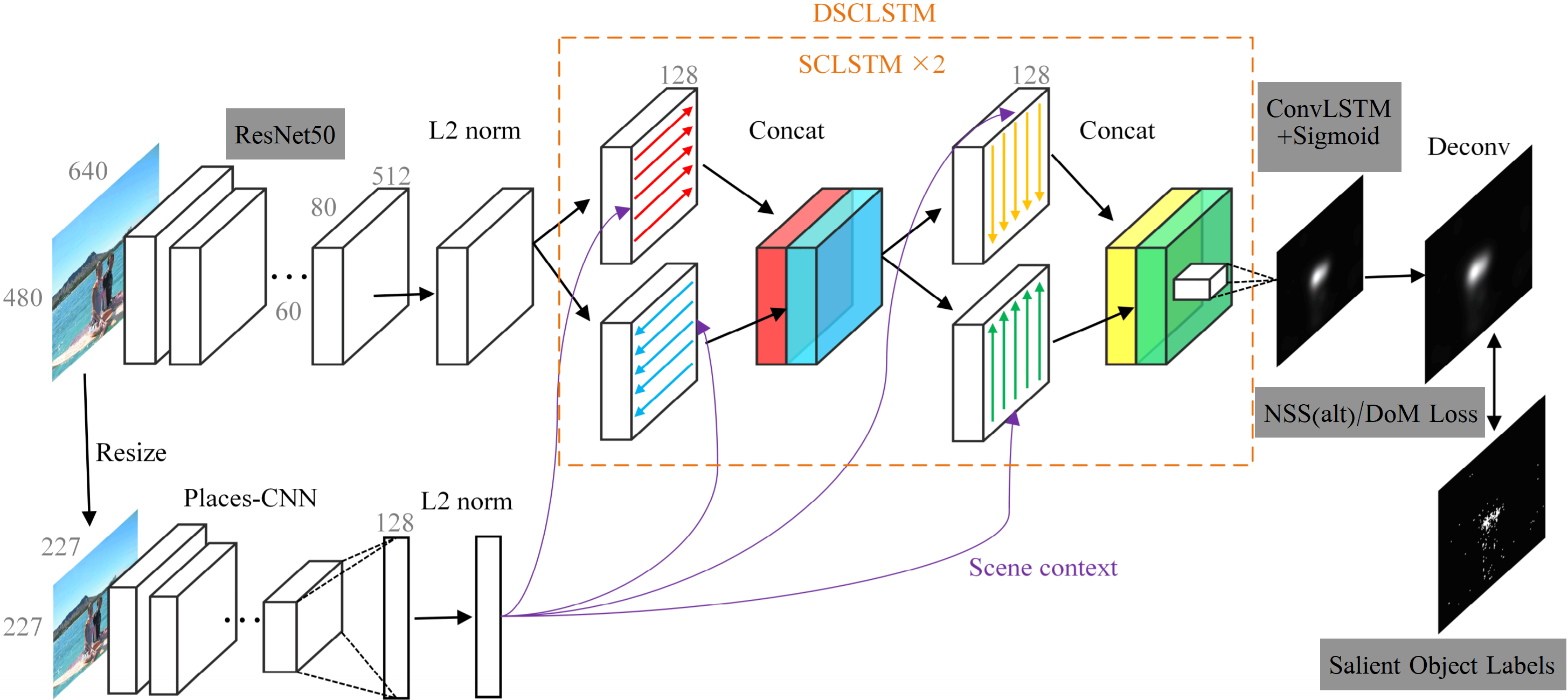

The DSCLRCN architecture (original authors (and original image source), partial re-implementation in PyTorch used for this project) was used as a baseline. Our proposed CoSADUV (aka TeCS) architecture is shown in the figure above, with changes from the DSCLRCN architecture shown with a grey background.

The architecture was modified by replacing the "Conv+Softmax" with a Convolutional-LSTM layer with kernel size 3x3 and a Sigmoid activation function. Additionally, several loss functions other than NSSLoss were investigated (see our paper for more information). Architectures with the convolutional LSTM (CoSADUV) and without it (CoSADUV_NoTemporal, using a normal conv layer instead) are available.

"Unmanned Aerial Vehicles (UAV) can be used to great effect for the purposes of surveillance or search and rescue operations. UAV enable search and rescue teams to cover large areas more efficiently and in less time. However, using UAV for this purpose involves the creation of large amounts of data (typically video) which must be analysed before any potential findings can be uncovered and actions taken. This is a slow and expensive process which can result in significant delays to the response time after a target is seen by the UAV. To solve this problem we propose a deep model using a visual saliency approach to automatically analyse and detect anomalies in UAV video. Our Contextual Saliency for Anomaly Detection in UAV Video (CoSADUV) model is based on the state-of-the-art in visual saliency detection using deep convolutional neural networks and considers local and scene context, with novel additions in utilizing temporal information through a convolutional LSTM layer and modifications to the base model. Our model achieves promising results with the addition of the temporal implementation producing significantly improved results compared to the state-of-the-art in saliency detection. However, due to limitations in the dataset used the model fails to generalize well to other data, failing to beat the state-of-the-art in anomaly detection in UAV footage. The approach taken shows promise with the modifications made yielding significant performance improvements and is worthy of future investigation. The lack of a publicly available dataset for anomaly detection in UAV video poses a significant roadblock to any deep learning approach to this task, however despite this our paper shows that leveraging temporal information for this task, which the state-of-the-art does not currently do, can lead to improved performance."

- First follow all instructions in the READMEs inside model/ and model/Dataset/ to download and prepare the pretrained models used and to format the dataset correctly. (This may require the use of the scripts in data_preprocessing/)

- Open and have a look through either

main_ncc.pyornotebook_main.ipynb(or both). The IPython notebook provides a more interactive interface, whilemain_ncc.pycan be run with minimal input required. - Set the hyperparameters and settings near the top of the file. Most important is to set the correct dataset directory name (if different) and mean image filename.

- Run through the file to train the model. If using

main_ncc.py, the model will automatically be run through testing once training is completed.

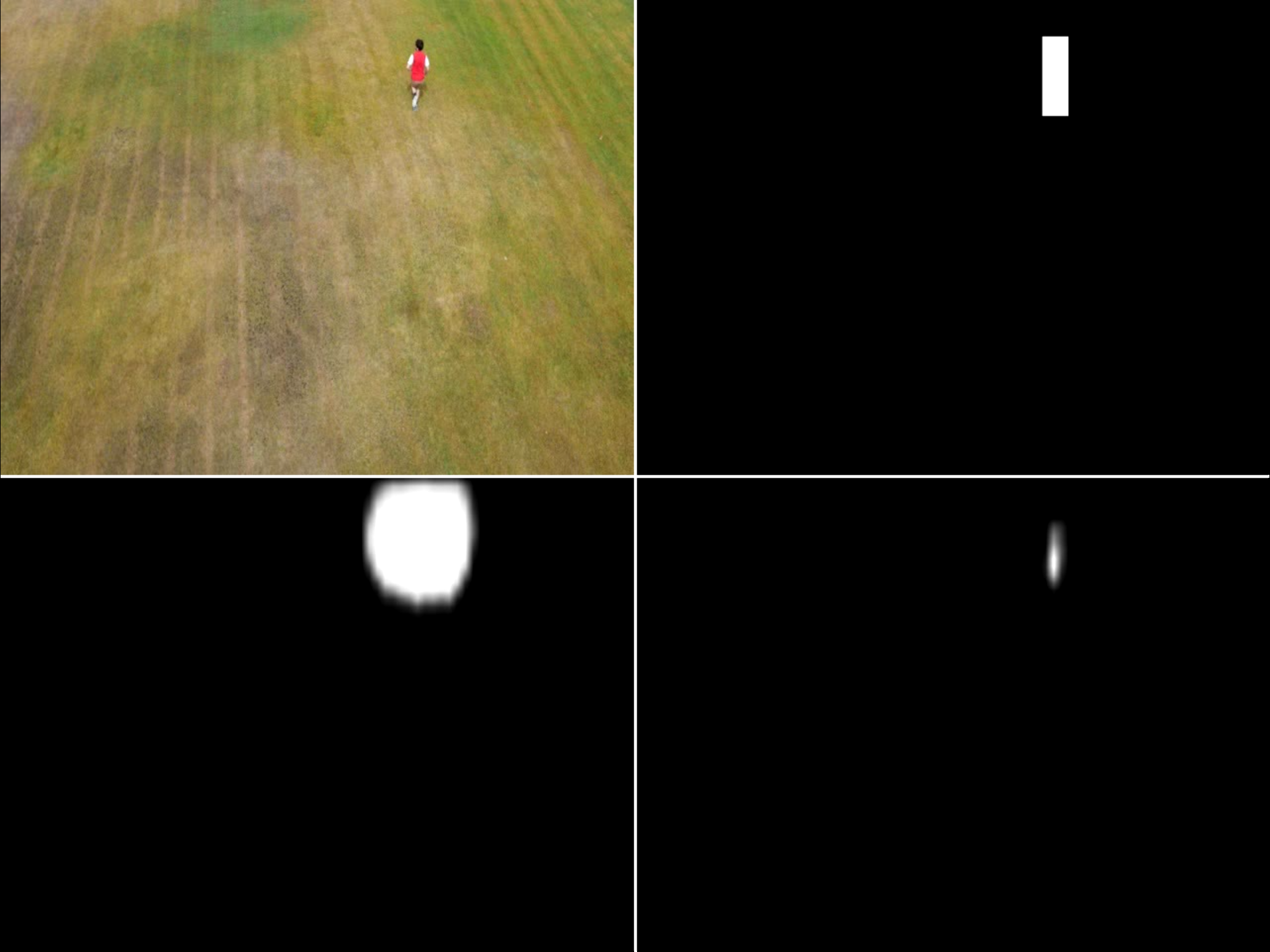

Click the video above to play it. The video shows the performane of the model on a sequence from the UAV123 dataset (This was also used to train the model). It presents four streams simultaneously:

- top-left is the input sequence

- top-right is the ground-truth data (as provided in the UAV123 dataset)

- bottom-left is the prediction of the best non-temporal model, and

- bottom-right is the prediction of the best temporal model.

If you make use of this work in any way, please reference the following:

@article{Gokstorp_2021,

author = {Simon G. E. Gökstorp and Toby P. Breckon},

title = {Temporal and non-temporal contextual saliency analysis for generalized wide-area search within unmanned aerial vehicle ({UAV}) video},

journal = {The Visual Computer},

volume = {38},

number = {6},

pages = {2033--2040},

year = 2021,

month = {sep},

publisher = {Springer Science and Business Media {LLC}},

doi = {10.1007/s00371-021-02264-6},

url = {https://doi.org/10.1007/s00371-021-02264-6},

}

If you have any questions/issues/ideas, feel free to open an issue here or contact me!

This project was completed under the supervision of Professor Toby Breckon (Durham University).