Hashmat Shadab Malik, Shahina Kunhimon, Muzammal Naseer, Salman Khan, and Fahad Shahbaz Khan

Abstract: Transferable adversarial attacks craft adversarial examples using a pretrained surrogate model and a known label space to fool unknown black-box models. These attacks are therefore restricted by the availability of an effective surrogate model. In this work, we relax this assumption and propose Adversarial Pixel Restoration as a self-supervised alternative to train an effective surrogate model from scratch under the condition of no labels and few data samples. Our training approach is based on a min-max scheme which reduces overfitting via an adversarial objective and thus optimizes for a more generalizable surrogate model. Our proposed attack is complementary to adversarial pixel restoration and is independent of any task-specific objective, as it can be launched in a self-supervised manner. We successfully demonstrate the adversarial transferability of our approach to Vision Transformers as well as Convolutional Neural Networks for the tasks of classification, object detection, and video segmentation. Our training approach improves the transferability of the baseline unsupervised training method by 16.4% on the ImageNet validation set.

- Highlights

- Installation

- Dataset Preparation

- Adversarial Pixel Restoration Training

- Self-supervised Attack

- Pretrained Surrogate Models

- Adversarial Transferability Results

- We propose self-supervised Adversarial Pixel Restoration to find highly transferable patterns by learning over flatter loss surfaces. Our training approach allows launching cross-domain attacks without access to large-scale labeled data or pretrained models.

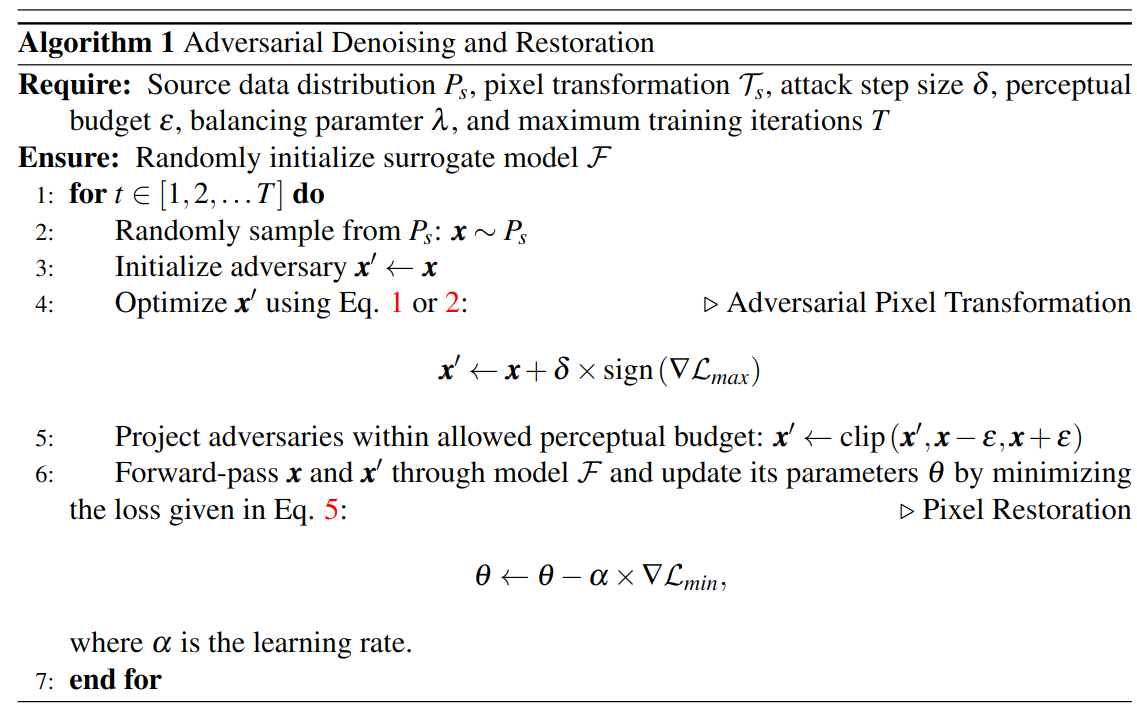

The algorithm describes Adversarial Pixel Restoration for training the surrogate model. Please refer to our paper for more details on the equations in the above-mentioned algorithm.
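As a rough orientation, the min-max scheme can be written in a generic adversarial-training form. This is a hedged sketch, not the paper's exact formulation: $f_\theta$ stands for the surrogate model, $T$ for the pixel transformation (rotation or jigsaw), $\delta$ for a perturbation bounded by $\epsilon$ in the $L_\infty$ norm, and $\mathcal{L}$ for a restoration loss. All symbols, and the point at which $\delta$ is added, are assumptions made for illustration.

$$
\min_{\theta}\; \mathbb{E}_{x}\Big[\max_{\|\delta\|_\infty \le \epsilon}\; \mathcal{L}\big(f_\theta(T(x) + \delta),\; x\big)\Big]
$$

The inner maximization looks for the perturbation that makes restoring the clean image $x$ hardest, and the outer minimization trains the model to restore $x$ anyway. The --fgsm_step flag in the training commands suggests that the inner problem is approximated with gradient-sign steps, but the paper remains the reference for the actual equations.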


- Our proposed adversarial attack is self-supervised in nature and independent of any task-specific objective. Therefore our approach can transfer perturbations to a variety of tasks as we demonstrate for classification, object detection, and segmentation.


- Create conda environment

conda create -n apr

- Install PyTorch and torchvision

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

- Install other dependencies

pip install -r requirements.txt

In-Domain Setting: 5000 images are selected from ImageNet-Val (10 each from the first 500 classes). Each surrogate model is trained on only a few data samples (20 by default). Download the ImageNet-Val classification dataset and structure the data as follows:

└───data
    ├── selected_data.csv
    └── ILSVRC2012_img_val
        ├── n01440764
        ├── n01443537
        └── ...

The selected_data.csv file is used by our_dataset.py to load the selected 5000 images from the dataset.
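To make the "10 each from the first 500 classes" rule concrete, here is a minimal sketch that walks a directory laid out as above and picks images accordingly. It is not the logic of our_dataset.py, and the actual contents of selected_data.csv may differ: the function name `select_images`, the alphabetical ordering of classes and files, and the accepted file suffixes are all assumptions made for illustration.

```python
from pathlib import Path

# Assumption: validation images use one of these suffixes.
IMAGE_SUFFIXES = {".jpeg", ".jpg", ".png"}


def select_images(val_root, n_classes=500, per_class=10):
    """Pick `per_class` images from each of the first `n_classes` class folders.

    Hypothetical helper: classes and files are taken in sorted order,
    which may not match the selection stored in selected_data.csv.
    """
    root = Path(val_root)
    class_dirs = sorted(p for p in root.iterdir() if p.is_dir())[:n_classes]
    selected = []
    for class_dir in class_dirs:
        images = sorted(
            p for p in class_dir.iterdir()
            if p.suffix.lower() in IMAGE_SUFFIXES
        )
        if len(images) < per_class:
            raise ValueError(
                f"{class_dir.name} has only {len(images)} images, "
                f"expected at least {per_class}"
            )
        # Store (class folder, file name) pairs for the chosen images.
        selected.extend((class_dir.name, p.name) for p in images[:per_class])
    return selected
```

With the defaults and a complete data/ILSVRC2012_img_val folder, `select_images("data/ILSVRC2012_img_val")` would return 500 × 10 = 5000 entries, which is a quick way to confirm the layout before training.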

Cross-Domain Setting: A single surrogate model is trained on a large unannotated dataset. We use the Paintings, Comics, and CoCo datasets for training.

Directory structure should look like this:

|paintings
    |images
        img1
        img2
        ...

In-Domain Setting: Each surrogate model is trained on only a few data samples (20 by default). The model is trained in an unsupervised setting by incorporating an adversarial pixel transformation based on rotation or jigsaw. The supervised prototypical training mentioned in this paper is also performed adversarially.
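The two pixel transformations named above can be illustrated on a tiny image stored as a list of rows. This is a dependency-free sketch, not the code in train_id.py: the function names `rotate90` and `jigsaw`, the 90-degree rotation angle, and the grid-based shuffling are assumptions chosen to show the idea of handing the model a scrambled input together with the clean image as its restoration target.

```python
import random


def rotate90(img):
    """Rotate a 2D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def jigsaw(img, grid, rng):
    """Cut the image into grid x grid tiles and shuffle their positions.

    Assumes height and width are divisible by `grid`.
    """
    height, width = len(img), len(img[0])
    tile_h, tile_w = height // grid, width // grid
    # Collect tiles in row-major order.
    tiles = [
        [row[c * tile_w:(c + 1) * tile_w]
         for row in img[r * tile_h:(r + 1) * tile_h]]
        for r in range(grid)
        for c in range(grid)
    ]
    rng.shuffle(tiles)
    # Stitch the shuffled tiles back into a full image.
    out = []
    for r in range(grid):
        for y in range(tile_h):
            line = []
            for c in range(grid):
                line.extend(tiles[r * grid + c][y])
            out.append(line)
    return out


# A 4x4 toy image with distinct pixel values.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
rotated_input = rotate90(image)                         # model input
shuffled_input = jigsaw(image, 2, random.Random(0))     # model input
restoration_target = image                              # what the model should output
```

In both cases the transformed image keeps exactly the same pixels as the original, only rearranged, so restoring the clean image is a well-posed pretext task that needs no labels.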

For training surrogate models with transformation:

- Rotation

python train_id.py --mode rotate --n_imgs 20 --adv_train True --fgsm_step 2 \
--n_iters 2000 --save_dir ./trained_models

- Jigsaw

python train_id.py --mode jigsaw --n_imgs 20 --adv_train True --fgsm_step 2 \
--n_iters 5000 --save_dir ./trained_models

- Prototypical

python train_id.py --mode prototypical --n_imgs 20 --adv_train True --fgsm_step 2 \
--n_iters 15000 --save_dir ./trained_models

With 20 images used to train each surrogate model, 250 models are trained in total for the selected 5000 ImageNet-Val images. The models are saved as follows:

|trained_models
    |models
        rotate_0.pth
        rotate_1.pth
        ...
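The model count follows from 5000 / 20 = 250. A small sketch like the one below can list the checkpoint paths one would expect after a full run and report any that are missing. The helper names are hypothetical, and the `{mode}_{index}.pth` pattern is inferred from the rotate_0.pth and rotate_1.pth entries above; that it also holds for the jigsaw and prototypical modes is an assumption.

```python
from pathlib import Path


def expected_checkpoints(mode, n_selected=5000, n_imgs=20,
                         save_dir="./trained_models"):
    """List the checkpoint paths expected for one training mode.

    Assumption: files are named `{mode}_{index}.pth` inside `save_dir/models`.
    """
    if n_selected % n_imgs != 0:
        raise ValueError("n_selected must be a multiple of n_imgs")
    n_models = n_selected // n_imgs  # 5000 // 20 == 250 with the defaults
    return [
        Path(save_dir) / "models" / f"{mode}_{i}.pth"
        for i in range(n_models)
    ]


def missing_checkpoints(mode, **kwargs):
    """Return the expected checkpoint paths that do not exist on disk."""
    return [p for p in expected_checkpoints(mode, **kwargs) if not p.exists()]
```

Calling `missing_checkpoints("rotate")` before launching the attack is one way to confirm that all 250 surrogate models were written.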

Cross-Domain Setting: A single surrogate model is trained adversarially on a large unannotated dataset in an unsupervised setting, using rotation or jigsaw as the pixel transformation.

For training the single surrogate model with a transformation:

- Rotation

python train_cd.py --mode rotate --adv_train True --fgsm_step 2 \
--end_epoch 50 --data_dir paintings/ --save_dir ./single_trained_models

- Jigsaw

python train_cd.py --mode jigsaw --adv_train True --fgsm_step 2 \
--end_epoch 50 --data_dir paintings/ --save_dir ./single_trained_models

Change --data_dir accordingly to train on comics, coco, or any other dataset.

Setting the --adv_train flag to False trains the surrogate models with the baseline method mentioned in this paper.

In-Domain Setting: Adversarial examples are crafted on the selected 5000 ImageNet-Val images, following the same setting as the baseline, Practical No-box Adversarial Attacks (NeurIPS-2021). An L_inf-based attack is run using:

python attack.py --epsilon 0.1 --ila_niters 100 --ce_niters 200 \
--ce_epsilon 0.1 --ce_alpha 1.0 --n_imgs 20 --ae_dir ./trained_models \
--mode rotate --save_dir /path/to/save/adv_images

The --mode argument can be set to rotate, jigsaw, or prototypical, depending on how the surrogate models were trained. For rotation and jigsaw, a fully unsupervised attack can be used by passing --loss unsup to attack.py.
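For readers unfamiliar with L_inf-bounded attacks, the sketch below shows a single generic update step: move each pixel along the sign of the loss gradient, then project back so that no pixel differs from the clean image by more than epsilon. It is not taken from attack.py. The function name `linf_step`, the flat list representation, and the assumption that pixels lie in [0, 1] (so that epsilon 0.1 is a fraction of the full range) are all illustrative.

```python
def sign(value):
    """Return -1, 0, or 1 depending on the sign of `value`."""
    return (value > 0) - (value < 0)


def linf_step(x_adv, x_clean, grad, step, epsilon=0.1):
    """One gradient-sign step followed by projection onto the L_inf ball.

    Assumptions: images are flat lists of floats in [0, 1], and `grad`
    is the gradient of the attack loss with respect to `x_adv`.
    """
    updated = []
    for adv, clean, g in zip(x_adv, x_clean, grad):
        moved = adv + step * sign(g)
        # Keep the perturbation within epsilon of the clean pixel.
        moved = min(max(moved, clean - epsilon), clean + epsilon)
        # Keep the result a valid pixel value.
        updated.append(min(max(moved, 0.0), 1.0))
    return updated


clean = [0.5, 0.95, 0.02]
adversarial = linf_step(clean, clean, grad=[1.0, 2.0, -3.0], step=0.2)
```

Repeating such a step for a fixed number of iterations gives an iterative attack whose total perturbation never exceeds the epsilon budget, regardless of the step size.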

Cross-Domain Setting: A single surrogate model trained on a cross-domain dataset as mentioned in the Training section is used to craft adversarial examples on the selected 5000 ImageNet-Val images. An L_inf based unsupervised attack is run using:

python attack.py --epsilon 0.1 --ila_niters 100 --ce_niters 200 \
--ce_epsilon 0.1 --ce_alpha 1.0 --n_imgs 20 --single_model True \
--chk_pth path/to/trained/model/weights.pth --save_dir /path/to/save/adv_images

In-Domain Setting: Pretrained weights for surrogate models trained with rotation/jigsaw/prototypical modes.

Cross-Domain Setting:

- Models trained with rotation mode.

| Dataset | Baseline | Ours |
|---|---|---|
| CoCo | Link | Link |
| Paintings | Link | Link |
| Comics | Link | Link |

- Models trained with jigsaw mode.

| Dataset | Baseline | Ours |
|---|---|---|
| CoCo | Link | Link |
| Paintings | Link | Link |
| Comics | Link | Link |

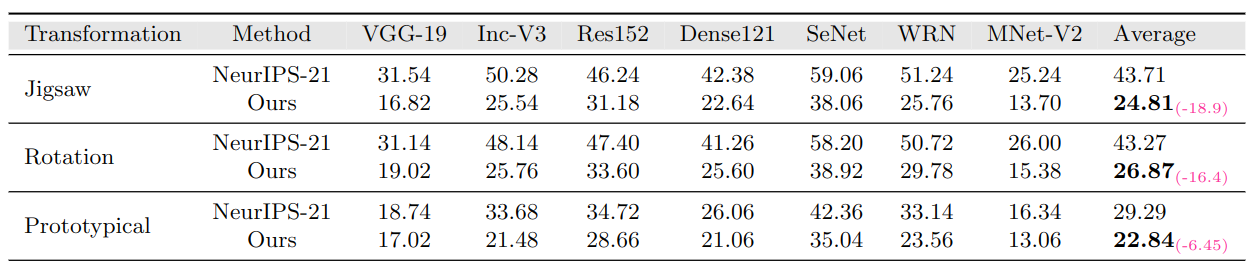

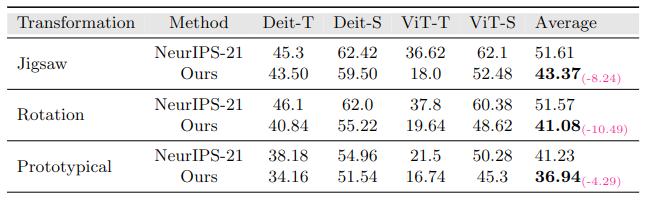

We compare the transferability of surrogate models trained with our approach against the baseline, Practical No-box Adversarial Attacks (NeurIPS-2021). After generating adversarial examples on the selected 5000 ImageNet-Val images, we report the top-1 accuracy of several classification models on those examples (lower is better).
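As a reminder of how the reported metric works, here is a minimal sketch of top-1 accuracy and of averaging it over several target models. The function names and the example numbers are hypothetical and are not results from the paper. A lower accuracy on adversarial images means the perturbations transferred better.

```python
def top1_accuracy(predictions, labels):
    """Percentage of images whose top prediction matches the true label."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return 100.0 * correct / len(labels)


def average_accuracy(per_model_accuracy):
    """Mean top-1 accuracy over a dict of {model name: accuracy}."""
    return sum(per_model_accuracy.values()) / len(per_model_accuracy)


# Hypothetical predictions of one target model on four adversarial images.
accuracy = top1_accuracy([1, 2, 3, 4], [1, 2, 0, 0])
```

Averaging per-model accuracies, as `average_accuracy` does, weights each target model equally, which matches reporting one averaged number for a group of networks.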

In-Domain Setting:

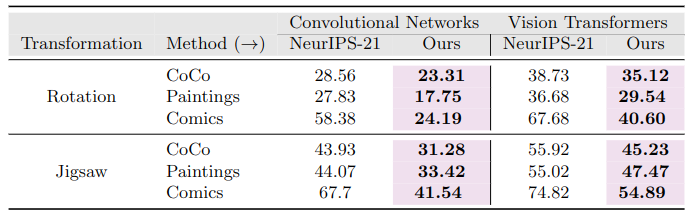

Cross-Domain Setting: Average accuracy on Convolutional Networks and Vision Transformers (listed above).

If you use our work, please consider citing:

@inproceedings{Malik_2022_BMVC,
  author = {Hashmat Shadab Malik and Shahina Kunhimon and Muzammal Naseer and Salman Khan and Fahad Shahbaz Khan},
  title = {Adversarial Pixel Restoration as a Pretext Task for Transferable Perturbations},
  booktitle = {33rd British Machine Vision Conference 2022, {BMVC} 2022, London, UK, November 21-24, 2022},
  publisher = {{BMVA} Press},
  year = {2022},
  url = {https://bmvc2022.mpi-inf.mpg.de/0546.pdf}
}

Should you have any questions, please create an issue on this repository or contact us at [email protected]

Our code is based on the Practical No-box Adversarial Attacks against DNNs repository. We thank the authors for releasing their code.