This repository uses Yolo-V5 and RepVGG to detect facial expressions and classify emotions. See the architecture section for how it works and the usage section for how to run the code.

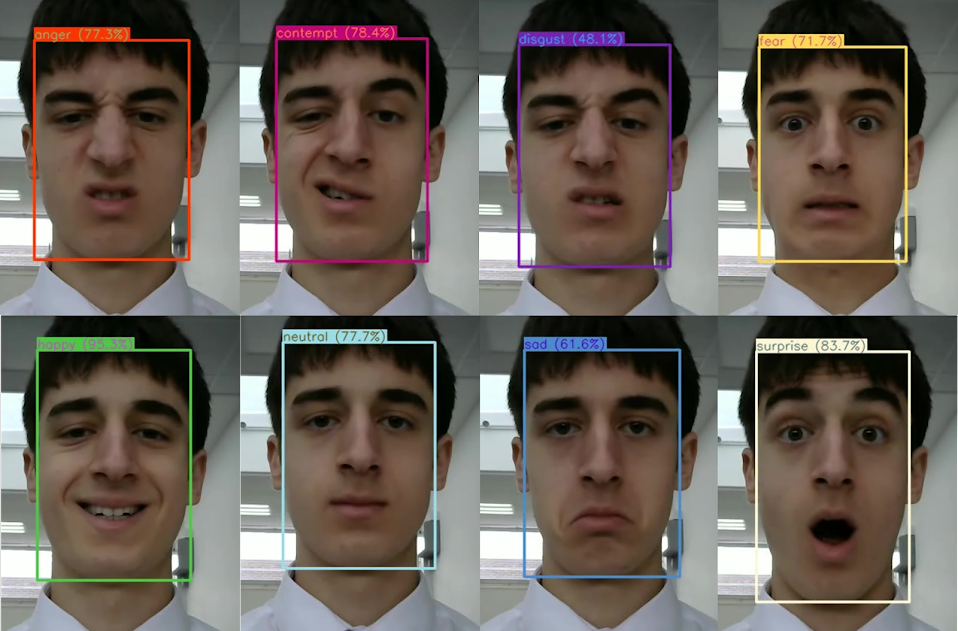

This is an example of emotion classification:

This is a picture of me pulling all 8 of the facial expressions that the model classifies:

Install the dependencies with pip:

`pip install -r requirements.txt`

Or create a conda environment from the provided file:

`conda env create -f env.yaml`

This model detects 8 basic facial expressions:

- anger

- contempt

- disgust

- fear

- happy

- neutral

- sad

- surprise

and then attempts to assign them appropriate colours. It classifies every face, even if it is not that confident about the result!
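The behaviour described above (always pick the top emotion, then map it to a colour) can be sketched roughly as follows. This is a minimal illustration, not the repository's code: the label order, the `COLOURS` table, and the `classify` helper are all assumptions made for the example.

```python
# Label order is an assumption; the real model may index classes differently.
EMOTIONS = ["anger", "contempt", "disgust", "fear",
            "happy", "neutral", "sad", "surprise"]

# Hypothetical BGR colours (OpenCV channel order), one per emotion.
COLOURS = {
    "anger": (0, 0, 255),
    "contempt": (128, 0, 128),
    "disgust": (0, 128, 0),
    "fear": (128, 128, 128),
    "happy": (0, 255, 255),
    "neutral": (255, 255, 255),
    "sad": (255, 0, 0),
    "surprise": (0, 165, 255),
}


def classify(scores):
    """Return (label, confidence, colour) for the highest-scoring emotion.

    There is deliberately no confidence threshold: the top class is
    returned even when its score is low.
    """
    if len(scores) != len(EMOTIONS):
        raise ValueError("expected one score per emotion")
    best = max(range(len(scores)), key=scores.__getitem__)
    label = EMOTIONS[best]
    return label, scores[best], COLOURS[label]


# A near-uniform score vector still yields a label.
label, confidence, colour = classify([0.1, 0.1, 0.1, 0.1, 0.2, 0.15, 0.15, 0.1])
print(label, confidence, colour)
```

Because no threshold is applied, a low-confidence face still gets a label and a box colour, which matches the note above that every face is classified.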

Usage: `main.py [-h] [--source SOURCE] [--img-size IMG_SIZE] [--conf-thres CONF_THRES] [--iou-thres IOU_THRES] [--device DEVICE] [--hide-img] [--output-path OUTPUT_PATH | --no-save] [--agnostic-nms] [--augment] [--line-thickness LINE_THICKNESS] [--hide-conf] [--show-fps]`

Optional arguments:

- `-h`, `--help`: show this help message and exit
- `--source SOURCE`: source
- `--img-size IMG_SIZE`: inference size (pixels)
- `--conf-thres CONF_THRES`: face confidence threshold
- `--iou-thres IOU_THRES`: IOU threshold for NMS
- `--device DEVICE`: cuda device, i.e. 0 or 0,1,2,3 or cpu
- `--hide-img`: hide results
- `--output-path OUTPUT_PATH`: save location
- `--no-save`: do not save images/videos
- `--agnostic-nms`: class-agnostic NMS
- `--augment`: augmented inference
- `--line-thickness LINE_THICKNESS`: bounding box thickness (pixels)
- `--hide-conf`: hide confidences
- `--show-fps`: print fps to console
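To make the option list easier to read, here is a rough sketch of how a parser with these flags could be declared using `argparse`. It is not taken from `main.py`: the types and every default value below are assumptions chosen for illustration, and only the flag names and help strings come from the usage text. The `[--output-path OUTPUT_PATH | --no-save]` notation indicates a mutually exclusive pair, which the sketch models with `add_mutually_exclusive_group`.

```python
import argparse


def build_parser():
    """Build a parser mirroring the documented flags (defaults are hypothetical)."""
    parser = argparse.ArgumentParser(prog="main.py")
    parser.add_argument("--source", type=str, default="0", help="source")
    parser.add_argument("--img-size", type=int, default=512,
                        help="inference size (pixels)")
    parser.add_argument("--conf-thres", type=float, default=0.5,
                        help="face confidence threshold")
    parser.add_argument("--iou-thres", type=float, default=0.45,
                        help="IOU threshold for NMS")
    parser.add_argument("--device", default="",
                        help="cuda device, i.e. 0 or 0,1,2,3 or cpu")
    parser.add_argument("--hide-img", action="store_true", help="hide results")

    # --output-path and --no-save cannot be combined.
    save = parser.add_mutually_exclusive_group()
    save.add_argument("--output-path", default="output.mp4", help="save location")
    save.add_argument("--no-save", action="store_true",
                      help="do not save images/videos")

    parser.add_argument("--agnostic-nms", action="store_true",
                        help="class-agnostic NMS")
    parser.add_argument("--augment", action="store_true",
                        help="augmented inference")
    parser.add_argument("--line-thickness", type=int, default=2,
                        help="bounding box thickness (pixels)")
    parser.add_argument("--hide-conf", action="store_true",
                        help="hide confidences")
    parser.add_argument("--show-fps", action="store_true",
                        help="print fps to console")
    return parser


# Example: read a video file, skip saving, and print the frame rate.
args = build_parser().parse_args(["--source", "video.mp4", "--no-save", "--show-fps"])
print(args.source, args.no_save, args.show_fps)
```

With a parser declared this way, passing both `--output-path` and `--no-save` is rejected with a usage error.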

There are two parts to this code: facial detection and emotion classification.

This repository is a fork of ultralytics/Yolo-V5; however, Yolo-v7 is now used for faster detection. Read here for more information on Yolo-V5 (the original model). To detect faces, the model was trained on the WIDER FACE dataset, which has 393,703 faces. For more information, check out the paper here.

This repository uses code directly from the DingXiaoH/RepVGG repository. You can read the RepVGG paper here to find out more. Even though RepVGG is the main model, it made more sense to fork the Yolo-V5 repository because that codebase was more complicated. The model was trained on the AffectNet dataset, which has 420,299 facial expressions. For more information, you can read the paper here.
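The two-part design described above (detect faces first, then classify each face) can be outlined as a small pipeline. The sketch below is an assumption-laden illustration rather than the repository's implementation: `detect_and_classify`, `crop`, and the stand-in detector and classifier are hypothetical, and a plain nested list stands in for a real image array so the example runs without extra dependencies.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels
Image = List[List[int]]          # rows of pixel values; stand-in for an image array


def crop(image: Image, box: Box) -> Image:
    """Cut the region covered by a bounding box out of the image."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def detect_and_classify(
    image: Image,
    detect_faces: Callable[[Image], List[Box]],
    classify_emotion: Callable[[Image], str],
) -> List[Tuple[Box, str]]:
    """Stage 1 finds face boxes; stage 2 labels each cropped face."""
    results = []
    for box in detect_faces(image):
        face = crop(image, box)
        results.append((box, classify_emotion(face)))
    return results


# Stand-ins for the real models (hypothetical, for demonstration only).
def fake_detector(image: Image) -> List[Box]:
    return [(0, 0, 2, 2), (2, 2, 4, 4)]


def fake_classifier(face: Image) -> str:
    # Label bright crops "happy" and dark crops "sad".
    total = sum(sum(row) for row in face)
    return "happy" if total > 0 else "sad"


image = [
    [9, 9, 0, 0],
    [9, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
print(detect_and_classify(image, fake_detector, fake_classifier))
```

Keeping the detector and classifier as separate callables reflects the split described above, where one model locates faces and the other labels each cropped face.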