This is the official repository of the NeurIPS 2023 paper VALOR.

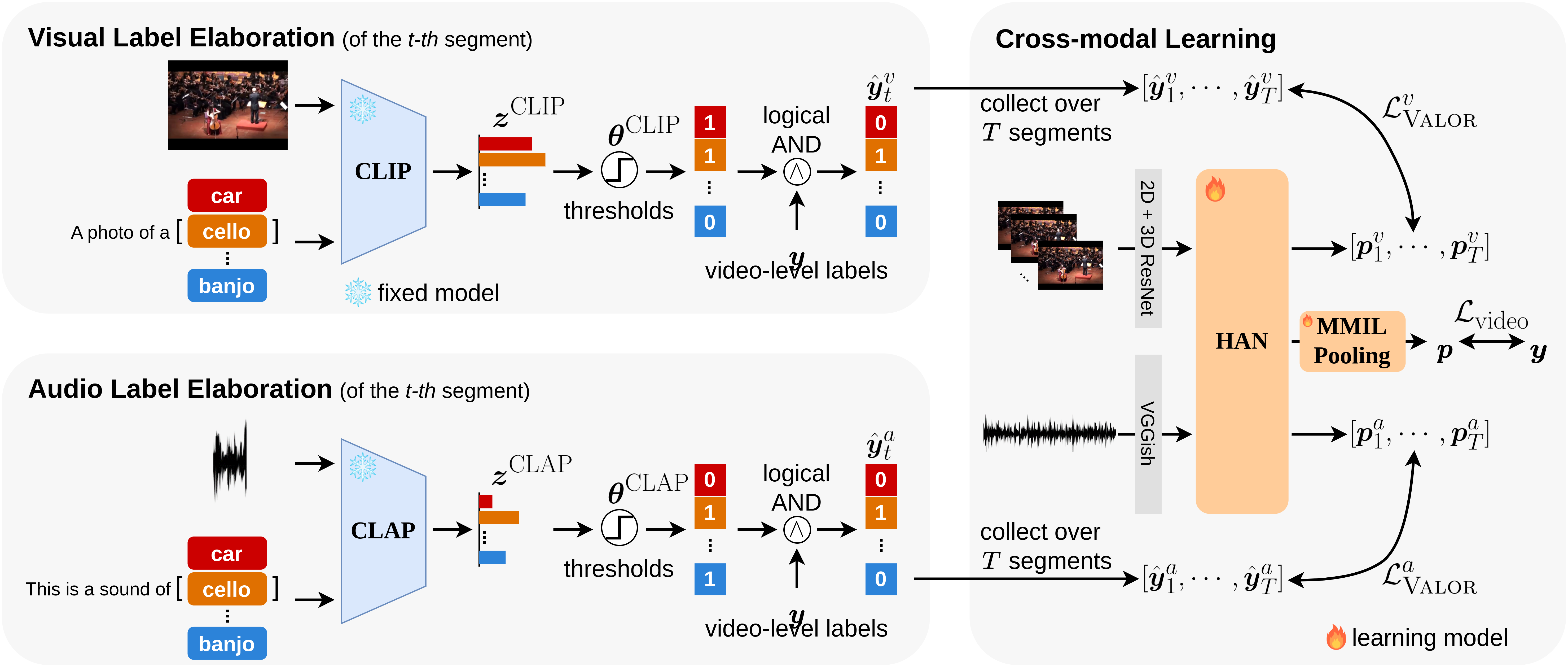

Modality-Independent Teachers Meet Weakly-Supervised Audio-Visual Event Parser

Yung-Hsuan Lai, Yen-Chun Chen, Yu-Chiang Frank Wang

- Ubuntu version: 20.04.6 LTS

- CUDA version: 11.4

- Testing GPU: NVIDIA GeForce RTX 3090

A conda environment named valor can be created and activated with:

conda env create -f environment.yaml

conda activate valor

We recommend working directly with our pre-extracted features for simplicity. If you still wish to run feature extraction and pseudo-label generation yourself, please follow the optional instructions below.

Video downloading (optional)

You can download the videos listed in a CSV file with the following command. We provide an example.csv to demonstrate the process of downloading a video.

python ./utils/download_dataset.py --videos_saved_dir ./data/raw_videos --label_all_dataset ./data/example.csv

After downloading the videos to data/raw_videos/, extract video frames at 8 fps from each video with the following command. It extracts the frames of the video downloaded by the command above.

python ./utils/extract_frames.py --video_path ./data/raw_videos --out_dir ./data/video_frames

However, there are some potential issues when using these downloaded videos. For more details, please see here.
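For readers who want a sense of what 8 fps frame extraction involves, the sketch below builds an ffmpeg command using ffmpeg's fps video filter. It is only an illustration under stated assumptions: it is not the implementation in utils/extract_frames.py, the function names and the frame file name pattern are hypothetical, and it assumes ffmpeg is installed and on the PATH.

```python
import subprocess
from pathlib import Path
from typing import List


def build_ffmpeg_command(video_path: Path, out_dir: Path, fps: int = 8) -> List[str]:
    """Build an ffmpeg command that samples `fps` frames per second."""
    # One sub-directory per video ID, mirroring data/video_frames/<video_id>/.
    frame_dir = out_dir / video_path.stem
    # Hypothetical file name pattern; the repository's script may name frames differently.
    pattern = frame_dir / "frame_%04d.jpg"
    return [
        "ffmpeg",
        "-i", str(video_path),
        "-vf", f"fps={fps}",  # resample the video stream to a fixed frame rate
        str(pattern),
    ]


def extract_frames(video_path: Path, out_dir: Path, fps: int = 8) -> None:
    """Run ffmpeg on a single video (requires ffmpeg to be installed)."""
    cmd = build_ffmpeg_command(video_path, out_dir, fps)
    # ffmpeg does not create the output directory itself.
    Path(cmd[-1]).parent.mkdir(parents=True, exist_ok=True)
    subprocess.run(cmd, check=True)
```

Calling extract_frames(Path("data/raw_videos/00BDwKBD5i8.mp4"), Path("data/video_frames")) would, under these assumptions, write the frames to data/video_frames/00BDwKBD5i8/.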

Please download the LLP dataset annotations (6 CSV files) from AVVP-ECCV20 and put them in data/.

Please download the audio features (VGGish), 2D visual features (ResNet152), and 3D visual features (ResNet (2+1)D) from AVVP-ECCV20 and put them in data/feats/.

Option 1

Please download the visual features and segment-level pseudo labels from CLIP from this Google Drive link, put the file in data/, and unzip it with the following command:

unzip CLIP.zip

Option 2

The visual features and pseudo labels can also be generated with the following command if the video frames from all videos are prepared in data/video_frames/:

python ./utils/clip_preprocess.py --print_progress

For example, the features and pseudo labels of the video downloaded above can be generated in data/example_v_feats/ and data/example_v_labels/, respectively, with the following command:

python ./utils/clip_preprocess.py --label_all_dataset ./data/example.csv --pseudo_labels_saved_dir ./data/example_v_labels --visual_feats_saved_dir ./data/example_v_feats --print_progress

Option 1

Please download the audio features and segment-level pseudo labels from CLAP from this Google Drive link, put the file in data/, and unzip it with the following command:

unzip CLAP.zip

Option 2

The audio features and pseudo labels can also be generated with the following command if raw videos are prepared in data/raw_videos/:

python ./utils/clap_preprocess.py --print_progress

For example, the features and pseudo labels of the video downloaded above can be generated in data/example_a_feats/ and data/example_a_labels/, respectively, with the following command:

python ./utils/clap_preprocess.py --label_all_dataset ./data/example.csv --pseudo_labels_saved_dir ./data/example_a_labels --audio_feats_saved_dir ./data/example_a_feats --print_progress

Please make sure that the file structure matches the following.

File structure

> data/
├── AVVP_dataset_full.csv
├── AVVP_eval_audio.csv
├── AVVP_eval_visual.csv
├── AVVP_test_pd.csv
├── AVVP_train.csv
├── AVVP_val_pd.csv
├── feats/
│   ├── r2plus1d_18/
│   ├── res152/
│   └── vggish/
├── CLIP/
│   ├── features/
│   └── segment_pseudo_labels/
├── CLAP/
│   ├── features/
│   └── segment_pseudo_labels/
├── video_frames/ (optional)
│   ├── 00BDwKBD5i8/
│   ├── 00fs8Gpipss/
│   └── ...
└── raw_videos/ (optional)
    ├── 00BDwKBD5i8.mp4
    ├── 00fs8Gpipss.mp4
    └── ...
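Before training or testing, it may help to verify the layout above with a short script. The following is a minimal sketch, not part of the repository: the function name find_missing is hypothetical, and it only checks the required entries listed in the file structure (the optional video_frames/ and raw_videos/ directories are skipped).

```python
from pathlib import Path
from typing import List

# Required annotation files, as listed in the file structure above.
EXPECTED_FILES = [
    "AVVP_dataset_full.csv",
    "AVVP_eval_audio.csv",
    "AVVP_eval_visual.csv",
    "AVVP_test_pd.csv",
    "AVVP_train.csv",
    "AVVP_val_pd.csv",
]

# Required feature and pseudo-label directories.
EXPECTED_DIRS = [
    "feats/r2plus1d_18",
    "feats/res152",
    "feats/vggish",
    "CLIP/features",
    "CLIP/segment_pseudo_labels",
    "CLAP/features",
    "CLAP/segment_pseudo_labels",
]


def find_missing(data_root: Path) -> List[str]:
    """Return the required files and directories absent under data_root."""
    missing = [f for f in EXPECTED_FILES if not (data_root / f).is_file()]
    missing += [d + "/" for d in EXPECTED_DIRS if not (data_root / d).is_dir()]
    return missing


if __name__ == "__main__":
    missing = find_missing(Path("data"))
    if missing:
        print("Missing entries under data/:")
        for entry in missing:
            print("  " + entry)
    else:
        print("data/ matches the expected layout.")
```

Run the script from the repository root so that the relative path data/ resolves correctly.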

Please download the trained models from this Google Drive link and put the models in their corresponding model directory.

File structure

> models/
├── model_VALOR/
│   └── checkpoint_best.pt
├── model_VALOR+/
│   └── checkpoint_best.pt
└── model_VALOR++/
    └── checkpoint_best.pt

We provide some sample scripts for training VALOR, VALOR+, and VALOR++.

bash scripts/train_valor.sh

bash scripts/train_valor+.sh

bash scripts/train_valor++.sh

Note: If you are evaluating models produced by the training scripts above, change --model_name model_VALOR to --model_name model_VALOR_reproduce in the corresponding test script.

bash scripts/test_valor.sh

bash scripts/test_valor+.sh

bash scripts/test_valor++.sh

The VALOR codebase builds heavily on the codebases of AVVP-ECCV20 and MM-Pyramid. We sincerely thank the authors for open-sourcing their code. We also thank the authors of CLIP and CLAP for open-sourcing their pre-trained models.

If you find this code useful for your research, please consider citing:

@inproceedings{lai2023modality,
  title={Modality-Independent Teachers Meet Weakly-Supervised Audio-Visual Event Parser},
  author={Yung-Hsuan Lai and Yen-Chun Chen and Yu-Chiang Frank Wang},
  booktitle={NeurIPS},
  year={2023}
}

This project is released under the MIT License.