I am *not* a researcher or scientist, just a regular guy who follows his interests and tries to figure out interesting stuff. Since I love Emacs and Org mode, why not track all the papers I read in a single Org file? This is a lifelong repo.

| Date | Paper |

| 20240423 | A Survey of Embodied AI: From Simulators to Research Tasks |

| 20240424 | Why Functional Programming Matters |

| 20240425 | Recurrent Neural Networks (RNNs): A gentle Introduction and Overview |

| 20240426 | Neural Machine Translation by Jointly Learning to Align and Translate |

| 20240427 | A General Survey on Attention Mechanisms in Deep Learning |

| 20240428 | MEGALODON: Efficient LLM Pretraining and Inference with Unlimited Context Length |

| 20240429 | Mega: Moving Average Equipped Gated Attention |

| 20240430 | The Next Decade in AI |

| 20240501 | The Bitter Lesson |

| 20240502 | KAN: Kolmogorov–Arnold Networks |

| 20240503 | Multilayer feedforward networks are universal approximators |

| 20240504 | Sequence to Sequence Learning with Neural Networks |

| 20240505 | Translating Videos to Natural Language Using Deep Recurrent Neural Networks |

| 20240506 | Summarizing Source Code using a Neural Attention Model |

| 20240507 | Learning to Control Fast-Weight Memories: An Alternative to Dynamic Recurrent Networks |

| 20240508 | Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention |

| 20240509 | BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding |

| 20240510 | Language Models are Unsupervised Multitask Learners |

| 20240511 | Improving Language Understanding by Generative Pre-Training |

| 20240512 | Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond |

| 20240513 | Cramming: Training a Language Model on a Single GPU in One Day |

| 20240514 | Autonomous LLM-driven research from data to human-verifiable research papers |

| 20240515 | LoRA: Low-Rank Adaptation of Large Language Models |

| 20240516 | When to Retrieve: Teaching LLMs to Utilize Information Retrieval Effectively |

| 20240517 | A PRIMER ON THE INNER WORKINGS OF TRANSFORMER-BASED LANGUAGE MODELS |

| 20240518 | Is artificial consciousness achievable? Lessons from the human brain |

| 20240519 | Teaching Algorithm Design: A Literature Review |

| 20240520 | How Good Are Low-bit Quantized LLAMA3 Models? An Empirical Study |

| 20240521 | A Survey on Retrieval-Augmented Text Generation for Large Language Models |

| 20240522 | Best Practices and Lessons Learned on Synthetic Data for Language Models |

| 20240523 | Exploring the Limits of Language Modeling |

| 20240524 | The First Law of Complexodynamics |

| 20240525 | The Unreasonable Effectiveness of Recurrent Neural Networks |

| 20240526 | Recurrent Models of Visual Attention |

| 20240527 | Neural Turing Machines |

| 20240528 | Relational recurrent neural networks |

| 20240529 | Keeping Neural Networks Simple by Minimizing the Description Length of the Weights |

| 20240530 | RECURRENT NEURAL NETWORK REGULARIZATION |

| 20240531 | Layer Normalization |

| 20240601 | Scaling Laws for Neural Language Models |

| 20240602 | Deep Speech 2: End-to-End Speech Recognition in English and Mandarin |

| 20240603 | A Tutorial Introduction to the Minimum Description Length Principle |

| 20240604 | ORDER MATTERS: SEQUENCE TO SEQUENCE FOR SETS |

| 20240605 | Pointer Networks |

| 20240606 | Deep Residual Learning for Image Recognition |

| 20240607 | The Shattered Gradients Problem: If resnets are the answer, then what is the question? |

| 20240608 | Scaling and evaluating sparse autoencoders |

| 20240612 | Identity Mappings in Deep Residual Networks |

| 20240613 | Quantifying the Rise and Fall of Complexity in Closed Systems: The Coffee Automaton |

| 20240614 | VARIATIONAL LOSSY AUTOENCODER |

| 20240617 | A simple neural network module for relational reasoning |

| 20240619 | The Dawning of a New Era in Applied Mathematics |

| 20240620 | LANGUAGE MODELING IS COMPRESSION |

| 20240625 | Large Language Model Evaluation via Matrix Entropy |

| 20240626 | The Platonic Representation Hypothesis |

| 20240627 | Superlinear Returns |

| 20240628 | How to Do Great Work |

| 20240703 | The Best Essay |

| 20240704 | Life is Short |

| 20240705 | Putting Ideas into Words |

| 20240708 | How to think in writing |

| 20240709 | C++ design patterns for low-latency applications including high-frequency trading |

| 20240710 | An Introduction to Vision-Language Modeling |

| 20240711 | Being a Noob |

| 20240712 | How to Start Google |

| 20240713 | RT-1: ROBOTICS TRANSFORMER FOR REAL-WORLD CONTROL AT SCALE |

| 20240715 | RT-2: Vision-Language-Action Models Transfer |

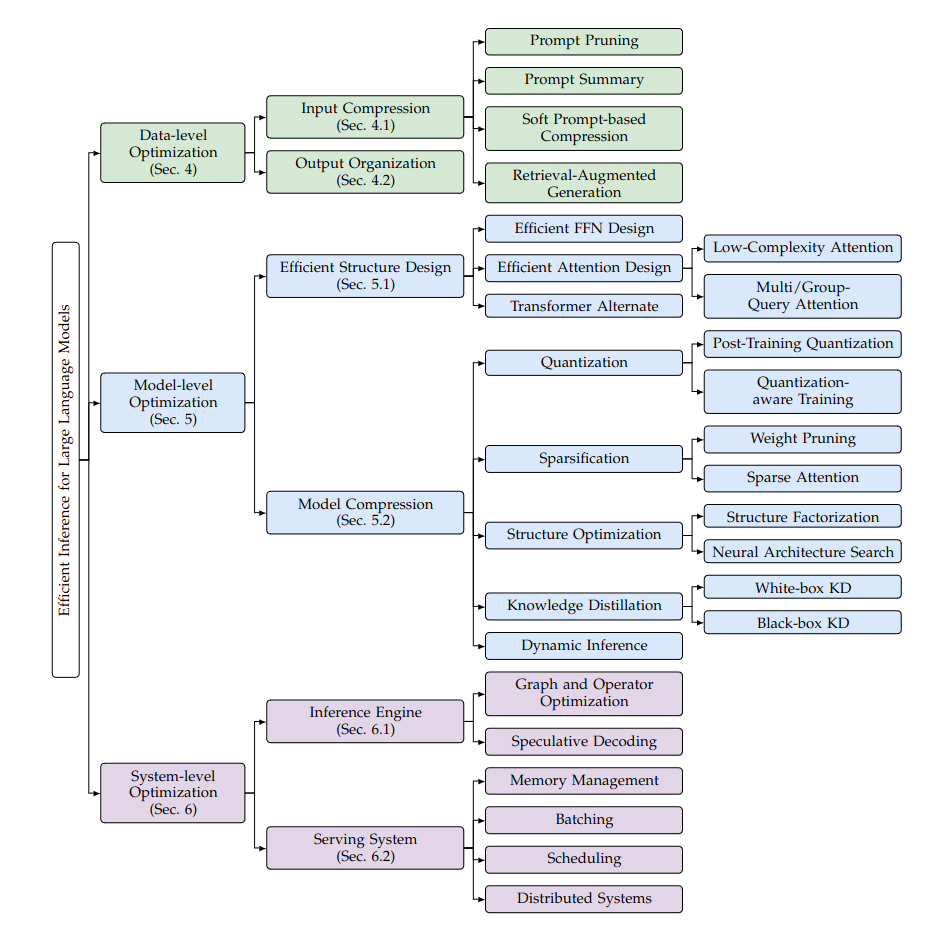

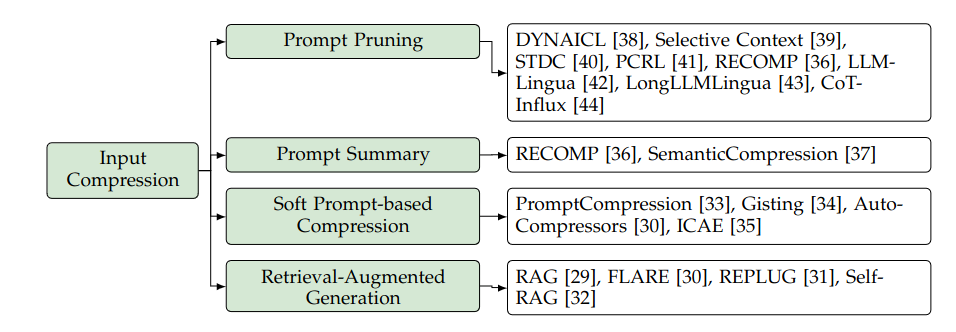

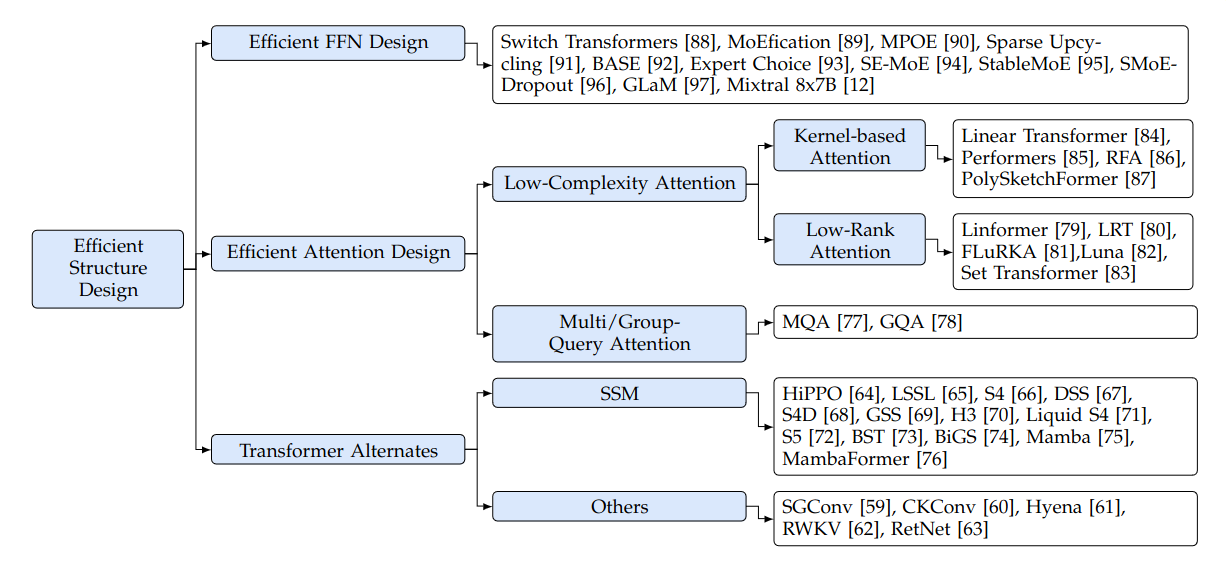

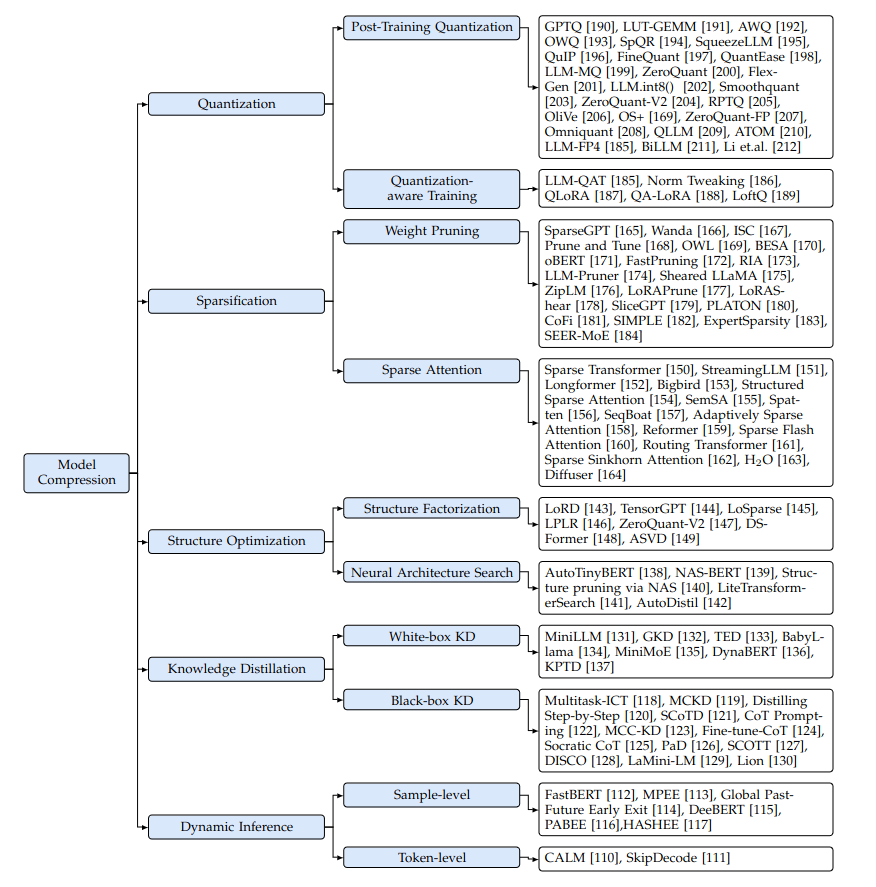

| 20240716 | A Survey on Efficient Inference for Large Language Models |

| 20240717 | Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems |

| 20240718 | Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structures |

| 20240719 | End-To-End Planning of Autonomous Driving in Industry and Academia: 2022-2023 |

| 20240729 | The Right Kind of Stubborn |

- Paper: A Survey of Embodied AI: From Simulators to Research Tasks

- Links: https://arxiv.org/pdf/2103.04918.pdf

- Ideas:

- Embodied AI Simulators: DeepMind Lab, AI2-THOR, SAPIEN, VirtualHome, VRKitchen, ThreeDWorld, CHALET, iGibson, and Habitat-Sim.

- Paper: Why Functional Programming Matters

- Links: https://www.cs.kent.ac.uk/people/staff/dat/miranda/whyfp90.pdf

- Ideas:

- Functional programming improves modularity, and with it maintainability, through higher-order functions and lazy evaluation.
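Hughes illustrates this with Newton-Raphson square roots: one function lazily generates an infinite stream of approximations, another decides when to stop, and the two are glued together. Below is a rough Python translation, using a generator as a stand-in for Miranda's lazy lists. The names `repeat` and `within` follow the paper; `next_approx` is my rename of the paper's `next`, since `next` is a Python builtin.

```python
from typing import Callable, Iterator


def repeat(f: Callable[[float], float], a: float) -> Iterator[float]:
    """Lazily yield a, f(a), f(f(a)), ...: an infinite stream of approximations."""
    while True:
        yield a
        a = f(a)


def within(eps: float, xs: Iterator[float]) -> float:
    """Consume the stream until two successive values differ by at most eps."""
    prev = next(xs)
    for cur in xs:
        if abs(prev - cur) <= eps:
            return cur
        prev = cur
    raise ValueError("stream ended before converging")


def next_approx(n: float) -> Callable[[float], float]:
    """One Newton-Raphson step for sqrt(n)."""
    return lambda x: (x + n / x) / 2


def sqrt(a0: float, eps: float, n: float) -> float:
    # Generation (repeat) and termination (within) are independent modules,
    # glued together only here.
    return within(eps, repeat(next_approx(n), a0))


print(sqrt(1.0, 1e-12, 2.0))
```

Swapping `within` for a different stopping rule (the paper also has a relative-error version) does not touch the generator at all, which is the modularity argument in miniature.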

- Paper: Recurrent Neural Networks (RNNs): A gentle Introduction and Overview

- Links: https://arxiv.org/pdf/1912.05911.pdf

- Ideas:

- RNNs deal with sequential data.

- BPTT (Backpropagation Through Time): unroll the network over the time steps and backpropagate the loss through the unrolled graph; because the weights are shared across steps, their gradients are summed over all time steps.

- LSTM (Long Short-Term Memory): designed to mitigate the vanishing gradient problem; it introduces a gated memory cell that decides what information to store, forget, and output. (What information, exactly?)

- DRNN (Deep Recurrent Neural Networks): stack ordinary RNN layers on top of each other.

- BRNN (Bidirectional Recurrent Neural Networks): the authors have a section on this, but I did not get much out of it.

- Seq2Seq: What problem does the seq2seq (encoder-decoder) structure solve?

- Attention & Transformers: Why does attention work? Why do skip connections work?

- Pointer Networks
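To make BPTT concrete for myself, here is a minimal sketch of a scalar vanilla RNN, $h_t = \tanh(W h_{t-1} + U x_t)$, with a loss on the last hidden state only (a simplifying assumption of mine). BPTT walks the unrolled loop backwards, and because the same $W$ and $U$ are reused at every step, their gradients are accumulated over time steps. A finite-difference check confirms the result. The inputs and weights are arbitrary numbers.

```python
import math


def rnn_forward(W, U, xs, h0=0.0):
    """Scalar vanilla RNN: h_t = tanh(W * h_{t-1} + U * x_t). Returns [h_0, ..., h_T]."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(W * hs[-1] + U * x))
    return hs


def loss(W, U, xs, target):
    # Squared error on the final hidden state only, to keep the example small
    return 0.5 * (rnn_forward(W, U, xs)[-1] - target) ** 2


def bptt(W, U, xs, target):
    """Backpropagation through time: traverse the unrolled graph backwards."""
    hs = rnn_forward(W, U, xs)
    dW = dU = 0.0
    dh = hs[-1] - target                # dL/dh_T
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)    # back through tanh
        dW += da * hs[t - 1]            # W is shared across steps: accumulate
        dU += da * xs[t - 1]
        dh = da * W                     # gradient flowing to h_{t-1}
    return dW, dU


xs, W, U, target = [0.5, -1.0, 0.3, 0.8], 0.7, 0.4, 0.2
dW, dU = bptt(W, U, xs, target)
eps = 1e-6
num_dW = (loss(W + eps, U, xs, target) - loss(W - eps, U, xs, target)) / (2 * eps)
num_dU = (loss(W, U + eps, xs, target) - loss(W, U - eps, xs, target)) / (2 * eps)
print(dW, num_dW)  # the two estimates should agree closely
print(dU, num_dU)
```

The line `dh = da * W` is also where vanishing gradients come from: the gradient reaching early steps is a product of many factors `W * (1 - h_t**2)`, which shrinks quickly when they are below 1.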

- Paper: Neural Machine Translation by Jointly Learning to Align and Translate

- Links: https://arxiv.org/pdf/1409.0473

- Ideas:

What is the difference from the plain encoder-decoder architecture?

- This link may help: https://slds-lmu.github.io/seminar_nlp_ss20/attention-and-self-attention-for-nlp.html

- A bidirectional RNN as the encoder and a decoder that searches through the source sentence during translation. This architecture leads to an attention mechanism in the decoder.
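A minimal NumPy sketch of the decoder-side (additive) attention step, assuming the usual formulation $e_j = v_a^\top \tanh(W_a s_{i-1} + U_a h_j)$, $\alpha = \mathrm{softmax}(e)$, $c_i = \sum_j \alpha_j h_j$. The weights are random placeholders rather than trained parameters, and the dimensions are arbitrary.

```python
import numpy as np


def softmax(z):
    z = z - z.max()              # for numerical stability
    e = np.exp(z)
    return e / e.sum()


def additive_attention(s_prev, H, W_a, U_a, v_a):
    """s_prev: (d_s,) previous decoder state; H: (T, d_h) encoder annotations
    (in the paper, concatenated forward/backward RNN states).
    Returns the context vector (d_h,) and the alignment weights (T,)."""
    scores = np.tanh(W_a @ s_prev + H @ U_a.T) @ v_a   # e_j for each source position j
    alpha = softmax(scores)                             # weights over source positions
    context = alpha @ H                                 # expected annotation
    return context, alpha


rng = np.random.default_rng(0)
T, d_h, d_s, d_a = 5, 8, 6, 4
H = rng.normal(size=(T, d_h))
s_prev = rng.normal(size=d_s)
W_a, U_a, v_a = rng.normal(size=(d_a, d_s)), rng.normal(size=(d_a, d_h)), rng.normal(size=d_a)
context, alpha = additive_attention(s_prev, H, W_a, U_a, v_a)
print(alpha.round(3), context.shape)
```

This is my answer to the question above: the plain encoder-decoder squeezes the whole source into one fixed vector, while here a fresh context vector is computed at every decoder step from all encoder states.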

- paper: A General Survey on Attention Mechanisms in Deep Learning

- links: https://arxiv.org/pdf/2203.14263

- ideas:

- The authors define a task model with four components: (1) the feature model, (2) the query model, (3) the attention model, and (4) the output model.

- feature model: extracts features (it can be an RNN, a CNN, and …), turning the input $X_n$ into feature vectors $f_n$.

- query model: a query tells the model which feature vectors $f_n$ to attend to.

- attention model: given the input query $q_n$ and the feature vectors $f_n$, the model extracts the key matrix $K_n$ and the value matrix $V_n$ from $f_n$. Traditionally, this is done with linear transformations using the weight matrices $W_K$ and $W_V$.

- Attention mechanisms can be classified into three categories: query-related, feature-related, and general (related to neither the query nor the features).

To learn more about attention mechanisms, this page https://slds-lmu.github.io/seminar_nlp_ss20/attention-and-self-attention-for-nlp.html and the 3Blue1Brown video https://www.3blue1brown.com/lessons/attention are helpful.
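A minimal sketch of the survey's path from features to output, under my own assumptions: the feature model is skipped (random vectors stand in for the RNN/CNN features $f_n$), the query model's output is likewise a random vector, keys and values come from linear maps $W_K$ and $W_V$ as described above, and the score function is scaled dot product (one of several the survey covers). The output model would consume the returned context vector.

```python
import numpy as np


def attention_model(q, F, W_K, W_V):
    """q: (d_q,) query; F: (n_f, d_f) feature vectors, one per row.
    Returns the context vector (d_v,) and the attention weights (n_f,)."""
    K = F @ W_K                                   # keys,   (n_f, d_q)
    V = F @ W_V                                   # values, (n_f, d_v)
    scores = K @ q / np.sqrt(q.shape[0])          # scaled dot-product score per feature
    scores -= scores.max()                        # numerical stability
    a = np.exp(scores) / np.exp(scores).sum()     # softmax alignment
    return a @ V, a


rng = np.random.default_rng(0)
n_f, d_f, d_q, d_v = 6, 10, 4, 3
F = rng.normal(size=(n_f, d_f))                   # stand-in for the feature model's output
q = rng.normal(size=d_q)                          # stand-in for the query model's output
W_K, W_V = rng.normal(size=(d_f, d_q)), rng.normal(size=(d_f, d_v))
context, a = attention_model(q, F, W_K, W_V)
print(a.round(3), context)
```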

- paper: MEGALODON: Efficient LLM Pretraining and Inference with Unlimited Context Length

- links: https://arxiv.org/pdf/2404.08801

- ideas:

- Limitations of the traditional Transformer: quadratic computational complexity and limited inductive bias.

- The paper introduces the complex exponential moving average (CEMA) component, a timestep normalization layer, normalized attention, and pre-norm with a two-hop residual configuration.

Q1: This paper builds on the MEGA architecture, but what is MEGA? Q2: Why can this architecture deal with unlimited context length?

Evaluation on long-context modeling, including perplexity in various context lengths up to 2M and long-context QA tasks in Scrolls (Parisotto et al.,

I don't understand this part yet…

- paper: Mega: Moving Average Equipped Gated Attention

- links: https://arxiv.org/pdf/2209.10655

- ideas:

- Common approaches to sequence modeling: self-attention and EMA (exponential moving average).

Well, this kind of theoretical paper is too difficult for me. Maybe I should start with the basic ideas and understand the concepts by doing a project.
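To get a feel for the EMA side, here is a minimal sketch of a per-dimension exponential moving average over a sequence, $y_t = \alpha \odot x_t + (1 - \alpha \odot \delta) \odot y_{t-1}$. As far as I understand, Mega uses a damped, multi-dimensional variant of this with learned $\alpha$ and damping $\delta$; here both are fixed numbers I picked, and Mega's dimension expansion and attention part are left out. With $\delta = 1$ it is the plain EMA.

```python
import numpy as np


def damped_ema(x, alpha, delta):
    """x: (T, d) sequence; alpha, delta: (d,) values in (0, 1].
    y_t = alpha * x_t + (1 - alpha * delta) * y_{t-1}, with y_0 = 0."""
    y = np.zeros(x.shape[1])
    out = np.empty_like(x, dtype=float)
    for t in range(x.shape[0]):
        y = alpha * x[t] + (1 - alpha * delta) * y
        out[t] = y
    return out


x = np.ones((50, 2))                        # constant input signal
alpha = np.array([0.1, 0.5])                # small alpha = long memory, smoother output
y = damped_ema(x, alpha, delta=np.ones(2))  # delta = 1 gives the plain EMA
print(y[[0, 4, 49]].round(3))
```

Each output is a weighted sum of all past inputs with exponentially decaying weights, which builds in a bias toward recent positions; plain attention has no such built-in preference.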

- paper: The Next Decade in AI

- links: https://arxiv.org/pdf/2002.06177

- ideas:

- The author cites “The Bitter Lesson” by Rich Sutton. I have seen it mentioned in many places; I should check it out.

- claim1:

*To build a robust, knowledge-driven approach to AI we must have the machinery of symbol-manipulation in our toolkit. Too much of useful knowledge is abstract to make do without tools that represent and manipulate abstraction, and to date, the only machinery that we know of that can manipulate such abstract knowledge reliably is the apparatus of symbol-manipulation.*

- claim2: properties of a robust artificial intelligence:

- have the ability to learn new knowledge

- can learn knowledge that is symbolically represented.

- significant knowledge is likely to be abstract.

- rules and exceptions coexist.

- Some significant fraction of the knowledge that a robust system draws on is likely to be causal, and to support counterfactuals.

- Some small but important subset of human knowledge is likely to be innate; robust AI, too, should start with some important prior knowledge.

- claim3: rather than starting each new AI system from scratch, as a blank slate, with little knowledge of the world, we should seek to build learning systems that start with initial frameworks for domains like time, space, and causality, in order to speed up learning and massively constrain the hypothesis space.

- Knowledge by itself is not enough; it has to be put into practice with tools of reasoning.

a reasoning system that can leverage large-scale background knowledge efficiently, even when available information is incomplete is a prerequisite to robustness.

- paper: KAN: Kolmogorov–Arnold Networks

- links: https://arxiv.org/pdf/2404.19756

- ideas:

- claim1: based on the Kolmogorov-Arnold representation theorem. What is the Kolmogorov-Arnold representation theorem? Why can it represent any function, like the Universal Approximation Theorem?

- claim2: MLPs have learnable weights on edges (and fixed activation functions on nodes); KANs have learnable activation functions on edges. Tomorrow's paper is about the universal approximation theorem.

- claim3: KANs’ nodes simply sum incoming signals without applying any non-linearities

- claim4: KANs are nothing more than combinations of splines. What are splines?

- claim5: Currently, the biggest bottleneck of KANs lies in its slow training. KANs are usually 10x slower than MLPs, given the same number of parameters. We should be honest that we did not try hard to optimize KANs’ efficiency though, so we deem KANs’ slow training more as an engineering problem to be improved in the future rather than a fundamental limitation. If one wants to train a model fast, one should use MLPs. In other cases, however, KANs should be comparable or better than MLPs, which makes them worth trying.
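Two things helped me with claim1 and claim4. The Kolmogorov-Arnold representation theorem says that any continuous function on $[0,1]^n$ can be written as $f(x_1,\dots,x_n) = \sum_{q=0}^{2n} \Phi_q\left(\sum_{p=1}^{n} \phi_{q,p}(x_p)\right)$, so only sums and univariate functions are needed. A spline is a piecewise polynomial built from basis functions $B_i$, and a KAN edge function can be written as $\phi(x) = \sum_i c_i B_i(x)$ with learnable $c_i$. Below is a minimal sketch of a B-spline basis (Cox-de Boor recursion) and one such edge function; the grid, spline order and coefficients are arbitrary, and extras such as the residual base function the paper adds are left out.

```python
def bspline_basis(i, k, t, x):
    """Cox-de Boor recursion: the i-th B-spline of degree k on knots t, evaluated at x."""
    if k == 0:
        return 1.0 if t[i] <= x < t[i + 1] else 0.0
    left = right = 0.0
    if t[i + k] != t[i]:
        left = (x - t[i]) / (t[i + k] - t[i]) * bspline_basis(i, k - 1, t, x)
    if t[i + k + 1] != t[i + 1]:
        right = (t[i + k + 1] - x) / (t[i + k + 1] - t[i + 1]) * bspline_basis(i + 1, k - 1, t, x)
    return left + right


def make_knots(a, b, grid, k):
    """Uniform grid on [a, b] with `grid` intervals, extended by k knots on each side."""
    h = (b - a) / grid
    return [a + (j - k) * h for j in range(grid + 2 * k + 1)]


def edge_function(coeffs, k, t, x):
    """A KAN-style learnable activation: phi(x) = sum_i c_i * B_i(x)."""
    return sum(c * bspline_basis(i, k, t, x) for i, c in enumerate(coeffs))


k, grid = 3, 5                                     # cubic splines, 5 grid intervals
t = make_knots(-1.0, 1.0, grid, k)
n_basis = len(t) - k - 1                           # = grid + k basis functions
coeffs = [0.5 * i - 1.0 for i in range(n_basis)]   # would be learned in a KAN

# A KAN node just sums its incoming edge functions (claim3: no extra nonlinearity).
inputs = [0.3, -0.7]
node_output = sum(edge_function(coeffs, k, t, x) for x in inputs)
print(n_basis, node_output)
```

A handy sanity check: inside the grid the basis functions sum to 1, so setting every coefficient to the same constant reproduces that constant.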

- paper: Multilayer feedforward networks are universal approximators

- links: https://cognitivemedium.com/magic_paper/assets/Hornik.pdf

- ideas:

- claim1: Advocates of the virtues of multilayer feedforward networks (e.g., Hecht-Nielsen, 1987) often cite Kolmogorov’s (1957) superposition theorem or its more recent improvements (e.g., Lorentz, 1976) in support of their capabilities. However, these results require a different unknown transformation (g in Lorentz’s notation) for each continuous function to be represented, while specifying an exact upper limit to the number of intermediate units needed for the representation.

- Anyway, this paper proves that multilayer feedforward networks are a class of universal approximators. While reading it, I wondered why encoder-decoder networks work and who proposed them. That's tomorrow's topic.
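This is not the proof in the paper (Hornik et al. argue much more generally), but a constructive intuition that convinced me: one hidden layer of steep sigmoids can build a staircase that tracks a continuous function on an interval. Each hidden unit contributes one step at a midpoint $m_i$ with height $f(x_i) - f(x_{i-1})$. The target function, number of units and steepness below are my own picks.

```python
import math


def sigmoid(z):
    # tanh form avoids overflow in math.exp for large |z|
    return 0.5 * (1.0 + math.tanh(0.5 * z))


def staircase_net(f, a, b, n_units, steepness):
    """One-hidden-layer network g(x) = f(a) + sum_i w_i * sigmoid(steepness * (x - m_i))."""
    xs = [a + (b - a) * i / n_units for i in range(n_units + 1)]
    mids = [(xs[i - 1] + xs[i]) / 2 for i in range(1, n_units + 1)]      # hidden biases
    heights = [f(xs[i]) - f(xs[i - 1]) for i in range(1, n_units + 1)]   # output weights

    def g(x):
        return f(a) + sum(w * sigmoid(steepness * (x - m)) for w, m in zip(heights, mids))

    return g


g = staircase_net(math.sin, -math.pi, math.pi, n_units=200, steepness=2000.0)
test_points = [-math.pi + 2 * math.pi * j / 997 for j in range(998)]
max_err = max(abs(g(x) - math.sin(x)) for x in test_points)
print(max_err)  # shrinks as n_units (and steepness) grow
```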

- paper: Sequence to Sequence Learning with Neural Networks

- links: https://arxiv.org/pdf/1409.3215

- ideas:

- claim1: DNNs can only be applied to problems whose inputs and targets can be sensibly encoded with vectors of fixed dimensionality.

- claim2: network architecture: one LSTM for the encoder and another LSTM for the decoder. What if the encoder and the decoder have different network structures?

- paper: Translating Videos to Natural Language Using Deep Recurrent Neural Networks

- links: https://arxiv.org/pdf/1412.4729

- ideas:

- claim1:

```
video -> cnn -> lstm -> label
```

It seems like this pipeline (a CNN that extracts features, followed by an LSTM that generates the output) is a kind of encoder-decoder network.


- paper: Summarizing Source Code using a Neural Attention Model

- links: https://github.com/sriniiyer/codenn/blob/master/summarizing_source_code.pdf

- ideas:

- claim1: dataset: Stack Overflow posts with the C# tag; model: LSTM with attention.

Today's paper is about LLMs in code generation. I chose it from these slides https://web.stanford.edu/class/cs224g/slides/Code%20Generation%20with%20LLMs.pdf and discovered Stanford CS 224G, a great resource for keeping track of frontier LLM applications.

- paper: Learning to Control Fast-Weight Memories: An Alternative to Dynamic Recurrent Networks

- links: https://ieeexplore.ieee.org/document/6796337

- ideas:

- Two feedforward networks: the first (slow) network produces the “fast weights” of the second network, which serve as short-term memory; the first network acts as a memory controller.

Well, this URL is worth a look: https://people.idsia.ch//~juergen/most-cited-neural-nets.html
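A minimal sketch of the fast-weight idea as I understand it, in outer-product form: the fast weight matrix is updated by $W_{fast} \leftarrow W_{fast} + v k^\top$ and then used as an ordinary linear layer, so reading with a stored key retrieves its value. In the paper the slow network would produce what gets written; here keys and values are simply given, and the orthonormal keys (chosen so retrieval is exact) and sizes are my own simplifications.

```python
import numpy as np


class FastWeightMemory:
    """Short-term memory stored in a weight matrix that changes at every step."""

    def __init__(self, d_key, d_value):
        self.W_fast = np.zeros((d_value, d_key))

    def write(self, key, value):
        # Outer-product (Hebbian-style) update of the fast weights
        self.W_fast += np.outer(value, key)

    def read(self, query):
        # The fast weights act as an ordinary linear layer on the query
        return self.W_fast @ query


rng = np.random.default_rng(0)
d_key, d_value, n_items = 8, 3, 4
# Orthonormal keys make retrieval exact (a simplification; the slow network
# would normally produce keys and values).
keys = np.linalg.qr(rng.normal(size=(d_key, d_key)))[0][:, :n_items].T
values = rng.normal(size=(n_items, d_value))

mem = FastWeightMemory(d_key, d_value)
for k, v in zip(keys, values):
    mem.write(k, v)
print(np.allclose(mem.read(keys[2]), values[2]))
```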

- paper: Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention

- links: https://arxiv.org/pdf/2006.16236

- ideas:

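- claim1: traditional transformers need memory and time quadratic in the input length: for an input of length $N$, the complexity is $O(N^2)$. This paper proposes linear attention, which brings this down to $O(N)$. What’s the difference between an attention layer and a self-attention layer?

- claim2: every transformer can be seen as a recurrent neural network (more precisely, an autoregressive transformer with linear attention can be computed step by step like an RNN).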

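A minimal NumPy sketch of causal linear attention, assuming the feature map $\phi(x) = \mathrm{elu}(x) + 1$ (the one I believe the paper uses). The parallel form computes $y_i = \frac{\phi(q_i)^\top \sum_{j \le i} \phi(k_j) v_j^\top}{\phi(q_i)^\top \sum_{j \le i} \phi(k_j)}$ with an explicit $N \times N$ mask. The recurrent form carries a state $S_i = S_{i-1} + \phi(k_i) v_i^\top$ and a normalizer $z_i = z_{i-1} + \phi(k_i)$, so each new token costs the same amount of work regardless of position: that is the RNN view. Shapes and inputs are arbitrary.

```python
import numpy as np


def phi(x):
    # elu(x) + 1: a strictly positive feature map
    return np.where(x > 0, x + 1.0, np.exp(x))


def causal_linear_attention_parallel(Q, K, V):
    """O(N^2) reference: explicit causal weights phi(q_i) . phi(k_j) for j <= i."""
    A = np.tril(phi(Q) @ phi(K).T)               # (N, N) unnormalized, causally masked
    return (A @ V) / A.sum(axis=1, keepdims=True)


def causal_linear_attention_recurrent(Q, K, V):
    """Linear in N: the same computation as an RNN with state (S, z)."""
    S = np.zeros((K.shape[1], V.shape[1]))       # running sum of phi(k_j) v_j^T
    z = np.zeros(K.shape[1])                     # running sum of phi(k_j)
    out = []
    for q, k, v in zip(phi(Q), phi(K), V):
        S += np.outer(k, v)
        z += k
        out.append(q @ S / (q @ z))
    return np.array(out)


rng = np.random.default_rng(0)
N, d_k, d_v = 7, 4, 5
Q, K, V = rng.normal(size=(N, d_k)), rng.normal(size=(N, d_k)), rng.normal(size=(N, d_v))
print(np.allclose(causal_linear_attention_parallel(Q, K, V),
                  causal_linear_attention_recurrent(Q, K, V)))
```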

- paper: BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- links: https://arxiv.org/pdf/1810.04805

- ideas:

- claim1: there are two strategies for applying pre-trained language representations to downstream tasks: feature-based and fine-tuning.

- paper: Language Models are Unsupervised Multitask Learners

- links: https://d4mucfpksywv.cloudfront.net/better-language-models/language-models.pdf

- ideas:

- dataset: WebText (millions of web pages)

- language modeling: unsupervised distribution estimation from a set of examples, each composed of a variable-length sequence of symbols. https://github.com/codelucas/newspaper
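Language modeling in this sense factorizes the probability of a sequence with the chain rule, $p(x) = \prod_{n} p(s_n \mid s_1, \dots, s_{n-1})$. A minimal sketch with a toy model: to keep it tiny, I assume a bigram model (each token conditioned only on the previous one) estimated from counts on a made-up corpus, which is of course nothing like the Transformer the paper uses.

```python
import math
from collections import Counter, defaultdict

corpus = [
    "<s> the cat sat </s>",
    "<s> the dog sat </s>",
    "<s> the cat ran </s>",
]

# Count bigrams (prev -> next) over the toy corpus
counts = defaultdict(Counter)
for line in corpus:
    tokens = line.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1


def cond_prob(nxt, prev):
    """Maximum-likelihood estimate of p(next | prev); unseen contexts are not handled."""
    return counts[prev][nxt] / sum(counts[prev].values())


def sequence_log_prob(sentence):
    """Chain rule under the bigram assumption: sum of log p(s_n | s_{n-1})."""
    tokens = sentence.split()
    return sum(math.log(cond_prob(nxt, prev)) for prev, nxt in zip(tokens, tokens[1:]))


p = math.exp(sequence_log_prob("<s> the cat sat </s>"))
print(p)  # approximately 1/3 = 1 * (2/3) * (1/2) * 1
```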

- paper: Improving Language Understanding by Generative Pre-Training

- links: https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf

- ideas:

- By pre-training on a diverse corpus with long stretches of contiguous text our model acquires significant world knowledge and ability to process long-range dependencies which are then successfully transferred to solving discriminative tasks such as question answering, semantic similarity assessment, entailment determination, and text classification, improving the state of the art on 9 of the 12 datasets we study.

What kinds of corpora are suitable for pre-training?


- paper: Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond

- links: https://arxiv.org/pdf/2304.13712

- ideas:

What’s the difference between an encoder-decoder model and a decoder-only model?

- decoder-only model

- NLU tasks: text classification, named entity recognition (NER), entailment prediction, and so on.

- NLG tasks: Natural Language Generation broadly encompasses two major categories of tasks, with the goal of creating coherent, meaningful, and contextually appropriate sequences of symbols.
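One way I find helpful to see the difference between these architectures is through their attention masks. A minimal sketch, assuming standard Transformer conventions: an encoder lets every position attend to every other position (bidirectional); a decoder-only model uses a causal mask so position $i$ only sees positions $\le i$; an encoder-decoder model combines a bidirectional encoder, a causal decoder, and cross-attention from every decoder position to all encoder positions. `True` means "may attend".

```python
import numpy as np


def bidirectional_mask(n):
    # Encoder self-attention: every token sees the whole input
    return np.ones((n, n), dtype=bool)


def causal_mask(n):
    # Decoder self-attention: token i sees tokens 0..i only
    return np.tril(np.ones((n, n), dtype=bool))


def cross_attention_mask(n_dec, n_enc):
    # Encoder-decoder: each decoder token sees all encoder outputs
    return np.ones((n_dec, n_enc), dtype=bool)


def masked_softmax(scores, mask):
    # Disallowed positions get -inf before the softmax, hence weight 0
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    return w / w.sum(axis=-1, keepdims=True)


print(causal_mask(4).astype(int))
print(masked_softmax(np.zeros((4, 4)), causal_mask(4)).round(2))
```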

- paper: Cramming: Training a Language Model on a Single GPU in One Day

- links: https://arxiv.org/pdf/2212.14034

- ideas: no notes here. I followed this reading list https://magazine.sebastianraschka.com/p/understanding-large-language-models to pick papers. Maybe one day this paper will be a good starting point for implementing and training BERT on consumer hardware. I’m not sure if I will do this experiment.

- paper: Autonomous LLM-driven research from data to human-verifiable research papers

- links: https://arxiv.org/pdf/2404.17605

- ideas:

- This paper proposes data-to-paper. Crazy idea…

Starting with a human-provided dataset, the process is designed to raise hypotheses, write, debug and execute code to analyze the data and perform statistical tests, interpret the results and write well-structured scientific papers which not only describe results and conclusions but also transparently delineate the research methodologies, allowing human scientists to understand, repeat and verify the analysis. The discussion on emerging guidelines for AI-driven science (22) have served as a design framework for data-to-paper, yielding a fully transparent, traceable and verifiable workflow, and algorithmic “chaining” of data, methodology and result allowing to trace downstream results back to the part of code which generated them. The system can run with or without a predefined research goal (fixed/open-goal modalities) and with or without human interactions and feedback (copilot/autopilot modes). We performed two open-goal and two fixed-goal case studies on different public datasets (24–27) and evaluated the AI-driven research process as well as the novelty and accuracy of created scientific papers. We show that, running fully autonomously (autopilot), data-to-paper can perform complete and correct run cycles for simple goals, while for complex goals, human co-piloting becomes critical.

- paper: LoRA: Low-Rank Adaptation of Large Language Models

- links: https://arxiv.org/pdf/2106.09685

- ideas:

- claim1: LoRA: which freezes the pre-trained model weights and injects trainable rank decomposition matrices into each layer of the Transformer architecture, greatly reducing the number of trainable parameters for downstream tasks
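A minimal NumPy sketch of a LoRA-adapted linear layer, $h = W_0 x + \frac{\alpha}{r} B A x$, where $W_0$ is frozen and only $A \in \mathbb{R}^{r \times k}$ and $B \in \mathbb{R}^{d \times r}$ would be trained. Following the paper's initialization as I understand it, $A$ is random Gaussian and $B$ starts at zero, so the adapted layer initially equals the pre-trained one. The sizes, rank and $\alpha$ are arbitrary, and no training loop is shown.

```python
import numpy as np


class LoRALinear:
    def __init__(self, W0, r, alpha, rng):
        d, k = W0.shape
        self.W0 = W0                                  # frozen pre-trained weight (d, k)
        self.A = rng.normal(scale=0.01, size=(r, k))  # trainable, random init
        self.B = np.zeros((d, r))                     # trainable, zero init
        self.scale = alpha / r

    def __call__(self, x):
        # Low-rank update applied alongside the frozen weight; W0 itself never changes
        return self.W0 @ x + self.scale * (self.B @ (self.A @ x))

    def trainable_params(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(0)
d, k, r = 512, 512, 8
W0 = rng.normal(size=(d, k))
layer = LoRALinear(W0, r=r, alpha=16, rng=rng)
x = rng.normal(size=k)
print(np.allclose(layer(x), W0 @ x))        # True at init because B = 0
print(layer.trainable_params(), W0.size)    # 8192 trainable vs 262144 frozen
```

After training, the update can be merged into a single matrix $W_0 + \frac{\alpha}{r} B A$, so inference costs nothing extra.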

- paper: When to Retrieve: Teaching LLMs to Utilize Information Retrieval Effectively

- links: https://arxiv.org/pdf/2404.19705

- ideas:

- claim1: this paper proposes a method in which, when the LLM generates the special token `<RET>`, an IR system is used to retrieve external sources.
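A minimal sketch of the control flow as I understand it: ask the model first; if it answers with the special `<RET>` token instead of an answer, query an IR system and ask again with the retrieved context. `fake_llm` and `fake_retriever` are hypothetical stand-ins written for this example, not the paper's code or any real API, and the prompt format is my own.

```python
RET = "<RET>"


def answer(question, llm, retriever):
    """Call the LLM; only hit the retriever when the LLM asks for it."""
    first = llm(question)
    if first.strip() != RET:
        return first, False                      # answered from parametric memory
    context = "\n".join(retriever(question))
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return llm(prompt), True                     # answered with retrieved context


# Hypothetical stand-ins for a real model and IR system
def fake_llm(prompt):
    if "Context:" in prompt:
        return "Paris"
    return "4" if "2 + 2" in prompt else RET


def fake_retriever(query):
    return ["Paris is the capital of France."]


print(answer("What is 2 + 2?", fake_llm, fake_retriever))
print(answer("What is the capital of France?", fake_llm, fake_retriever))
```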

- paper: A PRIMER ON THE INNER WORKINGS OF TRANSFORMER-BASED LANGUAGE MODELS

- links: https://arxiv.org/pdf/2405.00208

- ideas:

- claim1: layer normalization is a common operation used to stabilize the training process of deep neural networks.

This paper is too long for me to digest. Maybe one day I’ll come back to it when I do a project on the transformer architecture.

- paper: Is artificial consciousness achievable? Lessons from the human brain

- links: https://arxiv.org/pdf/2405.04540

- ideas:

- claim1:

Given this uncertainty, we recommend not to use the same general term (i.e., consciousness) for both humans and artificial systems; to clearly specify the key differences between them; and, last but not least, to be very clear about which dimension and level of consciousness the artificial system may possibly be capable of displaying.


- paper: Teaching Algorithm Design: A Literature Review

- links: https://arxiv.org/pdf/2405.00832

- ideas:

- claim: Systematic literature reviews

- Research Question

- Protocol Development

- Search Databases

- Screen Studies

- Extract Data

- Assess Quality

- Synthesize Data

- Report Findings


- paper: How Good Are Low-bit Quantized LLAMA3 Models? An Empirical Study

- links: https://arxiv.org/pdf/2404.14047

- ideas:

- Round-To-Nearest (RTN) quantization method.

- LoRA fine-tuning quantization. What’s the difference between post-training quantization and LoRA fine-tuning?
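A minimal sketch of round-to-nearest (RTN) weight quantization, assuming a symmetric per-row scheme: each row is scaled so its largest magnitude maps to the largest signed integer, rounded, and later dequantized. Real RTN setups differ in grouping, zero points and bit widths; the shapes here are arbitrary.

```python
import numpy as np


def rtn_quantize(W, bits):
    """Symmetric per-row round-to-nearest quantization.
    Returns integer codes and per-row scales."""
    qmax = 2 ** (bits - 1) - 1                                # e.g. 7 for 4-bit
    scale = np.abs(W).max(axis=1, keepdims=True) / qmax
    scale = np.where(scale == 0, 1.0, scale)                  # avoid division by zero
    q = np.clip(np.round(W / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
W = rng.normal(size=(4, 16)).astype(np.float32)
for bits in (8, 4, 2):
    q, s = rtn_quantize(W, bits)
    err = np.abs(dequantize(q, s) - W).max()
    print(bits, err)   # the error bound (scale / 2) grows as the bit width shrinks
```

Part of my answer to the question above: RTN needs no training data or gradient steps at all; it only rescales and rounds existing weights.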

- paper: A Survey on Retrieval-Augmented Text Generation for Large Language Models

- links: https://arxiv.org/pdf/2404.10981

- ideas:

- The survey organizes the RAG paradigm into four categories: pre-retrieval, retrieval, post-retrieval, and generation.
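A toy sketch of the four stages, just to fix the vocabulary in my head. Every stage here is a made-up simplification: "pre-retrieval" is query normalization, "retrieval" ranks a hard-coded document list by word overlap, "post-retrieval" keeps the top-k, and "generation" only assembles the prompt that would be sent to an LLM (no model is called).

```python
import re

DOCS = [
    "LoRA freezes pre-trained weights and trains low-rank matrices.",
    "Layer normalization normalizes across the features of a single example.",
    "Retrieval-augmented generation adds retrieved documents to the prompt.",
]


def words(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def pre_retrieval(query):
    # Query rewriting stand-in: lowercase and tokenize
    return words(query)


def retrieval(terms, docs):
    # Score each document by the number of shared words, best first
    return sorted(((len(terms & words(d)), d) for d in docs), reverse=True)


def post_retrieval(scored, k=1):
    # Re-ranking/filtering stand-in: keep the top-k documents with a nonzero score
    return [d for s, d in scored[:k] if s > 0]


def generation(query, docs):
    # Would be sent to an LLM; here we only build the augmented prompt
    context = "\n".join(docs)
    return f"Context:\n{context}\n\nQuestion: {query}"


query = "How does LoRA train low-rank matrices?"
print(generation(query, post_retrieval(retrieval(pre_retrieval(query), DOCS))))
```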

- paper: Best Practices and Lessons Learned on Synthetic Data for Language Models

- links: https://arxiv.org/pdf/2404.07503

- ideas:

- Training with synthetic data makes evaluation decontamination harder.

- paper: Exploring the Limits of Language Modeling

- links: https://arxiv.org/pdf/1602.02410

- ideas:

- The goal of LM is to learn a probability distribution over sequences of symbols pertaining to a language

20240524: started to follow Ilya's 30 (Ilya Sutskever's reading list); well-written blog posts count as papers as well.

- paper: The First Law of Complexodynamics

- links: https://scottaaronson.blog/?p=762

- ideas:

- quote1: why does “complexity” or “interestingness” of physical systems seem to increase with time and then hit a maximum and decrease, in contrast to the entropy, which of course increases monotonically?

Question: What’s the difference between entropy in physics and information theory?

- proposal: use Kolmogorov complexity to define entropy.

- quote2: “First Law of Complexodynamics,” exhibiting exactly the behavior that Sean wants: small for the initial state, large for intermediate states, then small again once the mixing has finished.
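Kolmogorov complexity itself is uncomputable, so a common practical stand-in (and, I believe, roughly what the later coffee-automaton paper does) is the size of a compressed file. A minimal sketch with Python's `zlib`: an ordered string compresses to almost nothing, while random bytes barely compress at all. The inputs are made up. Note this only tracks the entropy-like quantity; the "complextropy" in the post is meant to be low for both of these extremes.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length of the zlib-compressed data: a crude upper bound on Kolmogorov complexity."""
    return len(zlib.compress(data, 9))


n = 10_000
ordered = b"01" * (n // 2)                               # highly regular
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(n))      # no structure to exploit

print(compressed_size(ordered), compressed_size(noisy))
```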

- paper: The Unreasonable Effectiveness of Recurrent Neural Networks

- links: https://arc.net/folder/D0472A20-9C20-4D3F-B145-D2865C0A9FEE

- ideas:

- quote1: If training vanilla neural nets is optimization over functions, training recurrent nets is optimization over programs.

Good resources for learning RNNs:

- paper: Recurrent Models of Visual Attention

- links: https://arc.net/folder/D0472A20-9C20-4D3F-B145-D2865C0A9FEE

- ideas:

- quote1: The model is a recurrent neural network (RNN) which processes inputs sequentially, attending to different locations within the images (or video frames) one at a time, and incrementally combines information from these fixations to build up a dynamic internal representation of the scene or environment.

- Partially Observable Markov Decision Process (POMDP).

- paper: Neural Turing Machines

- links: https://arxiv.org/pdf/1410.5401

- ideas:

- quote1: Fodor and Pylyshyn (Fodor and Pylyshyn, 1988) famously made two barbed claims about the limitations of neural networks for cognitive modeling. They first objected that connectionist theories were incapable of variable-binding, or the assignment of a particular datum to a particular slot in a data structure.

Neural Turing Machine:

```
External Input        External Output
          \              /
           \            /
          +------------+
          | Controller |
          +------------+
           /            \
          /              \
+------------+        +-------------+
| Read Heads |        | Write Heads |
+------------+        +-------------+
```
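A minimal NumPy sketch of two pieces of the heads as I understand them: content-based addressing (cosine similarity between a key and each memory row, sharpened by a key strength $\beta$ and normalized with a softmax) and the erase-then-add write. Location-based addressing (interpolation, shift, sharpening) and the controller are omitted; the memory contents are arbitrary.

```python
import numpy as np


def content_addressing(M, key, beta):
    """w_i proportional to exp(beta * cosine(key, M_i))."""
    sim = M @ key / (np.linalg.norm(M, axis=1) * np.linalg.norm(key) + 1e-8)
    z = beta * sim
    w = np.exp(z - z.max())
    return w / w.sum()


def read(M, w):
    # Read vector: weighted sum of memory rows
    return w @ M


def write(M, w, erase, add):
    # Erase then add, each row scaled by its attention weight
    M = M * (1 - np.outer(w, erase))
    return M + np.outer(w, add)


M = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0],
              [1.0, 1.0, 0.0]])
w = content_addressing(M, key=np.array([0.0, 1.0, 0.0]), beta=50.0)
print(w.round(3))                     # almost all weight on row 1
M2 = write(M, w, erase=np.ones(3), add=np.array([5.0, 5.0, 5.0]))
print(read(M2, w).round(2))
```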

- paper: Relational recurrent neural networks

- links: https://arxiv.org/pdf/1806.01822

- ideas:

- claim1: Relational Memory Core (RMC), which employs multi-head dot product attention to allow memories to interact

```
CORE
                 Prev. Memory
                      |
                      v
         +------------+------------+
         |            A            |
         |            |            |
         |         Residual        |
         |            |            |
         |           MLP           |
         |            |            |
         |         Residual        |
         +------------+------------+
                      |
                      v
                    Output

MULTI-HEAD DOT PRODUCT ATTENTION
                    Memory
                      |
                      v
         +-------------------------+
         |   W_q      W_k      W_v |
         |    |        |        |  |
         |  query     key    value |
         |  (q1)     (k1)     (v1) |
         |      \      |      /    |
         |      softmax(QK^T)V     |
         |            |            |
         |            v            |
         |      Updated Memory     |
         +-------------------------+

1. Compute attention weights from queries Q = [q1, q2, ...] and keys K = [k1, k2, ...]: [w1, w2, ...]
2. Normalize the weights with a row-wise softmax: [w1,1 w1,2 ...; w2,1 w2,2 ...; ...]
3. Compute the weighted average of the values V = [v1, v2, ...]: [wv1, wv2, ...]
4. Return the updated memory: [M1, M2, ...]
```

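A minimal NumPy sketch of one memory update as I read the diagram: the memory $M$ (one row per slot) attends over itself and the new input, $[M; x]$, with multi-head dot-product attention, followed by a residual connection, a row-wise MLP, and a second residual. The paper also gates the memory update LSTM-style; that part is omitted, the input is assumed to be already projected to the memory width, and all weights are random placeholders.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def multi_head_attention(M, X, Wq, Wk, Wv, n_heads):
    """Queries come from memory M; keys/values from [M; X]."""
    MX = np.vstack([M, X])
    Q, K, V = M @ Wq, MX @ Wk, MX @ Wv
    d_head = Q.shape[1] // n_heads
    heads = []
    for h in range(n_heads):
        s = slice(h * d_head, (h + 1) * d_head)
        A = softmax(Q[:, s] @ K[:, s].T / np.sqrt(d_head))   # row-wise softmax
        heads.append(A @ V[:, s])                            # weighted average of values
    return np.hstack(heads)


def rmc_step(M, x, params, n_heads=2):
    Wq, Wk, Wv, W1, W2 = params
    M = M + multi_head_attention(M, x[None, :], Wq, Wk, Wv, n_heads)  # attention + residual
    M = M + np.maximum(M @ W1, 0) @ W2                                  # MLP + residual
    return M


rng = np.random.default_rng(0)
n_slots, d = 3, 8
params = [rng.normal(scale=0.1, size=(d, d)) for _ in range(5)]
M = rng.normal(size=(n_slots, d))
for x in rng.normal(size=(4, d)):        # feed a short input sequence
    M = rmc_step(M, x, params)
print(M.shape)
```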

- paper: Keeping Neural Networks Simple by Minimizing the Description Length of the Weights

- links: https://www.cs.toronto.edu/~hinton/absps/colt93.pdf

- ideas:

- quote1: The Minimum Description Length Principle (Rissanen, 1986) asserts that the best model of some data is the one that minimizes the combined cost of describing the model and describing the misfit between the model and the data.

- paper: RECURRENT NEURAL NETWORK REGULARIZATION

- links: https://arxiv.org/pdf/1409.2329

- ideas:

- claim1: The main contribution of this paper is a recipe for applying dropout to LSTMs in a way that successfully reduces overfitting
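The recipe, as I understand it, is to apply dropout only to the non-recurrent connections (layer to layer, and at the output) and never to the hidden-to-hidden path, so information carried across time steps is not corrupted. A minimal sketch of that placement, using plain tanh RNN cells instead of LSTMs to keep it short; sizes, dropout rate and weights are arbitrary.

```python
import numpy as np


def dropout(x, p, rng):
    # Inverted dropout: zero units with probability p, rescale the rest
    if p == 0.0:
        return x
    mask = rng.random(x.shape) >= p
    return x * mask / (1.0 - p)


def stacked_rnn(xs, weights, p, rng):
    """weights: list of (W_in, W_rec) per layer.
    Dropout only on vertical (layer-to-layer) connections, never on W_rec's input."""
    hs = [np.zeros(W_rec.shape[0]) for _, W_rec in weights]
    outputs = []
    for x in xs:
        inp = x
        for layer, (W_in, W_rec) in enumerate(weights):
            inp = dropout(inp, p, rng)                              # non-recurrent: dropped
            hs[layer] = np.tanh(W_in @ inp + W_rec @ hs[layer])     # recurrent: untouched
            inp = hs[layer]
        outputs.append(dropout(inp, p, rng))                        # output connection: dropped
    return np.array(outputs)


rng = np.random.default_rng(0)
d_in, d_h, T = 3, 5, 6
weights = [(rng.normal(size=(d_h, d_in)), rng.normal(size=(d_h, d_h))),
           (rng.normal(size=(d_h, d_h)), rng.normal(size=(d_h, d_h)))]
xs = rng.normal(size=(T, d_in))
train_out = stacked_rnn(xs, weights, p=0.5, rng=rng)
eval_out = stacked_rnn(xs, weights, p=0.0, rng=rng)    # no dropout at test time
print(train_out.shape, eval_out.shape)
```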

- paper: Layer Normalization

- links: https://arxiv.org/pdf/1607.06450

- ideas:

- claim1: batch normalization uses the distribution of the summed input to a neuron over a mini-batch of training cases to compute a mean and variance which are then used to normalize the summed input to that neuron on each training case.

- claim2: layer normalization, a simple normalization method to improve the training speed for various neural network models. Unlike batch normalization, the proposed method directly estimates the normalization statistics from the summed inputs to the neurons within a hidden layer so the normalization does not introduce any new dependencies between training cases

- claim3: Layer normalization does not have such problem because its normalization terms depend only on the summed inputs to a layer at the current time-step.

Well, here is a question: *what is internal covariate shift*, and *why does it become a problem*?

Answer by Kimi:

Imagine you’re playing a game where you have to sort different colored balls into different baskets. Each basket is for a different color, like a basket for red balls, a basket for blue balls, and so on.

Now, suppose at the start you only have a few balls, and they’re not evenly spread out in terms of color. Some baskets have more red balls, some have more blue balls. When you start sorting, you learn how to tell the colors apart based on these limited balls. But if the rules of your game suddenly change, like if you get a lot more balls, or the colors of the balls change, then the way you learned to sort them might not be so accurate anymore.

In machine learning, “Internal Covariate Shift” is a bit like that situation. When we train a machine learning model, we usually use a lot of data to teach it. *But if we change the distribution of the data during training, or if we don’t have enough data to represent all the possible situations, then what the model learned might change, too. That’s what we call “Internal Covariate Shift.”*

Just like how the way you learned to sort the balls at the start of the game might not be accurate if the game’s rules change, the machine learning model might need to adjust if the data distribution changes to keep being accurate.
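For the record, the batch normalization paper defines internal covariate shift more narrowly: the change in the distribution of network activations due to the change in network parameters during training. A minimal NumPy sketch of the difference the claims above describe: batch norm computes statistics over the batch dimension, so one example's output depends on its batch-mates, while layer norm computes them over the hidden units of a single example. The learnable gain and bias are omitted, and the data are random.

```python
import numpy as np


def batch_norm(x, eps=1e-5):
    # Statistics per hidden unit, computed across the batch (axis 0)
    mu, var = x.mean(axis=0, keepdims=True), x.var(axis=0, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def layer_norm(x, eps=1e-5):
    # Statistics per example, computed across its hidden units (last axis)
    mu, var = x.mean(axis=-1, keepdims=True), x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


rng = np.random.default_rng(0)
batch = rng.normal(size=(4, 6))          # 4 examples, 6 summed inputs each
other = rng.normal(size=(4, 6))
other[0] = batch[0]                      # same first example, different batch-mates

print(np.allclose(layer_norm(batch)[0], layer_norm(other)[0]))  # True: no batch dependency
print(np.allclose(batch_norm(batch)[0], batch_norm(other)[0]))  # generally False
```

This is also why claim3 matters for RNNs: layer norm needs no per-time-step batch statistics.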

- paper: Scaling Laws for Neural Language Models

- links: https://arxiv.org/pdf/2001.08361

- ideas:

- claim1: Larger models require fewer samples to reach the same performance

- Performance depends strongly on scale, weakly on model shape

Simple equations govern the dependence of overfitting on model/dataset size and the dependence of training speed on model size. These relationships allow us to determine the optimal allocation of a fixed compute budget.

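The simplest of those equations, as I remember it from the paper, is the loss as a power law in the number of non-embedding parameters $N$ (for models trained to convergence on enough data): $L(N) = (N_c / N)^{\alpha_N}$, with fitted constants around $\alpha_N \approx 0.076$ and $N_c \approx 8.8 \times 10^{13}$. A quick sketch of what that implies; the constants should be double-checked against the paper before relying on the numbers.

```python
def loss_from_params(n_params, n_c=8.8e13, alpha_n=0.076):
    """Power law L(N) = (N_c / N) ** alpha_N for the test cross-entropy loss."""
    return (n_c / n_params) ** alpha_n


for n in (1e6, 1e8, 1e10):
    print(f"{n:.0e} params -> predicted loss {loss_from_params(n):.3f}")

# A power law means every 10x in N multiplies the loss by the same factor
print(10 ** -0.076)
```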

- paper: Deep Speech 2: End-to-End Speech Recognition in English and Mandarin

- links: https://arxiv.org/pdf/1512.02595

- ideas:

- claim1:

To achieve these results, we have explored various network architectures, finding several effective techniques: enhancements to numerical optimization through SortaGrad and Batch Normalization, evaluation of RNNs with larger strides with bigram outputs for English, searching through both bidirectional and unidirectional models. This exploration was powered by a well optimized, High Performance Computing inspired training system that allows us to train new, full-scale models on our large datasets in just a few days.


This paper focuses more on the engineering aspects of the topic.
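One of the engineering tricks, SortaGrad, is as I understand it a simple curriculum: in the first epoch, minibatches are visited in increasing order of the length of the longest utterance in each minibatch, and from the second epoch on the order is random. A minimal sketch of just the ordering logic; the utterance lengths and batch size are made up.

```python
import random


def make_batches(lengths, batch_size):
    """Group utterance indices into minibatches of similar length."""
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    return [order[i:i + batch_size] for i in range(0, len(order), batch_size)]


def sortagrad_epochs(lengths, batch_size, n_epochs, seed=0):
    rng = random.Random(seed)
    batches = make_batches(lengths, batch_size)
    for epoch in range(n_epochs):
        if epoch == 0:
            # Curriculum: increasing length of the longest utterance in each batch
            epoch_batches = sorted(batches, key=lambda b: max(lengths[i] for i in b))
        else:
            epoch_batches = batches[:]
            rng.shuffle(epoch_batches)           # back to a random order
        yield epoch, epoch_batches


lengths = [12, 3, 40, 7, 25, 5, 33, 18]           # e.g. utterance durations (made up)
for epoch, batches in sortagrad_epochs(lengths, batch_size=2, n_epochs=2):
    print(epoch, [[lengths[i] for i in b] for b in batches])
```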

- paper: A Tutorial Introduction to the Minimum Description Length Principle

- links: https://arxiv.org/pdf/math/0406077

- ideas:

- claim1: we can therefore say that the more we are able to compress the data, the more we have learned about the data.

- claim2: The Fundamental Idea: Learning as Data Compression

- claim3:

To formalize our ideas, we need to decide on a description method, that is, a formal language in which to express properties of the data. The most general choice is a general-purpose computer language such as C or Pascal. This choice leads to the definition of the Kolmogorov Complexity [Li and Vitányi 1997] of a sequence as the length of the shortest program that prints the sequence and then halts. The lower the Kolmogorov complexity of a sequence, the more regular it is.

However, it turns out that for every two general-purpose programming languages A and B and every data sequence D, the length of the shortest program for D written in language A and the length of the shortest program for D written in language B differ by no more than a constant c, which does not depend on the length of D. This so-called invariance theorem says that, as long as the sequence D is long enough, it is not essential which computer language one chooses, as long as it is general-purpose.

MDL: The Basic Idea.

The goal of statistical inference may be cast as trying to find regularity in the data. ‘Regularity’ may be identified with ‘*ability to compress*’. MDL combines these two insights by viewing learning as data compression: it tells us that, for a given set of hypotheses H and data set D, we should try to find the hypothesis or combination of hypotheses in H that compresses D most.

… (not finished yet.)

This book delves into the fundamental building blocks of current deep learning systems, but it requires a solid background in information theory to fully grasp the underlying concepts.
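A tiny worked example that made "learning as compression" concrete for me, under my own simplified setup: a binary string of length $n$ with $k$ ones. Hypothesis H0 ("no structure") sends the raw string in $n$ bits. Hypothesis H1 ("biased coin") uses a two-part code: first $k$, in $\log_2(n+1)$ bits, then which of the $\binom{n}{k}$ strings with $k$ ones it is, in $\log_2\binom{n}{k}$ bits. MDL picks whichever total is shorter.

```python
import math


def raw_bits(n):
    # H0: no model, one bit per symbol
    return float(n)


def two_part_bits(n, k):
    # H1: L(H) = bits to send k, L(D | H) = bits to index the string among C(n, k)
    return math.log2(n + 1) + math.log2(math.comb(n, k))


def mdl_choice(bits_string):
    n, k = len(bits_string), bits_string.count("1")
    h0, h1 = raw_bits(n), two_part_bits(n, k)
    return ("H1 (biased coin)" if h1 < h0 else "H0 (raw)"), round(h0, 1), round(h1, 1)


biased = "0" * 95 + "1" * 5            # few ones: the count model compresses it
balanced = "01" * 50                   # 50 ones: the count model does not help
print(mdl_choice(biased))
print(mdl_choice(balanced))
```

The balanced string is actually periodic, so a richer hypothesis class (say, "repeat a short pattern") would compress it well; MDL's verdict always depends on the set H we choose.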

- paper: Pointer Networks

- links: https://arxiv.org/pdf/1506.03134

- ideas:

- claim1: Our model solves the problem of variable size output dictionaries using a recently proposed mechanism of neural attention. It differs from the previous attention attempts in that, instead of using attention to blend hidden units of an encoder to a context vector at each decoder step, it uses attention as a pointer to select a member of the input sequence as the output

- paper: Deep Residual Learning for Image Recognition

- links: https://arxiv.org/pdf/1512.03385

- ideas:

- claim1: Deep networks naturally integrate low/mid/highlevel features

- claim2: When deeper networks are able to start converging, a degradation problem has been exposed: with the network depth increasing, accuracy gets saturated (which might be unsurprising) and then degrades rapidly. Unexpectedly, such degradation is not caused by overfitting, and adding more layers to a suitably deep model leads to higher training error. *What pr
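The degradation problem is puzzling because a deeper model could, in principle, copy a shallower one by making its extra layers identity mappings. The small NumPy sketch below shows why residual blocks make that easy: with every weight at zero, a stack of blocks of the form relu(F(x) + x) passes its input through unchanged, while a plain stack wipes it out. The block shapes are hypothetical simplifications, with no batch norm or convolutions.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def plain_block(x, W1, W2):
    """Two stacked layers that must fit the desired mapping H(x) directly."""
    return relu(W2 @ relu(W1 @ x))


def residual_block(x, W1, W2):
    """Same layers, but they fit the residual F(x) = H(x) - x; output relu(F(x) + x)."""
    return relu(W2 @ relu(W1 @ x) + x)


rng = np.random.default_rng(0)
dim = 4
x = relu(rng.normal(size=dim))  # non-negative, like the output of an earlier ReLU
Z = np.zeros((dim, dim))

# Stack 50 blocks whose weights are all zero.
h_res, h_plain = x.copy(), x.copy()
for _ in range(50):
    h_res = residual_block(h_res, Z, Z)
    h_plain = plain_block(h_plain, Z, Z)

print("residual stack:", h_res)   # unchanged: the extra layers act as the identity
print("plain stack:   ", h_plain)  # all zeros: the signal is gone
```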

- paper: The Shattered Gradients Problem: If resnets are the answer, then what is the question?

- links: https://arxiv.org/pdf/1702.08591

- ideas:

- claim1: If resnets are the solution, then what is the problem?

- claim2: a previously unnoticed difficulty with gradients in deep rectifier networks that is orthogonal to vanishing and exploding gradients. The shattered gradients problem is that, as depth increases, gradients in standard feedforward networks increasingly resemble white noise.

- claim3: The shattered gradients problem is that the spatial structure of gradients is progressively obliterated as neural nets deepen.

- claim4: Introducing skip-connections allows much deeper networks to be trained (Srivastava et al., 2015; He et al., 2016b;a; Greff et al., 2017). Skip-connections significantly change the correlation structure of gradients.

- claim5: Batch normalization was introduced to reduce covariate shift (Ioffe & Szegedy, 2015). However, it has other effects that are less well-known – and directly impact the correlation structure of gradients. Maybe to really understand ResNets and shattered gradients, I need to code something up.
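Here is a first attempt at "coding something". It is a sketch under my own assumptions, not the paper's exact experiment:

- a random, untrained 1-D to 1-D ReLU network of width 200;
- no batch norm;
- the gradient dy/dx computed in forward mode on a grid of 256 inputs;
- the lag-1 correlation between neighbouring gradients as a simple smoothness measure.

Values near 0 mean the gradient looks like white noise. The paper's claims predict that the plain network loses this correlation with depth much faster than the residual one. The script prints the numbers so the claim can be checked rather than assumed.

```python
import numpy as np


def input_gradients(xs, depth, width=200, residual=False, seed=0):
    """dy/dx of a randomly initialized 1-D -> 1-D ReLU net, at every point of xs.

    Forward-mode differentiation: next to each hidden activation h we carry
    dh/dx; through a ReLU layer it becomes (W @ dh) masked by (pre > 0).
    Untrained He-initialized weights, no batch norm.
    """
    rng = np.random.default_rng(seed)
    w_in = rng.normal(size=(width, 1))
    b_in = rng.normal(size=(width, 1))          # bias spreads the ReLU kinks along x
    pre = w_in @ xs[None, :] + b_in             # (width, n_points)
    h = np.maximum(pre, 0.0)
    dh = w_in * (pre > 0)                       # dh/dx, shape (width, n_points)
    for _ in range(depth):
        W = rng.normal(scale=np.sqrt(2.0 / width), size=(width, width))
        pre = W @ h
        branch, dbranch = np.maximum(pre, 0.0), (W @ dh) * (pre > 0)
        if residual:
            h, dh = h + branch, dh + dbranch    # skip connection: x + F(x)
        else:
            h, dh = branch, dbranch
    v = rng.normal(size=width)                  # linear read-out y = v . h
    return v @ dh                               # shape (n_points,)


def lag1_correlation(g):
    """Correlation between gradients at neighbouring inputs; near 0 looks like white noise."""
    a = g[:-1] - g[:-1].mean()
    b = g[1:] - g[1:].mean()
    return float(a @ b / np.sqrt((a @ a) * (b @ b)))


xs = np.linspace(-2.0, 2.0, 256)
results = {}
for depth in (2, 10, 50):
    results[depth] = (
        lag1_correlation(input_gradients(xs, depth)),
        lag1_correlation(input_gradients(xs, depth, residual=True)),
    )
    print(f"depth {depth:>2}: plain {results[depth][0]:+.3f}  residual {results[depth][1]:+.3f}")
```

Both variants draw the same weights because they share the seed, so the only difference is the skip connection. Without batch norm, the residual activations grow with depth. The correlation measure is scale-invariant, so that growth does not affect the comparison.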

OpenAI's new paper on using top-k sparse autoencoders to explain neural networks.


- paper: Identity Mappings in Deep Residual Networks

- links: https://arxiv.org/pdf/1603.05027

- ideas:

- claim1: This paper investigates the propagation formulations behind the connection mechanisms of deep residual networks. Our derivations imply that identity shortcut connections and identity after-addition activation are essential for making information propagation smooth.
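The core of this derivation can be written out, as I understand the paper. With an identity shortcut and an identity after-addition activation, each residual unit is x_{l+1} = x_l + F(x_l, W_l). Unrolling this recursion from any shallower unit l to any deeper unit L, and then applying the chain rule with loss E, gives:

```latex
x_{l+1} = x_l + \mathcal{F}(x_l, \mathcal{W}_l)
\;\Longrightarrow\;
x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)

\frac{\partial \mathcal{E}}{\partial x_l}
= \frac{\partial \mathcal{E}}{\partial x_L}\,\frac{\partial x_L}{\partial x_l}
= \frac{\partial \mathcal{E}}{\partial x_L}
  \left(1 + \frac{\partial}{\partial x_l} \sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)\right)
```

The "1" term carries the gradient from any deeper unit straight back to x_l without passing through any weight layer. The paper's argument is that the sum term is unlikely to be exactly -1 for every sample in a mini-batch, so the gradient is unlikely to be cancelled out. This is the sense in which propagation stays "smooth".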

- paper: Quantifying the Rise and Fall of Complexity in Closed Systems: The Coffee Automaton

- links: https://arxiv.org/pdf/1405.6903

- ideas:

- claim1: Just as we can reason about the disorder of the coffee cup system, we can also consider its “complexity.” Informally, by complexity we mean the amount of information needed to describe everything “interesting” about the system.
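To make "information needed to describe everything interesting" concrete, here is a toy version. As far as I understand, the paper estimates this "apparent complexity" by compressing a coarse-grained picture of the state, and it estimates entropy by compressing the fine-grained one. The sketch below is a simplification under my own assumptions:

- random adjacent swaps on a 32×32 grid, with cream above coffee;
- 4×4 block averaging, quantized to 3 levels;
- zlib as the compressor.

Whether the coarse-grained size visibly rises and then falls depends on these choices.

```python
import zlib

import numpy as np


def compressed_size(arr):
    """zlib size in bytes; a computable stand-in for 'information needed to describe'."""
    return len(zlib.compress(np.ascontiguousarray(arr, dtype=np.uint8).tobytes(), 9))


def coarse_grain(grid, block=4, levels=3):
    """Average over block x block cells, then quantize to a few levels."""
    n = grid.shape[0] // block
    cells = grid[: n * block, : n * block].reshape(n, block, n, block)
    means = cells.mean(axis=(1, 3))
    return np.clip(np.floor(means * levels), 0, levels - 1).astype(np.uint8)


def simulate(size=32, steps=100_000, every=20_000, seed=0):
    """Random adjacent swaps; returns (step, fine-grained size, coarse-grained size)."""
    rng = np.random.default_rng(seed)
    grid = np.zeros((size, size), dtype=np.uint8)
    grid[: size // 2, :] = 1                   # cream (1) on top of coffee (0)
    axis = rng.integers(0, 2, size=steps)      # 0: horizontal swap, 1: vertical swap
    a = rng.integers(0, size, size=steps)
    b = rng.integers(0, size - 1, size=steps)
    history = []
    for t in range(steps):
        if t % every == 0:
            history.append((t, compressed_size(grid), compressed_size(coarse_grain(grid))))
        if axis[t] == 0:
            i, j, i2, j2 = a[t], b[t], a[t], b[t] + 1
        else:
            i, j, i2, j2 = b[t], a[t], b[t] + 1, a[t]
        grid[i, j], grid[i2, j2] = grid[i2, j2], grid[i, j]  # swap two neighbours
    history.append((steps, compressed_size(grid), compressed_size(coarse_grain(grid))))
    return history


for step, fine, coarse in simulate():
    print(f"step {step:>6}: fine-grained {fine:>4} B, coarse-grained {coarse:>3} B")
```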

I didn’t see how this paper relates to deep learning, so I asked GPT-4. Here is its answer, which may help:

The paper “Quantifying the Rise and Fall of Complexity in Closed Systems: The Coffee Automaton” relates to deep learning in several ways:

- **Optimization and Convergence**: The process of complexity rising and falling in a closed system is similar to the optimization and convergence of neural networks during training. As a model learns, its complexity increases, reaches a peak, and then stabilizes as it approaches an optimal solution.

- **Dynamic Systems**: Both the Coffee Automaton and deep learning models are dynamic systems that evolve over time. Understanding how complexity changes in these systems can provide insights into the behavior and stability of neural networks.

- **Pattern Recognition**: The study of how patterns emerge and disappear in the automaton parallels how deep learning models recognize and simplify patterns in data. This understanding can help improve model design and efficiency.

- **Entropy and Information Theory**: The concepts of entropy and information theory used to quantify complexity in the paper are also fundamental to understanding the information processing capabilities of deep learning models.

These parallels highlight the broader applicability of principles from the study of physical systems to the field of deep learning, providing valuable insights into the dynamics and optimization of neural networks.

I spent some time learning how to read papers, so I am upgrading my method from now on.

Core principles:

- no quoting from the text.

- always ask questions.

- for every paper, use at least one or two sentences to summarize its idea.

- don't skip any math formulas.

- paper: Variational Lossy Autoencoder

- links: https://arxiv.org/pdf/1611.02731

Here I try to structure my reading process.

- first-pass:

Q: what is the paper about? A: This paper proposes combining a VAE (Variational Autoencoder) with neural autoregressive models. By designing the architecture, one can control what the global latent code learns (for example, a lossy code that discards local texture). Using autoregressive models for both the prior and the decoder also improves density estimation.

Q: how does it improve on other work? A: This is hard for me to answer, because I don't yet understand many of the terms. But there is an excellent quote in the paper that answers the question:

However, earlier attempts at combining these two kinds of models have run into the problem that the autoregressive part of the model ends up explaining all structure in the data, while the latent variables are not used.

Q: what is the main method in this work? A:

- goal: given data, the model learns features automatically, without human interaction

- method:

- VAE + autoregressive model (but why?)

Are density estimation and representation learning different tasks?

**My question: where does maximum likelihood come from?**
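A partial answer to my own question uses two standard textbook identities; they are not quoted from this paper. The first shows that maximizing the average log-likelihood is the same as minimizing the KL divergence from the data distribution to the model. This holds because E[log p_data] does not depend on θ. The second is the bound a VAE maximizes in place of the intractable log-likelihood:

```latex
\arg\max_\theta \; \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log p_\theta(x)\right]
= \arg\min_\theta \; D_{\mathrm{KL}}\!\left(p_{\text{data}} \,\|\, p_\theta\right)

\log p_\theta(x) \;\ge\;
\mathbb{E}_{q_\phi(z \mid x)}\!\left[\log p_\theta(x \mid z)\right]
- D_{\mathrm{KL}}\!\left(q_\phi(z \mid x) \,\|\, p(z)\right)
```

This also suggests how to read the quote above. If the autoregressive decoder p(x|z) is powerful enough to model x on its own, the bound can be maximized with q(z|x) = p(z). The KL term then drops to zero and the latent variables go unused.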

- paper: A simple neural network module for relational reasoning

- links: https://arxiv.org/pdf/1706.01427

- ideas:

- first-pass:

Q: what is the paper about? A: The paper proposes the “Relation Network” (RN), a module that can be plugged into other networks to improve their relational reasoning ability.
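The module is usually written as RN(O) = f_φ(Σ_{i,j} g_θ(o_i, o_j)): a shared network g scores every ordered pair of objects, the results are summed, and a second network f maps the sum to an answer. The minimal NumPy sketch below follows that formula. The MLP sizes and random weights are hypothetical. I also left out the question embedding that the paper feeds into g.

```python
import numpy as np


def init_mlp(rng, sizes):
    """Random He-style weights for a tiny MLP with the given layer sizes."""
    return [(rng.normal(scale=np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def mlp(params, x):
    """Tiny MLP: ReLU after every layer except the last."""
    for k, (W, b) in enumerate(params):
        x = x @ W + b
        if k < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


def relation_network(objects, g_params, f_params):
    """RN(O) = f_phi( sum over all ordered pairs (i, j) of g_theta(o_i, o_j) )."""
    n = objects.shape[0]
    left = np.repeat(objects, n, axis=0)            # o_i, each repeated n times
    right = np.tile(objects, (n, 1))                # o_j, cycled n times
    pairs = np.concatenate([left, right], axis=1)   # (n * n, 2 * d)
    relations = mlp(g_params, pairs).sum(axis=0)    # order-invariant aggregation
    return mlp(f_params, relations)


rng = np.random.default_rng(0)
d, n_objects = 6, 5
g_params = init_mlp(rng, [2 * d, 32, 32])
f_params = init_mlp(rng, [32, 32, 3])               # e.g. 3 answer logits
objects = rng.normal(size=(n_objects, d))
out = relation_network(objects, g_params, f_params)
print(out)
```

Because of the sum over all pairs, reordering the objects does not change the output. This is one reason the module can be plugged in after any encoder that produces a set of object vectors.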

- first-pass:

This paper is more of an educational paper, so there is no first pass.

- paper: The Dawning of a New Era in Applied Mathematics

- links: https://www.ams.org/journals/notices/202104/rnoti-p565.pdf

- ideas:

- In the Keplerian paradigm, or the data-driven approach, one extracts scientific discoveries through the analysis of data. The classical example is Kepler’s laws of planetary motion. Bioinformatics provides a compelling illustration of the success of the Keplerian paradigm in modern times.

- In the Newtonian paradigm, or the first-principle-based approach, the objective is to discover the fundamental principles that govern the world around us or the things we are interested in.

- The data-driven approach has become a very powerful tool with the advance of statistical methods and machine learning. It is very effective for finding the facts, but less effective for helping us to find the reasons behind the facts.

The first-principle-based approach aims at understanding at the most fundamental level. Physics, in particular, is driven by the pursuit of such first principles. A turning point was in 1929 with the establishment of quantum mechanics: as was declared by Dirac [2], with quantum …

This is the dilemma we often face in the first-principle-based approach: it is fundamental but not very practical.

- paper: Language Modeling Is Compression

- links: https://arxiv.org/pdf/2309.10668v2

- ideas:

- first-pass:

Q: what is the paper about? A: The prediction-compression equivalence allows us to use any compressor (like gzip) to build a conditional generative model.

Q: what is the hypothesis? A: Arithmetic coding transforms a sequence model into a compressor, and, conversely, a compressor can be transformed into a predictor using its coding lengths to construct probability distributions following Shannon’s entropy principle.

Question: It has long been established that predictive models can be transformed into lossless compressors and vice versa. Why?

A:

This paper is worth digging into.
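A small sketch toward the "Why?" question above. By Shannon's source coding theorem, a symbol the model assigns probability p ideally costs -log2 p bits. Arithmetic coding gets within about 2 bits of the total Σ -log2 p(x_t | x_<t) for a whole sequence. Going the other way, code lengths ℓ define a distribution p ∝ 2^-ℓ.

The code below shows both directions. The predictor is a deliberately weak adaptive order-0 model with add-one counts. The compressor-to-predictor direction uses zlib's byte lengths. Both choices are mine for illustration; neither is the paper's setup.

```python
import math
import zlib
from collections import Counter


def adaptive_code_length(text: str, alphabet: str) -> float:
    """Predictor -> compressor: ideal arithmetic-coding length, in bits.

    The predictor is an adaptive order-0 model with add-one (Laplace) counts;
    every character of `text` must be in `alphabet`.
    """
    counts = Counter({ch: 1 for ch in alphabet})
    total = len(alphabet)
    bits = 0.0
    for ch in text:
        bits += -math.log2(counts[ch] / total)  # cost of ch under p(x_t | x_<t)
        counts[ch] += 1
        total += 1
    return bits


def compressor_as_predictor(context: str, alphabet: str) -> dict:
    """Compressor -> predictor: p(s | context) proportional to 2^-len_bits(C(context + s))."""
    lengths = {s: 8 * len(zlib.compress((context + s).encode(), 9)) for s in alphabet}
    shortest = min(lengths.values())
    weights = {s: 2.0 ** -(l - shortest) for s, l in lengths.items()}  # shift avoids underflow
    z = sum(weights.values())
    return {s: w / z for s, w in weights.items()}


alphabet = "ab"
text = "ab" * 50
bits = adaptive_code_length(text, alphabet)
print(f"{bits:.1f} bits with the predictor vs {8 * len(text)} bits as raw bytes")

probs = compressor_as_predictor("ab" * 10, alphabet)
print(probs)  # zlib's byte-level lengths are coarse, so ties (0.5/0.5) are possible
```

The order-0 model ignores the obvious "abab" structure; it only saves bits by knowing the alphabet has two symbols. A better predictor (for example, one conditioned on the previous character) would give a shorter code. This is the sense in which better language models are better compressors.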

- paper: Large Language Model Evaluation via Matrix Entropy

- links: https://arxiv.org/pdf/2401.17139

- ideas:

- first-pass:

Q: what is the paper about? A: This paper introduces matrix entropy. The underlying view is that a model compresses its data, and this compression process enables it to learn and understand the shared structure of the data.

core idea: We introduce matrix entropy, a new intrinsic metric that reflects the extent to which a language model “compresses” the common knowledge in the data.

I'll probably try it out someday.
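A first try at "trying it out". Matrix entropy is a von Neumann-style entropy of the covariance of a model's representations. The exact preprocessing below is my assumption and may differ from the paper:

- unit-normalize each embedding;
- form Σ = ZᵀZ / N, which has trace 1;
- take −Σ λ log λ over Σ's eigenvalues;
- divide by log d so the result lies in [0, 1].

Lower values mean the representations concentrate in fewer directions. That is how I read "more compressed".

```python
import numpy as np


def matrix_entropy(Z: np.ndarray) -> float:
    """Normalized entropy of the eigenvalue spectrum of the embedding covariance.

    ASSUMPTION: unit-normalized rows, Sigma = Z^T Z / N, entropy divided by log(d).
    """
    Z = Z / np.linalg.norm(Z, axis=1, keepdims=True)  # each row has unit norm
    sigma = Z.T @ Z / Z.shape[0]                        # (d, d), trace == 1
    eig = np.linalg.eigvalsh(sigma)                     # symmetric -> real eigenvalues
    eig = eig[eig > 1e-12]                              # drop zeros (0 * log 0 = 0)
    return float(-(eig * np.log(eig)).sum() / np.log(Z.shape[1]))


rng = np.random.default_rng(0)
isotropic = rng.normal(size=(5000, 16))                    # uses all 16 directions evenly
collapsed = np.tile(rng.normal(size=(1, 16)), (5000, 1))   # every row is the same vector
print(matrix_entropy(isotropic))
print(matrix_entropy(collapsed))
```

With a real LLM, Z would be the hidden states for a set of inputs. Comparing the score across model sizes is what I would try first.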


- paper: The Platonic Representation Hypothesis

- links: https://arxiv.org/pdf/2405.07987

- ideas:

- first-pass: Q: what is the paper about? A: representations in AI models, particularly deep networks, are converging. First, we survey many examples of convergence in the literature: over time and across multiple domains, the ways by which different neural networks represent data are becoming more aligned. Next, we demonstrate convergence across data modalities: as vision models and language models get larger, they measure distance between datapoints in a more and more alike way. We hypothesize that this convergence is driving toward a shared statistical model of reality, akin to Plato’s concept of an ideal reality.

The Platonic Representation Hypothesis: Neural networks, trained with different objectives on different data and modalities, are converging to a shared statistical model of reality in their representation spaces.

Models are increasingly aligning to brains.
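How can two models "measure distance between datapoints in a more and more alike way"? As I understand it, the paper compares models with a mutual nearest-neighbour score. The same items are embedded by both models, and the score is the average overlap of each item's k nearest neighbours, divided by k. The version below is my simplified sketch. It uses cosine similarity and k = 5, both of which are assumptions.

```python
import numpy as np


def knn_sets(X, k):
    """Indices of each row's k nearest neighbours by cosine similarity, excluding itself."""
    X = X / np.linalg.norm(X, axis=1, keepdims=True)
    sim = X @ X.T
    np.fill_diagonal(sim, -np.inf)        # never count an item as its own neighbour
    return np.argsort(-sim, axis=1)[:, :k]


def mutual_knn_alignment(A, B, k=5):
    """Mean fraction of shared k-NN between two representations of the same items."""
    na, nb = knn_sets(A, k), knn_sets(B, k)
    overlaps = [len(set(a) & set(b)) / k for a, b in zip(na, nb)]
    return float(np.mean(overlaps))


rng = np.random.default_rng(0)
A = rng.normal(size=(100, 32))                   # "model 1" embeddings of 100 items
Q, _ = np.linalg.qr(rng.normal(size=(32, 32)))   # random orthogonal matrix
rotated = A @ Q                                  # same geometry, different coordinates
unrelated = rng.normal(size=(100, 32))           # no shared structure with A
print(mutual_knn_alignment(A, rotated))
print(mutual_knn_alignment(A, unrelated))
```

The score only looks at neighbourhoods, so two models can be aligned even when their coordinates look nothing alike. That is the point of the rotated example. It is also why the score can compare vision and language models whose embedding spaces differ.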

- paper: Superlinear Returns

- links: https://paulgraham.com/superlinear.html

- takeaway:

- If your product is only half as good as your competitor’s, you don’t get half as many customers. You get no customers, and you go out of business.

- the companies with high growth rates tend to become immensely valuable, while the ones with lower growth rates may not even survive.

- Y Combinator encourages founders to focus on growth rate rather than absolute numbers.

- The most common case of exponential growth in preindustrial times was probably scholarship. The more you know, the easier it is to learn new things.

- Knowledge grows exponentially, but there are also thresholds in it. Learning to ride a bicycle, for example. Some of these thresholds are akin to machine tools.

- There are two ways work can compound. It can compound directly, in the sense that doing well in one cycle causes you to do better in the next. That happens for example when you’re building infrastructure, or growing an audience or brand. Or work can compound by teaching you, since learning compounds. This second case is an interesting one because you may feel you’re doing badly as it’s happening.

- This is one reason Silicon Valley is so tolerant of failure.

People in Silicon Valley aren't blindly tolerant of failure. They'll only continue to bet on you if you're learning from your failures. But if you are, you are in fact a good bet: maybe your company didn’t grow the way you wanted, but you yourself have, and that should yield results eventually.

- Which yields another heuristic: always be learning. If you’re not learning, you’re probably not on a path that leads to superlinear returns.

But don't overoptimize what you're learning. Don't limit yourself to learning things that are already known to be valuable. You're learning; you don't know for sure yet what's going to be valuable, and if you're too strict you'll lop off the outliers.

- A principle for taking advantage of thresholds has to include a test to ensure the game is worth playing. Here’s one that does: if you come across something that’s mediocre yet still popular, it could be a good idea to replace it. For example, if a company makes a product that people dislike yet still buy, then presumably they’d buy a better alternative if you made one.

- So one heuristic here is to be driven by curiosity rather than careerism — to give free rein to your curiosity instead of working on what you’re supposed to.

PG’s essays are really good. It’s worth sorting all his essays into categories.

- paper: How to Do Great Work

- links: https://paulgraham.com/greatwork.html

- takeaway:

Every paragraph seems like gold.

- The first step is to decide what to work on. The work you choose needs to have three qualities: it has to be something you have a natural aptitude for, that you have a deep interest in, and that offers scope to do great work.

- The way to figure out what to work on is by working. If you’re not sure what to work on, guess. But pick something and get going. You’ll probably guess wrong some of the time, but that’s fine. It’s good to know about multiple things; some of the biggest discoveries come from noticing connections between different fields.

- Develop a habit of working on your own projects. Don’t let “work” mean something other people tell you to do. If you do manage to do great work one day, it will probably be on a project of your own. It may be within some bigger project, but you’ll be driving your part of it.

- What should your projects be? Whatever seems to you excitingly ambitious. As you grow older and your taste in projects evolves, exciting and important will converge. At 7 it may seem excitingly ambitious to build huge things out of Lego, then at 14 to teach yourself calculus, till at 21 you’re starting to explore unanswered questions in physics. But always preserve excitingness.

- Once you’ve found something you’re excessively interested in, the next step is to learn enough about it to get you to one of the frontiers of knowledge. Knowledge expands fractally, and from a distance its edges look smooth, but once you learn enough to get close to one, they turn out to be full of gaps.

- Four steps: choose a field, learn enough to get to the frontier, notice gaps, explore promising ones. This is how practically everyone who’s done great work has done it, from painters to physicists.

- The three most powerful motives are curiosity, delight, and the desire to do something impressive. Sometimes they converge, and that combination is the most powerful of all. The big prize is to discover a new fractal bud. You notice a crack in the surface of knowledge, pry it open, and there’s a whole world inside.

- The nature of ambition exacerbates this problem. Ambition comes in two forms, one that precedes interest in the subject and one that grows out of it. Most people who do great work have a mix, and the more you have of the former, the harder it will be to decide what to do.

- The main reason it’s hard is that you can’t tell what most kinds of work are like except by doing them. Which means the four steps overlap: you may have to work at something for years before you know how much you like it or how good you are at it. And in the meantime you’re not doing, and thus not learning about, most other kinds of work. So in the worst case you choose late based on very incomplete information.


What should you do if you're young and ambitious but don't know what to work on? What you should not do is drift along passively, assuming the problem will solve itself. You need to take action. But there is no systematic procedure you can follow. When you read biographies of people who've done great work, it's remarkable how much luck is involved. They discover what to work on as a result of a chance meeting, or by reading a book they happen to pick up. So you need to make yourself a big target for luck, and the way to do that is to be curious. Try lots of things, meet lots of people, read lots of books, ask lots of questions.

Don't worry if you find you're interested in different things than other people. The stranger your tastes in interestingness, the better. Strange tastes are often strong ones, and a strong taste for work means you'll be productive. And you're more likely to find new things if you're looking where few have looked before.

If you're making something for people, make sure it's something they actually want. The best way to do this is to make something you yourself want. Write the story you want to read; build the tool you want to use. Since your friends probably have similar interests, this will also get you your initial audience.

This should follow from the excitingness rule. Obviously the most exciting story to write will be the one you want to read. The reason I mention this case explicitly is that so many people get it wrong. Instead of making what they want, they try to make what some imaginary, more sophisticated audience wants. And once you go down that route, you’re lost.

There are a lot of forces that will lead you astray when you're trying to figure out what to work on. Pretentiousness, fashion, fear, money, politics, other people's wishes, eminent frauds. But if you stick to what you find genuinely interesting, you'll be proof against all of them. If you're interested, you're not astray.

In most cases the recipe for doing great work is simply: work hard on excitingly ambitious projects, and something good will come of it. Instead of making a plan and then executing it, you just try to preserve certain invariants.

I think for most people who want to do great work, the right strategy is not to plan too much. At each stage do whatever seems most interesting and gives you the best options for the future. I call this approach "staying upwind." This is how most people who've done great work seem to have done it.

This is one case where the young have an advantage. They’re more optimistic, and even though one of the sources of their optimism is ignorance, in this case ignorance can sometimes beat knowledge.

Since there are two senses of starting work — per day and per project — there are also two forms of procrastination. Per-project procrastination is far the more dangerous. You put off starting that ambitious project from year to year because the time isn’t quite right. When you’re procrastinating in units of years, you can get a lot not done.

The way to beat it is to stop occasionally and ask yourself: Am I working on what I most want to work on? When you're young it's ok if the answer is sometimes no, but this gets increasingly dangerous as you get older.

(Note: Don’t lie to yourself.)

There may be some jobs where you have to work diligently for years at things you hate before you get to the good part, but this is not how great work happens. Great work happens by focusing consistently on something you’re genuinely interested in. When you pause to take stock, you’re surprised how far you’ve come.

The reason we’re surprised is that we underestimate the cumulative effect of work. Writing a page a day doesn’t sound like much, but if you do it every day you’ll write a book a year. That’s the key: consistency. People who do great things don’t get a lot done every day. They get something done, rather than nothing.

(Note: To really accumulate something, you have to do it consciously.)

If you do work that compounds, you’ll get exponential growth. Most people who do this do it unconsciously, but it’s worth stopping to think about. Learning, for example, is an instance of this phenomenon: the more you learn about something, the easier it is to learn more. Growing an audience is another: the more fans you have, the more new fans they’ll bring you.

Don’t try to work in a distinctive style. Just try to do the best job you can; you won’t be able to help doing it in a distinctive way.

Style is doing things in a distinctive way without trying to. Trying to is affectation.

True by itself is not enough, of course. Great ideas have to be true and new. And it takes a certain amount of ability to see new ideas even once you’ve learned enough to get to one of the frontiers of knowledge.

I’ve never liked the term “creative process.” It seems misleading. Originality isn’t a process, but a habit of mind. Original thinkers throw off new ideas about whatever they focus on, like an angle grinder throwing off sparks. They can’t help it.

To find new ideas you have to seize on signs of breakage instead of looking away. That’s what Einstein did. He was able to see the wild implications of Maxwell’s equations not so much because he was looking for new ideas as because he was stricter.

The other thing you need is a willingness to break rules. Paradoxical as it sounds, if you want to fix your model of the world, it helps to be the sort of person who's comfortable breaking rules. From the point of view of the old model, which everyone including you initially shares, the new model usually breaks at least implicit rules.

There are two ways to be comfortable breaking rules: to enjoy breaking them, and to be indifferent to them. I call these two cases being aggressively and passively independent-minded.

One way to discover broken models is to be stricter than other people. Broken models of the world leave a trail of clues where they bash against reality. Most people don’t want to see these clues. It would be an understatement to say that they’re attached to their current model; it’s what they think in; so they’ll tend to ignore the trail of clues left by its breakage, however conspicuous it may seem in retrospect.

The other way to break rules is not to care about them, or perhaps even to know they exist. This is why novices and outsiders often make new discoveries; their ignorance of a field’s assumptions acts as a source of temporary passive independent-mindedness. Aspies also seem to have a kind of immunity to conventional beliefs. Several I know say that this helps them to have new ideas.

Use the advantages of youth when you have them, and the advantages of age once you have those. The advantages of youth are energy, time, optimism, and freedom. The advantages of age are knowledge, efficiency, money, and power. With effort you can acquire some of the latter when young and keep some of the former when old.

- paper: The Best Essay

- links: https://paulgraham.com/best.html

- takeaway:

- How do you get this initial question? It probably won’t work to choose some important-sounding topic at random and go at it.

Professional traders won't even trade unless they have what they call an edge — a convincing story about why in some class of trades they'll win more than they lose. Similarly, you shouldn't attack a topic unless you have a way in — some new insight about it or way of approaching it.

- Perhaps beginning writers are alarmed at the thought of starting with something mistaken or incomplete, but you shouldn’t be, because this is why essay writing works. Forcing yourself to commit to some specific string of words gives you a starting point, and if it’s wrong, you’ll see that when you reread it. At least half of essay writing is rereading what you’ve written and asking is this correct and complete? You have to be very strict when rereading, not just because you want to keep yourself honest, but because a gap between your response and the truth is often a sign of new ideas to be discovered.

Ideally the response to a question is two things: the first step in a process that converges on the truth, and a source of additional questions (in my very general sense of the word). So the process continues recursively, as response spurs response. [4]

- It would be a mistake to let this make you too conservative though, because you can’t predict where a question will lead. Not if you’re doing things right, because doing things right means making discoveries, and by definition you can’t predict those. So the way to respond to this situation is not to be cautious about which initial question you choose, but to write a lot of essays. Essays are for taking risks.

Almost any question can get you a good essay. Indeed, it took some effort to think of a sufficiently unpromising topic in the third paragraph, because any essayist’s first impulse on hearing that the best essay couldn’t be about x would be to try to write it. But if most questions yield good essays, only some yield great ones.

- This essay is an example. Writing about the best essay implies there is such a thing, which pseudo-intellectuals will dismiss as reductive, though it follows necessarily from the possibility of one essay being better than another. And thinking about how to do something so ambitious is close enough to doing it that it holds your attention.

- I like to start an essay with a gleam in my eye. This could be just a taste of mine, but there’s one aspect of it that probably isn’t: to write a really good essay on some topic, you have to be interested in it. A good writer can write well about anything, but to stretch for the novel insights that are the raison d’etre of the essay, you have to care.

- What other qualities would a great initial question have? It’s probably good if it has implications in a lot of different areas. And I find it’s a good sign if it’s one that people think has already been thoroughly explored.

But the truth is that I've barely thought about how to choose initial questions, because I rarely do it. I rarely choose what to write about; I just start thinking about something, and sometimes it turns into an essay.

- Perhaps the answer is to go one step earlier: to write about whatever pops into your head, but try to ensure that what pops into your head is good. Indeed, now that I think about it, this has to be the answer, because a mere list of topics wouldn’t be any use if you didn’t have edge with any of them. To start writing an essay, you need a topic plus some initial insight about it, and you can’t generate those systematically. If only. [9]

- You can probably cause yourself to have more of them, though. The quality of the ideas that come out of your head depends on what goes in, and you can improve that in two dimensions, breadth and depth.

- You can’t learn everything, so getting breadth implies learning about topics that are very different from one another. When I tell people about my book-buying trips to Hay and they ask what I buy books about, I usually feel a bit sheepish answering, because the topics seem like a laundry list of unrelated subjects. But perhaps that’s actually optimal in this business.

- You can also get ideas by talking to people, by doing and building things, and by going places and seeing things. I don’t think it’s important to talk to new people so much as the sort of people who make you have new ideas. I get more new ideas after talking for an afternoon with Robert Morris than from talking to 20 new smart people. I know because that’s what a block of office hours at Y Combinator consists of.

While breadth comes from reading and talking and seeing, depth comes from doing. The way to really learn about some domain is to have to solve problems in it. Though this could take the form of writing, I suspect that to be a good essayist you also have to do, or have done, some other kind of work. That may not be true for most other fields, but essay writing is different. You could spend half your time working on something else and be net ahead, so long as it was hard.

- That’s the ultimate source of drag on the connectedness of ideas: the discoveries you make along the way. If you discover enough starting from question A, you’ll never make it to question B. Though if you keep writing essays you’ll gradually fix this problem by burning off such discoveries. So bizarrely enough, writing lots of essays makes it as if the space of ideas were more highly connected.

There are two senses in which an essay can be timeless: to be about a matter of permanent importance, and always to have the same effect on readers. With art these two senses blend together. Art that looked beautiful to the ancient Greeks still looks beautiful to us. But with essays the two senses diverge, because essays teach, and you can’t teach people something they already know. Natural selection is certainly a matter of permanent importance, but an essay explaining it couldn’t have the same effect on us that it would have had on Darwin’s contemporaries, precisely because his ideas were so successful that everyone already knows about them.

- If you want to surprise readers not just now but in the future as well, you have to write essays that won’t stick — essays that, no matter how good they are, won’t become part of what people in the future learn before they read them.

- But although I wish I could say that writing great essays depends mostly on effort, in the limit case it’s inspiration that makes the difference. In the limit case, the questions are the harder thing to get. That pool has no bottom.

How to get more questions? That is the most important question of all.


- paper: Life is Short

- links: https://paulgraham.com/vb.html

- takeaway:

- If life is short, we should expect its shortness to take us by surprise. And that is just what tends to happen. You take things for granted, and then they’re gone.

You think you can always write that book, or climb that mountain, or whatever, and then you realize the window has closed. The saddest windows close when other people die. Their lives are short too. After my mother died, I wished I’d spent more time with her. I lived as if she’d always be there. And in her typical quiet way she encouraged that illusion. But an illusion it was. I think a lot of people make the same mistake I did. Perhaps a better solution is to look at the problem from the other end. Cultivate a habit of impatience about the things you most want to do. Don’t wait before climbing that mountain or writing that book or visiting your mother. You don’t need to be constantly reminding yourself why you shouldn’t wait. Just don’t wait.

- I can think of two more things one does when one doesn’t have much of something: try to get more of it, and savor what one has. Both make sense here.

- Relentlessly prune bullshit, don’t wait to do things that matter, and savor the time you have. That’s what you do when life is short.


- paper: Putting Ideas into Words

- links: https://paulgraham.com/words.html

- takeaway:

- Writing about something, even something you know well, usually shows you that you didn’t know it as well as you thought. Putting ideas into words is a severe test. The first words you choose are usually wrong; you have to rewrite sentences over and over to get them exactly right. And your ideas won’t just be imprecise, but incomplete too. Half the ideas that end up in an essay will be ones you thought of while you were writing it. Indeed, that’s why I write them.

- Once you publish something, the convention is that whatever you wrote was what you thought before you wrote it. These were your ideas, and now you’ve expressed them. But you know this isn’t true. You know that putting your ideas into words changed them. And not just the ideas you published. Presumably there were others that turned out to be too broken to fix, and those you discarded instead.

- It’s not just having to commit your ideas to specific words that makes writing so exacting. The real test is reading what you’ve written. You have to pretend to be a neutral reader who knows nothing of what’s in your head, only what you wrote. When he reads what you wrote, does it seem correct? Does it seem complete? If you make an effort, you can read your writing as if you were a complete stranger, and when you do the news is usually bad. It takes me many cycles before I can get an essay past the stranger. But the stranger is rational, so you always can, if you ask him what he needs.

- You can know a great deal about something without writing about it. Can you ever know so much that you wouldn’t learn more from trying to explain what you know? I don’t think so. I’ve written about at least two subjects I know well — Lisp hacking and startups — and in both cases I learned a lot from writing about them. In both cases there were things I didn’t consciously realize till I had to explain them.

- And I don’t think my experience was anomalous. A great deal of knowledge is unconscious, and experts have if anything a higher proportion of unconscious knowledge than beginners.

- I’m not saying that writing is the best way to explore all ideas. If you have ideas about architecture, presumably the best way to explore them is to build actual buildings. What I’m saying is that however much you learn from exploring ideas in other ways, you’ll still learn new things from writing about them.

- If you’re lazy, of course, writing and talking are equally useless. But if you want to push yourself to get things right, writing is the steeper hill.

- paper: How to think in writing

- links: https://www.henrikkarlsson.xyz/p/writing-to-think

- takeaway:

- The reason I’ve spent so long establishing this rather obvious point [that writing helps you refine your thinking] is that it leads to another that many people will find shocking. If writing down your ideas always makes them more precise and more complete, then no one who hasn’t written about a topic has fully formed ideas about it. And someone who never writes has no fully formed ideas about anything nontrivial.

It feels to them as if they do, especially if they’re not in the habit of critically examining their own thinking. Ideas can feel complete. It’s only when you try to put them into words that you discover they’re not. So if you never subject your ideas to that test, you’ll not only never have fully formed ideas, but also never realize it.

- Good thinking is about pushing past your current understanding and reaching the thought behind the thought.

- When I write, I get to observe the transition from this fluid mode of thinking to the rigid. As I type, I’m often in a fluid mode—writing at the speed of thought. I feel confident about what I’m saying. But as soon as I stop, the thoughts solidify, rigid on the page, and, as I read what I’ve written, I see cracks spreading through my ideas. What seemed right in my head fell to pieces on the page.

- And it is only the first step. Once you have made your thoughts definite, clear, concrete, sharp, and rigid, you also want to unfold them.

- By doing this, I try to continually focus my reading on the goal of forming a bottom-line view, rather than just “gathering information.” I think this makes my investigations more focused and directed, and the results easier to retain. I consider this approach to be =probably the single biggest difference-maker between “reading a ton about lots of things, but retaining little” and “efficiently developing a set of views on key topics and retaining the reasoning behind them.”=

- paper: C++ design patterns for low-latency applications including high-frequency trading

- links: https://arxiv.org/pdf/2309.04259

- takeaway:

Optimization in C++

- Cache Warming:

- Compile-time dispatch:

- Constexpr:

- Loop Unrolling:

- Short-circuiting:

- Signed vs Unsigned Comparisons:

- Avoid Mixing Floats and Doubles:

- Branch Prediction/Reduction:

- Slowpath Removal:

- SIMD:

- Prefetching:

- Lock-free Programming:

- Inlining:
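The list above only names the techniques. As one illustration of the constexpr idea, a minimal C++17 sketch: a lookup table that the compiler builds, so nothing is computed at startup or on the hot path. The powers-of-ten table and the `scale_price` helper are hypothetical examples, not code from the paper.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// Hypothetical example: powers of ten for scaling fixed-point prices.
// build_pow10_table() is constexpr and its result initialises a constexpr
// variable, so the loop is evaluated by the compiler; the binary only holds the table.
constexpr std::array<std::int64_t, 19> build_pow10_table() {
    std::array<std::int64_t, 19> table{};
    std::int64_t value = 1;
    for (std::size_t i = 0; i < table.size(); ++i) {
        table[i] = value;
        if (i + 1 < table.size()) value *= 10;  // stop before overflowing past 10^18
    }
    return table;
}

inline constexpr std::array<std::int64_t, 19> kPow10 = build_pow10_table();

// Checked at compile time: a wrong table fails the build instead of misbehaving at run time.
static_assert(kPow10[0] == 1 && kPow10[18] == 1000000000000000000LL);

// Usable at compile time and at run time; at run time it reduces to a table lookup and a multiply.
constexpr std::int64_t scale_price(std::int64_t raw, std::size_t decimals) {
    return raw * kPow10[decimals];
}
```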

ring buffer: lock-free programming
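A minimal sketch of a lock-free ring buffer in C++17, assuming the single-producer/single-consumer case (one thread pushes, one thread pops), which lets each index be written by only one thread. The class name `SpscRingBuffer`, the power-of-two capacity and the 64-byte cache-line size are assumptions of this sketch, not details taken from the paper.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <optional>

// SPSC ring buffer sketch. Assumptions: exactly one producer thread calls push(),
// exactly one consumer thread calls pop(), and Capacity is a power of two so the
// index wrap is a cheap bit mask. One slot stays empty to tell "full" from "empty".
template <typename T, std::size_t Capacity>
class SpscRingBuffer {
    static_assert(Capacity >= 2 && (Capacity & (Capacity - 1)) == 0,
                  "Capacity must be a power of two");

public:
    bool push(const T& item) {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t next = (head + 1) & (Capacity - 1);
        if (next == tail_.load(std::memory_order_acquire)) return false;  // full
        buffer_[head] = item;
        head_.store(next, std::memory_order_release);  // publish the slot to the consumer
        return true;
    }

    std::optional<T> pop() {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        if (tail == head_.load(std::memory_order_acquire)) return std::nullopt;  // empty
        T item = buffer_[tail];
        tail_.store((tail + 1) & (Capacity - 1), std::memory_order_release);  // free the slot
        return item;
    }

private:
    std::array<T, Capacity> buffer_{};
    // Assumed 64-byte cache lines: keeping the indices apart avoids false sharing.
    alignas(64) std::atomic<std::size_t> head_{0};  // written only by the producer
    alignas(64) std::atomic<std::size_t> tail_{0};  // written only by the consumer
};
```

Leaving one slot empty avoids a shared element counter; the cost is that `SpscRingBuffer<T, N>` holds at most N - 1 items.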

Memory request handling, by request type:

- Read: on a cache hit, return the data. On a miss, locate a cache block to use, read the data from lower memory into that block, then return the data.

- Write: on a cache hit, write the data into the cache block and then into lower memory. On a miss, write the data into lower memory only.

Beyond networking protocols and physical infrastructure, HFT firms invest in specialized hardware. Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) are common choices, as they can execute trading algorithms more efficiently than general-purpose processors.

Runtime dispatch, also known as dynamic dispatch, resolves function calls at runtime. This method is primarily associated with inheritance and virtual functions [13]. In such cases, the function that gets executed relies on the object’s type at runtime. Conversely, compile-time dispatch determines the function call during the compilation phase and is frequently used in conjunction with templates and function overloading.
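To make the contrast concrete, a small C++17 sketch: the first half uses a virtual function (runtime dispatch through the vtable), the second uses the curiously recurring template pattern (CRTP), so the call is resolved at compile time and can be inlined. The fee-model classes and their rates are hypothetical.

```cpp
#include <cstdint>

// ---- Runtime dispatch: resolved through the vtable when the program runs ----
struct FeeModel {
    virtual ~FeeModel() = default;
    virtual std::int64_t fee(std::int64_t qty) const = 0;
};
struct MakerFee : FeeModel {
    std::int64_t fee(std::int64_t qty) const override { return qty * 1; }  // hypothetical rate
};
struct TakerFee : FeeModel {
    std::int64_t fee(std::int64_t qty) const override { return qty * 3; }  // hypothetical rate
};

// Which fee() runs depends on the dynamic type behind `model`.
std::int64_t fee_runtime(const FeeModel& model, std::int64_t qty) {
    return model.fee(qty);
}

// ---- Compile-time dispatch: CRTP, resolved during compilation ----
template <typename Derived>
struct StaticFeeModel {
    std::int64_t fee(std::int64_t qty) const {
        // The derived type is known statically, so this call can be inlined.
        return static_cast<const Derived&>(*this).fee_impl(qty);
    }
};
struct StaticMakerFee : StaticFeeModel<StaticMakerFee> {
    std::int64_t fee_impl(std::int64_t qty) const { return qty * 1; }
};
struct StaticTakerFee : StaticFeeModel<StaticTakerFee> {
    std::int64_t fee_impl(std::int64_t qty) const { return qty * 3; }
};

// One instantiation per concrete model; no vtable lookup.
template <typename Model>
std::int64_t fee_static(const StaticFeeModel<Model>& model, std::int64_t qty) {
    return model.fee(qty);
}
```

The trade-off is flexibility: with CRTP the concrete type must be known where `fee_static` is instantiated, so storing mixed model types in one container brings back some form of runtime dispatch.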

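Bad design (every call runs three error checks before reaching the hot path):

```cpp
if (checkForErrorA())
    handleErrorA();
else if (checkForErrorB())
    handleErrorB();
else if (checkForErrorC())
    handleErrorC();
else
    executeHotpath();
```

Good design (errors are accumulated into one flag word, so the hot path pays for a single branch):

```cpp
uint32_t errorFlags = 0;  // one bit per error condition
// ... bits are set wherever an error is detected
if (errorFlags)
    HandleError(errorFlags);  // slow path
else
{
    // ... hot path
}
```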
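The "good design" fragment leaves out where `errorFlags` comes from. A self-contained sketch, assuming error conditions are recorded as bits by code outside the hot path (for example when a connection drops or a risk limit is hit), so the order path tests a single word. `Gateway`, the error bits and the messages are hypothetical.

```cpp
#include <cstdint>
#include <string>

// Hypothetical error conditions, one bit each.
enum ErrorBit : std::uint32_t {
    kErrDisconnected = 1u << 0,
    kErrRiskLimit    = 1u << 1,
    kErrStaleMarket  = 1u << 2,
};

struct Gateway {
    std::uint32_t error_flags = 0;  // bits are set/cleared by code outside the hot path
    int orders_sent = 0;
    std::string last_error;

    // Slow path: only reached when at least one bit is set.
    void handle_error(std::uint32_t flags) {
        if (flags & kErrDisconnected)   last_error = "disconnected";
        else if (flags & kErrRiskLimit) last_error = "risk limit";
        else                            last_error = "stale market data";
    }

    // Hot path: one test of one word instead of a chain of separate checks.
    void on_signal() {
        if (error_flags) {
            handle_error(error_flags);
            return;
        }
        ++orders_sent;  // stand-in for building and sending the order
    }
};
```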

ArrayAddition: Takes approximately 20,000 ns. ArrayAddition_SIMD: Takes approximately 12,000 ns, showing improved performance compared to regular array addition.
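A minimal sketch of what an `ArrayAddition_SIMD`-style function can look like, assuming x86 SSE intrinsics (`_mm_loadu_ps`, `_mm_add_ps`, `_mm_storeu_ps`) that add four floats per instruction; on targets without SSE the preprocessor guard falls back to the scalar loop. The function names are illustrative, and the timings above come from the paper, not from this code.

```cpp
#include <cstddef>
#if defined(__SSE__)
#include <immintrin.h>
#endif

// Scalar baseline: one float addition per iteration.
void array_add(const float* a, const float* b, float* out, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) out[i] = a[i] + b[i];
}

// SIMD version: four floats per iteration with SSE, then a scalar tail.
void array_add_simd(const float* a, const float* b, float* out, std::size_t n) {
    std::size_t i = 0;
#if defined(__SSE__)
    for (; i + 4 <= n; i += 4) {
        __m128 va = _mm_loadu_ps(a + i);  // unaligned load of 4 floats
        __m128 vb = _mm_loadu_ps(b + i);
        _mm_storeu_ps(out + i, _mm_add_ps(va, vb));
    }
#endif
    for (; i < n; ++i) out[i] = a[i] + b[i];  // leftovers (or the whole array without SSE)
}
```

Compilers often auto-vectorize the scalar loop at higher optimization levels, so it is worth checking the generated assembly before hand-writing intrinsics.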

Mutex: Takes approximately 175,000 ns. Atomic: Takes approximately 75,000 ns, demonstrating better performance compared to using mutex.
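A sketch of the two variants being compared: several threads increment one shared counter, either under a `std::mutex` or with a single `std::atomic` `fetch_add`. Thread and iteration counts are parameters of this illustration; timing (for example with `std::chrono::steady_clock`) is left out, and results will vary by machine.

```cpp
#include <atomic>
#include <cstdint>
#include <mutex>
#include <thread>
#include <vector>

// Each of `threads` threads adds 1 to a shared counter `iters` times.
std::int64_t count_with_mutex(int threads, int iters) {
    std::int64_t counter = 0;
    std::mutex m;
    std::vector<std::thread> pool;
    for (int t = 0; t < threads; ++t)
        pool.emplace_back([&] {
            for (int i = 0; i < iters; ++i) {
                std::lock_guard<std::mutex> lock(m);  // may block when contended
                ++counter;
            }
        });
    for (auto& th : pool) th.join();
    return counter;
}

std::int64_t count_with_atomic(int threads, int iters) {
    std::atomic<std::int64_t> counter{0};
    std::vector<std::thread> pool;
    for (int t = 0; t < threads; ++t)
        pool.emplace_back([&] {
            for (int i = 0; i < iters; ++i)
                counter.fetch_add(1, std::memory_order_relaxed);  // one atomic read-modify-write, no lock
        });
    for (auto& th : pool) th.join();  // join() makes all increments visible here
    return counter.load();
}
```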

kernel bypass: Kernel bypass mitigates the latency added by the kernel's networking stack by facilitating direct communication between user applications and the network interface card (NIC).

Speed Improvement by Optimisation Technique

| Technique | Speed Improvement (%) |
|---|---|
| Cache Warming | 90.00 |
| Constexpr | 90.88 |
| Loop unrolling | 72.00 |
| Lock-Free Programming | 63.00 |
| Mixing data types | 52.00 |
| Short-circuiting | 50.00 |
| SIMD Instructions | 49.00 |
| Branch reduction | 36.00 |
| Compile-time dispatch | 26.00 |
| Prefetching | 23.50 |
| Inlining | 20.50 |
| Signed vs unsigned | 12.15 |
| Slowpath removal | 12.00 |

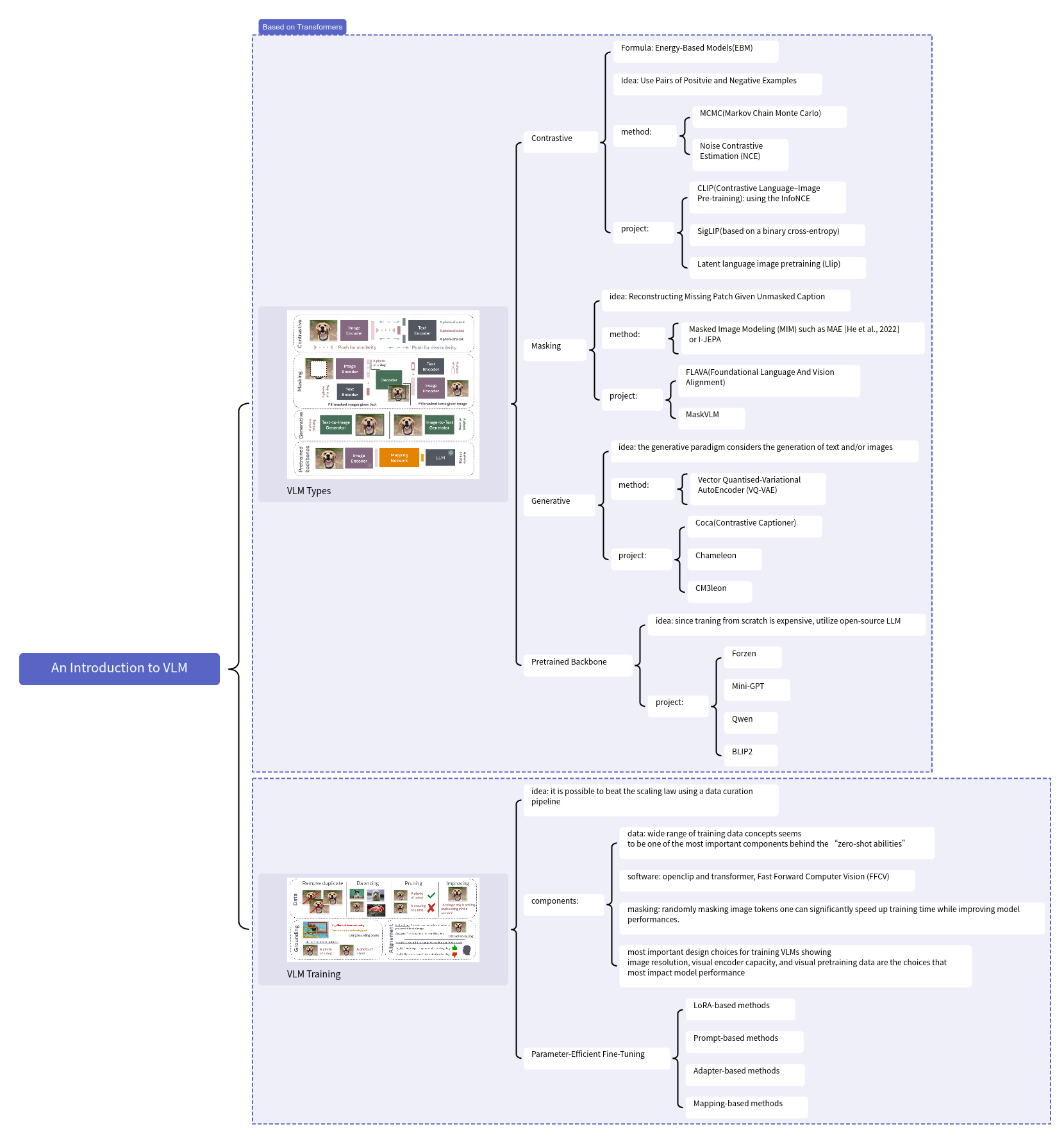

- paper: An Introduction to Vision-Language Modeling

- links: https://arxiv.org/pdf/2405.17247

- takeaway:

- paper: Being a Noob

- links: https://www.paulgraham.com/noob.html

- takeaway:

- It’s not pleasant to feel like a noob. And the word “noob” is certainly not a compliment. And yet today I realized something encouraging about being a noob: the more of a noob you are locally, the less of a noob you are globally.

- Though it feels unpleasant, and people will sometimes ridicule you for it, the more you feel like a noob, the better.

- paper: How to Start Google

- links: https://www.paulgraham.com/google.html

- takeaway:

- The trick is to start your own company. So it’s not a trick for avoiding work, because if you start your own company you’ll work harder than you would if you had an ordinary job. But you will avoid many of the annoying things that come with a job, including a boss telling you what to do.

- All you can know when you start working on a startup is that it seems worth pursuing. You can’t know whether it will turn into a company worth billions or one that goes out of business. So when I say I’m going to tell you how to start Google, I mean I’m going to tell you how to get to the point where you can start a company that has as much chance of being Google as Google had of being Google.

- You need to be good at some kind of technology, you need an idea for what you’re going to build, and you need cofounders to start the company with.

- Just work on whatever interests you the most. You’ll work much harder on something you’re interested in than something you’re doing because you think you’re supposed to.

- Those of you who are taking computer science classes in school may at this point be thinking, ok, we’ve got this sorted. We’re already being taught all about programming. But sorry, this is not enough. You have to be working on your own projects, not just learning stuff in classes. You can do well in computer science classes without ever really learning to program. In fact you can graduate with a degree in computer science from a top university and still not be any good at programming. That’s why tech companies all make you take a coding test before they’ll hire you, regardless of where you went to university or how well you did there. They know grades and exam results prove nothing.

Actually it's easy to get startup ideas once you're good at technology. Once you're good at some technology, when you look at the world you see dotted outlines around the things that are missing. You start to be able to see both the things that are missing from the technology itself, and all the broken things that could be fixed using it, and each one of these is a potential startup.

- So the list of what you need to do to get from here to starting a startup is quite short. You need to get good at technology, and the way to do that is to work on your own projects. And you need to do as well in school as you can, so you can get into a good university, because that’s where the cofounders and the ideas are. That’s it, just two things, build stuff and do well in school.

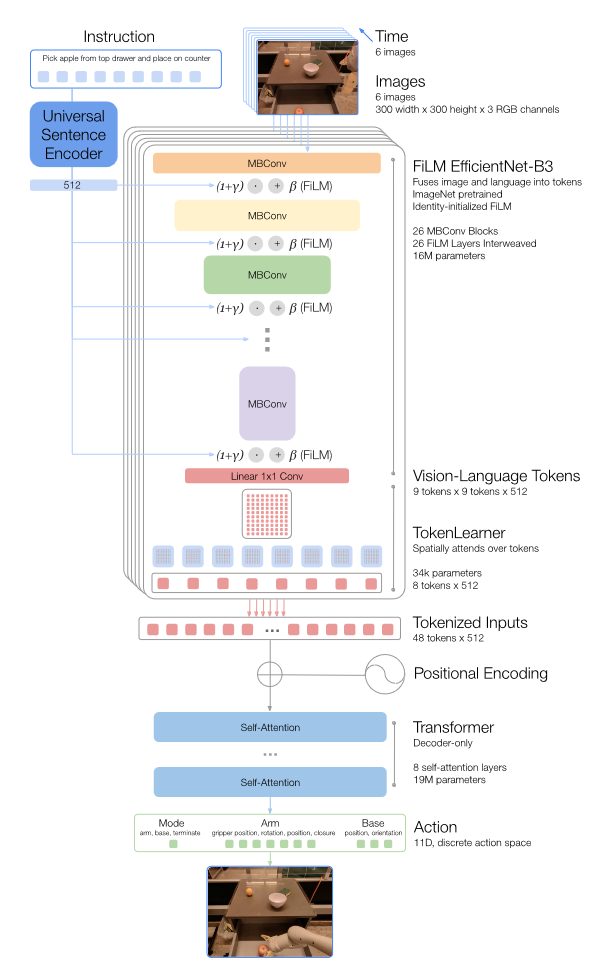

- paper: RT-1: Robotics Transformer for Real-World Control at Scale

- links: https://robotics-transformer1.github.io/assets/rt1.pdf

- takeaway:

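- first-pass: Q: What is this paper about? A: It proposes Robotics Transformer (RT-1), a Transformer-based model that maps camera images and a natural-language instruction to discretized robot actions, aiming at general-purpose, real-world robot control.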

Architecture:

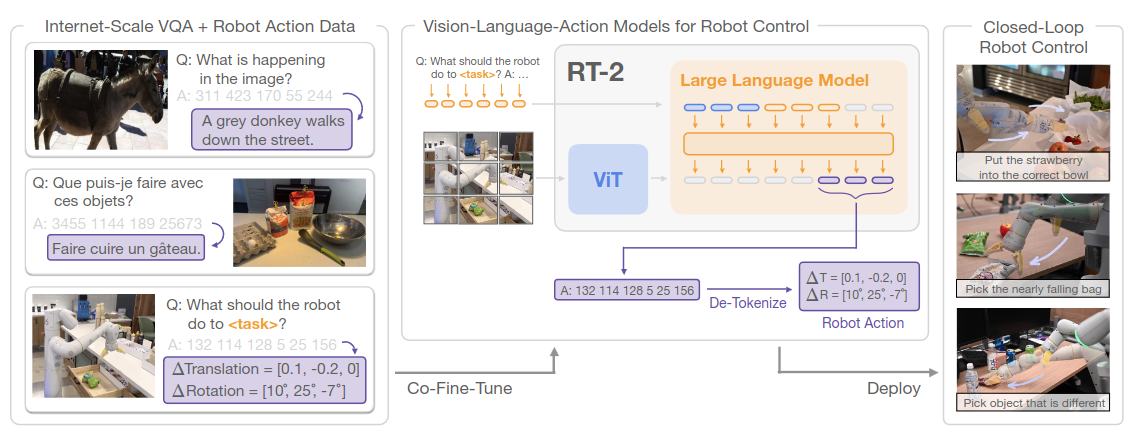

- paper: RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control

- links: https://arxiv.org/pdf/2307.15818

- takeaway:

- first-pass: Q: What is this paper about? A: Key method: tokenizing the actions into text tokens and creating “multimodal sentences” (Driess et al., 2023) that “respond” to robotic instructions paired with camera observations by producing the corresponding actions.
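RT-2, following RT-1, discretizes each continuous action dimension into 256 uniform bins, so an action becomes a short string of integers that a language model can emit as text. A minimal C++17 sketch of that discretization, assuming the per-dimension bounds `lo`/`hi` are known; how the integers map onto a particular model's vocabulary is not reproduced, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

constexpr int kNumBins = 256;  // per-dimension bin count

// Map a continuous value in [lo, hi] to a bin index in [0, kNumBins - 1].
int to_bin(double x, double lo, double hi) {
    const double clamped = std::clamp(x, lo, hi);  // out-of-range actions are clipped
    const int bin = static_cast<int>((clamped - lo) / (hi - lo) * kNumBins);
    return std::min(bin, kNumBins - 1);  // x == hi would otherwise land in bin 256
}

// Inverse map for decoding the model's output: return the bin centre.
double from_bin(int bin, double lo, double hi) {
    return lo + (bin + 0.5) * (hi - lo) / kNumBins;
}

// Render one action vector as space-separated bin indices, i.e. the
// "text" the model is trained to produce in response to an instruction.
std::string action_to_text(const std::vector<double>& action,
                           const std::vector<double>& lo,
                           const std::vector<double>& hi) {
    std::ostringstream out;
    for (std::size_t d = 0; d < action.size(); ++d) {
        if (d > 0) out << ' ';
        out << to_bin(action[d], lo[d], hi[d]);
    }
    return out.str();
}
```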

Architecture:

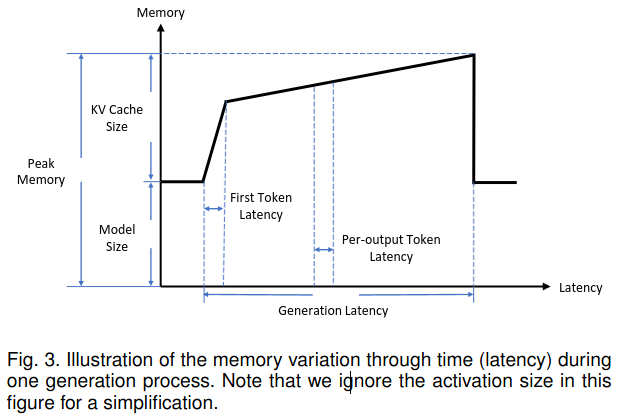

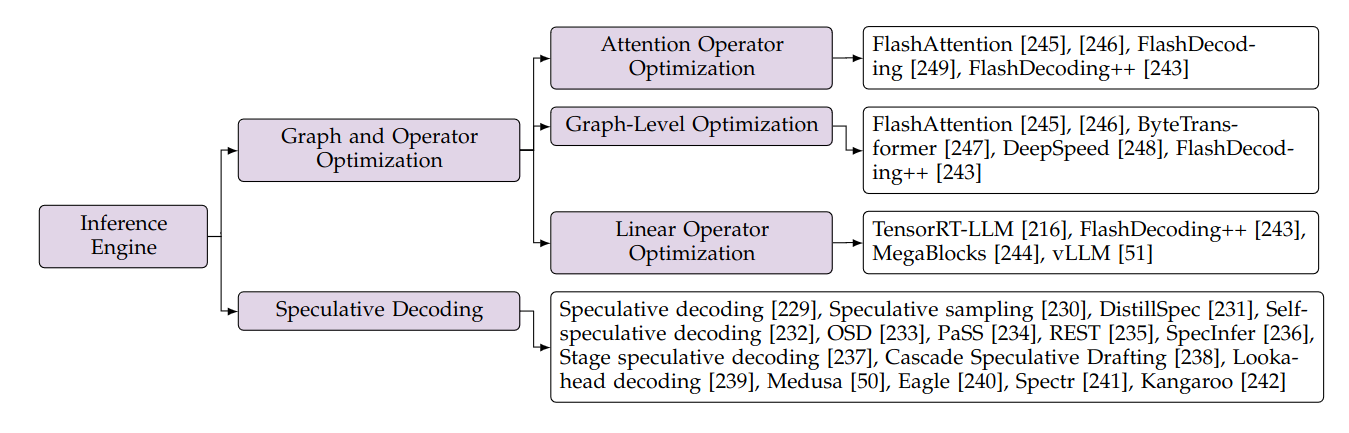

- paper: A Survey on Efficient Inference for Large Language Models

- links: https://arxiv.org/pdf/2404.14294

- takeaway:

Based on the above methods and techniques, the inference process of LLMs can be divided into two stages:

- Prefilling Stage: The LLM calculates and stores the KV cache of the initial input tokens, and generates the first output token.

- Decoding Stage: The LLM generates the output tokens one by one with the KV cache, and then updates it with the key (K) and value (V) pairs of the newly generated token.
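A toy C++ sketch of the two stages with a single attention head, under a strongly simplifying assumption: a token's key, value and query are its embedding vector itself (identity projections), whereas real models apply learned per-layer, per-head projections. `prefill` fills the KV cache for the whole prompt; `decode_step` computes K/V only for the new token, appends them and attends over everything cached. All names are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Vec = std::vector<float>;

// Per-sequence cache of key/value vectors, one entry per processed token.
struct KVCache {
    std::vector<Vec> keys;
    std::vector<Vec> values;

    void append(const Vec& k, const Vec& v) {
        keys.push_back(k);
        values.push_back(v);
    }
    std::size_t size() const { return keys.size(); }
};

float dot(const Vec& a, const Vec& b) {
    float s = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) s += a[i] * b[i];
    return s;
}

// Scaled dot-product attention of one query over every cached key/value pair.
Vec attend(const Vec& q, const KVCache& cache) {
    const float scale = 1.0f / std::sqrt(static_cast<float>(q.size()));
    std::vector<float> weights(cache.size());
    float max_score = -std::numeric_limits<float>::infinity();
    for (std::size_t t = 0; t < cache.size(); ++t) {
        weights[t] = dot(q, cache.keys[t]) * scale;
        if (weights[t] > max_score) max_score = weights[t];
    }
    float denom = 0.0f;
    for (float& w : weights) {  // numerically stable softmax
        w = std::exp(w - max_score);
        denom += w;
    }
    Vec out(q.size(), 0.0f);
    for (std::size_t t = 0; t < cache.size(); ++t)
        for (std::size_t i = 0; i < out.size(); ++i)
            out[i] += weights[t] / denom * cache.values[t][i];
    return out;
}

// Prefilling stage: compute and store K/V for every prompt token (assumed non-empty),
// then attend from the last prompt position; in a real model this output feeds the
// layers that produce the first generated token.
Vec prefill(const std::vector<Vec>& prompt, KVCache& cache) {
    for (const Vec& x : prompt) cache.append(x, x);  // identity K/V projections (toy assumption)
    return attend(prompt.back(), cache);
}

// Decoding stage: only the newest token's K/V pair is computed and appended;
// all earlier pairs are reused from the cache rather than recomputed.
Vec decode_step(const Vec& x, KVCache& cache) {
    cache.append(x, x);
    return attend(x, cache);
}
```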

- paper: Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems

- links: https://arxiv.org/pdf/2312.15234

- takeaway:

- first-pass:

Architecture:


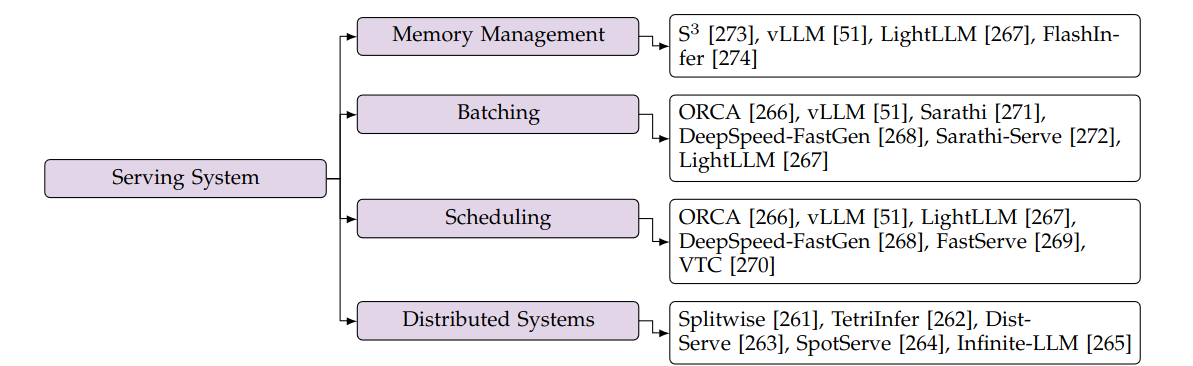

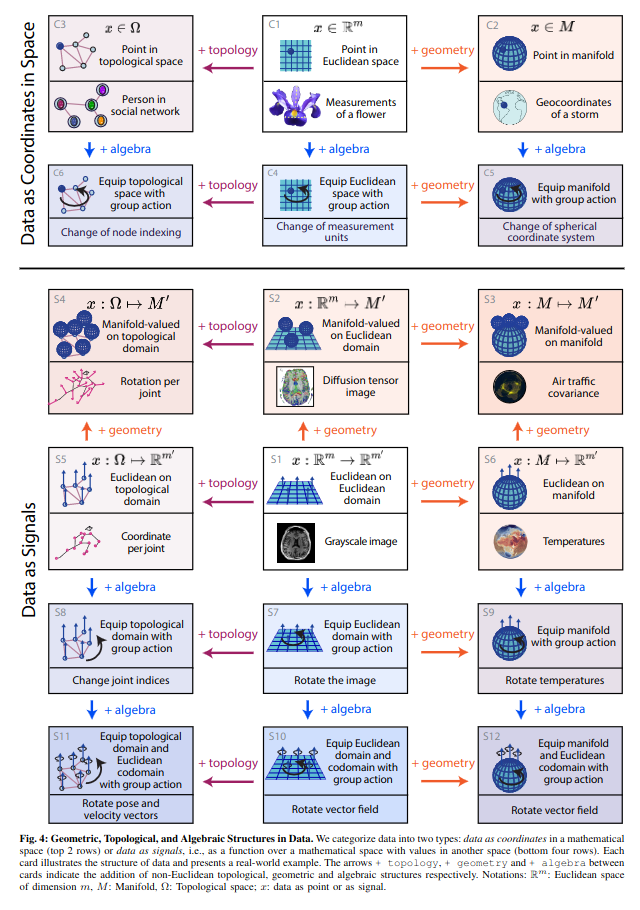

- paper: Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structures

- links: https://arxiv.org/pdf/2407.09468

- takeaway:

- As the availability of richly structured, non-Euclidean data grows across application domains, there is an increasing need for machine learning methods that can fully leverage the underlying geometry, topology, and symmetries to extract insights. Driven by this need, a new paradigm of non-Euclidean machine learning is emerging that generalizes classical techniques to curved manifolds, topological spaces, and group-structured data. This paradigm shift echoes the non-Euclidean revolution in mathematics in the 19th century, which radically expanded our notion of geometry and catalyzed significant advancements across the natural sciences.

> graph machine learning

Architecture:

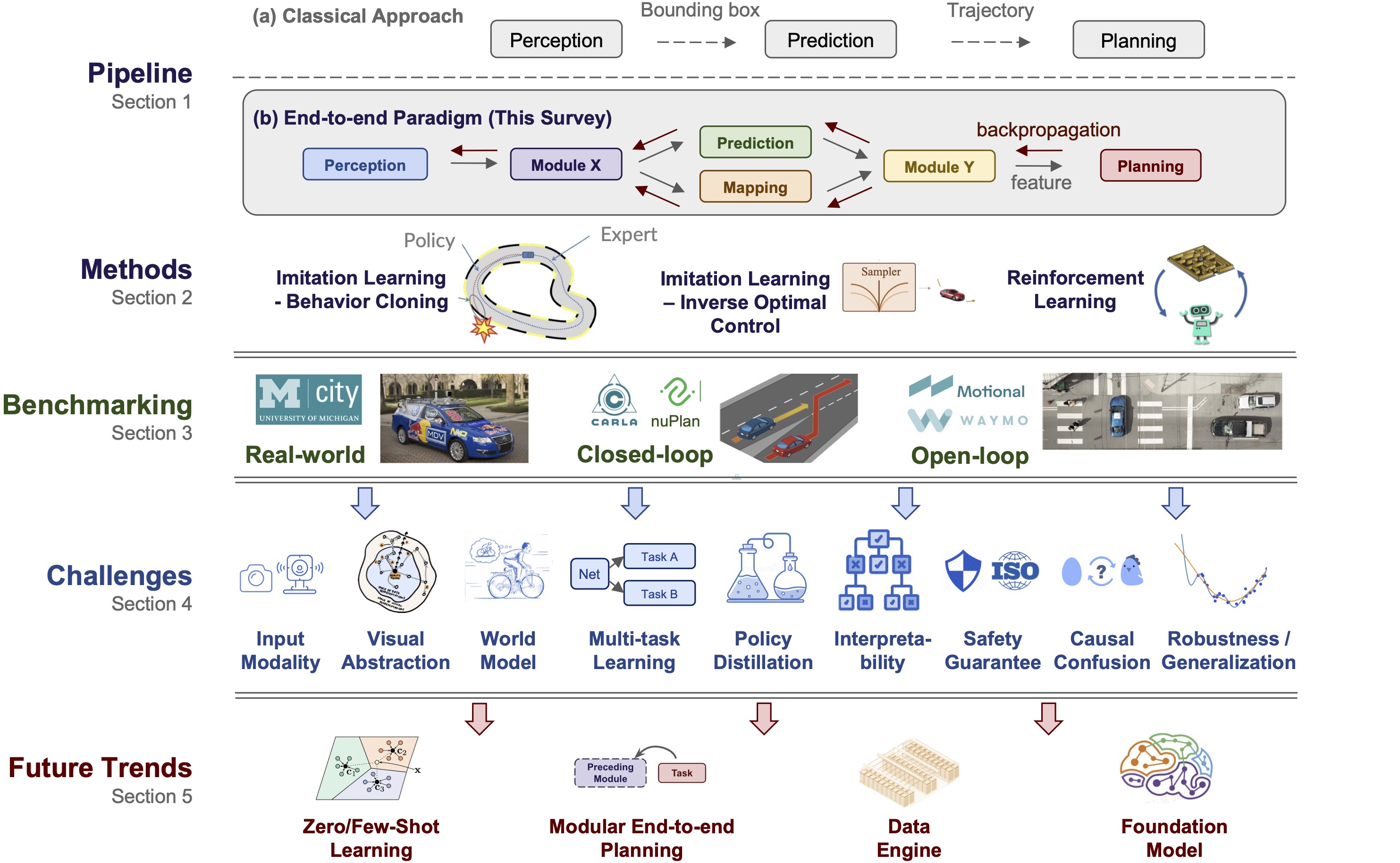

- paper: End-To-End Planning of Autonomous Driving in Industry and Academia: 2022-2023

- links:

- takeaway:

- Just a quick scan of this paper.

For learning more about end-to-end autonomous driving: https://github.com/OpenDriveLab/End-to-end-Autonomous-Driving

Architecture:

- paper: The Right Kind of Stubborn