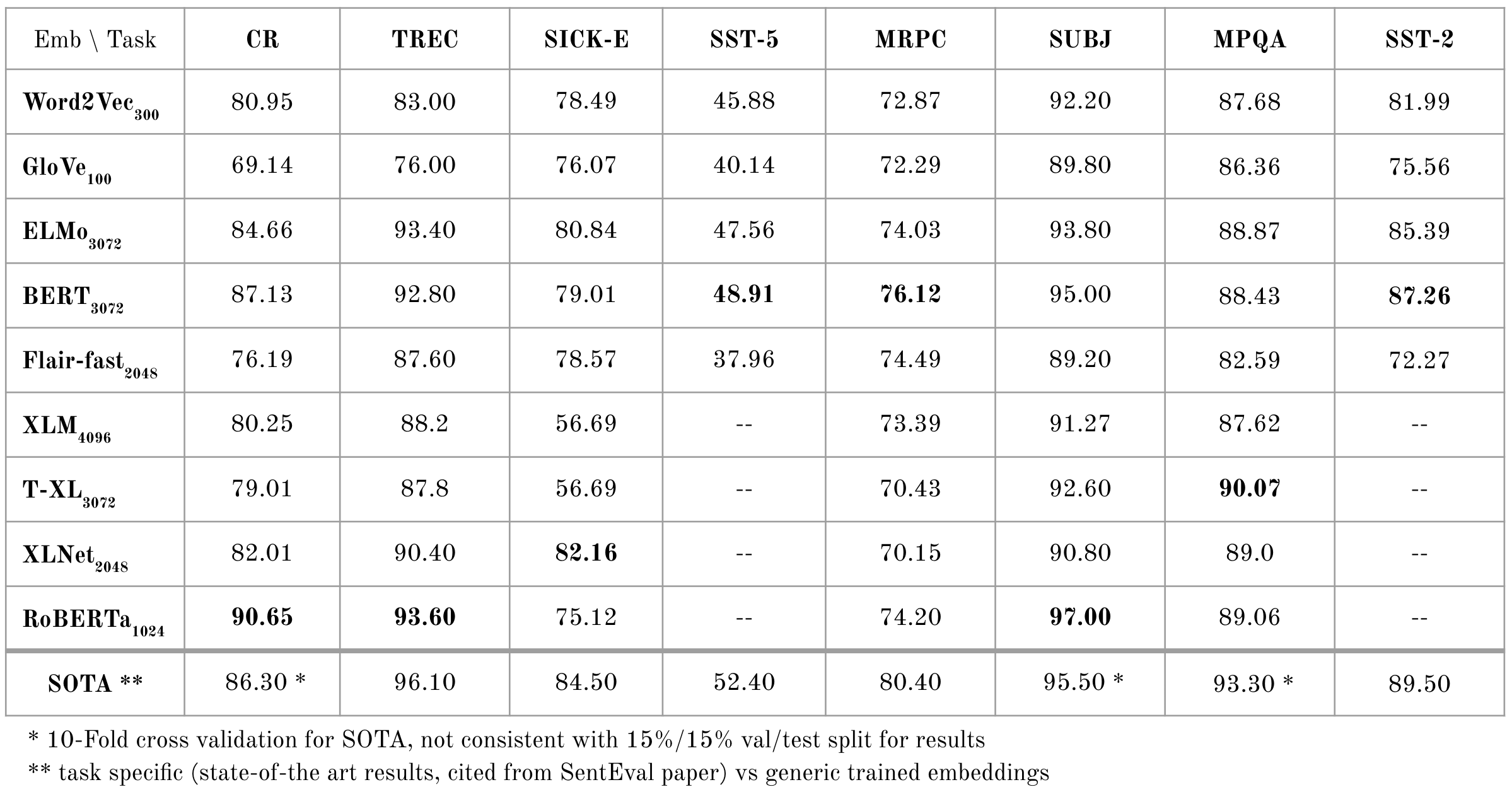

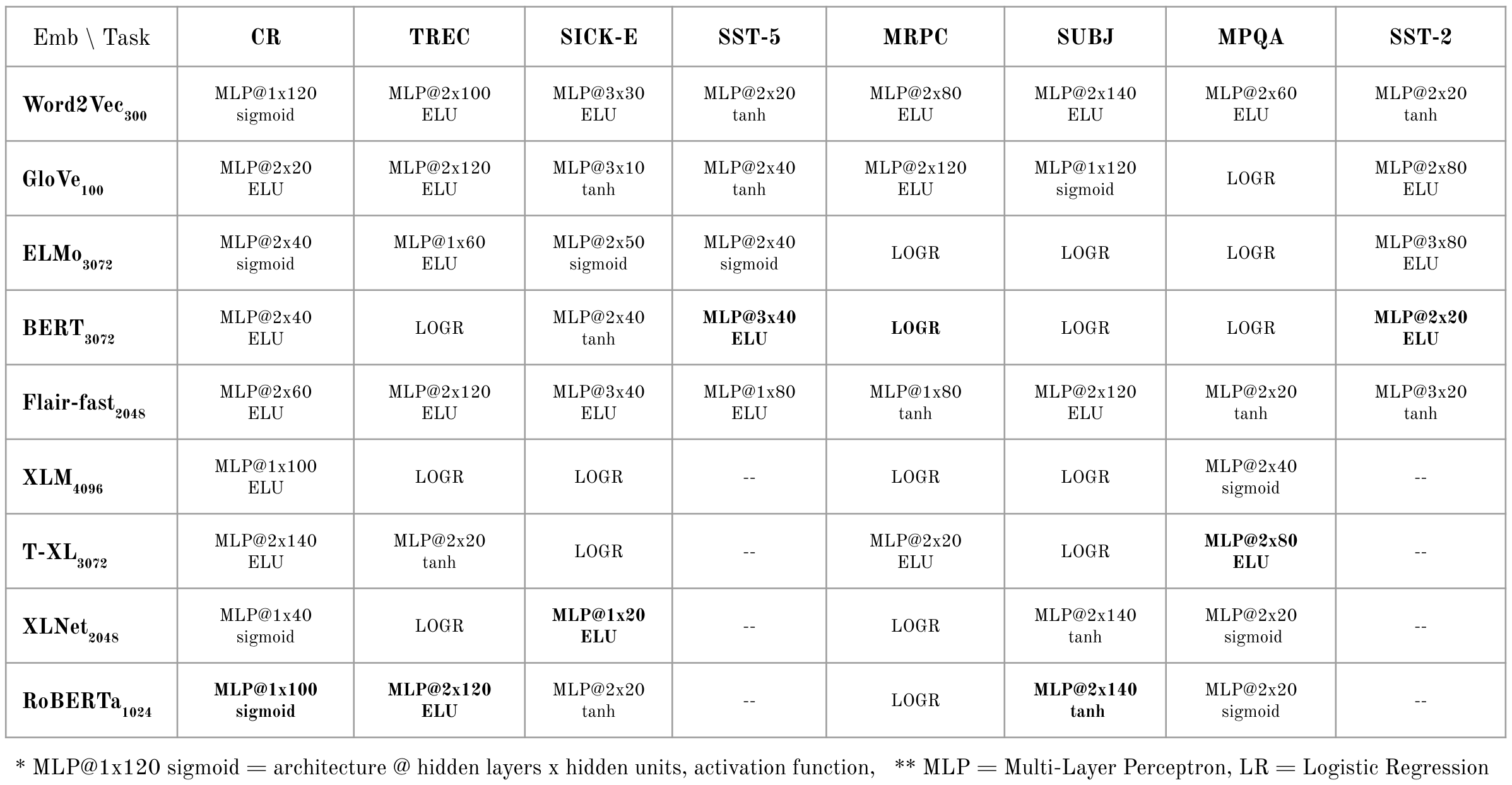

Leveraging PyTorch and the open-source libraries Flair and SentEval, we perform a fair quantitative comparison of natural language processing (NLP) embeddings, from classical, non-contextual embeddings such as Word2Vec and GloVe to recent state-of-the-art transformer-based embeddings such as BERT and RoBERTa. We benchmark them on downstream tasks by fine-tuning a top layer added to each embedding, while allowing some architectural flexibility for each specific embedding. Using Sequential Bayesian Optimization, we tune the hyperparameters of our models to optimize performance. We also implement an extension of the hyperparameter optimization library Hyperopt that corrects inconsistencies in sampling from log-uniform distributions in base 2 and base 10, which we use for the batch size and learning rate during training. Our results show transformer-based architectures (BERT, RoBERTa) to be the leading models on all the downstream NLP tasks used.
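
A note on the Hyperopt sampling issue: `hp.loguniform(label, low, high)` draws `exp(uniform(low, high))`, so its bounds are natural logarithms, while batch sizes and learning rates are usually expressed as powers of 2 and 10. The sketch below is not the project's Hyperopt extension. It is a minimal standard-library illustration, assuming this is the kind of inconsistency the extension addresses, of how base-2 and base-10 exponent ranges map onto natural-log bounds. The helper names (`natural_log_bounds`, `sample_log_uniform`, `sample_power_of_two`) are hypothetical.

```python
import math
import random

# Hypothetical helpers for illustration; not part of Hyperopt or of this project.


def natural_log_bounds(base: float, low_exp: float, high_exp: float) -> tuple[float, float]:
    """Convert exponent bounds in `base` into the natural-log bounds that
    hp.loguniform expects, using base**e == exp(e * ln(base))."""
    ln_base = math.log(base)
    return low_exp * ln_base, high_exp * ln_base


def sample_log_uniform(rng: random.Random, base: float, low_exp: float, high_exp: float) -> float:
    """Return base**u with u ~ Uniform(low_exp, high_exp).

    This is the same log-uniform distribution as exp(Uniform(*natural_log_bounds(...))),
    i.e. what hp.loguniform draws when given the converted bounds."""
    return base ** rng.uniform(low_exp, high_exp)


def sample_power_of_two(rng: random.Random, low_exp: int, high_exp: int) -> int:
    """Return 2**k with k drawn uniformly from the integers low_exp..high_exp (inclusive)."""
    return 2 ** rng.randint(low_exp, high_exp)


rng = random.Random(0)
lr_low, lr_high = natural_log_bounds(10, -5, -1)  # learning rate range [1e-5, 1e-1]
lrs = sorted(sample_log_uniform(rng, 10, -5, -1) for _ in range(1000))
batch_sizes = [sample_power_of_two(rng, 4, 8) for _ in range(1000)]  # 16 .. 256

print(f"natural-log bounds: ({lr_low:.3f}, {lr_high:.3f})")
# Expected around 1e-3; a plain uniform draw on [1e-5, 1e-1] would give ~0.05.
print(f"median learning rate: {lrs[len(lrs) // 2]:.1e}")
print(sorted(set(batch_sizes)))
```

With Hyperopt installed, the converted bounds could be passed as `hp.loguniform("lr", *natural_log_bounds(10, -5, -1))`. For batch sizes, `hp.choice("batch_size", [16, 32, 64, 128, 256])` keeps every power of two equally likely. By contrast, rounding an exponent drawn with `hp.quniform("log2_bs", 4, 8, 1)` gives the two end exponents only half the weight of the inner ones.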

The project includes a report with a detailed description of the embeddings, empirical tests and results, as well as a presentation split into two parts. Part 1 is a general overview of NLP embeddings: how to represent textual input for neural networks. Part 2 reports the empirical results of the quantitative embedding comparison, conducted with PyTorch and the libraries SentEval, Flair and Hyperopt, on some of the most common NLP tasks (e.g. sentiment analysis, question answering).
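
The benchmark design described above trains a task-specific top layer on each embedding. The sketch below illustrates only the core idea. It uses the standard library to train a logistic-regression head on frozen, made-up embedding vectors for a binary task such as sentiment analysis. The project itself does this in PyTorch with more flexible architectures, and the function names and data here are hypothetical.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_head(features, labels, epochs=200, lr=0.5):
    """Fit p(y=1 | x) = sigmoid(w.x + b) by full-batch gradient descent on the
    binary cross-entropy loss. The input vectors are never updated (frozen
    embeddings); only the head's weights w and bias b are learned."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y  # dLoss/dz
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x) -> int:
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


# Hypothetical 4-d "sentence embeddings": positive and negative examples
# scattered around +1 and -1 in every dimension.
rng = random.Random(42)
labels = [i % 2 for i in range(200)]
features = [[(1.0 if y else -1.0) + rng.gauss(0.0, 0.5) for _ in range(4)] for y in labels]

w, b = train_logistic_head(features[:150], labels[:150])
accuracy = sum(predict(w, b, x) == y for x, y in zip(features[150:], labels[150:])) / 50
print(f"held-out accuracy: {accuracy:.2f}")
```

Keeping the embeddings fixed and swapping only the input vectors is what makes such a comparison fair: every embedding is scored by the same kind of head on the same split.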

Embeddings covered:

- Word2Vec (2013)

- GloVe (2014)

- Character embeddings (2015)

- CoVe (2017)

- ELMo (2018)

- BERT (2018)

- XLM (2019)

- XLNet (2019)

- RoBERTa (2019)
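
The main split in this list is between static embeddings (Word2Vec, GloVe), which assign one vector per word, and contextualized ones (CoVe, ELMo, BERT and later models), whose vectors depend on the surrounding sentence. The toy sketch below shows that difference with hypothetical 2-d vectors. Its window-averaging "encoder" is only a stand-in. It is not how ELMo (bidirectional LSTMs) or BERT (Transformer self-attention) compute their representations.

```python
# Hypothetical 2-d static vectors; real Word2Vec/GloVe tables have hundreds of dimensions.
STATIC = {
    "the": [0.1, 0.1],
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank": [0.5, 0.5],
}


def static_embed(tokens):
    """Word2Vec/GloVe style: each token maps to the same vector whatever its context."""
    return [STATIC[t] for t in tokens]


def toy_contextual_embed(tokens, window=1):
    """Toy contextual encoder: each token's vector is the mean of the static vectors
    in a +/- `window` neighbourhood, so it changes with the surrounding words."""
    vectors = static_embed(tokens)
    out = []
    for i in range(len(tokens)):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        neigh = vectors[lo:hi]
        out.append([sum(v[d] for v in neigh) / len(neigh) for d in range(len(neigh[0]))])
    return out


s1 = ["the", "river", "bank"]
s2 = ["the", "money", "bank"]
print(static_embed(s1)[2] == static_embed(s2)[2])  # True: "bank" gets one vector in both
print(toy_contextual_embed(s1)[2])  # [0.75, 0.25]
print(toy_contextual_embed(s2)[2])  # [0.25, 0.75]
```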

The following animations visualize the differences between the sequence-to-sequence (seq2seq) architectures used by specific embeddings:

In the Transformer animations, each element that appears represents a key/value, and the most prominent element represents the query.
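
Assuming the animations depict standard scaled dot-product attention (as in the original Transformer), the query is compared with every key, the scores are normalized with a softmax, and the output is the weighted sum of the values. A minimal single-query sketch in plain Python, with made-up vectors:

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query:
    weights_i = softmax_i(q . k_i / sqrt(d_k)), output = sum_i weights_i * v_i."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    dim_v = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]
    return weights, output


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [2.0, 0.0]
weights, output = attend(query, keys, values)
print([round(w, 3) for w in weights])  # keys 0 and 2 match the query equally well
print([round(o, 3) for o in output])
```

Stacking all queries into a matrix gives the familiar form softmax(QK^T / sqrt(d_k)) V.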

The code runs as an extension of the following libraries:

- SentEval

- Flair

- Hyperopt

Install the dependencies.

```bash
pip install flair segtok bpemb deprecated pytorch_transformers allennlp
```

Run the bash script to get the data for downstream tasks.

```bash
data/downstream/get_transfer_data.bash
```

Launch the Jupyter notebook `Embeddings_benchmark.ipynb` for training.